Tested with PyTorch 1.12.1, CUDA 11.6, and PyTorch3D 0.7.1.
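Once the environment below is set up, a quick check along these lines can confirm that it matches the tested versions. This is only a sketch: `collect_versions` and `mismatches` are hypothetical helper names, not part of RoGS, and the check compares version strings only.

```python
# Versions quoted in this README.
EXPECTED = {"torch": "1.12.1", "cuda": "11.6", "pytorch3d": "0.7.1"}


def collect_versions():
    """Read versions from the installed modules; missing modules map to None."""
    found = {"torch": None, "cuda": None, "pytorch3d": None}
    try:
        import torch
        found["torch"] = torch.__version__
        found["cuda"] = torch.version.cuda  # None for CPU-only builds
    except ImportError:
        pass
    try:
        import pytorch3d
        found["pytorch3d"] = pytorch3d.__version__
    except ImportError:
        pass
    return found


def mismatches(found, expected=EXPECTED):
    """Return (name, expected, found) for every entry that differs."""
    problems = []
    for name, want in expected.items():
        have = found.get(name)
        # A local build tag such as "1.12.1+cu116" still counts as a match.
        if have is None or have.split("+")[0] != want:
            problems.append((name, want, have))
    return problems
```

Calling `mismatches(collect_versions())` inside the `rogs` environment returns an empty list when everything lines up. Other versions may well work; they are simply untested here.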

    git clone https://github.com/fzhiheng/RoGS.git

    conda create -n rogs python=3.7 -y
    conda activate rogs
    pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 torchaudio==0.12.1 --extra-index-url https://download.pytorch.org/whl/cu116
    pip install addict PyYAML tqdm scipy pytz plyfile opencv-python pyrotation pyquaternion nuscenes-devkit

- Install PyTorch3D.

- Install the diff-gaussian-rasterization with orthographic camera:

      git clone --recursive https://github.com/fzhiheng/diff-gs-depth-alpha.git && cd diff-gs-depth-alpha
      python setup.py install
      cd ..

- Install the diff-gaussian-rasterization to optimize semantics:

To optimize the semantics with as little performance loss as possible (dynamically allocating memory so that the channel count need not be specified costs some performance), we still use the library above. Only a few changes are needed.

    git clone --recursive https://github.com/fzhiheng/diff-gs-depth-alpha.git diff-gs-label && cd diff-gs-label
    mv diff_gaussian_rasterization diff-gs-label
    # follow the instructions below to modify the file
    python setup.py install

Set `NUM_CHANNELS` in file `cuda_rasterizer/config.h` to `num_class` (7 for nuScenes and 5 for KITTI) and change all `diff_gaussian_rasterization` in `setup.py` to `diff-gs-label`. On the KITTI dataset, we changed the name of the library to `diff-gs-label2`. In practice, you can set `NUM_CHANNELS` according to the number of categories in your semantic segmentation and change the name of the library accordingly.
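The two manual edits can also be scripted. The sketch below works on file contents as strings and assumes that `config.h` defines the channel count as a single `#define NUM_CHANNELS <n>` line, as the upstream 3D Gaussian Splatting rasterizer does; check the file in your checkout first. `set_num_channels` and `rename_library` are hypothetical helper names, not part of the library.

```python
import re


def set_num_channels(config_text, num_class):
    """Return config.h text with NUM_CHANNELS set to num_class.

    Assumes exactly one line of the form `#define NUM_CHANNELS <n>`.
    """
    pattern = re.compile(r"^(\s*#define\s+NUM_CHANNELS\s+)\d+", re.MULTILINE)
    new_text, count = pattern.subn(
        lambda m: m.group(1) + str(num_class), config_text
    )
    if count != 1:
        raise ValueError(
            "expected exactly one NUM_CHANNELS define, found %d" % count
        )
    return new_text


def rename_library(setup_text, new_name,
                   old_name="diff_gaussian_rasterization"):
    """Return setup.py text with every occurrence of old_name replaced."""
    return setup_text.replace(old_name, new_name)
```

For nuScenes this would be `set_num_channels(text, 7)` together with `rename_library(text, "diff-gs-label")`. Read each file, apply the function, and write the result back before running `python setup.py install`.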

In `configs/local_nusc.yaml` and `configs/local_nusc_mini.yaml`:

- `base_dir`: Put the official nuScenes dataset here, e.g. `{base_dir}/v1.0-trainval`.
- `image_dir`: Put the segmentation results here. We use the segmentation results provided by RoMe. You can download here.
- `road_gt_dir`: Put the ground truth here. To produce the ground truth:

      python -m preprocess.process --nusc_root /dataset/nuScenes/v1.0-mini --seg_root /dataset/nuScenes/nuScenes_clip --save_root /dataset/nuScenes/ -v mini --scene_names scene-0655

In `configs/local_kitti.yaml`:

- `base_dir`: Put the official KITTI odometry dataset here, e.g. `{base_dir}/sequences`.
- `image_dir`: Put the segmentation results here. We use the segmentation results provided by RoMe. You can download here.
- `pose_dir`: Put the KITTI odometry poses here, e.g. `{pose_dir}/dataset/poses`.
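Before training, it can help to confirm that the configured directories exist. The sketch below assumes the config has already been loaded into a dictionary and that the keys listed above sit at its top level, which may not match the actual YAML layout. `missing_dirs` is a hypothetical helper, not part of RoGS.

```python
import os

# Directory keys named in this README for each dataset.
NUSC_KEYS = ("base_dir", "image_dir", "road_gt_dir")
KITTI_KEYS = ("base_dir", "image_dir", "pose_dir")


def missing_dirs(cfg, keys):
    """Return the keys that are unset or do not point at an existing directory."""
    return [
        key for key in keys
        if not cfg.get(key) or not os.path.isdir(str(cfg[key]))
    ]
```

With PyYAML installed (it is in the `pip install` list above), the dictionary can be obtained with `yaml.safe_load` on the opened config file; an empty result from `missing_dirs(cfg, NUSC_KEYS)` means every listed path was found.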

python train.py --config configs/local_nusc_mini.yaml@article{feng2024rogs,

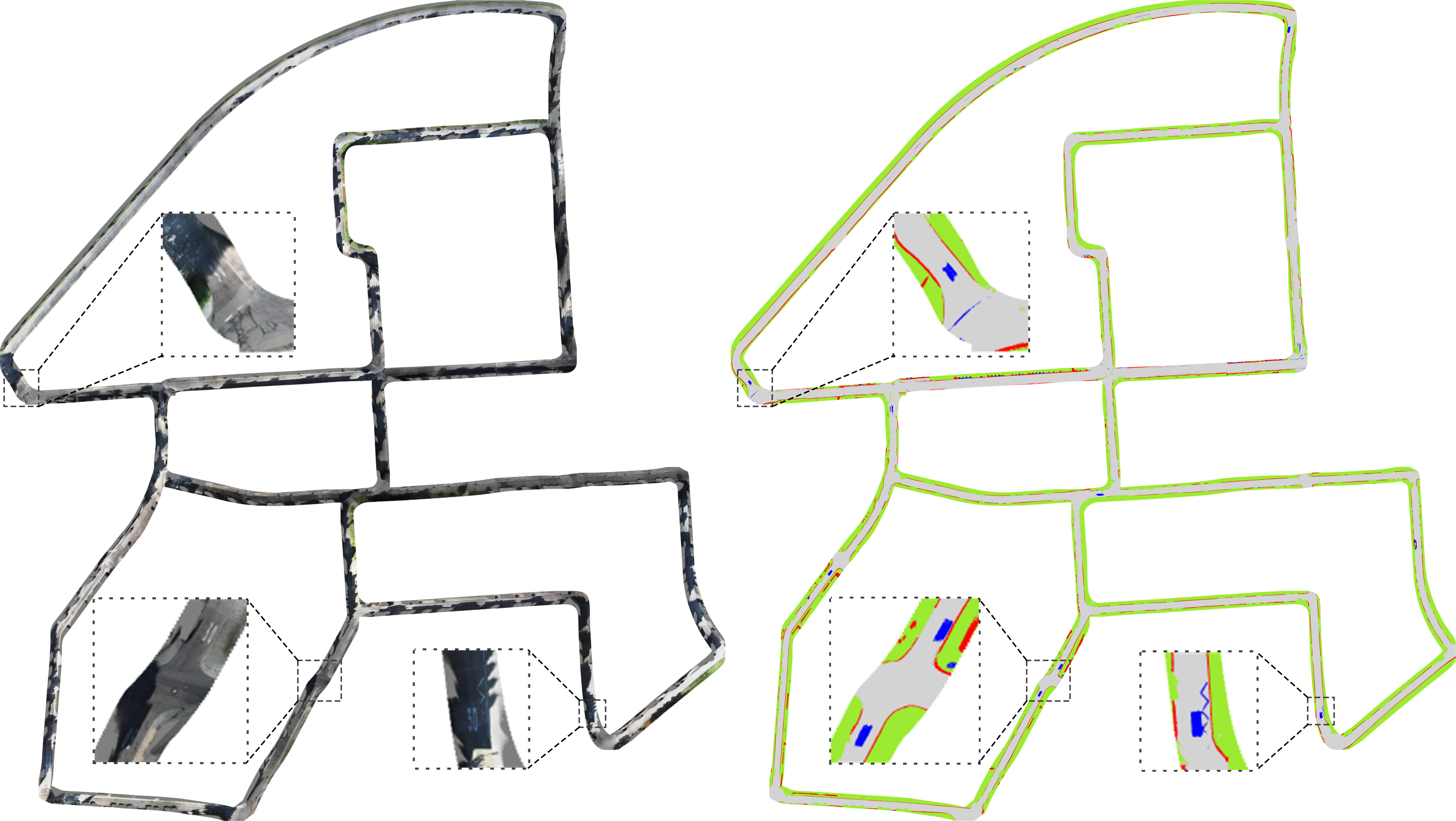

title={RoGS: Large Scale Road Surface Reconstruction based on 2D Gaussian Splatting},

author={Feng, Zhiheng and Wu, Wenhua and Wang, Hesheng},

journal={arXiv preprint arXiv:2405.14342},

year={2024}

}

This project largely references 3D Gaussian Splatting and RoMe. Thanks for their amazing work!