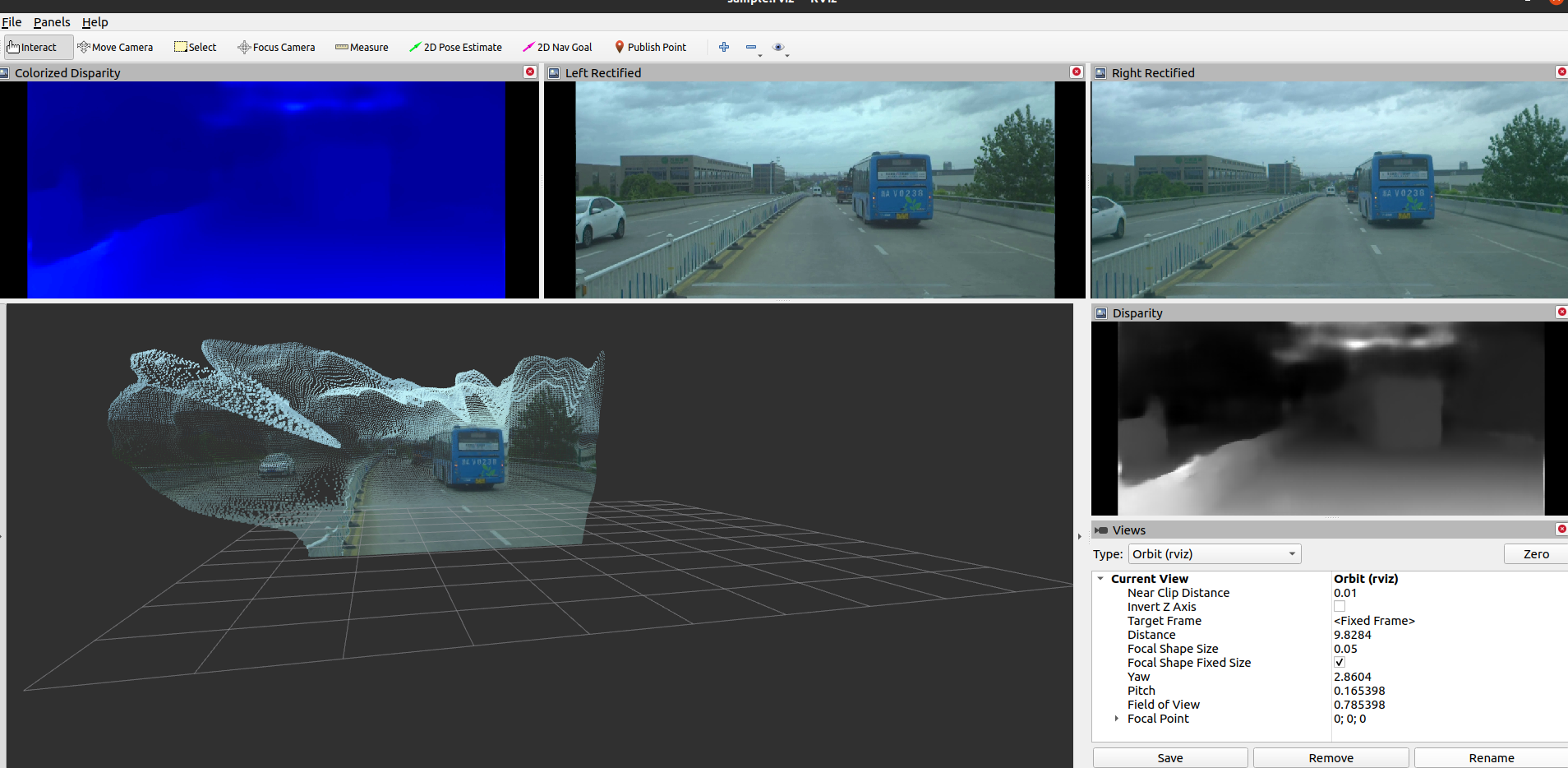

ROS package for stereo matching and point cloud projection using AANet

- Create ROS nodes that compute a disparity map by stereo matching with AANet

- Create a pseudo-lidar point cloud from the disparity map and camera info

- Create the stereo_matching conda environment

conda env create --file environment.yml

- Fetch AANet

git submodule update --init --recursive

- Activate the conda environment and build the deformable convolution for AANet

conda activate stereo_matching

cd ./scripts/aanet/nets/deform_conv && bash build.sh

- python3: this package (like the original AANet implementation) uses Python 3, whereas ROS1 has traditionally targeted Python 2. One way to avoid conflicts between ROS1 and Python 3 is to run roslaunch from this directory with the stereo_matching conda environment activated.

It is better to build with catkin or colcon. Before building with the ROS build tool, configure the current ROS workspace to use the Python interpreter of the stereo_matching conda environment with:

catkin config -DPYTHON_EXECUTABLE=`which python` -DPYTHON_INCLUDE_DIR=`python -c "from __future__ import print_function; from distutils.sysconfig import get_python_inc; print(get_python_inc())"` -DPYTHON_LIBRARY=`python -c "from __future__ import print_function; import distutils.sysconfig as sysconfig; print(sysconfig.get_config_var('LIBDIR') + '/' + sysconfig.get_config_var('LDLIBRARY'))"`

catkin build
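Before running `catkin config`, it can help to see what the three command substitutions above resolve to in the active environment. The following is a minimal sketch, not part of this package: it prints equivalent values using the standard `sysconfig` module (swapped in for `distutils`, which is no longer shipped from Python 3.12 onward). The helper name `python_build_paths` is hypothetical.

```python
import os
import sys
import sysconfig


def python_build_paths():
    """Return the interpreter, include dir and library path of the running Python."""
    libdir = sysconfig.get_config_var("LIBDIR")
    ldlibrary = sysconfig.get_config_var("LDLIBRARY")
    # Either variable may be missing on some platforms; report None in that case.
    library = os.path.join(libdir, ldlibrary) if libdir and ldlibrary else None
    return {
        "PYTHON_EXECUTABLE": sys.executable,
        "PYTHON_INCLUDE_DIR": sysconfig.get_paths()["include"],
        "PYTHON_LIBRARY": library,
    }


if __name__ == "__main__":
    # CONDA_DEFAULT_ENV is set by `conda activate`; stereo_matching is expected here.
    print("active conda env:", os.environ.get("CONDA_DEFAULT_ENV"))
    for name, value in python_build_paths().items():
        print(f"-D{name}={value}")
```

If the printed interpreter does not live inside the stereo_matching environment, the environment is probably not activated in the current shell.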

- A sample rosbag of stereo images, created from the DrivingStereo dataset with this tool, is available HERE. Download it and extract it to ./data/output.bag

- Download the AANet+ KITTI 2015 model from MODEL ZOO and put it at ./data/aanet+_kitti15-2075aea1.pth

- Start the sample roslaunch

roslaunch aanet_stereo_matching_ros aanet_stereo_matching_ros_rviz_sample.launch

Please take a look at the sample roslaunch file to see how the parameters are used. Setting img_scale to a larger value (1.0 means the original image size) yields a better point cloud at the cost of speed. Currently, the perspective projection from disparity to point cloud in Python seems costly. It might be better to publish only the disparity message and do the projection in a C++ package.
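For reference, the projection from disparity to 3D points follows the standard pinhole stereo relations: depth `Z = fx * baseline / d`, then `X = (u - cx) * Z / fx` and `Y = (v - cy) * Z / fy`. The sketch below is an illustration of that math in plain Python, not this package's implementation. The function names, the `min_disparity` threshold, and the simple way the intrinsics are scaled by `img_scale` are all assumptions made for the example.

```python
def disparity_to_points(disparity, fx, fy, cx, cy, baseline, min_disparity=0.1):
    """Project a disparity map (list of rows, in pixels) to 3D points.

    Points are expressed in the left camera frame, in the units of `baseline`.
    """
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d < min_disparity:
                # Zero or tiny disparity means invalid or effectively infinite depth.
                continue
            z = fx * baseline / d
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def scale_intrinsics(fx, fy, cx, cy, img_scale):
    """Intrinsics for an image resized by img_scale (plain pixel scaling, an assumption)."""
    return fx * img_scale, fy * img_scale, cx * img_scale, cy * img_scale


if __name__ == "__main__":
    # Hypothetical 2x2 disparity map and camera parameters.
    disparity = [[0.0, 10.0], [20.0, 40.0]]
    for point in disparity_to_points(disparity, fx=100.0, fy=100.0,
                                     cx=0.5, cy=0.5, baseline=0.5):
        print(point)
```

The per-pixel loop is written for readability and visits every pixel, which is the kind of work that becomes expensive in Python at full image resolution. A vectorized or C++ version would compute the same quantities.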