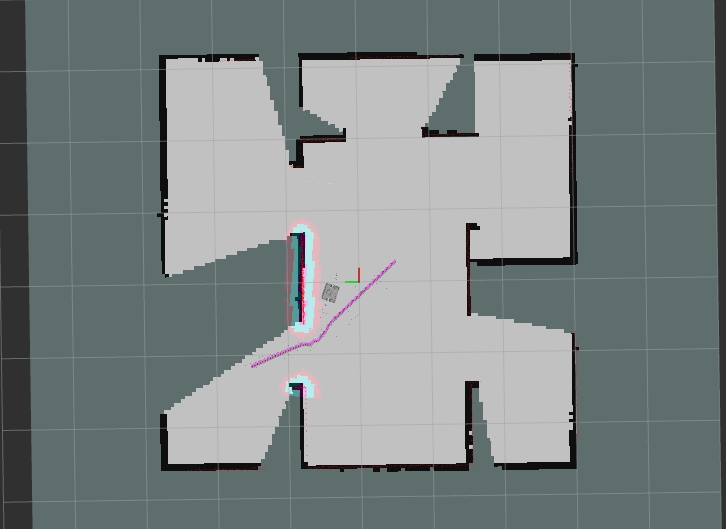

This project was developed for my graduation design, Deep Reinforcement Learning Based Autonomous Exploration in Office Environments. It simulates ROSbot movement in Gazebo and trains a DQN (Deep Q-Network) reinforcement learning model. The DQN model is mainly used to choose a frontier as the next exploration target.
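To make "choose a frontier as the next exploration target" concrete, here is a minimal, ROS-free sketch. It assumes the map follows the `nav_msgs/OccupancyGrid` value convention (-1 unknown, 0 free, 100 occupied) and that the DQN outputs one Q-value per candidate frontier. The function names `find_frontier_cells` and `choose_frontier` are hypothetical and not taken from this repository; the project's actual frontier extraction and action encoding may differ.

```python
import random
from typing import List, Sequence, Tuple

# Assumed nav_msgs/OccupancyGrid convention: -1 = unknown, 0 = free, 100 = occupied.
UNKNOWN, FREE = -1, 0


def find_frontier_cells(grid: Sequence[Sequence[int]]) -> List[Tuple[int, int]]:
    """Return (row, col) of free cells that touch at least one unknown cell (4-connectivity)."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one unknown neighbour is enough
    return frontiers


def choose_frontier(q_values: Sequence[float], epsilon: float, rng: random.Random) -> int:
    """Epsilon-greedy: a random frontier index with probability epsilon, else the argmax of Q."""
    if not q_values:
        raise ValueError("no candidate frontiers")
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda i: q_values[i])


grid = [
    [0, 0, -1],
    [0, 100, -1],
    [0, 0, 0],
]
frontiers = find_frontier_cells(grid)  # [(0, 1), (2, 2)]
# Hypothetical Q-values, one per frontier; epsilon=0.0 makes the choice purely greedy.
target = frontiers[choose_frontier([0.2, 0.9], epsilon=0.0, rng=random.Random(0))]  # (2, 2)
```

In training, epsilon would typically decay over time so the agent explores early and exploits its learned Q-values later.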

The model formulation follows the thesis Deep Reinforcement Learning Supervised Autonomous Exploration in Office Environments, and the source code and framework are mainly based on kuwabaray/ros_rl_for_slam.

- dqn_for_slam: Builds a custom Gym environment using the ROS and Gazebo APIs, and builds a DQN model (Keras-rl2, TensorFlow).

- rosbot_description: Based on rosbot_description, with the camera and infrared sensors turned off to reduce computational load, and with reduced friction.

- simulate_robot_rl: The entry point for training; launches all nodes.

- simulate_map: Map for simulation

- slam_gmapping: Based on slam_gmapping. Adds a service and functions that clear the map and restart gmapping.
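dqn_for_slam wraps ROS and Gazebo in a Gym environment. The skeleton below only illustrates the classic Gym interface that keras-rl2 agents call: `reset()` returns an observation and `step(action)` returns `(observation, reward, done, info)`. Everything else is an assumption for illustration: the class name `FrontierExplorationEnvSketch`, treating each action as the index of a frontier, and the reward (number of newly revealed cells, -1 for an already explored frontier). A real implementation would subclass `gym.Env`, define `action_space` and `observation_space`, and in `step` send the goal to the navigation stack and read the updated gmapping map instead of using hard-coded cell counts.

```python
from typing import Dict, List, Sequence, Tuple


class FrontierExplorationEnvSketch:
    """Hypothetical, ROS-free stand-in that mimics the classic Gym reset/step interface."""

    def __init__(self, cells_per_frontier: Sequence[int] = (5, 3, 8, 2), max_steps: int = 10):
        # Hypothetical: number of unknown cells that visiting each frontier would reveal.
        self.cells_per_frontier = tuple(cells_per_frontier)
        self.max_steps = max_steps
        self.remaining: List[int] = []
        self.steps = 0

    def reset(self) -> List[int]:
        # In the real environment this is where the map would be cleared and gmapping restarted.
        self.remaining = list(self.cells_per_frontier)
        self.steps = 0
        return self._observation()

    def _observation(self) -> List[int]:
        # Placeholder observation; the real one would be derived from the map and robot pose.
        return list(self.remaining)

    def step(self, action: int) -> Tuple[List[int], float, bool, Dict[str, int]]:
        if not 0 <= action < len(self.remaining):
            raise ValueError(f"action {action} is not a valid frontier index")
        revealed = self.remaining[action]
        self.remaining[action] = 0
        self.steps += 1
        # Reward newly revealed area; penalise choosing an already explored frontier.
        reward = float(revealed) if revealed > 0 else -1.0
        done = sum(self.remaining) == 0 or self.steps >= self.max_steps
        return self._observation(), reward, done, {"revealed": revealed}


env = FrontierExplorationEnvSketch((5, 3))
obs = env.reset()                      # [5, 3]
obs, reward, done, info = env.step(0)  # [0, 3], 5.0, False
obs, reward, done, info = env.step(1)  # [0, 0], 3.0, True
```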

Environment

- Ubuntu 20.04

- ROS Noetic

- Miniconda

ROS packages

- geometry2

- slam_gmapping

- robot_localization

- navigation

See requirements.yaml for the complete environment.

Start the training process by running the commands below in order.

FIXME: rl_worker.py and robot_rl_env.py contain many absolute paths; fix them before running.

roslaunch simulation_map maze1_nogui.launch

roslaunch simulate_robot_rl simulate_robot.launch

roslaunch mynav pathplan.launch

python ./dqn_for_slam/dqn_for_slam/rl_worker.py

Trained models are saved to ~/dqn_for_slam/dqn_for_slam/models/
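One way to address the FIXME about absolute paths in rl_worker.py and robot_rl_env.py is to build paths relative to the script's own directory, with an optional environment-variable override. This is a sketch under assumptions: the function `models_dir` and the variable `DQN_SLAM_MODEL_DIR` are hypothetical and not part of the repository. Inside a ROS workspace, `rospkg.RosPack().get_path(...)` is another way to locate a package directory.

```python
import os
from pathlib import Path


def models_dir(base_dir: Path, env_var: str = "DQN_SLAM_MODEL_DIR") -> Path:
    """Return the model directory, creating it if needed.

    Uses the (hypothetical) environment variable if it is set,
    otherwise <base_dir>/models.
    """
    override = os.environ.get(env_var)
    path = Path(override).expanduser() if override else Path(base_dir) / "models"
    path.mkdir(parents=True, exist_ok=True)
    return path


# Hypothetical usage inside rl_worker.py:
# MODEL_DIR = models_dir(Path(__file__).resolve().parent)
```

With rl_worker.py located in dqn_for_slam/dqn_for_slam/, the default resolves to dqn_for_slam/dqn_for_slam/models/ wherever the repository is cloned, matching the location above without hard-coding a home directory.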

mail: nianfeifly@163.com