This workspace provides ROS drivers and calibration tools for the sensors I have tested. It currently covers the following sensor drivers and calibration tools.

Sensors

| Type | Model |
|---|---|
| IMU | Xsens MTi-series |
| LiDAR | Velodyne-16, RS-Ruby(128), Livox-Horizon, Ouster OS0, hesai-Pandar64 |
| Camera | Realsense D435-series, Ladybug3 |

Calibration tools

| Intrinsic Calibration | Extrinsic Calibration |
|---|---|
| IMU | LiDAR<-->IMU |
| Camera | LiDAR<-->Camera |
| | Camera<-->IMU |
| | Camera<-->Camera |
| | LiDAR<-->LiDAR |

- Introduction

- Quick Start

- TO DO

- Reference

- File tree

Install the dependencies and build the workspace:

```bash
sudo apt-get install libdw-dev libpcap-dev
# Build code_utils first (imu_utils depends on it)
catkin_make -DCATKIN_WHITELIST_PACKAGES="code_utils"
# Clear the whitelist and build all packages
catkin_make -DCATKIN_WHITELIST_PACKAGES=""
```

Xsens MTi-series

The default configuration publishes `sensor_msgs/Imu` messages containing orientation, angular_velocity, and linear_acceleration. To read the IMU messages, run:

```bash
roslaunch read_pkg view_imu.launch
```

If you get the error `[ERROR] [1627211303.220970]: Fatal: could not find proper MT device.`, granting access to the serial port may solve the problem:

```bash
sudo chmod 777 /dev/ttyUSB0
```

Configure the IMU:

Xsens IMUs have many configurable settings, such as the baud rate, synchronization settings, and timeout. To list them, run:

```bash
rosrun xsens_driver mtdevice.py -h
```

The most common configuration is choosing which kinds of messages to publish. The following configuration makes the driver publish orientation, linear_acceleration, and angular_velocity, which are essential for robot navigation:

```bash
# oq: orientation quaternion, aa: acceleration, wr: rate of turn
rosrun xsens_driver mtdevice.py --configure="oq,aa,wr"
```
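The `orientation` field of `sensor_msgs/Imu` is a quaternion (x, y, z, w), which is hard to check by eye. A minimal sketch for converting it to roll/pitch/yaw, assuming the common ZYX (yaw-pitch-roll) convention; `quaternion_to_rpy` is a hypothetical helper, not part of xsens_driver:

```python
import math


def quaternion_to_rpy(x, y, z, w):
    """Convert a unit quaternion (x, y, z, w) to (roll, pitch, yaw) in radians, ZYX convention."""
    # Roll: rotation about the x axis
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Pitch: rotation about the y axis; clamp so rounding cannot push asin out of its domain
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    # Yaw: rotation about the z axis
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw
```

For example, `quaternion_to_rpy(0, 0, math.sin(math.pi / 4), math.cos(math.pi / 4))` gives a yaw of about π/2 (90°) with zero roll and pitch.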

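Velodyne-16

- Configure the network:

  Edit Connections --> Edit --> IPv4 Settings (Method: Manual; Addresses: Add --> Address: 192.168.1.10, Netmask: 255.255.255.0, Gateway: 192.168.1.10), modify the Connection name, then Save. Note: the Velodyne dynamically assigns itself an IP in the same subnet as the local IP of the computer it is connected to, so you only need to set a static IP on the local machine.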

- Get the Velodyne XML calibration file from https://github.com/Kitware/VeloView/tree/master/share and convert it to a YAML file (optional).

- Run it:

  ```bash
  roslaunch velodyne_pointcloud VLP16_points.launch
  ```
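If no data arrives, a quick sanity check is whether the sensor's address falls inside the subnet of the static IP configured above (192.168.1.10 with netmask 255.255.255.0). A minimal sketch using Python's standard `ipaddress` module; `same_subnet` is a hypothetical helper and 192.168.1.201 is only an example sensor address:

```python
import ipaddress


def same_subnet(host_ip: str, netmask: str, sensor_ip: str) -> bool:
    """Return True if sensor_ip lies in the subnet defined by host_ip and netmask."""
    # strict=False lets us pass the host address instead of the network address
    network = ipaddress.ip_network(f"{host_ip}/{netmask}", strict=False)
    return ipaddress.ip_address(sensor_ip) in network


print(same_subnet("192.168.1.10", "255.255.255.0", "192.168.1.201"))
```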

RS-Ruby(128)

- Modify the config file in `driver_ws/src/driver_pkg/rslidar_sdk/config/` and set the config file name in `start.launch`. Refer to the Parameter Description (参数说明) for details.

- Run it:

  ```bash
  roslaunch rslidar_sdk start.launch
  ```

Compress the LiDAR data

The RS-Ruby(128) can reach a bandwidth of about 70Mb/s, so some computers drop frames during `rosbag record`. To avoid this, record the compressed point cloud topic instead. Refer to Recording ROS bags & parsing ROS bags offline (录制ROS数据包&离线解析ROS数据包) for details.
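To see why disk throughput matters, here is a rough estimate of the bag size for a given recording rate. The README writes the rates as "Mb/s", which could mean megabytes or megabits per second, so this sketch (with the hypothetical helper `bag_size_gb`) supports both and ignores rosbag's own overhead:

```python
def bag_size_gb(rate_mb_per_s: float, minutes: float, megabits: bool = False) -> float:
    """Estimate bag size in GB (1 GB = 1000 MB) for a constant data rate."""
    # Convert megabits per second to megabytes per second if needed
    megabytes_per_s = rate_mb_per_s / 8 if megabits else rate_mb_per_s
    return megabytes_per_s * minutes * 60 / 1000


print(bag_size_gb(70, 10))                 # 10 minutes at 70 MB/s
print(bag_size_gb(70, 10, megabits=True))  # 10 minutes at 70 Mbit/s
```

Read as megabytes, 10 minutes of uncompressed RS-Ruby(128) data is about 42 GB.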

Then decompress the compressed topic when using the bag file.

```bash
roslaunch rslidar_sdk decoder.launch
```

Ladybug3

Refer to https://github.com/yanliang-wang/ladybug_driver_ws.

Livox-Horizon

Refer to the README for detailed configuration, and to livox_ros_driver for the latest driver.

- Run it:

  ```bash
  roslaunch livox_ros_driver livox_lidar_rviz.launch
  ```

Ouster OS0

Refer to the Chinese guide (中文指南) and the README for details.

a. Get the IP address of your Ouster sensor after connecting it to your computer:

```bash
avahi-browse -lr _roger._tcp
```

b. Driver

```bash
# Set sensor_hostname in view_ouster.launch first
roslaunch read_pkg view_ouster.launch
```

c. Record a dataset

The OS0 (64-ring) can reach a bandwidth of about 33Mb/s when recording /os_cloud_node/points, so record the compressed packet topics instead:

```bash
rosbag record /os_node/imu_packets /os_node/lidar_packets
```

Decompress:

```bash
roslaunch read_pkg view_ouster_decoder.launch
# In another terminal, play back the recorded bag
rosbag play XXX.bag
```

Note: each point in the Ouster point cloud has 9 channels:

x, y, z, intensity, t, reflectivity, ring, ambient, range.
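Many LiDAR pipelines expect Velodyne-style XYZIRT points (x, y, z, intensity, ring, time). A minimal sketch of mapping one Ouster point onto that layout. It assumes `t` is in nanoseconds since the start of the scan and that the target `time` field is in seconds; both units, and the class names `OusterPoint` and `XYZIRT`, are assumptions to check against your driver version:

```python
from dataclasses import dataclass


@dataclass
class OusterPoint:
    x: float
    y: float
    z: float
    intensity: float
    t: int             # assumed: nanoseconds since the start of the scan
    reflectivity: int  # dropped in XYZIRT
    ring: int
    ambient: int       # dropped in XYZIRT
    range: int         # dropped in XYZIRT


@dataclass
class XYZIRT:
    x: float
    y: float
    z: float
    intensity: float
    ring: int
    time: float        # assumed: seconds relative to the start of the scan


def to_xyzirt(p: OusterPoint) -> XYZIRT:
    """Keep the six XYZIRT channels and convert the time offset from ns to s."""
    return XYZIRT(p.x, p.y, p.z, p.intensity, p.ring, p.t / 1e9)
```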

Hesai Pandar64

The default IP of the Hesai LiDAR is 192.168.1.201, so set the local static IP to 192.168.1.XXX to read the sensor data.

```bash
roslaunch hesai_lidar hesai_lidar.launch lidar_type:="Pandar64" frame_id:="Pandar64"
```

Format conversion

Similar to the RS-LiDAR, the points in the Hesai point cloud can be converted to the Velodyne point format:

```bash
rosrun hesai_to_velodyne hesai_to_velodyne XYZIRT XYZIRT
```

Camera intrinsic calibration

Refer to kalibr for installation.

Steps:

- Prepare the calibration board and its config file: download or create custom targets.

- Record the throttled camera topic:

  ```bash
  # Throttle the image topic to 4 Hz, which is enough for calibration
  rosrun topic_tools throttle messages /camera/color/image_raw 4 /camera/color/image_raw_throttle
  # In another terminal, record the throttled topic
  rosbag record /camera/color/image_raw_throttle
  ```

  When collecting the data, keep the calibration board in the camera's FOV and make the camera poses as diverse as possible.

- Calibrate it:

  ```bash
  rosrun kalibr kalibr_calibrate_cameras --bag d435-2022-06-12-11-20-10.bag --topics /camera/color/image_raw_throttle --models pinhole-radtan --target april_6x6.yaml
  ```
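The `--target` file describes the calibration board. For an Aprilgrid, Kalibr's target YAML uses the keys `target_type`, `tagCols`, `tagRows`, `tagSize` (tag edge length in metres) and `tagSpacing` (gap between tags as a fraction of `tagSize`). The sketch below renders such a file and computes the printed grid extent, so a tape-measured board can be checked against the numbers; the helper names and the 0.088 m / 0.3 values are placeholders, so measure your own board:

```python
def aprilgrid_yaml(cols: int, rows: int, tag_size_m: float, tag_spacing: float) -> str:
    """Render a Kalibr-style Aprilgrid target description as YAML text."""
    lines = [
        "target_type: 'aprilgrid'",
        f"tagCols: {cols}",
        f"tagRows: {rows}",
        f"tagSize: {tag_size_m}",      # edge length of one tag [m]
        f"tagSpacing: {tag_spacing}",  # gap between tags divided by tagSize
    ]
    return "\n".join(lines) + "\n"


def grid_extent_m(n_tags: int, tag_size_m: float, tag_spacing: float) -> float:
    """Outer-edge to outer-edge length of n_tags tags along one axis."""
    gap = tag_size_m * tag_spacing
    return n_tags * tag_size_m + (n_tags - 1) * gap


print(aprilgrid_yaml(6, 6, 0.088, 0.3))
print(grid_extent_m(6, 0.088, 0.3))  # about 0.66 m
```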

For the distortion model, use `pinhole-radtan` if you are using a pinhole camera. More details are available at https://github.com/ethz-asl/kalibr/wiki/supported-models#distortion-models.
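`pinhole-radtan` combines a pinhole projection with radial-tangential distortion. A minimal sketch of projecting a point on the normalized image plane to pixels, assuming the coefficient order [k1, k2, p1, p2] of OpenCV's plumb-bob model; check the Kalibr wiki for its exact convention. `project_pinhole_radtan` is a hypothetical helper:

```python
def project_pinhole_radtan(x, y, fx, fy, cx, cy, k1, k2, p1, p2):
    """Project a normalized point (x = X/Z, y = Y/Z) to pixel coordinates (u, v)."""
    r2 = x * x + y * y
    # Radial distortion scales the point by a polynomial in r^2
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    # Tangential (decentering) terms
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    # Pinhole intrinsics map the distorted point to pixels
    return fx * xd + cx, fy * yd + cy
```

With all four coefficients set to zero this reduces to the plain pinhole model.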

The calibration result is saved in `<bag-name>-results-cam.txt`.
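The 4 Hz throttling used when recording keeps the bag small while still giving Kalibr enough distinct views. The idea can be modelled offline on a list of timestamps: keep a message only if at least one period has passed since the last kept one. This is a simplified model, not topic_tools' source; the real node works on message arrival times, and `throttle` is a hypothetical helper:

```python
def throttle(stamps_ms, rate_hz):
    """Keep a timestamp only if at least 1/rate_hz seconds passed since the last kept one."""
    period_ms = 1000.0 / rate_hz
    kept = []
    for t in stamps_ms:
        if not kept or t - kept[-1] >= period_ms:
            kept.append(t)
    return kept


# A 10 Hz stream over one second, throttled to 4 Hz
print(throttle(list(range(0, 1001, 100)), 4))
```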

Refer to [ROS] Camera calibration (【Ros】摄像头标定camera calibration).

IMU intrinsic calibration

Refer to imu_utils for installation.

Steps:

- Keep the IMU stationary and record its data for at least two hours.

- Modify the parameters in the launch file:

  ```xml
  <launch>
    <node pkg="imu_utils" type="imu_an" name="imu_an" output="screen">
      <!-- IMU topic recorded in the bag -->
      <param name="imu_topic" type="string" value="/imu/data"/>
      <param name="imu_name" type="string" value="xsens"/>
      <!-- directory where the results are written -->
      <param name="data_save_path" type="string" value="$(find imu_utils)/data/"/>
      <param name="max_time_min" type="int" value="120"/> <!-- the duration of your bag (Unit: minute) -->
      <param name="max_cluster" type="int" value="100"/>
    </node>
  </launch>
  ```

- Launch the node and play the bag file:

  ```bash
  roslaunch imu_utils xsens.launch
  # In another terminal, play the bag at 200x speed
  rosbag play -r 200 XXX.bag
  ```

- Check the result:

  The calibration result is saved in imu_utils/data.
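imu_utils analyzes the stationary recording with Allan variance: noise terms such as white-noise density and bias instability are read off the log-log Allan deviation curve. To illustrate the idea, here is a minimal non-overlapping Allan variance on one axis; it is a textbook sketch, not imu_utils' implementation, and the helper names are hypothetical:

```python
import math
from statistics import fmean


def allan_variance(samples, m):
    """Non-overlapping Allan variance for clusters of m consecutive samples."""
    n_clusters = len(samples) // m
    if n_clusters < 2:
        raise ValueError("need at least two clusters")
    # Average each cluster, then compare consecutive cluster means
    means = [fmean(samples[i * m:(i + 1) * m]) for i in range(n_clusters)]
    diffs = [(b - a) ** 2 for a, b in zip(means, means[1:])]
    return sum(diffs) / (2 * (n_clusters - 1))


def allan_deviation_curve(samples, rate_hz, cluster_sizes):
    """Return (tau in seconds, Allan deviation) pairs for the given cluster sizes."""
    return [(m / rate_hz, math.sqrt(allan_variance(samples, m))) for m in cluster_sizes]
```

In practice the cluster sizes are spread logarithmically, which is why a long (two-hour) recording is needed to reach large τ.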

LiDAR<-->IMU extrinsic calibration

Refer to lidar_imu_calib for installation.

Steps:

- Record IMU and LiDAR data with rosbag. The poses of the sensor platform should be as diverse as possible, for example following a figure-8 trajectory, so that rotations about all axes are excited.

  ```bash
  rosbag record /imu /lidar_points
  ```

- Configure the launch file:

  - lidar_topic: LiDAR data topic name
  - imu_topic: IMU data topic name
  - bag_file: the `*.bag` file containing the recorded IMU and LiDAR topics

- Start the calibration:

  ```bash
  roslaunch lidar_imu_calib calib_exR_lidar2imu.launch
  ```
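The launch file name (`calib_exR_lidar2imu`) suggests the tool estimates the extrinsic rotation between the LiDAR and the IMU. Applying such a rotation to LiDAR points is a 3×3 matrix product. This sketch assumes the result is given as roll/pitch/yaw in radians (ZYX convention) and maps LiDAR-frame vectors into the IMU frame; check the tool's actual output format and direction before relying on it. Both helpers are hypothetical:

```python
import math


def rpy_to_matrix(roll, pitch, yaw):
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll) as nested lists."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def rotate(R, p):
    """Apply rotation matrix R to the 3D point p."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) for i in range(3))


# A LiDAR x-axis point seen from an IMU rotated 90 degrees about z
print(rotate(rpy_to_matrix(0.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0)))
```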

LiDAR<-->Camera extrinsic calibration

Refer to plycal.

The synchronized camera and LiDAR data that plycal needs can be collected with lidar_camera_tools.

# Modify the configuration in the get_sync_data.launch

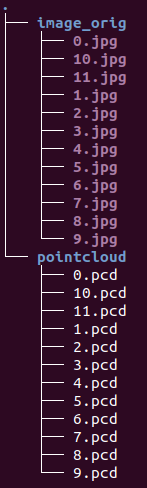

roslaunch lidar_camera_tools get_sync_data.launchPush the space button to save the current synchronized data. The result is as follows.
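The core of synchronized capture is pairing each LiDAR scan with the camera frame closest in time, and rejecting pairs that are too far apart. A minimal sketch of nearest-timestamp matching; this is not necessarily how lidar_camera_tools does it (ROS's `message_filters.ApproximateTimeSynchronizer` is another common approach), and `pair_nearest` and the tolerance are hypothetical:

```python
from bisect import bisect_left


def pair_nearest(lidar_stamps, camera_stamps, max_dt):
    """Pair each LiDAR stamp with the nearest camera stamp within max_dt seconds."""
    cams = sorted(camera_stamps)
    pairs = []
    for t in lidar_stamps:
        i = bisect_left(cams, t)
        # The nearest camera stamp is either just before or just after t
        candidates = cams[max(i - 1, 0):i + 1]
        if not candidates:
            continue
        best = min(candidates, key=lambda c: abs(c - t))
        if abs(best - t) <= max_dt:
            pairs.append((t, best))
    return pairs


print(pair_nearest([0.0, 0.1, 0.2], [0.01, 0.12, 0.5], 0.03))
```

Here the scan at 0.2 s is dropped because the nearest frame is 80 ms away.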

Camera<-->IMU extrinsic calibration

Refer to kalibr.

Camera<-->Camera extrinsic calibration

Refer to kalibr.

LiDAR<-->LiDAR extrinsic calibration

Refer to lidar_appearance_calibration or MLC.

TO DO

- Detailed introduction to calibration tools between camera and LiDAR

- Detailed introduction to calibration tools between camera and IMU

- Detailed introduction to calibration tools between LiDARs

- Detailed introduction to calibration tools between cameras

- Detailed introduction to calibration tools of camera intrinsic parameter

Reference

- Installing the Velodyne driver under ROS on Ubuntu (Ubuntu在ROS下安装Velodyne驱动)

- Velodyne driver

- Xsens IMU driver

- [Sensors] Using the Xsens IMU (【传感器】IMU Xsens使用)

- rslidar driver

- lidar_imu_calib

- imu_utils

- livox_ros_driver

- ouster_example

- HesaiLidar_General_ROS

File tree

```
├── calib_pkg   # including the calibration tools
├── driver_pkg  # including the ROS drivers
└── read_pkg    # including config file, rviz file and launch file to read and display sensor data
```