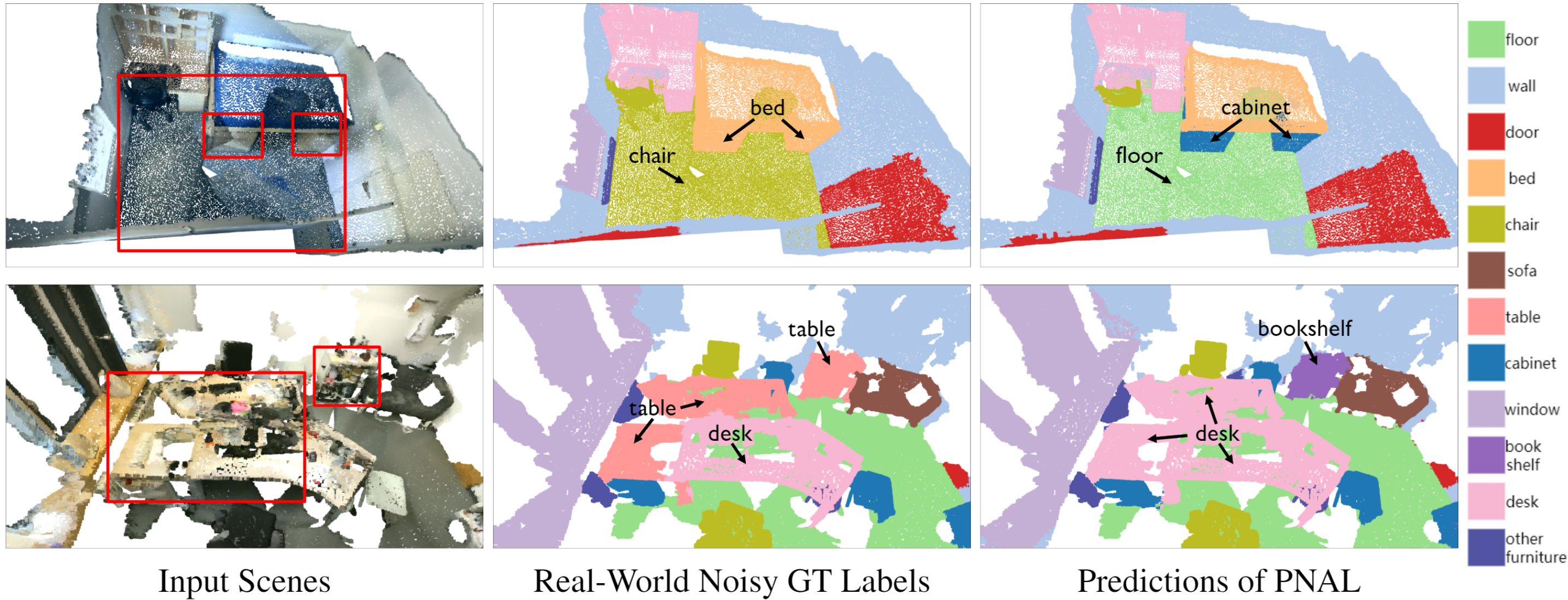

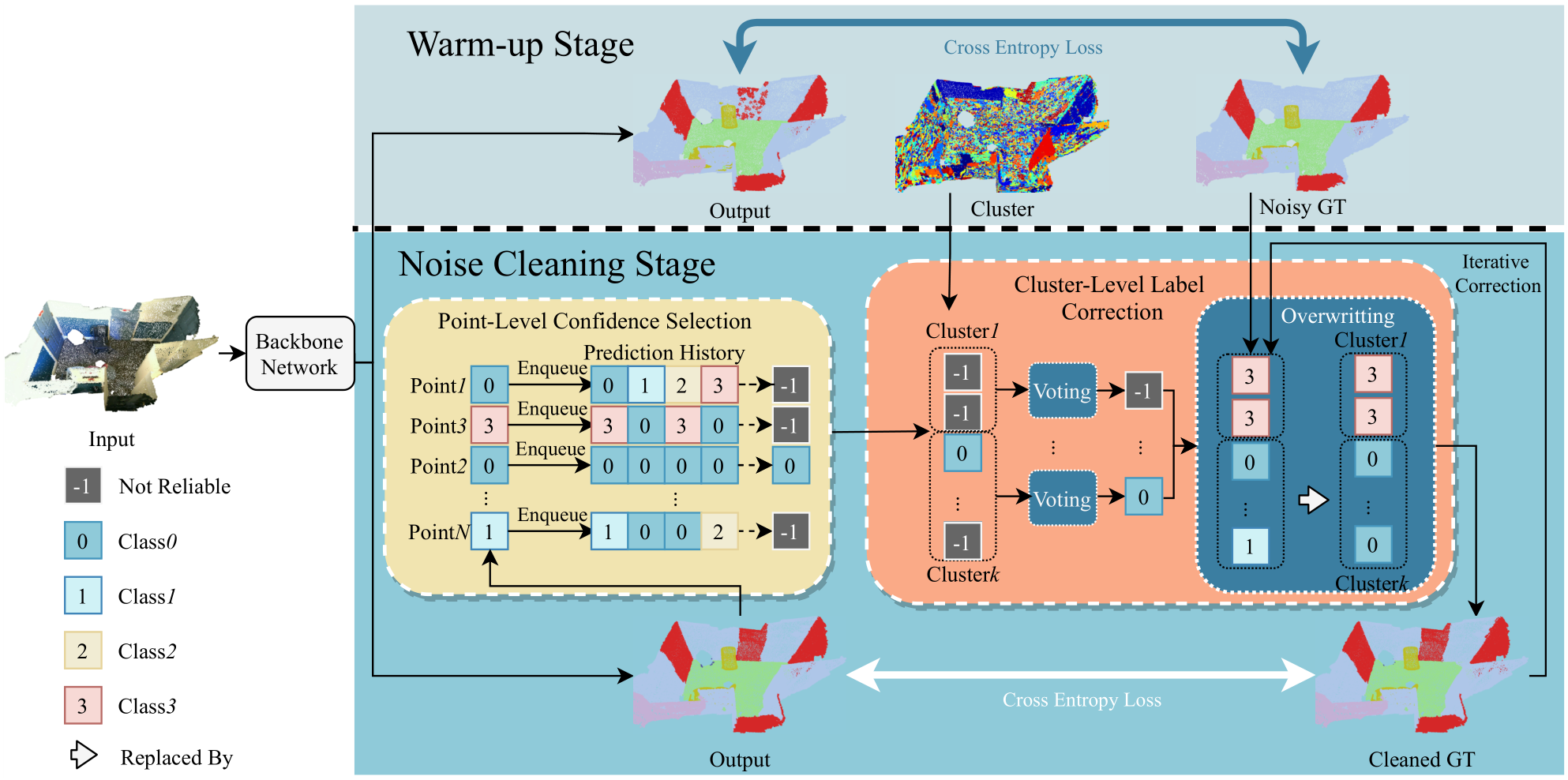

This repository is the official PyTorch implementation of the Point Noise-Adaptive Learning (PNAL) framework proposed in our ICCV 2021 oral paper, *Learning with Noisy Labels for Robust Point Cloud Segmentation*.

Shuquan Ye<sup>1</sup>, Dongdong Chen<sup>2</sup>, Songfang Han<sup>3</sup>, Jing Liao<sup>1</sup>

<sup>1</sup>City University of Hong Kong, <sup>2</sup>Microsoft Cloud AI, <sup>3</sup>University of California

2021/10/17: initial release.

- Ubuntu 18.04
- Conda with Python 3.7.7
- PyTorch 1.5.0
- CUDA 10.1, cuDNN 7.6.3
- torchvision 0.6.0
- torch_geometric 1.6.1

By default, we train on a single GPU with at least 10,000 MiB of memory, using a batch size of 12.

Download and unzip.

Note that point cloud data is NOT included in the above file, in accordance with the ScanNet Terms of Use.

Download all meshes from the ScanNetv2 validation set to `mesh/`.

Then extract the data by running:

```
python find_rgb.py
```

Download `per60_0.018_DBSCANCluster`, the S3DIS dataset with 60% symmetric label noise, clustered by DBSCAN.

Move it to `NL_S3DIS/` and unzip it.

TODO

Download and unzip `data_raw.zip`, the clean data from which the noisy labels are generated.

For example, to create 60% symmetric noise:

```
python make_NL_S3DIS.py --noiserate_percent 60 --alpha 0.85 --root data_with_ins_label
```

You can also switch clustering methods and noise types in `S3DIS_instance`.
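To make the noise model more concrete, here is a minimal sketch of cluster-level symmetric noise. It assumes (this is not taken from `make_NL_S3DIS.py`) that each cluster, such as one found by DBSCAN, is corrupted as a whole with probability equal to the noise rate, and that a corrupted cluster receives a class drawn uniformly from all classes other than its majority clean label. The function name `symmetric_noise_per_cluster` is hypothetical, and the sketch does not model the script's `--alpha` option.

```python
import random
from collections import Counter
from typing import Dict, List


def symmetric_noise_per_cluster(
    cluster_ids: List[int],
    labels: List[int],
    num_classes: int,
    noise_rate: float,
    seed: int = 0,
) -> List[int]:
    """Hypothetical sketch: relabel whole clusters to a uniformly chosen wrong class."""
    if len(cluster_ids) != len(labels):
        raise ValueError("cluster_ids and labels must have the same length")
    rng = random.Random(seed)

    # Count clean labels inside each cluster to find its majority label.
    votes: Dict[int, Counter] = {}
    for cid, lab in zip(cluster_ids, labels):
        votes.setdefault(cid, Counter())[lab] += 1

    # Decide once per cluster whether it is corrupted and, if so, its new class.
    new_label: Dict[int, int] = {}
    for cid in sorted(votes):  # sorted order keeps results reproducible per seed
        majority = votes[cid].most_common(1)[0][0]
        if rng.random() < noise_rate:
            others = [c for c in range(num_classes) if c != majority]
            new_label[cid] = rng.choice(others)

    # Points in corrupted clusters take the new label; all other points keep theirs.
    return [new_label.get(cid, lab) for cid, lab in zip(cluster_ids, labels)]
```

With the 13 S3DIS classes, `symmetric_noise_per_cluster(cluster_ids, labels, 13, 0.6)` would corrupt roughly 60% of the clusters. Because clusters differ in size, the resulting point-level noise rate need not be exactly 60%.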

Download `ply_data_all_h5`, the raw S3DIS dataset.

Move it to `NL_S3DIS/raw` and unzip it.

Data preparation is then complete.

To check the noise rate, go into `NL_S3DIS/` and run:

```
python compare_labels.py
```

Be patient: the script prints the Overall Noise Rate when it finishes.
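As an illustration of what such a noise rate measures, the sketch below pools per-point label mismatches over all rooms. It assumes, without confirmation from `compare_labels.py`, that the Overall Noise Rate is the fraction of points whose noisy label differs from the clean one; `overall_noise_rate` is a hypothetical name.

```python
from typing import Iterable, Sequence, Tuple


def overall_noise_rate(rooms: Iterable[Tuple[Sequence[int], Sequence[int]]]) -> float:
    """Hypothetical sketch: fraction of mislabeled points, pooled over all rooms.

    Each item in `rooms` is a (clean_labels, noisy_labels) pair for one room.
    """
    total = 0
    wrong = 0
    for clean, noisy in rooms:
        if len(clean) != len(noisy):
            raise ValueError("clean and noisy labels must have the same length")
        total += len(clean)
        wrong += sum(c != n for c, n in zip(clean, noisy))
    if total == 0:
        raise ValueError("no points given")
    return wrong / total


rooms = [([0, 1, 2], [0, 1, 5]), ([3, 4], [3, 6])]
print(overall_noise_rate(rooms))  # 0.4
```

Pooling over points, rather than averaging per-room rates, weights large rooms more heavily.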

To train with our PNAL pipeline under different configs, run:

```
bash run_pnal.sh
```

For example, our prepared `configs/PNAL.yaml` runs DGCNN on S3DIS with 60% symmetric noise.

To train without our PNAL pipeline under different configs, run:

```
bash run.sh
```

For example, our prepared `configs/SCE.yaml` runs DGCNN on S3DIS with the Symmetric Cross Entropy (SCE) loss. To use the common Cross Entropy (CE) loss or the Generalized Cross Entropy (GCE) loss instead, change `LOSS_FUNCTION` from `SCE` to `""` or `GCE`, respectively, and so on.
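For reference, the three losses named above can be written for a single point as follows. This is a plain-Python sketch of the standard formulations (GCE from Zhang & Sabuncu, 2018; SCE from Wang et al., 2019), not this repository's implementation. The hyperparameters `q=0.7`, `alpha=0.1`, `beta=1.0` and the clipped `log(0) = -4` are common defaults assumed here, and the `LOSSES` table keyed by `LOSS_FUNCTION` values is hypothetical.

```python
import math
from typing import Callable, Dict, List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the max logit for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def ce_loss(logits: Sequence[float], target: int) -> float:
    # Cross entropy: -log p_target.
    return -math.log(softmax(logits)[target])


def gce_loss(logits: Sequence[float], target: int, q: float = 0.7) -> float:
    # Generalized cross entropy: (1 - p_target^q) / q, bounded above by 1/q.
    p = softmax(logits)[target]
    return (1.0 - p ** q) / q


def sce_loss(logits: Sequence[float], target: int, alpha: float = 0.1,
             beta: float = 1.0, log_zero: float = -4.0) -> float:
    p = softmax(logits)
    ce = -math.log(p[target])
    # Reverse CE: -sum_k p_k * log(onehot_k), with log(0) clipped to log_zero.
    # Only non-target classes contribute, giving -log_zero * (1 - p_target).
    rce = -log_zero * (1.0 - p[target])
    return alpha * ce + beta * rce


# Hypothetical dispatch mirroring the LOSS_FUNCTION values mentioned above.
LOSSES: Dict[str, Callable[..., float]] = {"": ce_loss, "SCE": sce_loss, "GCE": gce_loss}

print(LOSSES["GCE"]([2.0, 0.5, -1.0], 0))
```

GCE and the reverse-CE term of SCE are bounded (by `1/q` and `-log_zero`, respectively), whereas CE grows without limit as the target probability approaches zero, which is why the former are less dominated by confidently mislabeled points.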

```bibtex
@article{pnal2021,
  author  = {Ye, Shuquan and Chen, Dongdong and Han, Songfang and Liao, Jing},
  title   = {Learning with Noisy Labels for Robust Point Cloud Segmentation},
  journal = {International Conference on Computer Vision},
  year    = {2021},
}
```

😸 Many thanks to the authors of SELFIE, pytorch_geometric, and Truncated-Loss for their flexible codebases.

😽 I would like to give particular thanks to Jiaying Lin, my special friend, for his constructive suggestions, his generous support of this project, and the tremendous love he has given me~