This repository contains the code for

@inproceedings{yew2020-RobustSync,

title={Learning Iterative Robust Transformation Synchronization},

author={Yew, Zi Jian and Lee, Gim Hee},

booktitle={International Conference on 3D Vision (3DV)},

year={2021}

}

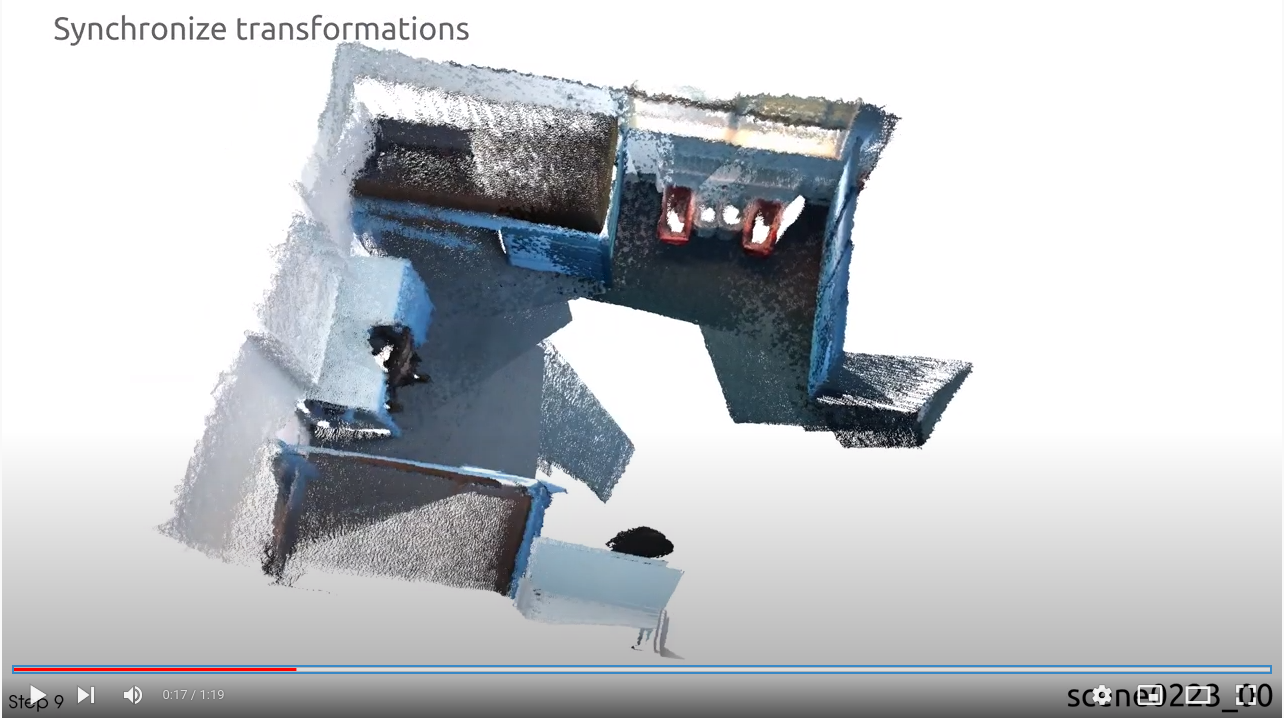

See the following link for a video demonstration on the ScanNet dataset.

This code has been tested on PyTorch 1.7.1 (with CUDA 10.2) and PyTorch Geometric 1.7.1. Note that our code currently does not support PyTorch Geometric v2. You can install the required packages by running:

pip install -r requirements.txt

Then install torch geometric (and its required packages) by running the following:

export TORCH=1.7.1

export CUDA=cu102

pip install torch-scatter==2.0.7 -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html

pip install torch-sparse==0.6.9 -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html

pip install torch-cluster==1.5.9 -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html

pip install torch-spline-conv==1.2.1 -f https://pytorch-geometric.com/whl/torch-${TORCH}+${CUDA}.html

pip install torch-geometric==1.7.1

If you want to process the ScanNet data yourself, you will also need to install the following:

- OpenCV (pip install opencv-python)
- Open3D (pip install open3d)
- nibabel (pip install nibabel)
- Minkowski Engine (pip install MinkowskiEngine==0.4.3)
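Since the code depends on specific package versions, it can help to check the environment before running anything. The following is a minimal sketch using only the Python standard library; the helper names `installed_version` and `find_mismatches` are illustrative and not part of this repository, and the pinned versions are copied from the installation commands above.

```python
from importlib import metadata

# Versions pinned in the installation instructions above.
PINNED = {
    "torch": "1.7.1",
    "torch-scatter": "2.0.7",
    "torch-sparse": "0.6.9",
    "torch-cluster": "1.5.9",
    "torch-spline-conv": "1.2.1",
    "torch-geometric": "1.7.1",
}


def installed_version(package):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(pinned, lookup=installed_version):
    """Return {package: (expected, found)} for every package that deviates."""
    mismatches = {}
    for package, expected in pinned.items():
        found = lookup(package)
        # A local build tag such as "1.7.1+cu102" still counts as a match.
        if found is None or found.split("+")[0] != expected:
            mismatches[package] = (expected, found)
    return mismatches


if __name__ == "__main__":
    for package, (expected, found) in find_mismatches(PINNED).items():
        print(f"{package}: expected {expected}, found {found}")
```

An empty result means every pinned package was found at the expected version. The `lookup` argument exists only so the comparison can be exercised without touching the real environment.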

For this, you may either use the provided scripts to generate the dataset yourself, or download the dataset:

- Generate data: Run the MATLAB script src/scripts/NeuRoRA/Example_generate_data_pytourch.m. The generated data (~7GB) will be stored in data/neurora/gt_graph_random_large_outliers.h5. Note that due to randomness in the generation scripts, the generated data will not be exactly the same as ours and the evaluation results might differ slightly.

- Download data: Please email me if you need the exact data I used.

We provide the view graphs for our training/validation/test sets here (85MB). Place the downloaded data into data/.

If you wish to reprocess the raw dataset yourself, use the following steps for the train/val dataset:

1. Download the raw datasets from the ScanNet website. You need the .sens files.

2. Extract the depth images and poses from the .sens files, e.g. using the SensReader.

3. Download the pretrained models for FCGF and the pairwise registration block of LMPR, and place them in

4. Run the script src/data_processing/process_scannet.py to generate the training/validation dataset.

For the test dataset, you may contact the authors of LMPR to get the point clouds used, after which you can run step 4 above to generate the view graphs. Note that the relative poses will differ slightly from those reported in their/our paper due to randomness (e.g. from RANSAC).
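For readers new to the term, a view graph can be thought of as scans (nodes) connected by estimated relative poses (edges). The sketch below builds a toy, noise-free view graph in plain Python to show the consistency that synchronization relies on. It assumes the convention T_ij = T_i^{-1} T_j, which may not match the convention or file layout used by the provided data; all function names are illustrative.

```python
import math


def matmul(a, b):
    """Multiply two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def rigid_inverse(t):
    """Invert a 4x4 rigid transform [R | p] as [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def pose(yaw_deg, x, y, z):
    """Build a 4x4 pose from a rotation about the z-axis and a translation."""
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    return [[c, -s, 0.0, x], [s, c, 0.0, y], [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def relative_pose(t_i, t_j):
    """Relative pose under the assumed convention T_ij = T_i^{-1} T_j."""
    return matmul(rigid_inverse(t_i), t_j)


# Toy view graph: three absolute poses (nodes) and noise-free edges between them.
absolute = {0: pose(0, 0, 0, 0), 1: pose(30, 1, 0, 0), 2: pose(75, 1, 2, 0)}
edges = {(i, j): relative_pose(absolute[i], absolute[j])
         for i, j in [(0, 1), (1, 2), (0, 2)]}

# Without noise, chaining edges 0->1 and 1->2 reproduces edge 0->2.
chained = matmul(edges[(0, 1)], edges[(1, 2)])
```

With real pairwise registrations the chained and direct edges disagree, for example because of the RANSAC randomness mentioned above, and some edges are outliers.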

We provide the view graphs for training here. Place the downloaded data into data/.

Alternatively, you may process the data yourself by running the script src/data_processing/process_3dmatch.py on the 3DMatch dataset downloaded from the LMPR project page.

Our pretrained checkpoints are found in this repository under src/ckpt/:

| Checkpoint | Description |
|---|---|
| neurora.pth | Checkpoint for NeuRoRA synthetic dataset (Table 1 in paper) |
| scannet.pth | Checkpoint trained on ScanNet dataset (Table 3, row 6 in paper) |
| 3dmatch.pth | Checkpoint trained on 3DMatch dataset (Table 3, row 5 in paper) |

Run the following to evaluate our pretrained model (change the --resume argument accordingly if you're using your own trained model):

cd src

python inference_graphs.py --dev --dataset neurora --hidden_dim 64 --val_batch_size 1 --resume ckpt/neurora.pth

The metrics will be printed at the end of inference, and the predicted absolute rotations are also saved in logdev/computed_transforms/output_transforms.h5. Note that the metrics might differ slightly if you are using your own generated data.

Also, since our evaluation implementation might differ from the official MATLAB ones, it is recommended that you rerun the evaluation on the predicted transforms by following the next step.

Evaluation using official MATLAB scripts. We also provide the functionality to evaluate the predicted rotations using the MATLAB scripts from NeuRoRA, which we provide at src/scripts/NeuRoRA/test_synthetic.m (change the path on line 6 of that script if it is incorrect).

Run the following to evaluate our pretrained model. As before, change the --resume argument accordingly if you're using your own trained model:

cd src

python inference_graphs.py --dev --dataset scannet --resume ckpt/scannet.pth

The metrics will be printed at the end of inference.
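If you evaluate several checkpoints regularly, the two documented commands can be scripted. The following is a small sketch, not part of this repository: `build_inference_cmd` and `run_all` are hypothetical helpers, and the only flags used are the ones shown in the commands above.

```python
import subprocess
import sys


def build_inference_cmd(dataset, checkpoint, hidden_dim=None, val_batch_size=None):
    """Assemble an inference_graphs.py command line from the documented flags."""
    cmd = [sys.executable, "inference_graphs.py", "--dev", "--dataset", dataset]
    if hidden_dim is not None:
        cmd += ["--hidden_dim", str(hidden_dim)]
    if val_batch_size is not None:
        cmd += ["--val_batch_size", str(val_batch_size)]
    cmd += ["--resume", checkpoint]
    return cmd


# The two evaluation runs documented above.
RUNS = [
    build_inference_cmd("neurora", "ckpt/neurora.pth", hidden_dim=64, val_batch_size=1),
    build_inference_cmd("scannet", "ckpt/scannet.pth"),
]


def run_all(runs, cwd="src"):
    """Run each command from the src/ directory, stopping at the first failure."""
    for cmd in runs:
        subprocess.run(cmd, cwd=cwd, check=True)
```

Calling `run_all(RUNS)` from the repository root would execute both evaluations in sequence. `sys.executable` is used in place of `python` so that the runs use the same interpreter (and environment) as the script itself.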

We use a smaller network for rotation averaging, compared to the other experiments on rigid-motion synchronization.

cd src

python train_graph.py --dataset neurora --hidden_dim 64 --train_batch_size 1 --val_batch_size 2

The performance should saturate around 1.6-1.7 deg mean rotation error during validation.
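To make the "mean rotation error" figure concrete, the sketch below computes the geodesic angle between pairs of rotation matrices and averages it. This is only an illustration of the metric in plain Python: the repository's evaluation code and the official MATLAB scripts may differ, for example by first aligning the predictions to the ground truth with a global rotation, which this sketch does not do. The function names are illustrative.

```python
import math


def rotation_angle_deg(r_a, r_b):
    """Geodesic angle in degrees between two 3x3 rotation matrices (nested lists)."""
    # trace(r_a^T r_b) equals the sum of element-wise products.
    trace = sum(r_a[i][j] * r_b[i][j] for i in range(3) for j in range(3))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def mean_rotation_error_deg(predicted, ground_truth):
    """Average the per-rotation angular error over paired lists of rotations."""
    errors = [rotation_angle_deg(p, g) for p, g in zip(predicted, ground_truth)]
    return sum(errors) / len(errors)


def rot_z(deg):
    """Rotation about the z-axis, used here only to build example inputs."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


# Two predictions that are off by 1 and 3 degrees respectively.
example_error = mean_rotation_error_deg(
    [rot_z(0), rot_z(0)], [rot_z(1), rot_z(3)]
)
```

In this toy example the mean error is 2 degrees, up to floating-point rounding.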

For our experiments, we tried two settings:

- Train using ScanNet dataset:

cd src

python train_graph.py --dataset scannet

- Train using 3DMatch dataset (direct generalisation test):

cd src

python train_graph.py --dataset 3dmatch