A collection and summary of state-of-the-art (SOTA) point cloud methods.

- Point cloud with language model
- Point cloud change detection

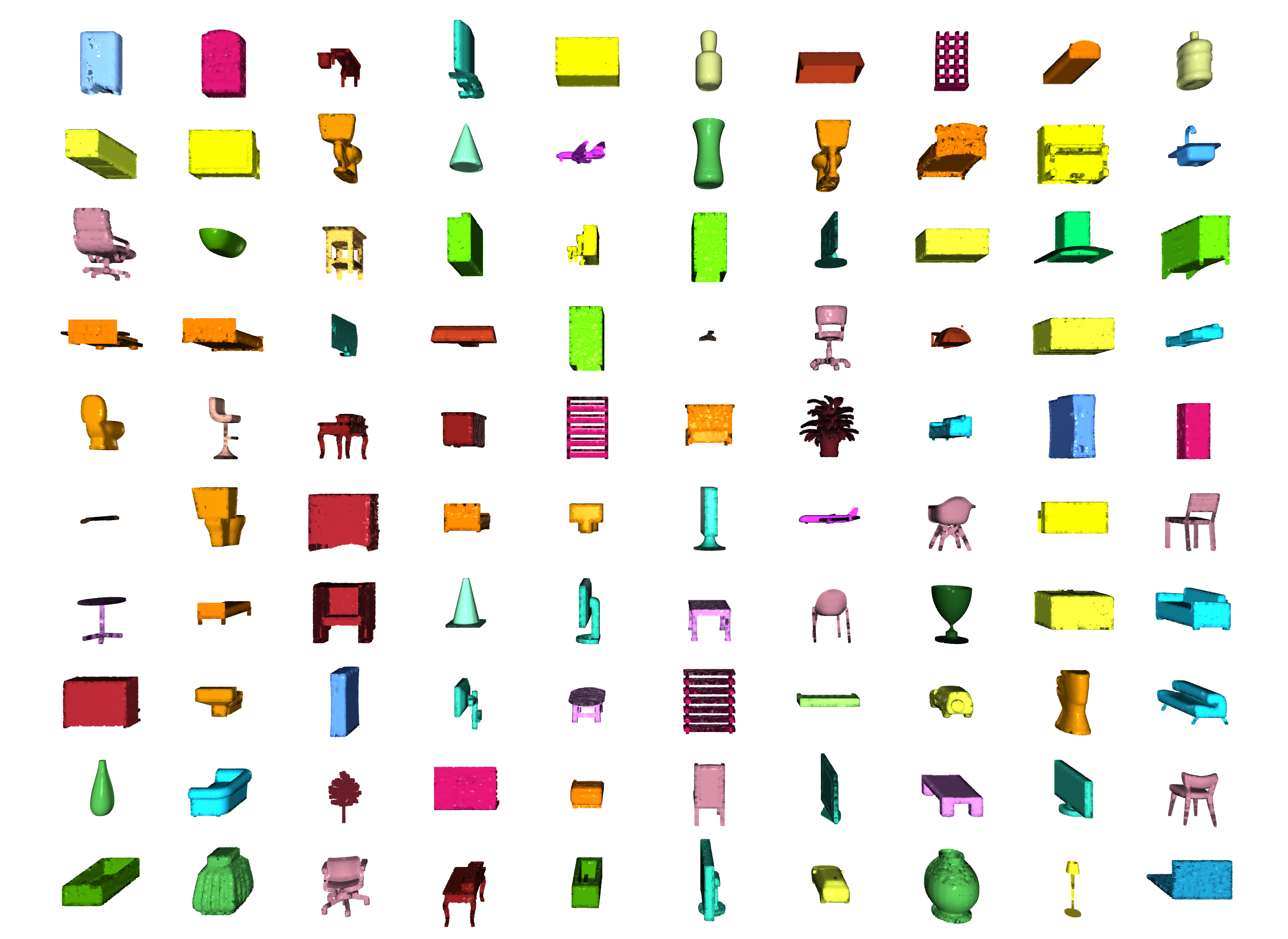

- [ModelNet] ModelNet . [

classification] - [scanobjectnn] The dataset contains ~15,000 objects that are categorized into 15 categories with 2902 unique object instances [

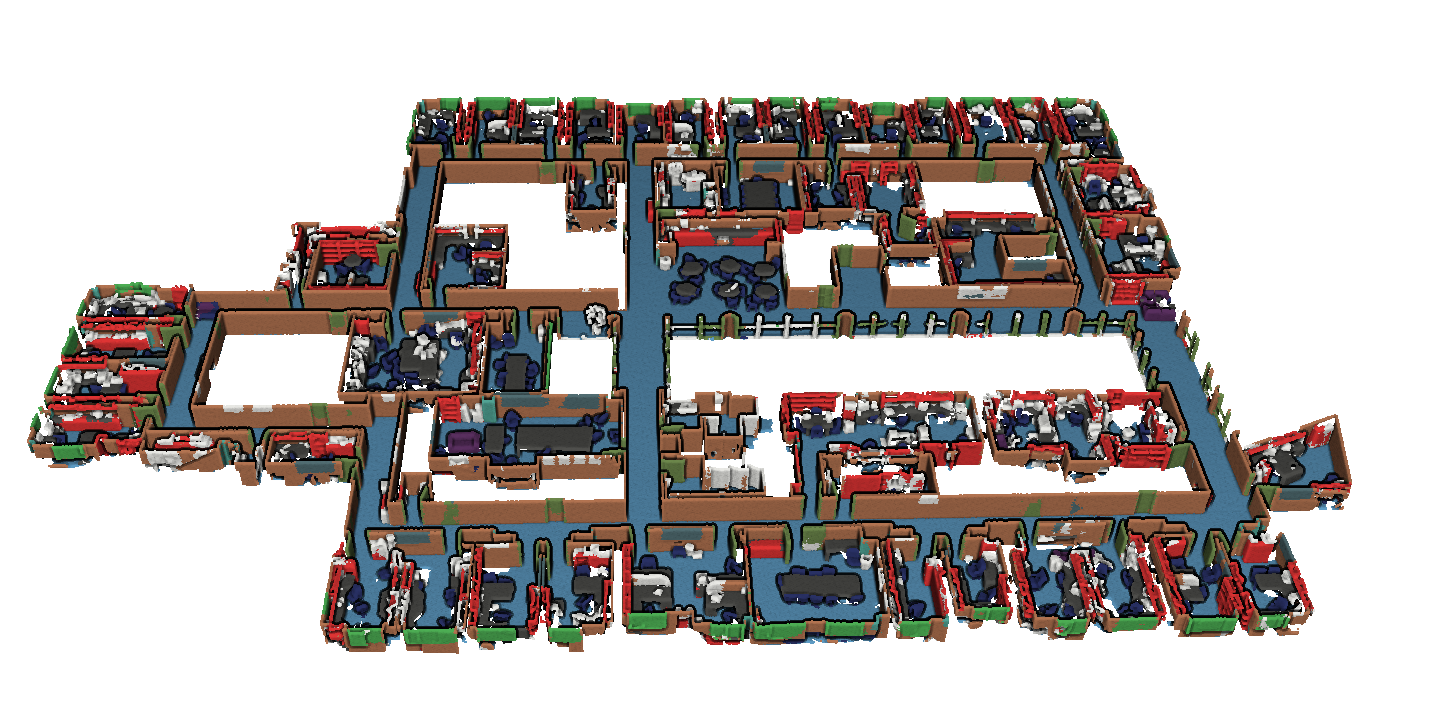

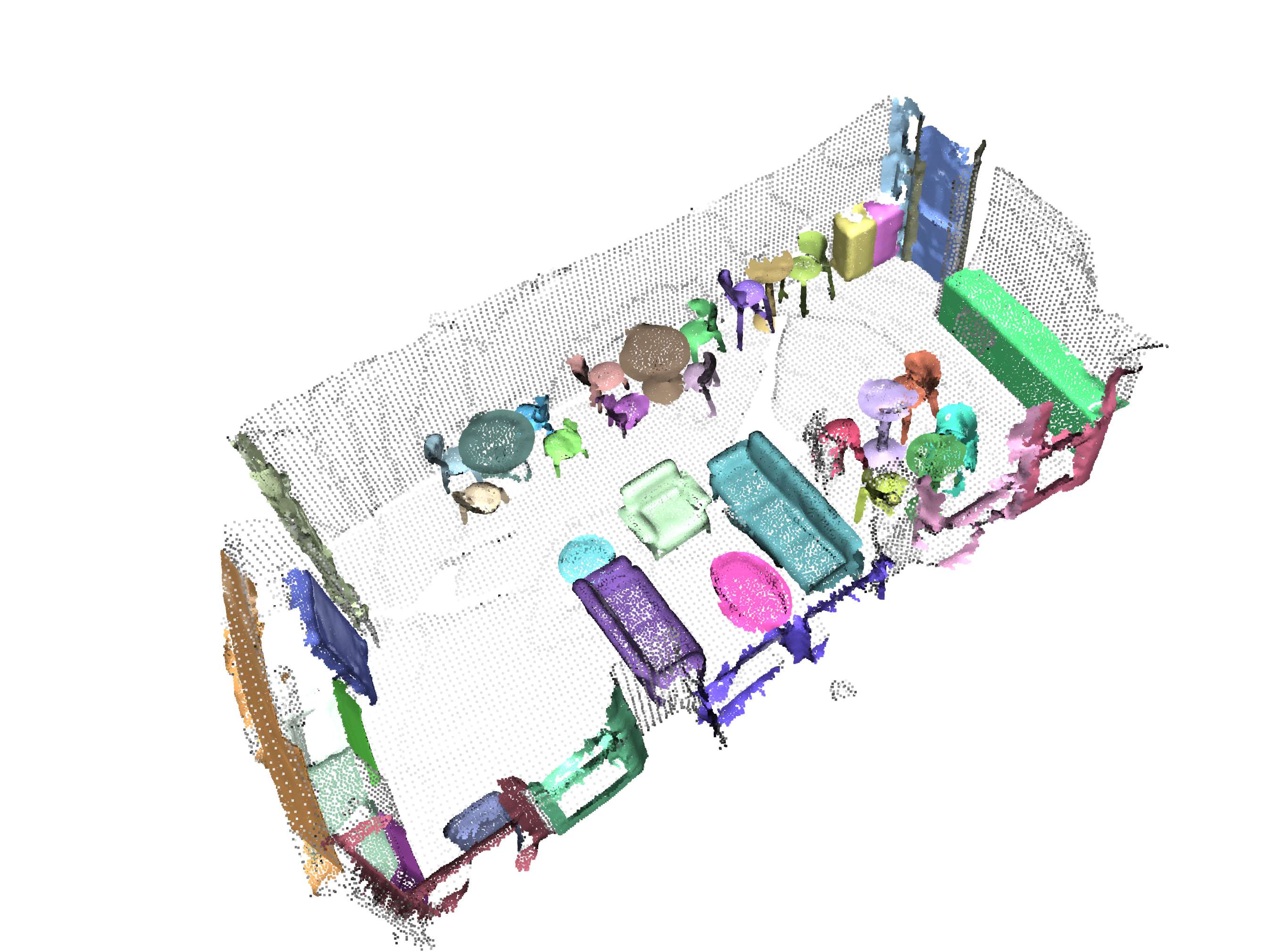

classification] - [ScanNet] Richly-annotated 3D Reconstructions of Indoor Scenes. [

classificationsegmentation] - [S3DIS] The Stanford Large-Scale 3D Indoor Spaces Dataset. [

segmentation] - [npm3d] A Large-scale Mobile LiDAR Dataset for Semantic Segmentation of Urban Roadways [

segmentation] - [KITTI-360] Corresponding to over 320k images and 100k laser scans in a driving distance of 73.7km. annotate both static and dynamic 3D scene elements with rough bounding primitives and transfer this information into the image domain, resulting in dense semantic & instance annotations for both 3D point clouds and 2D images. [

segmentation] - [semantic3d] Large-Scale Point Cloud Classification Benchmark! a large labelled 3D point cloud data set of natural scenes with over 4 billion points in total. It also covers a range of diverse urban scenes. [

segmentation] - SemanticKITTI Sequential Semantic Segmentation, 28 classes, for autonomous driving. All sequences of KITTI odometry labeled. [

segmentation] - [ScribbleKITTI] Choose SemanticKITTI for its current wide use and established benchmark,ScribbleKITTI contains 189 million labeled points corresponding to only 8.06% of the total point count [

segmentation] - [STPLS3D] a large-scale photogrammetry 3D point cloud dataset, termed Semantic Terrain Points Labeling - Synthetic 3D (STPLS3D), which is composed of high-quality, rich-annotated point clouds from real-world and synthetic environments.[

segmentation] - [DALES] A Large-scale Aerial LiDAR Data Set for Point Cloud Segmentation,a new large-scale aerial LiDAR data set with nearly a half-billion points spanning 10 square kilometers of area [

segmentation] - [SensatUrban] This dataset is an urban-scale photogrammetric point cloud dataset with nearly three billion richly annotated points, which is five times the number of labeled points than the existing largest point cloud dataset. Our dataset consists of large areas from two UK cities, covering about 6 km^2 of the city landscape. In the dataset, each 3D point is labeled as one of 13 semantic classes, such as ground, vegetation, car, etc.. [

segmentation] *[H3D] H3D propose a benchmark consisting of highly dense LiDAR point clouds captured at four different epochs. The respective point clouds are manually labeled into 11 classes and are used to derive labeled textured 3D meshes as an alternative representation. UAV-based simultaneous data collection of both LiDAR data and imagery from the same platform,High density LiDAR data of 800 points/m² enriched by RGB colors of on board cameras incorporating a GSD of 2-3 cm [segmentation] - [KITTI] The KITTI Vision Benchmark Suite. [

detection] - [Waymo] The Waymo Open Dataset is comprised of high resolution sensor data collected by Waymo self-driving cars in a wide variety of conditions.[

detectionsegmentation] - [APOLLOSCAPE] The nuScenes dataset is a large-scale autonomous driving dataset.[

detectionsegmentation] - [nuScenes] The nuScenes dataset is a large-scale autonomous driving dataset.[

detectionsegmentation] - [3D Match] Keypoint Matching Benchmark, Geometric Registration Benchmark, RGB-D Reconstruction Datasets. [

registrationreconstruction] - [ETH] Challenging data sets for point cloud registration algorithms [

registration] - [objaverse] Objaverse-XL is an open dataset of over 10 million 3D objects! With it, we train Zero123-XL, a foundation model for 3D, observing incredible 3D generalization abilities.[

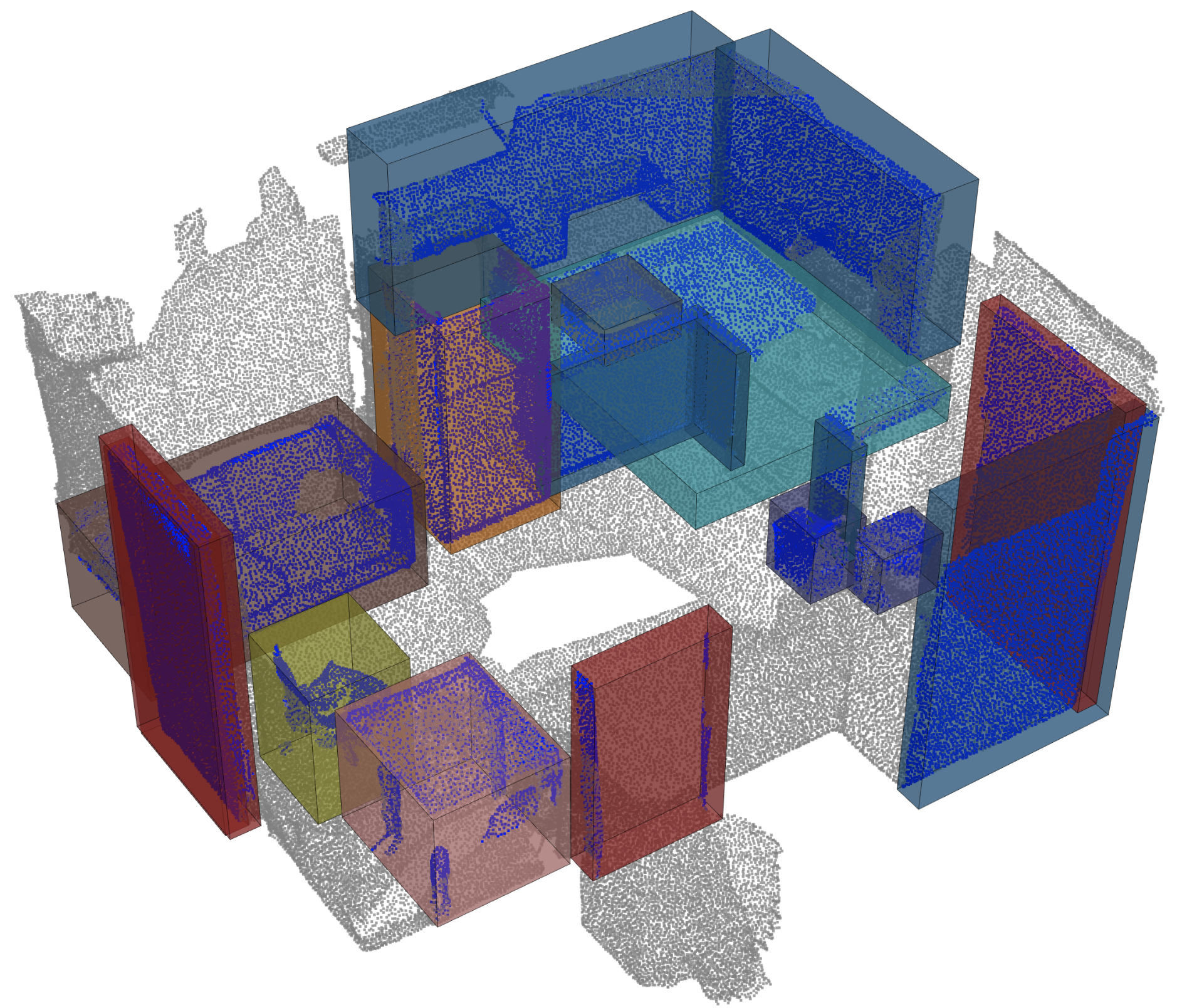

Multi-modal] - [ScanRfer] 3D Object Localization in RGB-D Scans using Natural Language.[

Multi-modal] - [DriveLM] DriveLM is an autonomous driving (AD) dataset incorporating linguistic information. Through DriveLM, we want to connect large language models and autonomous driving systems, and eventually introduce the reasoning ability of Large Language Models in autonomous driving (AD) to make decisions and ensure explainable planning.

[

Multi-modal] - [ScanQA] 3D Question Answering for Spatial Scene Understanding. A new 3D spatial understanding task for 3D question answering (3D-QA). In the 3D-QA task, models receive visual information from the entire 3D scene of a rich RGB-D indoor scan and answer given textual questions about the 3D scene

[

Multi-modal] - [urb3dcd-v2] The dataset is based on LoD2 models of the first and second districts of Lyon, France. To conduct fair qualitative and quantitative evaluation of point clouds change detection techniques. This first version of the dataset is composed of point clouds at a challenging low resolution of around 0.5 points/meter²

[

Change-Detection]
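Segmentation benchmarks in this list (for example S3DIS and SemanticKITTI) are commonly reported with mean intersection-over-union (mIoU), where each class scores TP / (TP + FP + FN). The following is a minimal pure-Python sketch of that metric, assuming one integer class label per point and a hypothetical `ignore_index` for unlabeled points. It is not the official evaluation script of any benchmark; benchmarks differ in details such as which classes are ignored and how classes absent from both prediction and ground truth are treated.

```python
from collections import Counter
from typing import Sequence


def mean_iou(pred: Sequence[int], gt: Sequence[int], num_classes: int,
             ignore_index: int = -1) -> float:
    """Mean per-class IoU = TP / (TP + FP + FN), averaged over classes that occur.

    Points whose ground-truth label equals `ignore_index` (a hypothetical
    convention for unlabeled points) are excluded. Classes that appear in
    neither prediction nor ground truth are skipped rather than counted as 0.
    """
    if len(pred) != len(gt):
        raise ValueError("pred and gt must have the same length")
    # Confusion counts keyed by (gt_class, pred_class).
    confusion = Counter((g, p) for p, g in zip(pred, gt) if g != ignore_index)
    ious = []
    for c in range(num_classes):
        tp = confusion[(c, c)]
        fp = sum(n for (g, p), n in confusion.items() if p == c and g != c)
        fn = sum(n for (g, p), n in confusion.items() if g == c and p != c)
        denom = tp + fp + fn
        if denom > 0:
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    # Class IoUs: 1/2, 2/3, 1 -> mean 13/18
    print(round(mean_iou([0, 1, 1, 1, 2], [0, 0, 1, 1, 2], num_classes=3), 4))  # 0.7222
```

Skipping absent classes keeps a small sample from being penalised for classes it never contains; a benchmark that averages over a fixed class list would instead need every class to appear in its test split.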

| Method | Title | Code | Year |
|---|---|---|---|
| GraphBEV | Towards Robust BEV Feature Alignment for Multi-Modal 3D Object Detection | -- | 2024 |
| DSVT | Dynamic Sparse Voxel Transformer with Rotated Sets | github | 2023 |
| BEVFusion | An efficient and generic multi-task multi-sensor fusion framework | github | 2023 |
| Pillar R-CNN | Pillar R-CNN for Point Cloud 3D Object Detection | github | 2023 |
| TransFusion | Robust LiDAR-Camera Fusion for 3D Object Detection with Transformers | github | 2022 |
| CenterFormer | CenterFormer: Center-based Transformer for 3D Object Detection | github | 2022 |
| CenterPoint | Center-based 3D Object Detection and Tracking | github | 2020 |
| TR3D | Towards Real-Time Indoor 3D Object Detection | github | 2023 |
| PV-RCNN++ | Point-Voxel Feature Set Abstraction With Local Vector Representation for 3D Object Detection | github | 2021 |
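Several detectors in the table above (for example DSVT and Pillar R-CNN) operate on sparse voxels or pillars rather than on raw points. The sketch below illustrates only the generic grouping step, assuming points are plain (x, y, z) tuples; the function name `voxelize` and its parameters (`origin`, `max_points_per_voxel`) are hypothetical and do not mirror any of these codebases. A pillar is the special case in which the z voxel size spans the whole vertical range.

```python
from collections import defaultdict
from math import floor
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]


def voxelize(points: Sequence[Point],
             voxel_size: Tuple[float, float, float],
             origin: Point = (0.0, 0.0, 0.0),
             max_points_per_voxel: int = 32) -> Dict[VoxelKey, List[Point]]:
    """Group points into a sparse voxel grid (hypothetical helper).

    Each point maps to the integer index floor((coord - origin) / size) on
    every axis. Only occupied voxels are stored, so memory grows with the
    number of non-empty voxels rather than with the full grid volume.
    Points beyond `max_points_per_voxel` in one voxel are dropped.
    """
    voxels: Dict[VoxelKey, List[Point]] = defaultdict(list)
    for p in points:
        key = (floor((p[0] - origin[0]) / voxel_size[0]),
               floor((p[1] - origin[1]) / voxel_size[1]),
               floor((p[2] - origin[2]) / voxel_size[2]))
        bucket = voxels[key]
        if len(bucket) < max_points_per_voxel:
            bucket.append(p)
    return dict(voxels)


if __name__ == "__main__":
    pts = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.4), (0.7, 0.1, 0.1), (0.1, 0.1, -2.0)]
    # Cubic voxels of 0.5 m.
    print(sorted(voxelize(pts, (0.5, 0.5, 0.5))))
    # Pillars: a 4 m z extent starting at z = -3 covers every point vertically.
    print({k: len(v) for k, v in voxelize(pts, (0.5, 0.5, 4.0), origin=(0.0, 0.0, -3.0)).items()})
```

A dictionary keyed by integer indices is the simplest sparse structure; real detectors typically build the same mapping on the GPU and then encode each voxel's points into a feature vector.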

| Method | Title | Code | Year |
|---|---|---|---|
| PoinTr | PoinTr: Diverse Point Cloud Completion with Geometry-Aware Transformers | github | 2023 |
| MaskSurf | Masked Surfel Prediction for Self-Supervised Point Cloud Learning | github | 2022 |
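Completion methods such as PoinTr are commonly evaluated with the Chamfer distance between the predicted and ground-truth point sets. Below is a brute-force sketch, assuming small point sets given as (x, y, z) tuples. Conventions differ between papers (squared vs. unsquared distances, summing vs. averaging the two directions), so this is illustrative rather than a reimplementation of any paper's metric.

```python
from math import dist
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def chamfer_distance(a: Sequence[Point], b: Sequence[Point],
                     squared: bool = False) -> float:
    """Symmetric Chamfer distance, brute force in O(|a| * |b|).

    For every point, take the distance to its nearest neighbour in the other
    set (squared if `squared` is True), average within each direction, and
    sum the two directions.
    """
    if not a or not b:
        raise ValueError("both point sets must be non-empty")

    def one_way(src: Sequence[Point], dst: Sequence[Point]) -> float:
        total = 0.0
        for p in src:
            d = min(dist(p, q) for q in dst)
            total += d * d if squared else d
        return total / len(src)

    return one_way(a, b) + one_way(b, a)


if __name__ == "__main__":
    a = [(0.0, 0.0, 0.0)]
    b = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0)]
    print(chamfer_distance(a, b))                # 1.0 + (1.0 + 2.0) / 2 = 2.5
    print(chamfer_distance(a, b, squared=True))  # 1.0 + (1.0 + 4.0) / 2 = 3.5
```

For realistic point counts, the inner nearest-neighbour search would be replaced by a k-d tree or a batched GPU implementation; the definition stays the same.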

| Method | Title | Code | Year |
|---|---|---|---|
| 3D-LLM | 3D-LLM: Injecting the 3D World into Large Language Models | github | 2023 |
| PointLLM | PointLLM: Empowering Large Language Models to Understand Point Clouds | github | 2023 |

| Year | |||

|---|---|---|---|

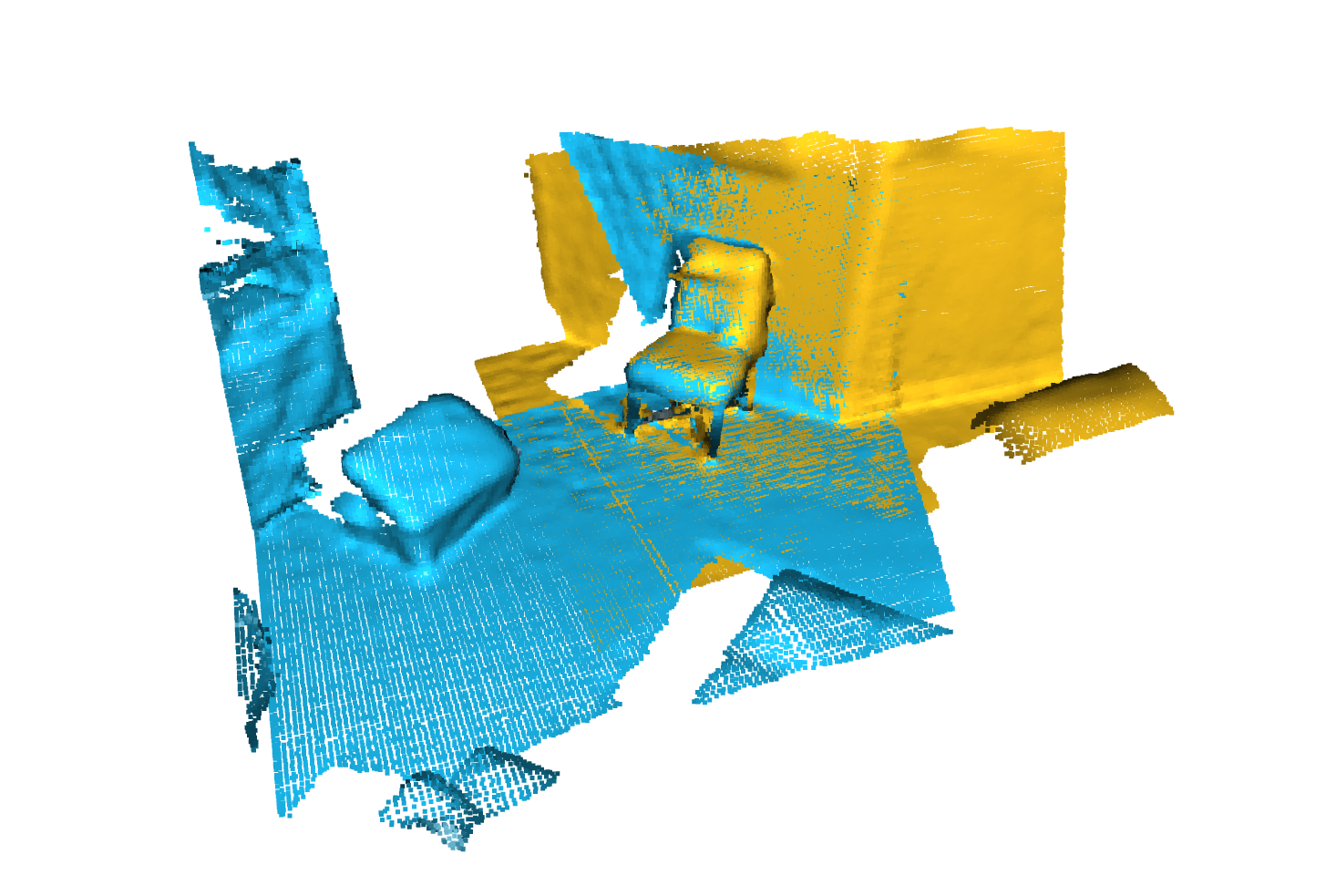

| DC3DCD | unsupervised learning for multiclass 3D point cloud change detection | github | 2023 |

| Siamese KPConv | 3D multiple change detection from raw point clouds using deep learning | github | 2023 |

| A Review | Three Dimensional Change Detection Using Point Clouds: A Review | github | 2022 |
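A simple non-learned baseline for point cloud change detection is to compare two epochs by nearest-neighbour (cloud-to-cloud) distance and flag points that lie farther than a threshold from the other epoch. This is not the method of DC3DCD or Siamese KPConv, which learn multiclass change labels. It is a brute-force sketch assuming both epochs are already co-registered in the same coordinate frame; `threshold` is a hypothetical parameter in the same unit as the coordinates.

```python
from math import dist
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def nearest_distances(src: Sequence[Point], ref: Sequence[Point]) -> List[float]:
    """Distance from every point in `src` to its nearest neighbour in `ref` (brute force)."""
    if not ref:
        raise ValueError("reference cloud must be non-empty")
    return [min(dist(p, q) for q in ref) for p in src]


def detect_changes(epoch_t0: Sequence[Point], epoch_t1: Sequence[Point],
                   threshold: float) -> Tuple[List[int], List[int]]:
    """Flag points whose nearest neighbour in the other epoch is farther than `threshold`.

    Returns (appeared, disappeared): indices into epoch_t1 of points with no
    close counterpart in epoch_t0, and indices into epoch_t0 of points with
    no close counterpart in epoch_t1.
    """
    appeared = [i for i, d in enumerate(nearest_distances(epoch_t1, epoch_t0))
                if d > threshold]
    disappeared = [i for i, d in enumerate(nearest_distances(epoch_t0, epoch_t1))
                   if d > threshold]
    return appeared, disappeared


if __name__ == "__main__":
    t0 = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 5.0, 0.0)]
    t1 = [(0.0, 0.0, 0.05), (1.0, 0.0, 0.0), (2.0, 2.0, 3.0)]
    print(detect_changes(t0, t1, threshold=0.5))  # ([2], [2])
```

The threshold has to exceed the combined sensor noise and registration error; otherwise unchanged surfaces get flagged, which is especially likely at low densities such as the ~0.5 points/m² of urb3dcd-v2.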

- [Pointcept] Pointcept is a powerful and flexible codebase for point cloud perception research. (recommended)
- [mmdetection3d] MMDetection3D is an open-source object detection toolbox based on PyTorch, aiming to be the next-generation platform for general 3D detection. It is part of the OpenMMLab project developed by MMLab.
  - Support for multi-modality/single-modality detectors out of the box: it directly supports multi-modality/single-modality detectors including MVXNet, VoteNet, PointPillars, etc.
  - Support for indoor/outdoor 3D detection out of the box: it directly supports popular indoor and outdoor 3D detection datasets, including ScanNet, SUNRGB-D, Waymo, nuScenes, Lyft, and KITTI. For nuScenes, the nuImages dataset is also supported.
  - Natural integration with 2D detection: all 300+ models, methods from 40+ papers, and modules supported in MMDetection can be trained or used in this codebase.
  - High efficiency

- [Open3D] Open3D is an open-source library that supports rapid development of software that deals with 3D data. The Open3D frontend exposes a set of carefully selected data structures and algorithms in both C++ and Python. The backend is highly optimized and is set up for parallelization. Open3D was developed from a clean slate with a small and carefully considered set of dependencies. It can be set up on different platforms and compiled from source with minimal effort. The code is clean, consistently styled, and maintained via a clear code review mechanism. Open3D has been used in a number of published research projects and is actively deployed in the cloud. Core features:
  - Simple installation via conda and pip
  - 3D data structures
  - 3D data processing algorithms
  - Scene reconstruction
  - Surface alignment
  - PBR rendering
  - 3D visualization
  - Python binding

- [OpenPCDet] OpenPCDet is a clear, simple, self-contained open-source project for LiDAR-based 3D object detection. It is also the official code release of [PointRCNN], [Part-A2-Net], [PV-RCNN], [Voxel R-CNN], [PV-RCNN++] and [MPPNet].
- [Torch Points 3D] Torch Points 3D is a framework for developing and testing common deep learning models to solve tasks related to unstructured 3D spatial data, i.e., point clouds. The framework integrates some of the best published architectures and the most common public datasets for ease of reproducibility. It relies heavily on PyTorch Geometric and the Facebook Hydra library.

- [Learning3D] Learning3D is an open-source library that supports the development of deep learning algorithms that deal with 3D data. It exposes a set of state-of-the-art deep neural networks in Python, with modular code provided for further development. The project welcomes contributions from the open-source community.

- [CloudCompare] CloudCompare is a 3D point cloud (and triangular mesh) processing software. It was originally designed to compare two 3D point clouds (such as those obtained with a laser scanner) or a point cloud and a triangular mesh. It relies on an octree structure that is highly optimized for this particular use case. It was also designed to handle huge point clouds (typically more than 10 million points, and up to 120 million with 2 GB of memory).
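CloudCompare's description mentions an octree. As a rough illustration of how octree cells can be indexed, the sketch below computes a Morton (Z-order) code for the cell containing a point at a given subdivision level of a cubic bounding box. The function name `octree_cell_code` and its bounding-box parameters are assumptions for illustration; this makes no claim about CloudCompare's internal implementation.

```python
from typing import Tuple

Point = Tuple[float, float, float]


def octree_cell_code(p: Point, bbox_min: Point, bbox_size: float, level: int) -> int:
    """Morton (Z-order) code of the octree cell containing `p` (hypothetical helper).

    The cube [bbox_min, bbox_min + bbox_size] on each axis is split into
    2**level cells per axis. The integer cell coordinates are bit-interleaved
    (x in bit 0, y in bit 1, z in bit 2 of each 3-bit group), so the code of a
    cell's parent at level - 1 is simply code >> 3.
    """
    n = 1 << level  # number of cells per axis at this level
    coords = []
    for c, lo in zip(p, bbox_min):
        i = int((c - lo) / bbox_size * n)
        coords.append(min(max(i, 0), n - 1))  # clamp points on or outside the box faces
    code = 0
    for bit in range(level):
        for axis, i in enumerate(coords):
            code |= ((i >> bit) & 1) << (3 * bit + axis)
    return code


if __name__ == "__main__":
    origin = (0.0, 0.0, 0.0)
    child = octree_cell_code((0.9, 0.1, 0.6), origin, 1.0, level=2)
    parent = octree_cell_code((0.9, 0.1, 0.6), origin, 1.0, level=1)
    print(child, parent, child >> 3 == parent)  # 41 5 True
```

Because a parent's code is its child's code shifted right by three bits, points can be bucketed per cell at any level by sorting on the code once, which is what makes such codes convenient for neighbourhood queries on large clouds.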