Youmin Zhang · Matteo Poggi · Stefano Mattoccia

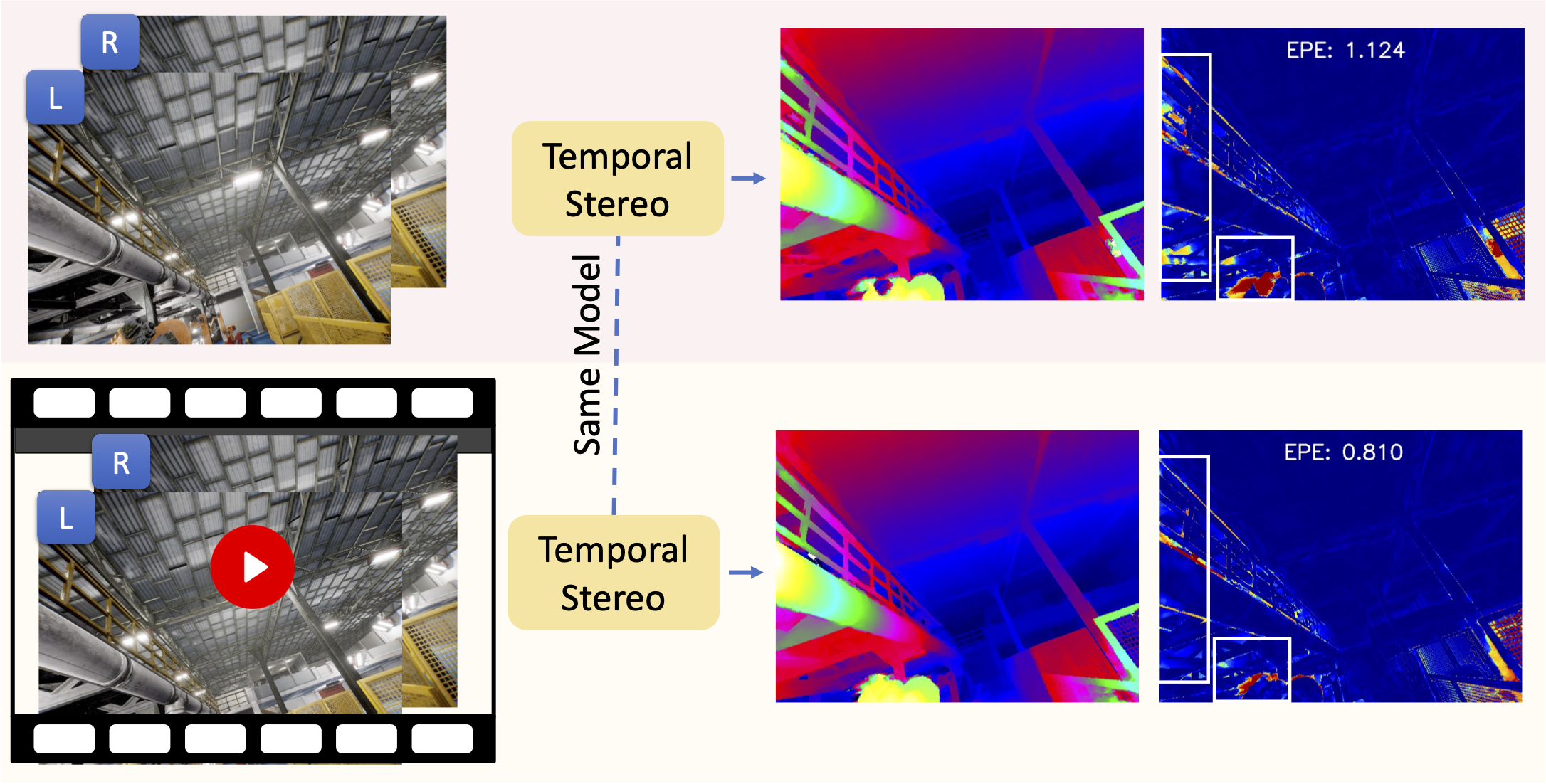

TemporalStereo Architecture, the first supervised stereo network based on video.

Currently, our codebase supports training on FlyingThings3D; the other parts will be released soon.

Pretrained checkpoints for various datasets are already available; please refer to the pretrained checkpoints section below.

Assuming a fresh Anaconda distribution, you can install the dependencies with:

conda create -n temporalstereo python=3.8

conda activate temporalstereo

We ran our experiments with PyTorch 1.10.1+, CUDA 11.3, Python 3.8 and Ubuntu 20.04.
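
As a quick sanity check of the environment, the following minimal sketch compares the installed PyTorch version against the one listed above. Treating 1.10.1 as a minimum is our reading of "1.10.1+", and the helper names `parse_version` and `meets_minimum` are hypothetical; they are not part of this codebase.

```python
import re

# Version listed in this README; treating it as a minimum is an assumption.
MIN_TORCH = (1, 10, 1)


def parse_version(text):
    """Turn a string such as '1.10.1+cu113' into a tuple of ints, (1, 10, 1)."""
    numbers = re.findall(r"\d+", text.split("+")[0])
    return tuple(int(n) for n in numbers[:3])


def meets_minimum(installed, minimum=MIN_TORCH):
    """Return True if the installed version is at least the minimum."""
    return parse_version(installed) >= minimum


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this environment")
    else:
        print("torch", torch.__version__, "ok:", meets_minimum(torch.__version__))
        print("CUDA build:", torch.version.cuda, "available:", torch.cuda.is_available())
```

Run it inside the activated `temporalstereo` environment; a CUDA build other than 11.3 may still work, but it is not the configuration reported here.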

We used NVIDIA Apex (commit @ 4ef930c1c884fdca5f472ab2ce7cb9b505d26c1a) for multi-GPU training.

Apex can be installed as follows:

$ cd PATH_TO_INSTALL

$ git clone https://github.com/NVIDIA/apex

$ cd apex

$ git reset --hard 4ef930c1c884fdca5f472ab2ce7cb9b505d26c1a

$ pip install -v --no-cache-dir --global-option="--cpp_ext" --global-option="--cuda_ext" ./

python -m pip install 'git+https://github.com/facebookresearch/detectron2.git'

# for cuda 11.3, refer to https://docs.cupy.dev/en/stable/install.html

pip install cupy-cuda113

pip install -r requirements.txt

We used three datasets for training and evaluation.

All annfiles (*.json) are available here.

The generation script is also provided there, so you can create the annfiles yourself.

The FlyingThings3D/SceneFlow dataset can be downloaded here.

After downloading, you should have the following directory structure:

FlyingThings3D
├── disparity
│   ├── TEST
│   └── TRAIN
└── frames_finalpass
    ├── TEST
    └── TRAIN
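
Before launching training, it can help to confirm that the download matches the tree above. The sketch below only checks for the four sub-directories shown there; the function name `missing_dirs` and the default root `FlyingThings3D` are illustrative assumptions, not part of this codebase.

```python
from pathlib import Path

# Sub-directories shown in the tree above.
EXPECTED = [
    "disparity/TEST",
    "disparity/TRAIN",
    "frames_finalpass/TEST",
    "frames_finalpass/TRAIN",
]


def missing_dirs(root):
    """Return the expected sub-directories that are absent under root."""
    root = Path(root)
    return [rel for rel in EXPECTED if not (root / rel).is_dir()]


if __name__ == "__main__":
    import sys

    # Pass the dataset root as the first argument, or rely on the default name.
    root = sys.argv[1] if len(sys.argv) > 1 else "FlyingThings3D"
    missing = missing_dirs(root)
    print("layout ok" if not missing else f"missing: {missing}")
```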

The processed KITTI 2012/2015 datasets and KITTI Raw sequences can be downloaded from Baidu Wangpan (password: iros) or Dropbox.

Note that for the KITTI Raw sequences, the links above contain only the pseudo labels; to download the raw images, you can refer to this for help.

For KITTI 2012 & 2015, we provide stereo image sequences, poses estimated by ORBSLAM3, and calibration files.

The processed TartanAir dataset can be downloaded here.

Note: the batch size is set per GPU.
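
Because the batch size is per GPU, the total number of samples per optimization step grows with the number of GPUs. The sketch below makes this arithmetic explicit, assuming standard data-parallel training without gradient accumulation; the function name and the numbers are hypothetical and not taken from the TemporalStereo configs.

```python
def effective_batch_size(per_gpu_batch, num_gpus):
    """Total samples per optimizer step when each GPU processes its own batch."""
    if per_gpu_batch < 1 or num_gpus < 1:
        raise ValueError("both values must be positive")
    return per_gpu_batch * num_gpus


if __name__ == "__main__":
    # Hypothetical numbers, not taken from the TemporalStereo configs.
    print(effective_batch_size(per_gpu_batch=2, num_gpus=4))  # 8
```

If you change the number of GPUs, you may want to adjust the per-GPU batch size so that the total stays comparable.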

$ cd THIS_PROJECT_ROOT/projects/TemporalStereo

# sceneflow

python dist_train.py --config-file ./configs/sceneflow.yaml

During training, tensorboard logs are saved under the experiments directory. To run tensorboard:

$ cd THIS_PROJECT_ROOT/

$ tensorboard --logdir=. --bind_all

Then you can access tensorboard via http://YOUR_SERVER_IP:6006

Pretrained checkpoints on various datasets are all available here.

$ cd THIS_PROJECT_ROOT/projects/TemporalStereo

# please remember to modify the parameters according to your case

# run a demo

./demo.sh

# submit to kitti

./submit.sh

# inference on a video

./video.sh

Thanks to the authors for their works:

If you find our work useful in your research, please consider citing our paper:

@inproceedings{Zhang2023TemporalStereo,
  title = {TemporalStereo: Efficient Spatial-Temporal Stereo Matching Network},
  author = {Zhang, Youmin and Poggi, Matteo and Mattoccia, Stefano},
  booktitle = {IROS},
  year = {2023}
}