@inproceedings{cai2022sc6d,

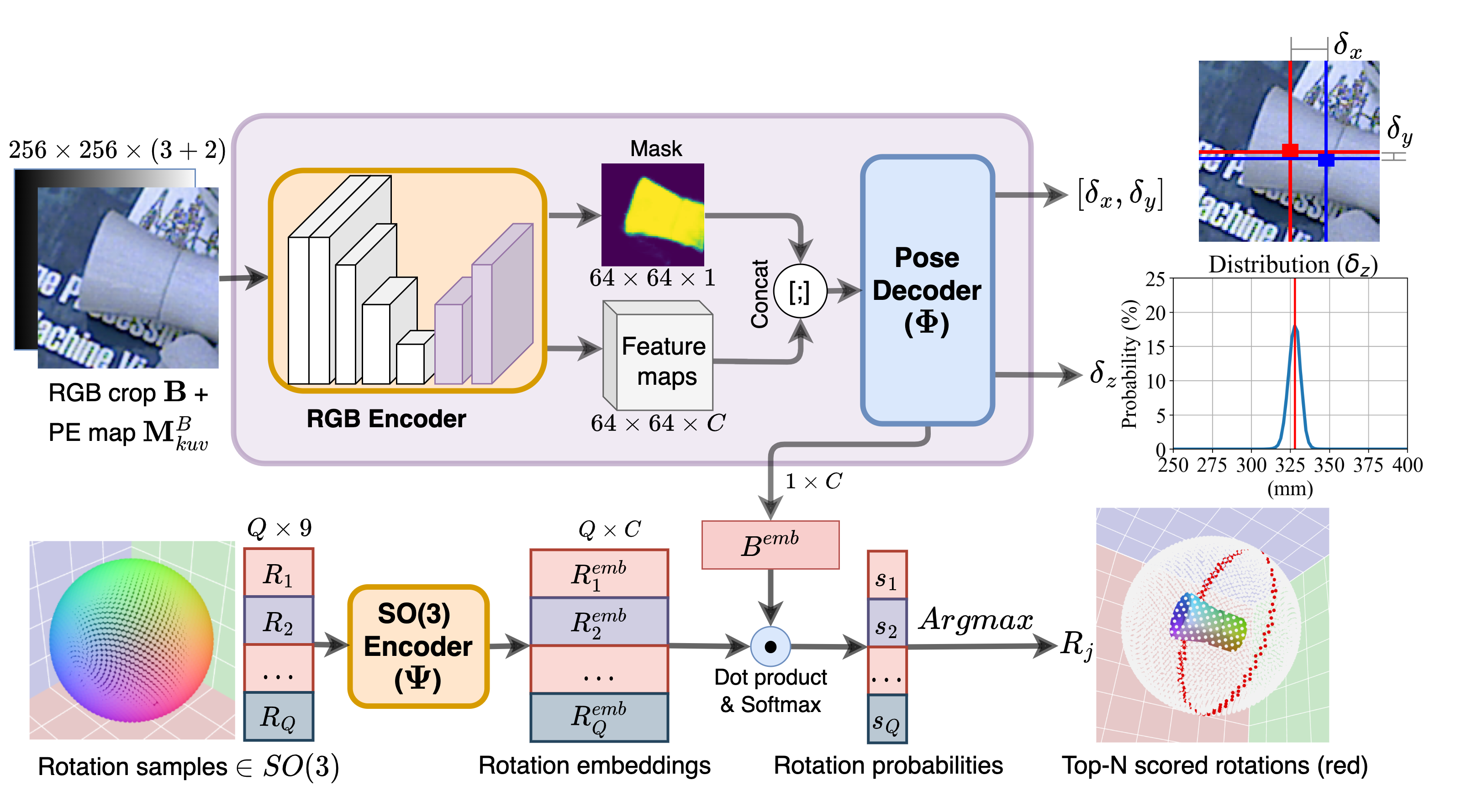

title={SC6D: Symmetry-agnostic and Correspondence-free 6D Object Pose Estimation},

author={Cai, Dingding and Heikkil{\"a}, Janne and Rahtu, Esa},

booktitle={2022 International Conference on 3D Vision (3DV)},

year={2022},

organization={IEEE}

}

Please start by installing Miniconda3 with Python 3.8 or above.

git clone https://github.com/dingdingcai/SC6D-pose.git

cd SC6D-pose

conda env create -f environment.yml

conda activate sc6d

pip install torch==1.8.0+cu111 torchvision==0.9.0+cu111 -f https://download.pytorch.org/whl/torch_stable.html

pip install "git+https://github.com/facebookresearch/pytorch3d.git"

python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu111/torch1.8/index.html

Our evaluation is conducted on three benchmark datasets, all downloaded from the BOP website. Store all three datasets in the same directory, e.g. BOP_Dataset/tless, BOP_Dataset/ycbv, BOP_Dataset/itodd, and set "DATASET_ROOT" (in config.py) to the BOP_Dataset directory.
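Before running anything, it can help to confirm that the dataset folders sit where "DATASET_ROOT" expects them. The snippet below is a minimal sketch, assuming DATASET_ROOT in config.py is a plain path string; the path value and the `missing_datasets` helper are hypothetical and not part of SC6D.

```python
from pathlib import Path

# Hypothetical value: use the same BOP_Dataset path you set in config.py.
DATASET_ROOT = "/path/to/BOP_Dataset"

# Sub-directory names from the example layout in this README.
EXPECTED_DATASETS = ("tless", "ycbv", "itodd")


def missing_datasets(root, names=EXPECTED_DATASETS):
    """Return the dataset folder names that do not exist under `root`."""
    root_path = Path(root)
    return [name for name in names if not (root_path / name).is_dir()]


if __name__ == "__main__":
    missing = missing_datasets(DATASET_ROOT)
    if missing:
        print("Missing under", DATASET_ROOT, ":", ", ".join(missing))
    else:
        print("All datasets found under", DATASET_ROOT)
```

An empty list means every expected dataset folder is present; it does not check the contents of each folder.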

This project requires the evaluation code from bop_toolkit.

The pre-trained models can be downloaded here. Save all models to the checkpoints directory, e.g. checkpoints/tless, checkpoints/ycbv, checkpoints/itodd.

Download the predicted detection results from the BOP Challenge 2022 and decompress them into the project root directory.

unzip bop22_default_detections_and_segmentations.zip

Evaluate the model trained using only PBR images:

python inference.py --dataset_name tless --gpu_id 0

Evaluate the model first trained on the PBR images and then fine-tuned on the combined Synt+Real images:

python inference.py --dataset_name tless --gpu_id 0 --eval_finetune
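To evaluate several datasets in one go, the commands above can be wrapped in a small driver script. This is a sketch only: it uses just the three flags shown in this README (`--dataset_name`, `--gpu_id`, `--eval_finetune`), assumes it is run from the repository root, and assumes a matching checkpoint exists for every dataset and mode you request. The helper names `build_command` and `run_all` are hypothetical.

```python
import subprocess
import sys

DATASETS = ("tless", "ycbv", "itodd")


def build_command(dataset_name, gpu_id=0, eval_finetune=False):
    """Assemble an inference.py call using only the flags shown in this README."""
    cmd = [sys.executable, "inference.py",
           "--dataset_name", dataset_name,
           "--gpu_id", str(gpu_id)]
    if eval_finetune:
        # Selects the PBR-trained, Synt+Real fine-tuned model.
        cmd.append("--eval_finetune")
    return cmd


def run_all(datasets=DATASETS, gpu_id=0, eval_finetune=False):
    """Run inference.py once per dataset; raise on the first non-zero exit code."""
    for name in datasets:
        subprocess.run(build_command(name, gpu_id, eval_finetune), check=True)
```

For example, `run_all(("tless", "ycbv"), gpu_id=0, eval_finetune=True)` runs the fine-tuned evaluation on T-LESS and then YCB-V. Running the datasets sequentially keeps GPU memory usage the same as a single manual run.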

To train SC6D, download the VOC2012 dataset and set "VOC_BG_ROOT" (in config.py) to the VOC2012 directory.
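A quick sanity check on "VOC_BG_ROOT" can catch a wrong path before a long training run starts. The sketch below assumes the standard VOC2012 release layout, where images live in a `JPEGImages` sub-folder; whether SC6D reads backgrounds from exactly that folder is an assumption, and the path value and `count_background_images` helper are hypothetical.

```python
from pathlib import Path

# Hypothetical value: use the same VOC2012 path you set in config.py.
VOC_BG_ROOT = "/path/to/VOC2012"


def count_background_images(voc_root, subdir="JPEGImages"):
    """Count .jpg files in the (assumed) VOC2012 image folder; 0 if it is missing."""
    image_dir = Path(voc_root) / subdir
    if not image_dir.is_dir():
        return 0
    return sum(1 for p in image_dir.iterdir()
               if p.is_file() and p.suffix.lower() == ".jpg")
```

A result of 0 suggests the path points at the wrong level of the extracted archive.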

bash training.sh  # change the "NAME" variable for a new dataset


- The evaluation code is based on bop_toolkit.