This is the official implementation (preview version) of *Dancing with Still Images: Video Distillation via Static-Dynamic Disentanglement* (CVPR 2024).

Ziyu Wang *, Yue Xu *, Cewu Lu and Yong-Lu Li

\* Equal contribution

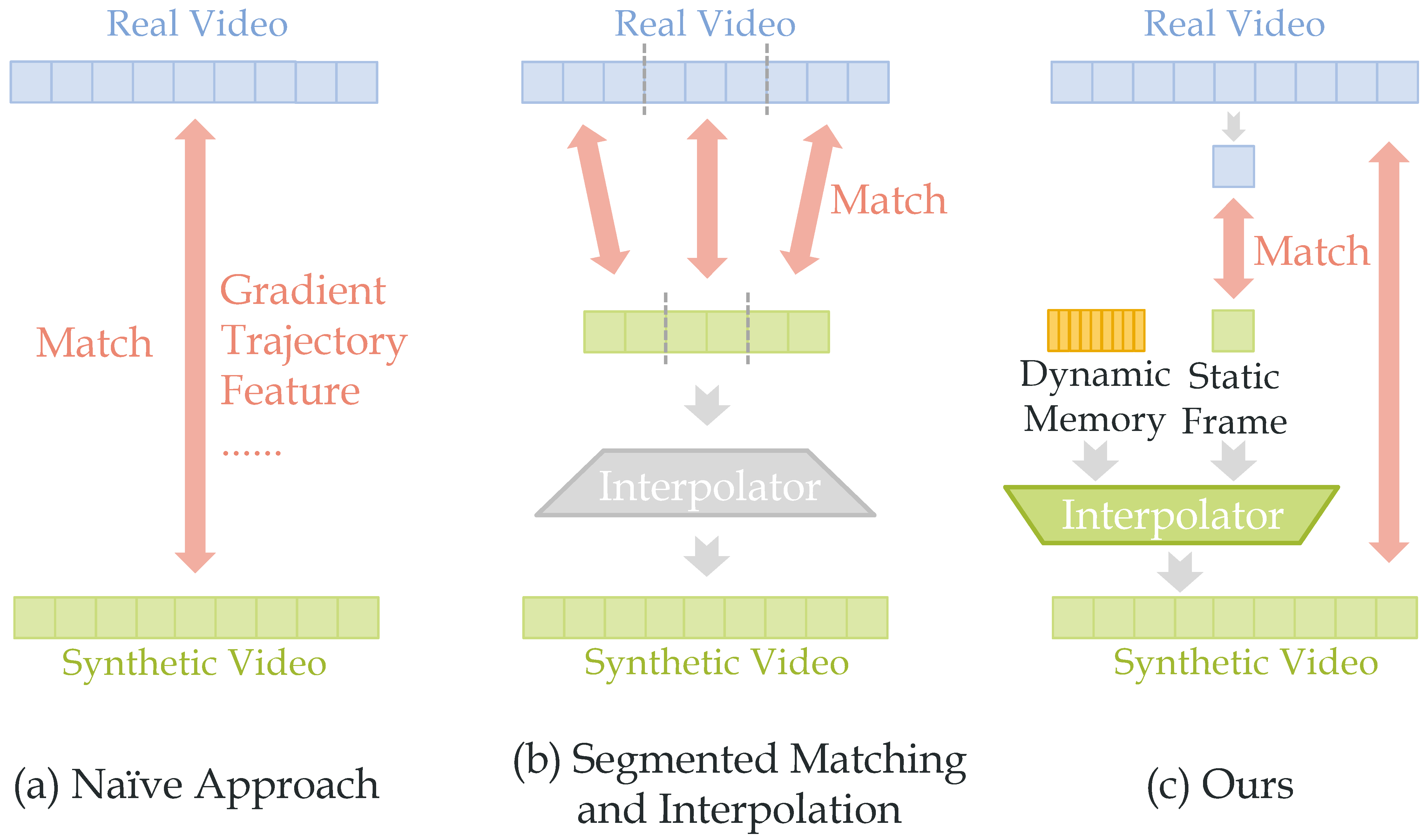

In this work, we provide the first systematic study of video distillation and introduce a taxonomy to categorize temporal compression. We then propose a framework that first distills videos into still images (static memory) and then compensates for dynamic and motion information with a learnable dynamic memory block.
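To make the static/dynamic split concrete, here is a minimal NumPy sketch under a simplifying assumption: each synthetic video is a still image repeated over T frames plus an additive per-frame residual that stands in for the dynamic memory. The function name `compose_video` and the additive combination are hypothetical. The repository may combine the two memories differently (for example, with a learned network).

```python
import numpy as np


def compose_video(static_images: np.ndarray, dynamic_memory: np.ndarray) -> np.ndarray:
    """Combine still images (static memory) with per-frame residuals.

    static_images:  (N, C, H, W), one still image per synthetic video.
    dynamic_memory: (N, T, C, H, W), hypothetical additive motion residuals.
    Returns synthetic videos of shape (N, T, C, H, W).
    """
    expected = dynamic_memory.shape[:1] + dynamic_memory.shape[2:]
    if static_images.shape != expected:
        raise ValueError("static and dynamic memory shapes are incompatible")
    # Insert a time axis: (N, 1, C, H, W) broadcasts across all T frames.
    static_video = static_images[:, None]
    # Every frame shares the static content and differs only by its residual.
    return static_video + dynamic_memory


rng = np.random.default_rng(0)
static = rng.standard_normal((2, 3, 8, 8))
dynamic = np.zeros((2, 4, 3, 8, 8))
videos = compose_video(static, dynamic)
print(videos.shape)  # (2, 4, 3, 8, 8)
```

With a zero dynamic memory every frame equals its still image, which is the purely static case; a learned, non-zero residual is what reintroduces motion.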


This is a preview version of our code: only the main experiments have been uploaded and validated. We will release the full code as soon as possible. If you have any questions, please contact me (wangxiaoyi2021@sjtu.edu.cn).

TODO:

- Upload code for preprocessing datasets.

- Update code for large-scale datasets.

- Upload code for FRePo/FRePo+Ours.

Our method is a plug-and-play module.

- Clone our repo.

```bash
git clone git@github.com:yuz1wan/video_distillation.git
cd video_distillation
```

- Prepare video datasets.

For convenience, we store the video datasets as frames. For UCF101 and HMDB51, we use the RGB frames provided in the twostreamfusion repository and resize them. For Kinetics-400 and Something-Something V2, we extract frames with the code in `extract_frames/`; you can adjust its parameters to extract frames of different sizes and numbers.
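As an illustration of the frame-count parameter, the helper below picks evenly spaced frame indices from a clip. It is a hypothetical sketch, not the code in `extract_frames/`: the name `sample_frame_indices`, the centered-segment sampling, and the rule of padding short clips by repeating the last frame are all assumptions.

```python
def sample_frame_indices(num_total: int, num_frames: int) -> list[int]:
    """Pick `num_frames` evenly spaced indices from a clip of `num_total` frames."""
    if num_total <= 0 or num_frames <= 0:
        raise ValueError("both counts must be positive")
    if num_frames >= num_total:
        # Short clip: keep every frame, then pad by repeating the last one.
        return list(range(num_total)) + [num_total - 1] * (num_frames - num_total)
    # Split the clip into equal segments and take the center of each one.
    step = num_total / num_frames
    return [int(step * i + step / 2) for i in range(num_frames)]


print(sample_frame_indices(100, 8))  # [6, 18, 31, 43, 56, 68, 81, 93]
```

Centering each sample in its segment avoids always starting at frame 0, so the selected frames cover the clip symmetrically.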

```
distill_utils
├── data
│   ├── HMDB51
│   │   ├── hmdb51_splits.csv
│   │   └── jpegs_112
│   ├── Kinetics
│   │   ├── broken_videos.txt
│   │   ├── replacement
│   │   ├── short_videos.txt
│   │   ├── test
│   │   ├── test.csv
│   │   ├── train
│   │   ├── train.csv
│   │   ├── val
│   │   └── validate.csv
│   ├── SSv2
│   │   ├── frame
│   │   ├── annot_train.json
│   │   ├── annot_val.json
│   │   └── class_list.json
│   └── UCF101
│       ├── jpegs_112
│       │   ├── v_ApplyEyeMakeup_g01_c01
│       │   ├── v_ApplyEyeMakeup_g01_c02
│       │   ├── v_ApplyEyeMakeup_g01_c03
│       │   └── ...
│       ├── UCF101actions.pkl
│       ├── ucf101_splits1.csv
│       └── ucf50_splits1.csv
└── ...
```
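The UCF101 frame folders follow the dataset's `v_<Action>_g<group>_c<clip>` naming. The sketch below shows one way to recover the action label from a folder name and pick a single still frame from it, which is the kind of single-frame input used for static learning. It assumes zero-padded frame filenames (e.g. `frame000002.jpg`) that sort in temporal order; `parse_ucf_clip`, `pick_static_frame`, and the filename pattern are hypothetical and are not the loaders in `utils.py` or `distill_utils/dataset.py`.

```python
import re
import tempfile
from pathlib import Path
from typing import NamedTuple


class UCFClip(NamedTuple):
    action: str
    group: int
    clip: int


# UCF101 clip folders look like v_ApplyEyeMakeup_g01_c01.
_UCF_PATTERN = re.compile(r"^v_(?P<action>.+)_g(?P<group>\d+)_c(?P<clip>\d+)$")


def parse_ucf_clip(dirname: str) -> UCFClip:
    """Split a UCF101 clip folder name into action label, group id, and clip id."""
    match = _UCF_PATTERN.match(dirname)
    if match is None:
        raise ValueError(f"not a UCF101 clip name: {dirname!r}")
    return UCFClip(match["action"], int(match["group"]), int(match["clip"]))


def pick_static_frame(clip_dir: Path) -> Path:
    """Return the middle frame of a clip folder (assumes zero-padded names)."""
    frames = sorted(
        p for p in clip_dir.iterdir() if p.suffix.lower() in {".jpg", ".jpeg", ".png"}
    )
    if not frames:
        raise FileNotFoundError(f"no frames in {clip_dir}")
    return frames[len(frames) // 2]


info = parse_ucf_clip("v_ApplyEyeMakeup_g01_c01")
print(info)  # UCFClip(action='ApplyEyeMakeup', group=1, clip=1)

# Build a throwaway clip folder with five dummy frames to exercise the picker.
with tempfile.TemporaryDirectory() as tmp:
    clip_dir = Path(tmp) / "v_ApplyEyeMakeup_g01_c01"
    clip_dir.mkdir()
    for i in range(5):
        (clip_dir / f"frame{i:06d}.jpg").touch()
    still = pick_static_frame(clip_dir).name
print(still)  # frame000002.jpg
```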

- Static Learning.

We use DC (Dataset Condensation) for static learning. The DC code is available in its official repository (VICO-UoE/DatasetCondensation), and we provide code for loading single-frame data in `utils.py` and `distill_utils/dataset.py`. Alternatively, you can use the static memory we trained.

- Dynamic Fine-tuning.

All parameters used in our experiments are documented in the supplementary material. For DM/DM+Ours:

```bash
cd sh/baseline
# bash DM.sh GPU_num Dataset Learning_rate IPC
bash DM.sh 0 miniUCF101 100 1

# for DM+Ours
cd ../s2d
# for ipc=1
bash s2d_DM_ms.sh 0,1,2,3 miniUCF101 1e-4 1e-5
# for ipc=5
bash s2d_DM_ms_5.sh 0,1,2,3 miniUCF101 1e3 1e-6
```
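For reference, the DM (Distribution Matching) baseline learns synthetic data by matching the mean feature embeddings of real and synthetic samples of the same class under randomly initialized networks. The NumPy sketch below evaluates that objective for one class with a random linear + ReLU embedding on flattened clips. It is a simplified illustration, not the code behind `DM.sh`; `random_embedder`, `dm_loss`, and the tensor sizes are hypothetical.

```python
import numpy as np


def random_embedder(in_dim: int, out_dim: int, rng: np.random.Generator):
    """A randomly initialized linear + ReLU feature extractor (never trained)."""
    w = rng.standard_normal((in_dim, out_dim)) / np.sqrt(in_dim)
    return lambda x: np.maximum(x @ w, 0.0)


def dm_loss(real: np.ndarray, syn: np.ndarray, embed) -> float:
    """Squared distance between mean embeddings of real and synthetic samples.

    real: (n_real, d) and syn: (n_syn, d) are flattened clips of one class.
    """
    diff = embed(real).mean(axis=0) - embed(syn).mean(axis=0)
    return float(np.sum(diff ** 2))


rng = np.random.default_rng(0)
dim = 3 * 4 * 8 * 8                       # C x T x H x W, flattened
real = rng.standard_normal((64, dim))     # real clips of one class
syn = rng.standard_normal((1, dim))       # IPC = 1 synthetic clip
embed = random_embedder(dim, 32, rng)
print(dm_loss(real, syn, embed))
print(dm_loss(real, real, embed))  # 0.0
```

In DM a fresh embedding network is sampled at each iteration and the gradient of this loss updates the synthetic data; the sketch only evaluates the loss.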

For MTT/MTT+Ours, you first need to train the expert trajectories with `buffer.py` (see MTT).

```bash
cd sh/baseline
# bash buffer.sh GPU_num Dataset
bash buffer.sh 0 miniUCF101
# bash MTT.sh GPU_num Dataset Learning_rate IPC
bash MTT.sh 0 miniUCF101 1e5 1

# for MTT+Ours
cd ../s2d
# for ipc=1
bash s2d_MTT_ms.sh 0,1,2,3 miniUCF101 1e4 1e-3
# for ipc=5
bash s2d_MTT_ms_5.sh 0,1,2,3 miniUCF101 1e4 1e-3
```
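The two MTT stages above (recording expert trajectories, then matching them) can be illustrated on a toy linear-regression problem. In MTT, a student starts from an expert checkpoint θ*ₜ, trains for a few steps on the synthetic data, and is penalized by ‖θ̂ − θ*ₜ₊ₘ‖² / ‖θ*ₜ − θ*ₜ₊ₘ‖². The NumPy sketch below is an illustration under that setup, not the code in `buffer.py` or `MTT.sh`; all function and parameter names are hypothetical.

```python
import numpy as np


def gd_steps(theta, x, y, lr, steps):
    """Full-batch gradient descent on mean squared error for y ~ x @ theta."""
    for _ in range(steps):
        grad = 2.0 * x.T @ (x @ theta - y) / len(x)
        theta = theta - lr * grad
    return theta


def record_expert_trajectory(x, y, lr, epochs, rng):
    """Save a parameter snapshot after every epoch (the role of a trajectory buffer)."""
    theta = 0.1 * rng.standard_normal(x.shape[1])
    trajectory = [theta.copy()]
    for _ in range(epochs):
        theta = gd_steps(theta, x, y, lr, steps=1)
        trajectory.append(theta.copy())
    return trajectory


def trajectory_matching_loss(trajectory, start, expert_epochs, syn_x, syn_y, syn_lr, syn_steps):
    """Normalized distance between a student trained on synthetic data and the expert target."""
    theta_start = trajectory[start]
    theta_target = trajectory[start + expert_epochs]
    theta_student = gd_steps(theta_start, syn_x, syn_y, syn_lr, syn_steps)
    num = np.sum((theta_student - theta_target) ** 2)
    den = np.sum((theta_start - theta_target) ** 2)
    return float(num / den)


rng = np.random.default_rng(0)
x = rng.standard_normal((256, 5))
y = x @ rng.standard_normal(5)
trajectory = record_expert_trajectory(x, y, lr=0.05, epochs=20, rng=rng)

# A tiny stand-in "synthetic set": 10 points taken from the real data.
syn_x, syn_y = x[:10], y[:10]
loss = trajectory_matching_loss(trajectory, 2, 3, syn_x, syn_y, syn_lr=0.05, syn_steps=10)
# Sanity check: replaying the expert's own data and schedule lands on the target.
loss_real = trajectory_matching_loss(trajectory, 2, 3, x, y, syn_lr=0.05, syn_steps=3)
print(loss, loss_real)
```

The ratio is 1.0 when the student does not move (`syn_steps=0`) and 0.0 when it lands exactly on the later expert checkpoint. Distillation minimizes it with respect to the synthetic data (and, in MTT, a learnable student learning rate).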

This work builds upon code from the following repositories:

- VICO-UoE/DatasetCondensation

- GeorgeCazenavette/mtt-distillation

- yongchao97/FRePo

- Huage001/DatasetFactorization

We also thank the Awesome project.