"Computer Vision in the Wild (CVinW)" is an emerging research field. This writeup provides a quick introduction to CVinW and maintains a collection of papers on the topic. If you find missing papers or resources, please open an issue or a pull request (recommended).

- What is Computer Vision in the Wild (CVinW)?

- Papers on Task-level Transfer with Pre-trained Models

- Papers on Efficient Model Adaptation

- Papers on Out-of-domain Generalization

- Acknowledgements

The goal of CVinW is to develop a transferable foundation model/system that can effortlessly adapt to a large range of visual tasks in the wild. It comes with two key factors: (i) the task transfer scenarios are broad, and (ii) the task transfer cost is low. The main idea is illustrated below; please see the ELEVATER paper for a detailed description.

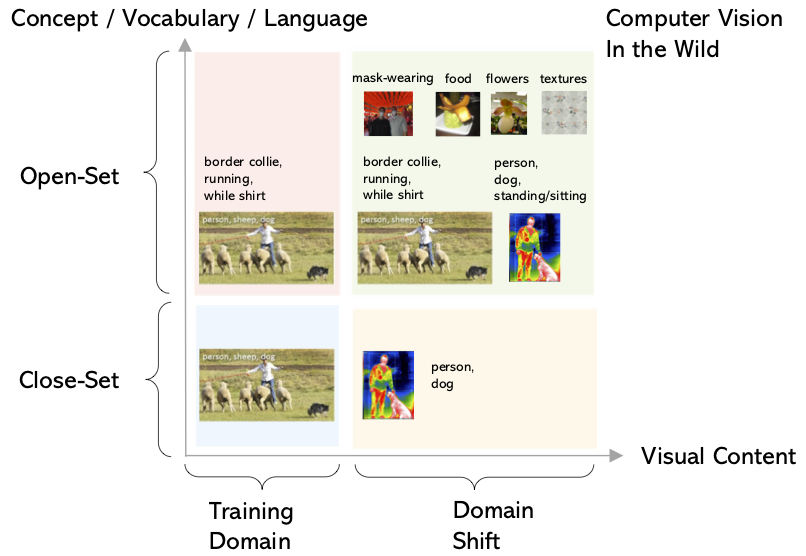

We illustrate and compare CVinW with other settings using a 2D chart in Figure 1, where the space is constructed with two orthogonal dimensions: input image distribution and output concept set. The 2D chart is divided into four quadrants, based on how the model evaluation stage differs from the model development stage. For any visual recognition problem at any granularity, such as image classification, object detection and segmentation, the modeling setup can be categorized into one of the four settings. We see an emerging trend of moving towards CVinW. Interested in the various pre-trained vision models that move towards CVinW? Please check out Section 🔥"Papers on Task-level Transfer with Pre-trained Models".

| A brief definition with a four-quadrant chart | Figure 1: The comparison of CVinW with other existing settings |
|---|---|
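The four-quadrant view can be made concrete with a small sketch. It assumes each evaluation setting is described by two yes/no answers, one per axis of the chart. The class name, field names, and quadrant labels below are illustrative assumptions, not names taken from the figure or from the ELEVATER paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalSetting:
    """How the evaluation stage differs from the development stage (hypothetical type)."""
    new_image_distribution: bool  # evaluation images come from a different distribution
    new_concept_set: bool         # evaluation concepts were not all seen during development


def quadrant(setting: EvalSetting) -> str:
    """Map a setting to one of four quadrants. The labels are illustrative only."""
    if setting.new_image_distribution and setting.new_concept_set:
        return "computer vision in the wild"
    if setting.new_image_distribution:
        return "out-of-domain generalization"
    if setting.new_concept_set:
        return "open-set recognition"
    return "closed-set recognition"


if __name__ == "__main__":
    # Enumerate all four combinations of the two axes.
    for images in (False, True):
        for concepts in (False, True):
            s = EvalSetting(images, concepts)
            print(s, "->", quadrant(s))
```

Only the setting in which both the image distribution and the concept set change at evaluation time lands in the CVinW quadrant; the other three change at most one axis.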

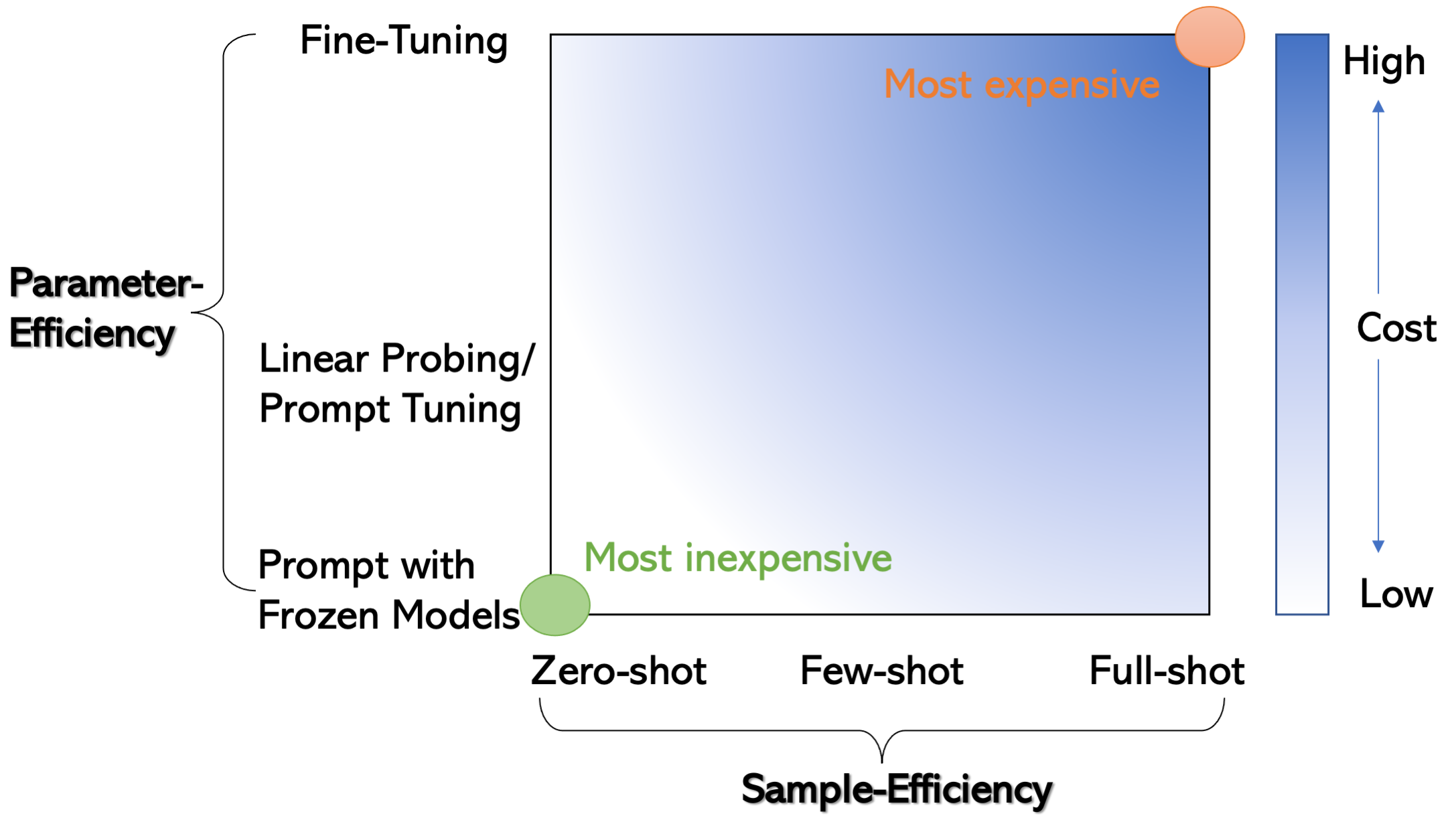

One major advantage of pre-trained models is the promise that they can transfer to downstream tasks effortlessly. The model adaptation cost is considered along two orthogonal dimensions: sample-efficiency and parameter-efficiency, as illustrated in Figure 2. The bottom-left and top-right corners are the least and most expensive adaptation strategies, respectively. One may interpolate and combine points in this 2D space to obtain model adaptation methods with different costs. To efficiently adapt large vision models of gradually increasing size, we see an emerging need for efficient model adaptation. Interested in contributing your efficient adaptation algorithm and seeing how it differs from existing papers? Please check out Section ❄️"Papers on Efficient Model Adaptation".
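As a rough illustration of the two cost axes, the sketch below places a few adaptation strategies in the 2D space by normalizing the number of labeled samples and the number of trainable parameters. All numbers (backbone size, head size, shots per class) and the strategy names are hypothetical placeholders chosen for the example, not values from Figure 2 or from any benchmark.

```python
from dataclasses import dataclass

# Hypothetical model and data sizes, for illustration only.
BACKBONE_PARAMS = 86_000_000          # assumed size of a frozen or fine-tuned backbone
HEAD_PARAMS = 768 * 100 + 100         # assumed linear head: 768-d features, 100 classes
TOTAL_PARAMS = BACKBONE_PARAMS + HEAD_PARAMS
FULL_SHOTS = 500                      # assumed labeled samples per class in the full-shot case


@dataclass(frozen=True)
class Adaptation:
    name: str
    shots_per_class: int   # labeled samples per class used for adaptation
    trainable_params: int  # parameters updated during adaptation


STRATEGIES = [
    Adaptation("zero-shot", 0, 0),
    Adaptation("few-shot linear probing", 5, HEAD_PARAMS),
    Adaptation("full-shot linear probing", FULL_SHOTS, HEAD_PARAMS),
    Adaptation("few-shot full fine-tuning", 5, TOTAL_PARAMS),
    Adaptation("full-shot full fine-tuning", FULL_SHOTS, TOTAL_PARAMS),
]


def cost(a: Adaptation) -> tuple[float, float]:
    """Return (sample cost, parameter cost), each normalized to [0, 1]."""
    return (a.shots_per_class / FULL_SHOTS, a.trainable_params / TOTAL_PARAMS)


if __name__ == "__main__":
    for a in STRATEGIES:
        sample_cost, param_cost = cost(a)
        print(f"{a.name:28s} samples={sample_cost:.3f} params={param_cost:.5f}")
```

Under these assumptions, zero-shot transfer sits at the cheapest corner (no samples, no trainable parameters), full-shot full fine-tuning sits at the most expensive corner, and the other strategies fall in between.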

ELEVATER: A Benchmark and Toolkit for Evaluating Language-Augmented Visual Models.

Chunyuan Li*, Haotian Liu*, Liunian Harold Li, Pengchuan Zhang, Jyoti Aneja, Jianwei Yang, Ping Jin, Houdong Hu, Zicheng Liu, Yong Jae Lee, Jianfeng Gao.

NeurIPS 2022 (Datasets and Benchmarks Track).

[paper] [benchmark]

- [02/2023] Organizing the 2nd Workshop @ CVPR2023 on Computer Vision in the Wild (CVinW), where two new challenges are hosted to evaluate the zero-shot, few-shot and full-shot performance of pre-trained vision models in downstream tasks:

  - "Segmentation in the Wild (SGinW)" Challenge evaluates on 25 image segmentation tasks.

  - "Roboflow 100 for Object Detection in the Wild" Challenge evaluates on 100 object detection tasks.

  [Workshop] [SGinW Challenge] [RF100 Challenge]

- [09/2022] Organizing ECCV Workshop Computer Vision in the Wild (CVinW), where two challenges are hosted to evaluate the zero-shot, few-shot and full-shot performance of pre-trained vision models in downstream tasks:

  - "Image Classification in the Wild (ICinW)" Challenge evaluates on 20 image classification tasks.

  - "Object Detection in the Wild (ODinW)" Challenge evaluates on 35 object detection tasks.

  [Workshop] [ICinW Challenge] [ODinW Challenge]

[CLIP] Learning Transferable Visual Models From Natural Language Supervision.

Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, Ilya Sutskever.

ICML 2021.

[paper] [code]

[ALIGN] Scaling Up Visual and Vision-Language Representation Learning With Noisy Text Supervision.

Chao Jia, Yinfei Yang, Ye Xia, Yi-Ting Chen, Zarana Parekh, Hieu Pham, Quoc V. Le, Yunhsuan Sung, Zhen Li, Tom Duerig.

ICML 2021.

[paper]

OpenCLIP.

Gabriel Ilharco*, Mitchell Wortsman*, Nicholas Carlini, Rohan Taori, Achal Dave, Vaishaal Shankar, John Miller, Hongseok Namkoong, Hannaneh Hajishirzi, Ali Farhadi, Ludwig Schmidt.

10.5281/zenodo.5143773, 2021.

[code]

Florence: A New Foundation Model for Computer Vision.

Lu Yuan, Dongdong Chen, Yi-Ling Chen, Noel Codella, Xiyang Dai, Jianfeng Gao, Houdong Hu, Xuedong Huang, Boxin Li, Chunyuan Li, Ce Liu, Mengchen Liu, Zicheng Liu, Yumao Lu, Yu Shi, Lijuan Wang, Jianfeng Wang, Bin Xiao, Zhen Xiao, Jianwei Yang, Michael Zeng, Luowei Zhou, Pengchuan Zhang.

arXiv:2111.11432, 2022.

[paper]

[UniCL] Unified Contrastive Learning in Image-Text-Label Space.

Jianwei Yang*, Chunyuan Li*, Pengchuan Zhang*, Bin Xiao*, Ce Liu, Lu Yuan, Jianfeng Gao.

CVPR 2022.

[paper] [code]

LiT: Zero-Shot Transfer with Locked-image text Tuning.

Xiaohua Zhai, Xiao Wang, Basil Mustafa, Andreas Steiner, Daniel Keysers, Alexander Kolesnikov, Lucas Beyer.

CVPR 2022.

[paper]

[DeCLIP] Supervision Exists Everywhere: A Data Efficient Contrastive Language-Image Pre-training Paradigm.

Yangguang Li, Feng Liang, Lichen Zhao, Yufeng Cui, Wanli Ouyang, Jing Shao, Fengwei Yu, Junjie Yan.

ICLR 2022.

[paper] [code]

FILIP: Fine-grained Interactive Language-Image Pre-Training.

Lewei Yao, Runhui Huang, Lu Hou, Guansong Lu, Minzhe Niu, Hang Xu, Xiaodan Liang, Zhenguo Li, Xin Jiang, Chunjing Xu.

ICLR 2022.

[paper]

SLIP: Self-supervision meets Language-Image Pre-training.

Norman Mu, Alexander Kirillov, David Wagner, Saining Xie.

ECCV 2022.

[paper] [code]

[MS-CLIP] Learning Visual Representation from Modality-Shared Contrastive Language-Image Pre-training.

Haoxuan You*, Luowei Zhou*, Bin Xiao*, Noel Codella*, Yu Cheng, Ruochen Xu, Shih-Fu Chang, Lu Yuan.

ECCV 2022.

[paper] [code]

MultiMAE: Multi-modal Multi-task Masked Autoencoders.

Roman Bachmann, David Mizrahi, Andrei Atanov, Amir Zamir.

ECCV 2022.

[paper] [code] [project]

[M3AE] Multimodal Masked Autoencoders Learn Transferable Representations.

Xinyang Geng, Hao Liu, Lisa Lee, Dale Schuurmans, Sergey Levine, Pieter Abbeel.

arXiv:2205.14204, 2022.

[paper] [code]

CyCLIP: Cyclic Contrastive Language-Image Pretraining.

Shashank Goel, Hritik Bansal, Sumit Bhatia, Ryan A. Rossi, Vishwa Vinay, Aditya Grover.

NeurIPS 2022.

[paper] [code]

PyramidCLIP: Hierarchical Feature Alignment for Vision-language Model Pretraining.

Yuting Gao, Jinfeng Liu, Zihan Xu, Jun Zhang, Ke Li, Rongrong Ji, Chunhua Shen.

NeurIPS 2022.

[paper]

K-LITE: Learning Transferable Visual Models with External Knowledge.

Sheng Shen*, Chunyuan Li*, Xiaowei Hu*, Yujia Xie, Jianwei Yang, Pengchuan Zhang, Anna Rohrbach, Zhe Gan, Lijuan Wang, Lu Yuan, Ce Liu, Kurt Keutzer, Trevor Darrell, Jianfeng Gao.

NeurIPS 2022.

[paper]

UniCLIP: Unified Framework for Contrastive Language-Image Pre-training.

Janghyeon Lee, Jongsuk Kim, Hyounguk Shon, Bumsoo Kim, Seung Hwan Kim, Honglak Lee, Junmo Kim.

NeurIPS 2022.

[paper]

CoCa: Contrastive Captioners are Image-Text Foundation Models.

Jiahui Yu, Zirui Wang, Vijay Vasudevan, Legg Yeung, Mojtaba Seyedhosseini, Yonghui Wu.

arXiv:2205.01917, 2022.

[paper]

Prefix Conditioning Unifies Language and Label Supervision.

Kuniaki Saito, Kihyuk Sohn, Xiang Zhang, Chun-Liang Li, Chen-Yu Lee, Kate Saenko, Tomas Pfister.

arXiv:2206.01125, 2022.

[paper]

MaskCLIP: Masked Self-Distillation Advances Contrastive Language-Image Pretraining.

Xiaoyi Dong, Yinglin Zheng, Jianmin Bao, Ting Zhang, Dongdong Chen, Hao Yang, Ming Zeng, Weiming Zhang, Lu Yuan, Dong Chen, Fang Wen, Nenghai Yu.

arXiv:2208.12262, 2022.

[paper]

[BASIC] Combined Scaling for Open-Vocabulary Image Classification.

Hieu Pham, Zihang Dai, Golnaz Ghiasi, Kenji Kawaguchi, Hanxiao Liu, Adams Wei Yu, Jiahui Yu, Yi-Ting Chen, Minh-Thang Luong, Yonghui Wu, Mingxing Tan, Quoc V. Le.

arXiv:2111.10050, 2022.

[paper]

[LIMoE] Multimodal Contrastive Learning with LIMoE: the Language-Image Mixture of Experts.

Basil Mustafa, Carlos Riquelme, Joan Puigcerver, Rodolphe Jenatton, Neil Houlsby.

arXiv:2206.02770, 2022.

[paper]

MURAL: Multimodal, Multitask Retrieval Across Languages.

Aashi Jain, Mandy Guo, Krishna Srinivasan, Ting Chen, Sneha Kudugunta, Chao Jia, Yinfei Yang, Jason Baldridge.

arXiv:2109.05125, 2022.

[paper]

PaLI: A Jointly-Scaled Multilingual Language-Image Model.

Xi Chen, Xiao Wang, Soravit Changpinyo, AJ Piergiovanni, Piotr Padlewski, Daniel Salz, Sebastian Goodman, Adam Grycner, Basil Mustafa, Lucas Beyer, Alexander Kolesnikov, Joan Puigcerver, Nan Ding, Keran Rong, Hassan Akbari, Gaurav Mishra, Linting Xue, Ashish Thapliyal, James Bradbury, Weicheng Kuo, Mojtaba Seyedhosseini, Chao Jia, Burcu Karagol Ayan, Carlos Riquelme, Andreas Steiner, Anelia Angelova, Xiaohua Zhai, Neil Houlsby, Radu Soricut.

arXiv:2209.06794, 2022.

[paper]

Chinese CLIP: Contrastive Vision-Language Pretraining in Chinese.

An Yang, Junshu Pan, Junyang Lin, Rui Men, Yichang Zhang, Jingren Zhou, Chang Zhou.

arXiv:2211.01335, 2022.

[paper] [project]

[FLIP] Scaling Language-Image Pre-training via Masking.

Yanghao Li, Haoqi Fan, Ronghang Hu, Christoph Feichtenhofer, Kaiming He.

arXiv:2212.00794, 2022.

[paper]

Self Supervision Does Not Help Natural Language Supervision at Scale.

Floris Weers, Vaishaal Shankar, Angelos Katharopoulos, Yinfei Yang, Tom Gunter.

arXiv:2301.07836, 2023.

[paper]

STAIR: Learning Sparse Text and Image Representation in Grounded Tokens.

Chen Chen, Bowen Zhang, Liangliang Cao, Jiguang Shen, Tom Gunter, Albin Madappally Jose, Alexander Toshev, Jonathon Shlens, Ruoming Pang, Yinfei Yang.

arXiv:2301.13081, 2023.

[paper]

[REACT] Learning Customized Visual Models with Retrieval-Augmented Knowledge.

Haotian Liu, Kilho Son, Jianwei Yang, Ce Liu, Jianfeng Gao, Yong Jae Lee, Chunyuan Li.

CVPR, 2023.

[paper] [project] [code]

Open-Vocabulary Object Detection Using Captions.

Alireza Zareian, Kevin Dela Rosa, Derek Hao Hu, Shih-Fu Chang.

CVPR 2021.

[paper]

MDETR: Modulated Detection for End-to-End Multi-Modal Understanding.

Aishwarya Kamath, Mannat Singh, Yann LeCun, Gabriel Synnaeve, Ishan Misra, Nicolas Carion.

ICCV 2021.

[paper] [code]

[ViLD] Open-vocabulary Object Detection via Vision and Language Knowledge Distillation.

Xiuye Gu, Tsung-Yi Lin, Weicheng Kuo, Yin Cui.

ICLR 2022.

[paper]

[GLIP] Grounded Language-Image Pre-training.

Liunian Harold Li*, Pengchuan Zhang*, Haotian Zhang*, Jianwei Yang, Chunyuan Li, Yiwu Zhong, Lijuan Wang, Lu Yuan, Lei Zhang, Jenq-Neng Hwang, Kai-Wei Chang, Jianfeng Gao

CVPR 2022.

[paper] [code]

RegionCLIP: Region-based Language-Image Pretraining.

Yiwu Zhong, Jianwei Yang, Pengchuan Zhang, Chunyuan Li, Noel Codella, Liunian Harold Li, Luowei Zhou, Xiyang Dai, Lu Yuan, Yin Li, Jianfeng Gao.

CVPR 2022.

[paper] [code]

[OV-DETR] Open-Vocabulary DETR with Conditional Matching.

Yuhang Zang, Wei Li, Kaiyang Zhou, Chen Huang, Chen Change Loy.

ECCV 2022.

[paper] [code]

[OWL-ViT] Simple Open-Vocabulary Object Detection with Vision Transformers.

Matthias Minderer, Alexey Gritsenko, Austin Stone, Maxim Neumann, Dirk Weissenborn, Alexey Dosovitskiy, Aravindh Mahendran, Anurag Arnab, Mostafa Dehghani, Zhuoran Shen, Xiao Wang, Xiaohua Zhai, Thomas Kipf, Neil Houlsby.

ECCV 2022.

[paper]

[Detic] Detecting Twenty-thousand Classes using Image-level Supervision.

Xingyi Zhou, Rohit Girdhar, Armand Joulin, Philipp Krähenbühl, Ishan Misra.

ECCV 2022.

[paper] [code]

X-DETR: A Versatile Architecture for Instance-wise Vision-Language Tasks.

Zhaowei Cai, Gukyeong Kwon, Avinash Ravichandran, Erhan Bas, Zhuowen Tu, Rahul Bhotika, Stefano Soatto.

ECCV 2022.

[paper]

FindIt: Generalized Localization with Natural Language Queries.

Weicheng Kuo, Fred Bertsch, Wei Li, AJ Piergiovanni, Mohammad Saffar, Anelia Angelova.

ECCV 2022.

[paper]

Open Vocabulary Object Detection with Pseudo Bounding-Box Labels.

Mingfei Gao, Chen Xing, Juan Carlos Niebles, Junnan Li, Ran Xu, Wenhao Liu, Caiming Xiong.

ECCV 2022.

[paper] [code] [blog]

PromptDet: Towards Open-vocabulary Detection using Uncurated Images.

Chengjian Feng, Yujie Zhong, Zequn Jie, Xiangxiang Chu, Haibing Ren, Xiaolin Wei, Weidi Xie, Lin Ma.

ECCV 2022.

[paper] [code]

Class-agnostic Object Detection with Multi-modal Transformer.

Muhammad Maaz, Hanoona Rasheed, Salman Khan, Fahad Shahbaz Khan, Rao Muhammad Anwer and Ming-Hsuan Yang.

ECCV 2022.

[paper] [code]

[Object Centric OVD] Bridging the Gap between Object and Image-level Representations for Open-Vocabulary Detection.

Hanoona Rasheed, Muhammad Maaz, Muhammad Uzair Khattak, Salman Khan, Fahad Shahbaz Khan.

NeurIPS 2022

[paper] [code]

[GLIPv2] Unifying Localization and Vision-Language Understanding.

Haotian Zhang*, Pengchuan Zhang*, Xiaowei Hu, Yen-Chun Chen, Liunian Harold Li, Xiyang Dai, Lijuan Wang, Lu Yuan, Jenq-Neng Hwang, Jianfeng Gao.

NeurIPS 2022

[paper] [code]

[FIBER] Coarse-to-Fine Vision-Language Pre-training with Fusion in the Backbone.

Zi-Yi Dou, Aishwarya Kamath, Zhe Gan, Pengchuan Zhang, Jianfeng Wang, Linjie Li, Zicheng Liu, Ce Liu, Yann LeCun, Nanyun Peng, Jianfeng Gao, Lijuan Wang.

NeurIPS 2022

[paper] [code]

[DetCLIP] Dictionary-Enriched Visual-Concept Paralleled Pre-training for Open-world Detection.

Lewei Yao, Jianhua Han, Youpeng Wen, Xiaodan Liang, Dan Xu, Wei Zhang, Zhenguo Li, Chunjing Xu, Hang Xu.

NeurIPS 2022

[paper]

OmDet: Language-Aware Object Detection with Large-scale Vision-Language Multi-dataset Pre-training.

Tiancheng Zhao, Peng Liu, Xiaopeng Lu, Kyusong Lee

arxiv:2209.0594

[paper]

F-VLM: Open-Vocabulary Object Detection upon Frozen Vision and Language Models.

Weicheng Kuo, Yin Cui, Xiuye Gu, AJ Piergiovanni, Anelia Angelova.

ICLR 2023.

[paper] [project]

[OVAD] Open-vocabulary Attribute Detection.

María A. Bravo, Sudhanshu Mittal, Simon Ging, Thomas Brox.

arxiv:2211.12914

[paper] [project]

Learning Object-Language Alignments for Open-Vocabulary Object Detection.

Chuang Lin, Peize Sun, Yi Jiang, Ping Luo, Lizhen Qu, Gholamreza Haffari, Zehuan Yuan, Jianfei Cai

ICLR 2023.

[paper] [code]

Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection

Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Chunyuan Li, Jianwei Yang, Hang Su, Jun Zhu, Lei Zhang

Arxiv 2023.

[paper] [code]

Open-Set 3D Detection via Image-level Class and Debiased Cross-modal Contrastive Learning.

Anonymous.

[OpenReview]

PhraseCut: Language-based Image Segmentation in the Wild

Chenyun Wu, Zhe Lin, Scott Cohen, Trung Bui, Subhransu Maji.

CVPR 2020.

[paper] [project]

GroupViT: Semantic Segmentation Emerges from Text Supervision

Jiarui Xu, Shalini De Mello, Sifei Liu, Wonmin Byeon, Thomas Breuel, Jan Kautz, Xiaolong Wang.

CVPR 2022.

[paper] [code] [project]

[CLIPSeg] Image Segmentation Using Text and Image Prompts.

Timo Lüddecke, Alexander S. Ecker.

CVPR 2022.

[paper] [code]

DenseCLIP: Language-Guided Dense Prediction with Context-Aware Prompting.

Yongming Rao*, Wenliang Zhao*, Guangyi Chen, Yansong Tang, Zheng Zhu, Guan Huang, Jie Zhou, Jiwen Lu.

CVPR, 2022

[paper] [code]

Open-Vocabulary Instance Segmentation via Robust Cross-Modal Pseudo-Labeling.

Dat Huynh, Jason Kuen, Zhe Lin, Jiuxiang Gu, Ehsan Elhamifar.

CVPR 2022.

[paper]

[ZegFormer] Decoupling Zero-Shot Semantic Segmentation.

Jian Ding, Nan Xue, Gui-Song Xia, Dengxin Dai.

CVPR 2022.

[paper] [code]

[OpenSeg] Scaling Open-Vocabulary Image Segmentation with Image-Level Labels.

Golnaz Ghiasi, Xiuye Gu, Yin Cui, Tsung-Yi Lin.

ECCV 2022.

[paper]

A Simple Baseline for Zero-shot Semantic Segmentation with Pre-trained Vision-language Model.

Mengde Xu, Zheng Zhang, Fangyun Wei, Yutong Lin, Yue Cao, Han Hu, Xiang Bai.

ECCV 2022

[paper] [code]

[MaskCLIP] Extract Free Dense Labels from CLIP.

Chong Zhou, Chen Change Loy, Bo Dai.

ECCV 2022

[paper] [code]

Open-Vocabulary Panoptic Segmentation with MaskCLIP.

Zheng Ding, Jieke Wang, Zhuowen Tu.

arXiv:2208.08984, 2022

[paper]

[LSeg] Language-driven Semantic Segmentation.

Boyi Li, Kilian Q Weinberger, Serge Belongie, Vladlen Koltun, René Ranftl.

ICLR 2022.

[paper] [code]

Perceptual Grouping in Vision-Language Models.

Kanchana Ranasinghe, Brandon McKinzie, Sachin Ravi, Yinfei Yang, Alexander Toshev, Jonathon Shlens.

arXiv 2210.09996, 2022.

[paper]

[TCL] Learning to Generate Text-grounded Mask for Open-world Semantic Segmentation from Only Image-Text Pairs.

Junbum Cha, Jonghwan Mun, Byungseok Rho.

CVPR 2023.

[paper] [code] [demo]

[OVSeg] Open-Vocabulary Semantic Segmentation with Mask-adapted CLIP.

Feng Liang, Bichen Wu, Xiaoliang Dai, Kunpeng Li, Yinan Zhao, Hang Zhang, Peizhao Zhang, Peter Vajda, Diana Marculescu.

CVPR 2023.

[paper] [project] [demo]

[X-Decoder] Generalized Decoding for Pixel, Image, and Language.

Xueyan Zou*, Zi-Yi Dou*, Jianwei Yang*, Zhe Gan, Linjie Li, Chunyuan Li, Xiyang Dai, Harkirat Behl, Jianfeng Wang, Lu Yuan, Nanyun Peng, Lijuan Wang, Yong Jae Lee^, Jianfeng Gao^.

CVPR 2023.

[paper] [project] [code] [demo]

[SAN] Side Adapter Network for Open-Vocabulary Semantic Segmentation.

Mengde Xu, Zheng Zhang, Fangyun Wei, Han Hu, Xiang Bai.

CVPR 2023.

[paper] [code]

[ODISE] Open-Vocabulary Panoptic Segmentation with Text-to-Image Diffusion Models.

Jiarui Xu, Sifei Liu, Arash Vahdat, Wonmin Byeon, Xiaolong Wang, Shalini De Mello.

CVPR 2023.

[paper] [project] [code] [demo]

[OpenSeeD] A Simple Framework for Open-Vocabulary Segmentation and Detection.

Hao Zhang, Feng Li, Xueyan Zou, Shilong Liu, Chunyuan Li, Jianfeng Gao, Jianwei Yang, Lei Zhang.

arxiv 2303.08131, 2023.

[paper] [code]

[SAM] Segment Anything.

Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alex Berg, Wan-Yen Lo, Piotr Dollar, Ross Girshick.

2023.

[paper] [code] [project] [demo]

[SEEM] Segment Everything Everywhere All at Once.

Xueyan Zou, Jianwei Yang, Hao Zhang, Feng Li, Linjie Li, Jianfeng Gao, Yong Jae Lee.

arxiv 2303.06718, 2023.

[paper] [code]

Language-Grounded Indoor 3D Semantic Segmentation in the Wild.

David Rozenberszki, Or Litany, Angela Dai.

ECCV 2022.

[paper] [code] [project]

Semantic Abstraction: Open-World 3D Scene Understanding from 2D Vision-Language Models.

Huy Ha, Shuran Song.

CoRL 2022.

[paper] [project]

OpenScene: 3D Scene Understanding with Open Vocabularies.

Songyou Peng, Kyle Genova, Chiyu "Max" Jiang, Andrea Tagliasacchi, Marc Pollefeys, Thomas Funkhouser.

CVPR 2023.

[paper] [project]

Language-driven Open-Vocabulary 3D Scene Understanding

Runyu Ding, Jihan Yang, Chuhui Xue, Wenqing Zhang, Song Bai, Xiaojuan Qi.

arXiv:2211.16312, 2022.

[paper] [code]

Expanding Language-Image Pretrained Models for General Video Recognition.

Bolin Ni, Houwen Peng, Minghao Chen, Songyang Zhang, Gaofeng Meng, Jianlong Fu, Shiming Xiang, Haibin Ling.

ECCV 2022

[paper] [code]

Multimodal Open-Vocabulary Video Classification via Pre-Trained Vision and Language Models.

Rui Qian, Yeqing Li, Zheng Xu, Ming-Hsuan Yang, Serge Belongie, Yin Cui.

arXiv:2207.07646

[paper]

RLIP: Relational Language-Image Pre-training for Human-Object Interaction Detection.

Hangjie Yuan, Jianwen Jiang, Samuel Albanie, Tao Feng, Ziyuan Huang, Dong Ni, Mingqian Tang.

NeurIPS 2022

[paper] [code]

🆕 This is a new research topic: grounded image generation based on any open-set concept, including text and visual prompts. All pre-trained text-to-image generation models allow open-set prompting at the image level, and thus belong to "Grounded Image Generation in the Wild" by default. This paper collection focuses on more fine-grained controllability in image generation, such as specifying new concepts at the level of bounding boxes, masks, edge/depth maps, etc.

GLIGEN: Open-Set Grounded Text-to-Image Generation.

Yuheng Li, Haotian Liu, Qingyang Wu, Fangzhou Mu, Jianwei Yang, Jianfeng Gao, Chunyuan Li, Yong Jae Lee.

CVPR 2023

[paper] [project] [code] [demo] [YouTube video]

ReCo: Region-Controlled Text-to-Image Generation.

Zhengyuan Yang, Jianfeng Wang, Zhe Gan, Linjie Li, Kevin Lin, Chenfei Wu, Nan Duan, Zicheng Liu, Ce Liu, Michael Zeng, Lijuan Wang.

CVPR 2023

[paper]

[ControlNet] Adding Conditional Control to Text-to-Image Diffusion Models.

Lvmin Zhang, Maneesh Agrawala.

arxiv 2302.05543, 2023

[paper] [code]

T2I-Adapter: Learning Adapters to Dig out More Controllable Ability for Text-to-Image Diffusion Models.

Chong Mou, Xintao Wang, Liangbin Xie, Jian Zhang, Zhongang Qi, Ying Shan, Xiaohu Qie.

arxiv 2302.08453, 2023

[paper] [code]

Composer: Creative and Controllable Image Synthesis with Composable Conditions.

Lianghua Huang, Di Chen, Yu Liu, Yujun Shen, Deli Zhao, Jingren Zhou.

arxiv 2302.09778 2023

[paper] [project] [code]

Universal Guidance for Diffusion Models.

Arpit Bansal, Hong-Min Chu, Avi Schwarzschild, Soumyadip Sengupta, Micah Goldblum, Jonas Geiping, Tom Goldstein.

arXiv:2302.07121, 2023

[paper] [code]

Modulating Pretrained Diffusion Models for Multimodal Image Synthesis.

Cusuh Ham, James Hays, Jingwan Lu, Krishna Kumar Singh, Zhifei Zhang, Tobias Hinz.

arXiv:2302.12764, 2023

[paper]

🆕 This is a new research topic: building general-purpose multimodal assistants based on large language models (LLMs). One prominent example is OpenAI's multimodal GPT-4. A comprehensive paper list is compiled at Awesome-Multimodal-Large-Language-Models. Our collection maintains a brief list for the completeness of CVinW.

GPT-4 Technical Report.

OpenAI

arXiv:2303.08774, 2023

[paper]

Visual Instruction Tuning.

Haotian Liu*, Chunyuan Li*, Qingyang Wu, Yong Jae Lee

arXiv:2304.08485, 2023

[paper] [website]

MiniGPT-4: Enhancing Vision-Language Understanding with Advanced Large Language Models

Deyao Zhu*, Jun Chen*, Xiaoqian Shen, Xiang Li, Mohamed Elhoseiny

arXiv:2304.10592, 2023

[paper] [website]

Large Multimodal Models: Notes on CVPR 2023 Tutorial.

Chunyuan Li.

CVPR Tutorial, 2023

[📊 Slides] [📝 Notes] [🎥 YouTube] [🇨🇳 Bilibili] [🌐 Tutorial Website]

[CoOp] Learning to Prompt for Vision-Language Models.

Kaiyang Zhou, Jingkang Yang, Chen Change Loy, Ziwei Liu.

IJCV 2022

[paper] [code]

[CoCoOp] Conditional Prompt Learning for Vision-Language Models.

Kaiyang Zhou, Jingkang Yang, Chen Change Loy, Ziwei Liu.

CVPR 2022

[paper] [code]

Prompt Distribution Learning.

Yuning Lu, Jianzhuang Liu, Yonggang Zhang, Yajing Liu, Xinmei Tian.

CVPR 2022

[paper]

VL-Adapter: Parameter-Efficient Transfer Learning for Vision-and-Language Tasks.

Yi-Lin Sung, Jaemin Cho, Mohit Bansal.

CVPR, 2022

[paper] [code]

[VPT] Visual Prompt Tuning.

Menglin Jia, Luming Tang, Bor-Chun Chen, Claire Cardie, Serge Belongie, Bharath Hariharan, Ser-Nam Lim.

ECCV 2022

[paper]

Tip-Adapter: Training-free CLIP-Adapter for Better Vision-Language Modeling.

Renrui Zhang, Rongyao Fang, Wei Zhang, Peng Gao, Kunchang Li, Jifeng Dai, Yu Qiao, Hongsheng Li.

ECCV 2022

[paper] [code]

CLIP-Adapter: Better Vision-Language Models with Feature Adapters.

Peng Gao, Shijie Geng, Renrui Zhang, Teli Ma, Rongyao Fang, Yongfeng Zhang, Hongsheng Li, Yu Qiao.

arXiv:2110.04544 2022

[paper] [code]

Exploring Visual Prompts for Adapting Large-Scale Models.

Hyojin Bahng, Ali Jahanian, Swami Sankaranarayanan, Phillip Isola.

arXiv:2203.17274 2022

[paper] [code] [project]

[NOAH] Neural Prompt Search.

Yuanhan Zhang, Kaiyang Zhou, Ziwei Liu.

arXiv:2206.04673, 2022

[paper] [code]

Parameter-efficient Fine-tuning for Vision Transformers.

Xuehai He, Chunyuan Li, Pengchuan Zhang, Jianwei Yang, Xin Eric Wang.

arXiv:2203.16329, 2022

[paper]

[CuPL] What does a platypus look like? Generating customized prompts for zero-shot image classification.

Sarah Pratt, Rosanne Liu, Ali Farhadi.

arXiv:2209.03320, 2022

[paper] [code]

AdaptFormer: Adapting Vision Transformers for Scalable Visual Recognition.

Shoufa Chen, Chongjian Ge, Zhan Tong, Jiangliu Wang, Yibing Song, Jue Wang, Ping Luo.

arXiv:2205.13535, 2022

[paper] [code]

Vision Transformer Adapter for Dense Predictions.

Zhe Chen, Yuchen Duan, Wenhai Wang, Junjun He, Tong Lu, Jifeng Dai, Yu Qiao.

arXiv:2205.08534, 2022

[paper] [code]

Convolutional Bypasses Are Better Vision Transformer Adapters.

Shibo Jie, Zhi-Hong Deng.

arXiv:2207.07039, 2022

[paper] [code]

Conv-Adapter: Exploring Parameter Efficient Transfer Learning for ConvNets.

Hao Chen, Ran Tao, Han Zhang, Yidong Wang, Wei Ye, Jindong Wang, Guosheng Hu, Marios Savvides.

arXiv:2208.07463, 2022

[paper]

VL-PET: Vision-and-Language Parameter-Efficient Tuning via Granularity Control.

Zi-Yuan Hu, Yanyang Li, Michael R. Lyu, Liwei Wang.

ICCV, 2023

[paper] [code] [project]

Contrastive Prompt Tuning Improves Generalization in Vision-Language Models.

Anonymous.

[OpenReview]

Variational Prompt Tuning Improves Generalization of Vision-Language Models.

Anonymous.

[OpenReview]

MaPLe: Multi-modal Prompt Learning.

Anonymous.

[OpenReview]

Prompt Tuning with Prompt-aligned Gradient for Vision-Language Models.

Anonymous.

[OpenReview]

Prompt Learning with Optimal Transport for Vision-Language Models.

Anonymous.

[OpenReview]

Unified Vision and Language Prompt Learning.

Anonymous.

[OpenReview]

Rethinking the Value of Prompt Learning for Vision-Language Models.

Anonymous.

[OpenReview]

Language-Aware Soft Prompting for Vision & Language Foundation Models.

Anonymous.

[OpenReview]

ST-Adapter: Parameter-Efficient Image-to-Video Transfer Learning for Action Recognition.

Junting Pan, Ziyi Lin, Xiatian Zhu, Jing Shao, Hongsheng Li.

NeurIPS 2022

[paper]

[DetPro] Learning to Prompt for Open-Vocabulary Object Detection with Vision-Language Model.

Yu Du, Fangyun Wei, Zihe Zhang, Miaojing Shi, Yue Gao, Guoqi Li.

CVPR 2022

[paper] [code]

Multimodal Few-Shot Object Detection with Meta-Learning Based Cross-Modal Prompting.

Guangxing Han, Jiawei Ma, Shiyuan Huang, Long Chen, Rama Chellappa, and Shih-Fu Chang.

arXiv:2204.07841 2022

[paper]

Learning to Compose Soft Prompts for Compositional Zero-Shot Learning.

Nihal V. Nayak, Peilin Yu, Stephen H. Bach.

arXiv:2204.03574 2022

[paper] [code]

[WiSE-FT] Robust Fine-Tuning of Zero-Shot Models.

Mitchell Wortsman, Gabriel Ilharco, Jong Wook Kim, Mike Li, Simon Kornblith, Rebecca Roelofs, Raphael Gontijo Lopes, Hannaneh Hajishirzi, Ali Farhadi, Hongseok Namkoong, Ludwig Schmidt.

CVPR 2022

[paper] [code]

[PAINT] Patching open-vocabulary models by interpolating weights.

Gabriel Ilharco, Mitchell Wortsman, Samir Yitzhak Gadre, Shuran Song, Hannaneh Hajishirzi, Simon Kornblith, Ali Farhadi, Ludwig Schmidt.

arXiv:2208.05592 2022

[paper]

Learning to Prompt for Continual Learning.

Zifeng Wang, Zizhao Zhang, Chen-Yu Lee, Han Zhang, Ruoxi Sun, Xiaoqi Ren, Guolong Su, Vincent Perot, Jennifer Dy, Tomas Pfister.

CVPR, 2022

[paper] [code]

Bridge-Prompt: Towards Ordinal Action Understanding in Instructional Videos.

Muheng Li, Lei Chen, Yueqi Duan, Zhilan Hu, Jianjiang Feng, Jie Zhou, Jiwen Lu.

CVPR, 2022

[paper] [code]

Prompting Visual-Language Models for Efficient Video Understanding.

Chen Ju, Tengda Han, Kunhao Zheng, Ya Zhang, Weidi Xie.

ECCV, 2022

[paper] [code]

Polyhistor: Parameter-Efficient Multi-Task Adaptation for Dense Vision Tasks.

Yen-Cheng Liu, Chih-Yao Ma, Junjiao Tian, Zijian He, Zsolt Kira.

NeurIPS, 2022

[paper] [project]

Prompt Vision Transformer for Domain Generalization.

Zangwei Zheng, Xiangyu Yue, Kai Wang, Yang You.

arXiv:2208.08914, 2022

[paper]

Domain Adaptation via Prompt Learning.

Chunjiang Ge, Rui Huang, Mixue Xie, Zihang Lai, Shiji Song, Shuang Li, Gao Huang.

arXiv:2202.06687, 2022

[paper]

Visual Prompting via Image Inpainting.

Amir Bar, Yossi Gandelsman, Trevor Darrell, Amir Globerson, Alexei A. Efros.

arXiv:2209.00647, 2022

[paper] [code]

Visual Prompting: Modifying Pixel Space to Adapt Pre-trained Models.

Hyojin Bahng, Ali Jahanian, Swami Sankaranarayanan, Phillip Isola.

arXiv:2203.17274, 2022

[paper] [code]

Towards Out-Of-Distribution Generalization: A Survey.

Zheyan Shen, Jiashuo Liu, Yue He, Xingxuan Zhang, Renzhe Xu, Han Yu, Peng Cui.

arXiv:2108.13624, 2021

[paper]

Generalizing to Unseen Domains: A Survey on Domain Generalization.

Jindong Wang, Cuiling Lan, Chang Liu, Yidong Ouyang, Wenjun Zeng, Tao Qin.

IJCAI 2021

[paper]

Domain Generalization: A Survey.

Kaiyang Zhou, Ziwei Liu, Yu Qiao, Tao Xiang, Chen Change Loy.

TPAMI 2022

[paper]

Episodic Training for Domain Generalization.

Da Li, Jianshu Zhang, Yongxin Yang, Cong Liu, Yi-Zhe Song, Timothy M. Hospedales.

ICCV 2019

[paper] [code]

Domain Generalization by Solving Jigsaw Puzzles.

Fabio Maria Carlucci, Antonio D'Innocente, Silvia Bucci, Barbara Caputo, Tatiana Tommasi.

CVPR 2019

[paper] [code]

Invariant Risk Minimization.

Martin Arjovsky, Léon Bottou, Ishaan Gulrajani, David Lopez-Paz.

arXiv:1907.02893, 2019

[paper] [code]

Deep Domain-Adversarial Image Generation for Domain Generalisation.

Kaiyang Zhou, Yongxin Yang, Timothy Hospedales, Tao Xiang.

AAAI 2020

[paper]

Learning to Generate Novel Domains for Domain Generalization.

Kaiyang Zhou, Yongxin Yang, Timothy Hospedales, Tao Xiang.

ECCV 2020

[paper]

Improved OOD Generalization via Adversarial Training and Pre-training.

Mingyang Yi, Lu Hou, Jiacheng Sun, Lifeng Shang, Xin Jiang, Qun Liu, Zhi-Ming Ma.

ICML 2021

[paper]

Distributionally Robust Neural Networks for Group Shifts: On the Importance of Regularization for Worst-Case Generalization.

Shiori Sagawa, Pang Wei Koh, Tatsunori B. Hashimoto, Percy Liang.

ICLR 2020

[paper] [code]

Domain Generalization with MixStyle.

Kaiyang Zhou, Yongxin Yang, Yu Qiao, Tao Xiang.

ICLR 2021

[paper] [code]

In Search of Lost Domain Generalization.

Ishaan Gulrajani, David Lopez-Paz.

ICLR 2021

[paper] [code]

Out-of-Distribution Generalization via Risk Extrapolation (REx).

David Krueger, Ethan Caballero, Joern-Henrik Jacobsen, Amy Zhang, Jonathan Binas, Dinghuai Zhang, Remi Le Priol, Aaron Courville.

ICLR 2021

[paper] [code]

SWAD: Domain Generalization by Seeking Flat Minima.

Junbum Cha, Sanghyuk Chun, Kyungjae Lee, Han-Cheol Cho, Seunghyun Park, Yunsung Lee, Sungrae Park.

NeurIPS 2021

[paper] [code]

Domain Invariant Representation Learning with Domain Density Transformations.

A. Tuan Nguyen, Toan Tran, Yarin Gal, Atılım Güneş Baydin.

NeurIPS 2021

[paper] [code]

An Information-theoretic Approach to Distribution Shifts.

Marco Federici, Ryota Tomioka, Patrick Forré.

NeurIPS 2021

[paper] [code]

Gradient Matching for Domain Generalization.

Yuge Shi, Jeffrey Seely, Philip H.S. Torr, N. Siddharth, Awni Hannun, Nicolas Usunier, Gabriel Synnaeve.

ICLR 2022

[paper] [code]

Visual Representation Learning Does Not Generalize Strongly Within the Same Domain.

Lukas Schott, Julius von Kügelgen, Frederik Träuble, Peter Gehler, Chris Russell, Matthias Bethge, Bernhard Schölkopf, Francesco Locatello, Wieland Brendel.

ICLR 2022

[paper] [code]

MetaShift: A Dataset of Datasets for Evaluating Contextual Distribution Shifts and Training Conflicts.

Weixin Liang and James Zou.

ICLR 2022

[paper] [code]

Fishr: Invariant Gradient Variances for Out-of-Distribution Generalization.

Alexandre Rame, Corentin Dancette, Matthieu Cord.

ICML 2022

[paper] [code]

[MIRO] Domain Generalization by Mutual-Information Regularization with Pre-trained Models.

Junbum Cha, Kyungjae Lee, Sungrae Park, Sanghyuk Chun.

ECCV 2022

[paper] [code]

Diverse Weight Averaging for Out-of-Distribution Generalization.

Alexandre Rame, Matthieu Kirchmeyer, Thibaud Rahier, Alain Rakotomamonjy, Patrick Gallinari, Matthieu Cord.

NeurIPS 2022

[paper] [code]

Wild-Time: A Benchmark of in-the-Wild Distribution Shift over Time.

Huaxiu Yao, Caroline Choi, Bochuan Cao, Yoonho Lee, Pang Wei Koh, Chelsea Finn.

NeurIPS 2022

[paper] [code]

WILDS: A Benchmark of in-the-Wild Distribution Shifts.

Pang Wei Koh, Shiori Sagawa, Henrik Marklund, Sang Michael Xie, Marvin Zhang, Akshay Balsubramani, Weihua Hu, Michihiro Yasunaga, Richard Lanas Phillips, Irena Gao, Tony Lee, Etienne David, Ian Stavness, Wei Guo, Berton A. Earnshaw, Imran S. Haque, Sara Beery, Jure Leskovec, Anshul Kundaje, Emma Pierson, Sergey Levine, Chelsea Finn, Percy Liang.

ICML 2021

[paper] [code]

SIMPLE: Specialized Model-Sample Matching for Domain Generalization.

Ziyue Li, Kan Ren, Xinyang Jiang, Yifei Shen, Haipeng Zhang, Dongsheng Li

ICLR 2023

[paper] [code]

Sparse Mixture-of-Experts are Domain Generalizable Learners.

Bo Li, Yifei Shen, Jingkang Yang, Yezhen Wang, Jiawei Ren, Tong Che, Jun Zhang, Ziwei Liu

ICLR 2023

[paper] [code]

Evaluating Model Robustness and Stability to Dataset Shift.

Adarsh Subbaswamy, Roy Adams, Suchi Saria.

AISTATS 2021

[paper] [code]

Plex: Towards Reliability using Pretrained Large Model Extensions.

Dustin Tran, Jeremiah Liu, Michael W. Dusenberry, Du Phan, Mark Collier, Jie Ren, Kehang Han, Zi Wang, Zelda Mariet, Huiyi Hu, Neil Band, Tim G. J. Rudner, Karan Singhal, Zachary Nado, Joost van Amersfoort, Andreas Kirsch, Rodolphe Jenatton, Nithum Thain, Honglin Yuan, Kelly Buchanan, Kevin Murphy, D. Sculley, Yarin Gal, Zoubin Ghahramani, Jasper Snoek, Balaji Lakshminarayanan.

First Workshop of Pre-training: Perspectives, Pitfalls, and Paths Forward at ICML 2022

[paper] [code]

We thank all the authors above for their great work!

Related Reading List

If you find this repository useful, please consider giving a star ⭐ and citation 🍺:

@article{li2022elevater,
  title={ELEVATER: A Benchmark and Toolkit for Evaluating Language-Augmented Visual Models},
  author={Li, Chunyuan and Liu, Haotian and Li, Liunian Harold and Zhang, Pengchuan and Aneja, Jyoti and Yang, Jianwei and Jin, Ping and Hu, Houdong and Liu, Zicheng and Lee, Yong Jae and Gao, Jianfeng},
  journal={Neural Information Processing Systems},
  year={2022}
}