This repository contains the code for the paper "NLBAC: A Neural ODEs-based Framework for Stable and Safe Reinforcement Learning". The code is developed based on two existing repositories: https://github.com/LiqunZhao/A-Barrier-Lyapunov-Actor-Critic-Reinforcement-Learning-Approach-for-Safe-and-Stable-Control and https://github.com/yemam3/SAC-RCBF

This repository only contains the commented code for the Neural ordinary differential equations-based Lyapunov Barrier Actor Critic (NLBAC) algorithm. For the other algorithms, please refer to:

MBPPO-Lagrangian: https://github.com/akjayant/mbppol

LAC: https://github.com/hithmh/Actor-critic-with-stability-guarantee

CPO, PPO-Lagrangian and TRPO-Lagrangian: https://github.com/openai/safety-starter-agents

The experiments are run with PyTorch, and wandb (https://wandb.ai/site) is used to save the data and draw the graphs. The packages needed to run the experiments are listed below, with the versions used in my conda environment.

python: 3.6.13

pytorch: 1.10.2

numpy: 1.17.5

wandb: 0.12.11

gym: 0.15.7

torchdiffeq: 0.2.3

Two environments called Unicycle and SimulatedCars are provided in this repository. In Unicycle, a unicycle is required to arrive at the desired location, i.e., destination, while avoiding collisions with obstacles. SimulatedCars involves a chain of five cars following each other on a straight road. The goal is to control the acceleration of the 4th car to keep a desired distance from the 3rd car while avoiding collisions with other cars.
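
To compare an existing environment against the package versions listed above, a small check like the following can help. It is only a sketch: it assumes every package exposes a `__version__` attribute and that PyTorch is imported as `torch`. The function names are illustrative and not part of this repository.

```python
import importlib

# Versions listed in this README; "torch" is the import name of PyTorch.
EXPECTED_VERSIONS = {
    "torch": "1.10.2",
    "numpy": "1.17.5",
    "wandb": "0.12.11",
    "gym": "0.15.7",
    "torchdiffeq": "0.2.3",
}


def installed_version(module_name):
    """Return the module's __version__ string, or None if it cannot be imported."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", None)


def find_mismatches(expected, lookup=installed_version):
    """Map each package whose version differs to a (expected, found) pair."""
    mismatches = {}
    for name, wanted in expected.items():
        found = lookup(name)
        # PyTorch builds may append a local tag such as "+cu113"; ignore it.
        if found is None or found.split("+")[0] != wanted:
            mismatches[name] = (wanted, found)
    return mismatches
```

Calling `find_mismatches(EXPECTED_VERSIONS)` returns an empty dictionary when all listed packages are installed at the listed versions. Other versions may also work; the list only records the setup used for the experiments.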

Interested readers can also explore the option of using their own customized environments. Detailed instructions can be found below.

For the Unicycle environment, you can follow the 3 steps below to run the RL-training part directly, since a pre-trained model has been provided:

- Update the `neural_ode_model_pt` variable in line 130 of `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Unicycle/Unicycle_RL_training/sac_cbf_clf/sac_cbf_clf.py` with the correct path to the provided pre-trained model that aligns with your computing environment
- Navigate to the directory `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Unicycle/Unicycle_RL_training`
- Run the command `python main.py --env Unicycle --gamma_b 50 --max_episodes 200 --cuda --updates_per_step 2 --batch_size 128 --seed 0 --start_steps 1000`
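
The first step asks for a model path that is valid on your machine. One way to avoid a hard-coded absolute path is to build it from a directory and a file name and to fail early if the file is missing. The sketch below assumes that `neural_ode_model_pt` holds a plain path string; `resolve_model_path` is a hypothetical helper, not a function from this repository.

```python
from pathlib import Path


def resolve_model_path(model_dir, file_name):
    """Return an absolute path to the model file, failing early if it is missing."""
    path = (Path(model_dir) / file_name).resolve()
    if not path.is_file():
        raise FileNotFoundError(f"Pre-trained model not found: {path}")
    return str(path)
```

Inside `sac_cbf_clf.py`, the variable could then be set with something like `resolve_model_path(Path(__file__).parent, "<model file name>")`, where the file name is that of the provided pre-trained model.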

If you want to first pre-train a new model and then run the RL-training part, please follow the steps listed below:

- Navigate to the directory `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Unicycle/Unicycle_modelling`
- Run the file `Unicycle_modelling.py` (You can first change the names of the data collected and pre-trained model, as well as the path where the pre-trained model is saved in the file. See lines 56, 295, 310, 311. Make sure that they align with each other).
- Copy the new pre-trained model to `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Unicycle/Unicycle_RL_training/sac_cbf_clf`
- Update the `neural_ode_model_pt` variable in line 130 of `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Unicycle/Unicycle_RL_training/sac_cbf_clf/sac_cbf_clf.py` with the correct path to the new pre-trained model that aligns with your computing environment
- Navigate to the directory `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Unicycle/Unicycle_RL_training`
- Run the command `python main.py --env Unicycle --gamma_b 50 --max_episodes 200 --cuda --updates_per_step 2 --batch_size 128 --seed 0 --start_steps 1000`
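
The copy step above can also be scripted, which makes it harder to end up with a stale model in the RL-training folder. This is a minimal sketch using only the standard library; `install_pretrained_model` is a hypothetical helper, and the paths passed to it are whatever you chose in the modelling script.

```python
import shutil
from pathlib import Path


def install_pretrained_model(source_file, training_dir):
    """Copy a freshly trained model file into the RL-training folder.

    Returns the absolute destination path as a string.
    """
    source = Path(source_file)
    if not source.is_file():
        raise FileNotFoundError(f"No model file at {source}")
    destination_dir = Path(training_dir)
    if not destination_dir.is_dir():
        # Refuse to create the folder: a missing folder usually means a typo.
        raise NotADirectoryError(f"No such folder: {destination_dir}")
    destination = destination_dir / source.name
    shutil.copy2(source, destination)
    return str(destination.resolve())
```

The returned string is the value that the `neural_ode_model_pt` variable would then need to point to.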

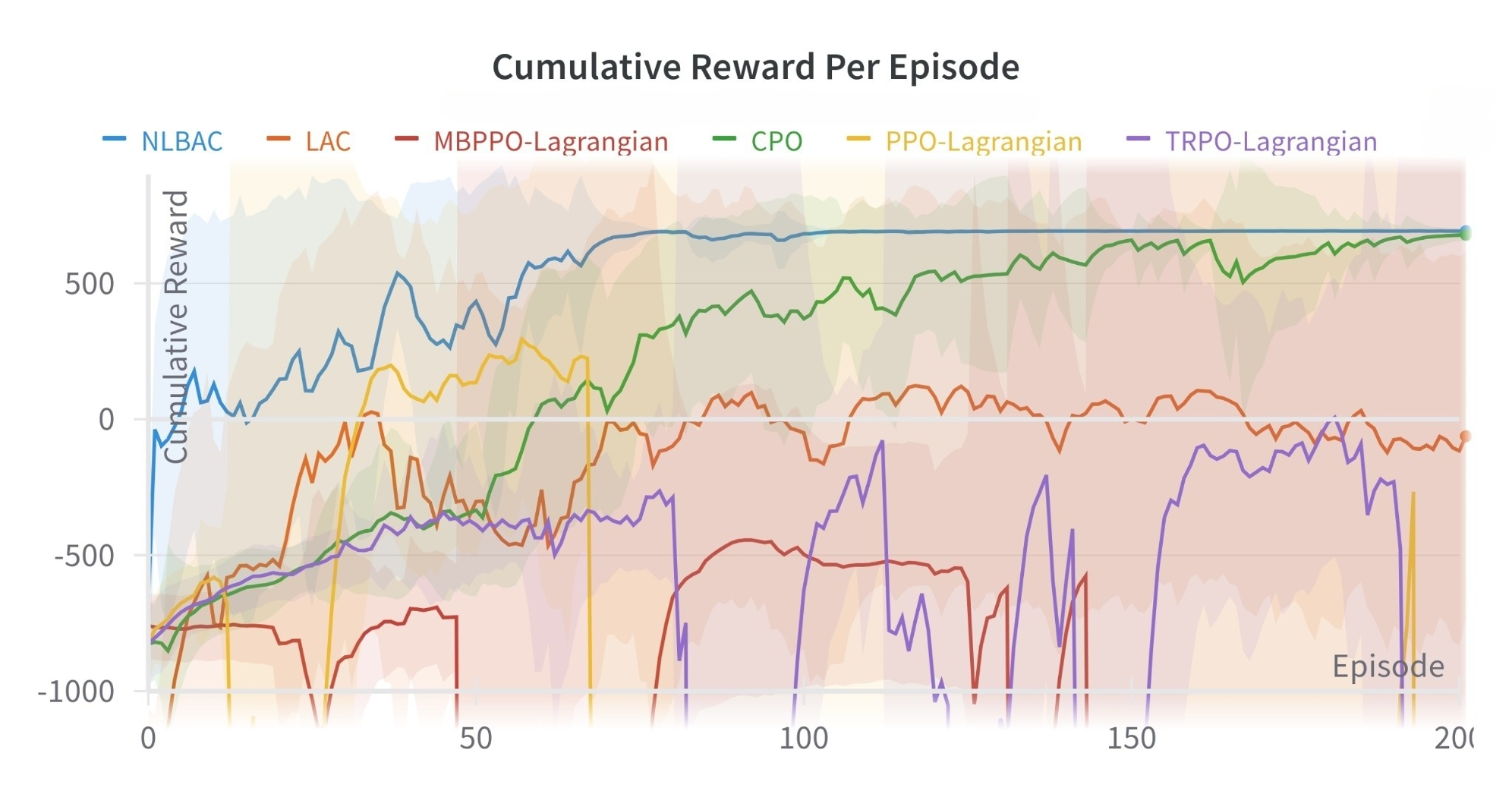

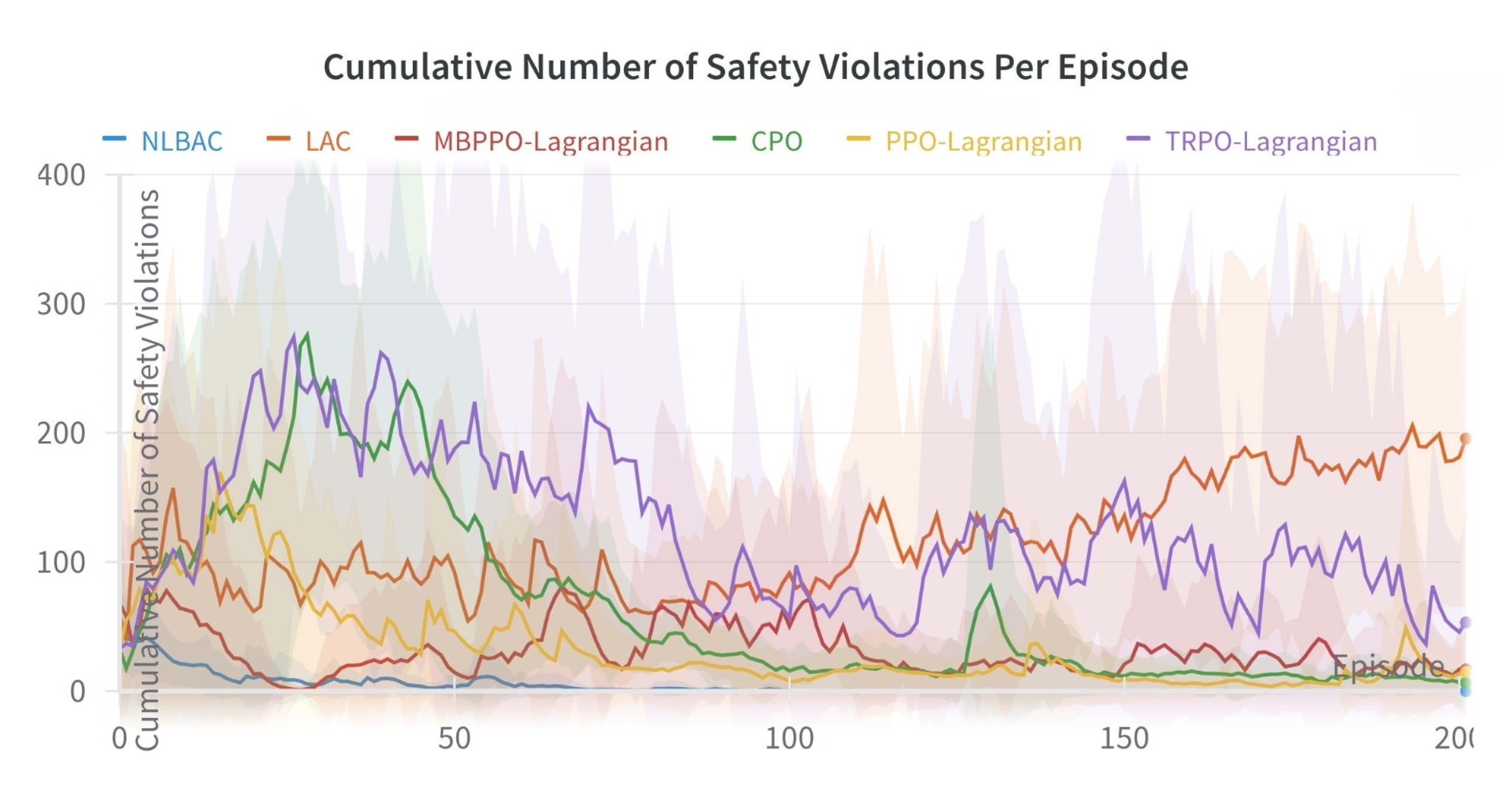

Here are the results obtained by my machine:

For the SimulatedCars environment, you can follow the 3 steps below to run the RL-training part directly, since a pre-trained model has been provided:

- Update the `neural_ode_model_pt` variable in line 126 of `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Simulated_Car_Following/Simulated_Car_Following_RL_training/sac_cbf_clf/sac_cbf_clf.py` with the correct path to the provided pre-trained model that aligns with your computing environment
- Navigate to the directory `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Simulated_Car_Following/Simulated_Car_Following_RL_training`
- Run the command `python main.py --env SimulatedCars --gamma_b 0.5 --max_episodes 200 --cuda --updates_per_step 2 --batch_size 256 --seed 0 --start_steps 200`

If you want to first pre-train a new model and then run the RL-training part, please follow the steps listed below:

- Navigate to the directory `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Simulated_Car_Following/Simulated_Car_Following_modelling`
- Run the file `simulated_cars_following_modelling.py` (You can first change the names of the data collected and pre-trained model, as well as the path where the pre-trained model is saved in the file. See lines 59, 289, 306, 307. Make sure that they align with each other).
- Copy the new pre-trained model to `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Simulated_Car_Following/Simulated_Car_Following_RL_training/sac_cbf_clf`
- Update the `neural_ode_model_pt` variable in line 126 of `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Simulated_Car_Following/Simulated_Car_Following_RL_training/sac_cbf_clf/sac_cbf_clf.py` with the correct path to the new pre-trained model that aligns with your computing environment
- Navigate to the directory `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Simulated_Car_Following/Simulated_Car_Following_RL_training`
- Run the command `python main.py --env SimulatedCars --gamma_b 0.5 --max_episodes 200 --cuda --updates_per_step 2 --batch_size 256 --seed 0 --start_steps 200`
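
The run commands for the two environments differ only in `--env`, `--gamma_b`, `--batch_size` and `--start_steps`. If you want to launch several seeds from a script, the commands can be assembled as in the sketch below. `PRESETS` and `build_command` are illustrative names; the flag values are copied from the commands in this README.

```python
import sys

# Settings quoted from the two run commands in this README.
PRESETS = {
    "Unicycle": {"gamma_b": 50, "batch_size": 128, "start_steps": 1000},
    "SimulatedCars": {"gamma_b": 0.5, "batch_size": 256, "start_steps": 200},
}


def build_command(env_name, seed=0):
    """Return the argument list equivalent to `python main.py ...` for one environment."""
    preset = PRESETS[env_name]
    return [
        sys.executable, "main.py",
        "--env", env_name,
        "--gamma_b", str(preset["gamma_b"]),
        "--max_episodes", "200",
        "--cuda",
        "--updates_per_step", "2",
        "--batch_size", str(preset["batch_size"]),
        "--seed", str(seed),
        "--start_steps", str(preset["start_steps"]),
    ]
```

The resulting list can be passed to `subprocess.run(..., cwd=<RL training directory>, check=True)`. As in the steps above, each command has to be run from the RL-training directory of the corresponding environment.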

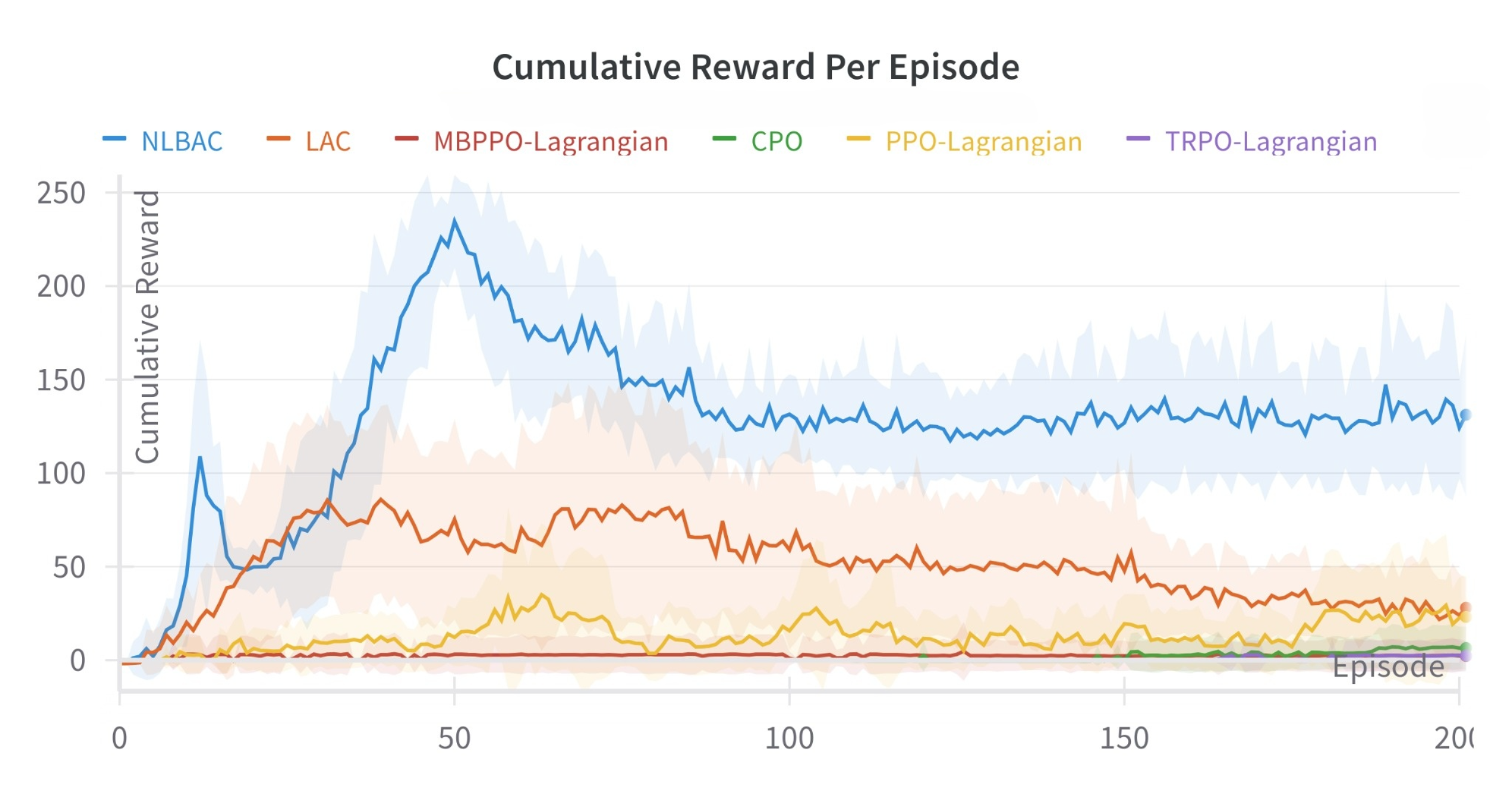

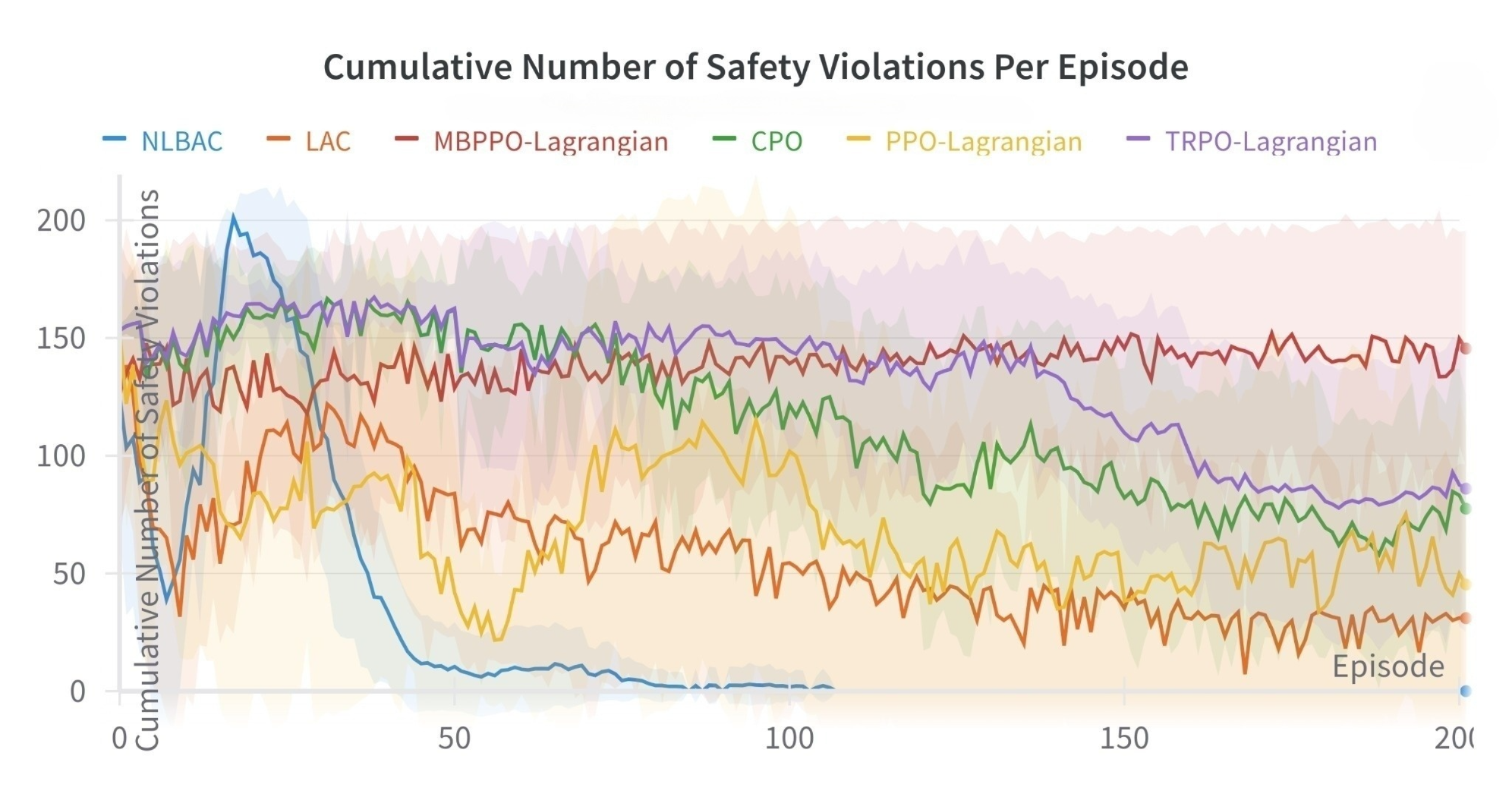

Here are the results obtained by my machine:

For your own customized environment, the whole process is similar:

- Copy the folder `Unicycle` and rename it as your customized environment `Your_customized_environment`
- Prepare your own customized environment and make some adjustments. Here is one point:
  - Outputs of your own customized `env.step` function. Besides `next obs`, `reward`, `done` and `info`, which are commonly used in the RL literature, we also need:
    - `constraint`: the difference between the current state and the desired state, which is required to decrease. It is also used to approximate the Lyapunov network.
    - Some lists used as inputs of the Lyapunov network (if `obs` and `next obs` are not used as inputs of the Lyapunov network directly). See the aforementioned environments as examples.
    - Other info like the number of safety violations and the value of the safety cost (usually used in algorithms like CPO, PPO-Lagrangian and TRPO-Lagrangian)
- Add the new customized environment in the file `build_env.py`, and change some if statements regarding `dynamics_mode` in `sac_cbf_clf.py`
- Change the replay buffer, since the outputs of `env.step` are changed.
- Tune the hyperparameters like learning rates, batch size, number of hidden states and so on if necessary.
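
To make the adjustments above more concrete, here is a toy sketch of an environment whose `step` returns the extra `constraint` value, together with a replay buffer that stores it. Everything in it is hypothetical: the `PointEnv` class, the order of the returned tuple and the keys in `info` are assumptions for illustration, so check the provided Unicycle and SimulatedCars environments for the format the training code actually expects.

```python
import random
from collections import deque


class PointEnv:
    """Toy 1-D task: move a point toward `goal` without crossing `wall`.

    Hypothetical example only; it is not one of the repository's environments.
    """

    def __init__(self, goal=1.0, wall=1.5, dt=0.1):
        self.goal, self.wall, self.dt = goal, wall, dt
        self.position = 0.0
        self.violations = 0

    def reset(self):
        self.position = 0.0
        self.violations = 0
        return [self.position]

    def step(self, action):
        self.position += self.dt * float(action)
        next_obs = [self.position]
        # Distance to the desired state; the controller should drive it down.
        constraint = abs(self.position - self.goal)
        reward = -constraint
        # Safety cost is 1 whenever the point is beyond the wall.
        cost = 1.0 if self.position > self.wall else 0.0
        self.violations += int(cost)
        done = constraint < 1e-3
        info = {"cost": cost, "num_safety_violations": self.violations}
        return next_obs, reward, constraint, done, info


class ReplayBuffer:
    """Fixed-size buffer that keeps the extra `constraint` value per transition."""

    def __init__(self, capacity):
        self.storage = deque(maxlen=capacity)

    def push(self, obs, action, reward, constraint, next_obs, done):
        self.storage.append((obs, action, reward, constraint, next_obs, done))

    def sample(self, batch_size):
        batch = random.sample(list(self.storage), batch_size)
        # Transpose a list of transitions into one tuple per field.
        return tuple(zip(*batch))

    def __len__(self):
        return len(self.storage)
```

The point of the sketch is that any field added to the output of `env.step` has to be threaded through `push` and `sample` as well, otherwise the training update never sees it.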

- Navigate to the directory `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Your_customized_environment/Your_customized_environment_modelling`
- Run the file `Your_customized_environment_modelling.py` (You can change the names of the data collected and pre-trained model, the path where the pre-trained model is saved, and some parameters like the input and output dimensions and the amount of data collected for pre-training. Make sure that they align with each other).
- Copy the new pre-trained model to `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Your_customized_environment/Your_customized_environment_RL_training/sac_cbf_clf`
- Update the `neural_ode_model_pt` variable in `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Your_customized_environment/Your_customized_environment_RL_training/sac_cbf_clf/sac_cbf_clf.py` with the correct path to the new pre-trained model that aligns with your computing environment
- Navigate to the directory `Neural-ordinary-differential-equations-based-Lyapunov-Barrier-Actor-Critic-NLBAC/Your_customized_environment/Your_customized_environment_RL_training`
- Run the command `python main.py --env Your_customized_environment --gamma_b 0.5 --max_episodes 200 --cuda --updates_per_step 2 --batch_size 256 --seed 0 --start_steps 200`. Change the arguments if necessary.

If you have any questions regarding the code or the paper, please do not hesitate to contact me by email at liqun.zhao@eng.ox.ac.uk.