This work is based on our paper. We propose a new framework, built on Large Language Models, for exploring unknown environments and searching for a target. Our work builds on SemExp and L3MVN and is implemented in PyTorch.

Authors: Bangguo Yu, Hamidreza Kasaei, and Ming Cao

Affiliation: University of Groningen

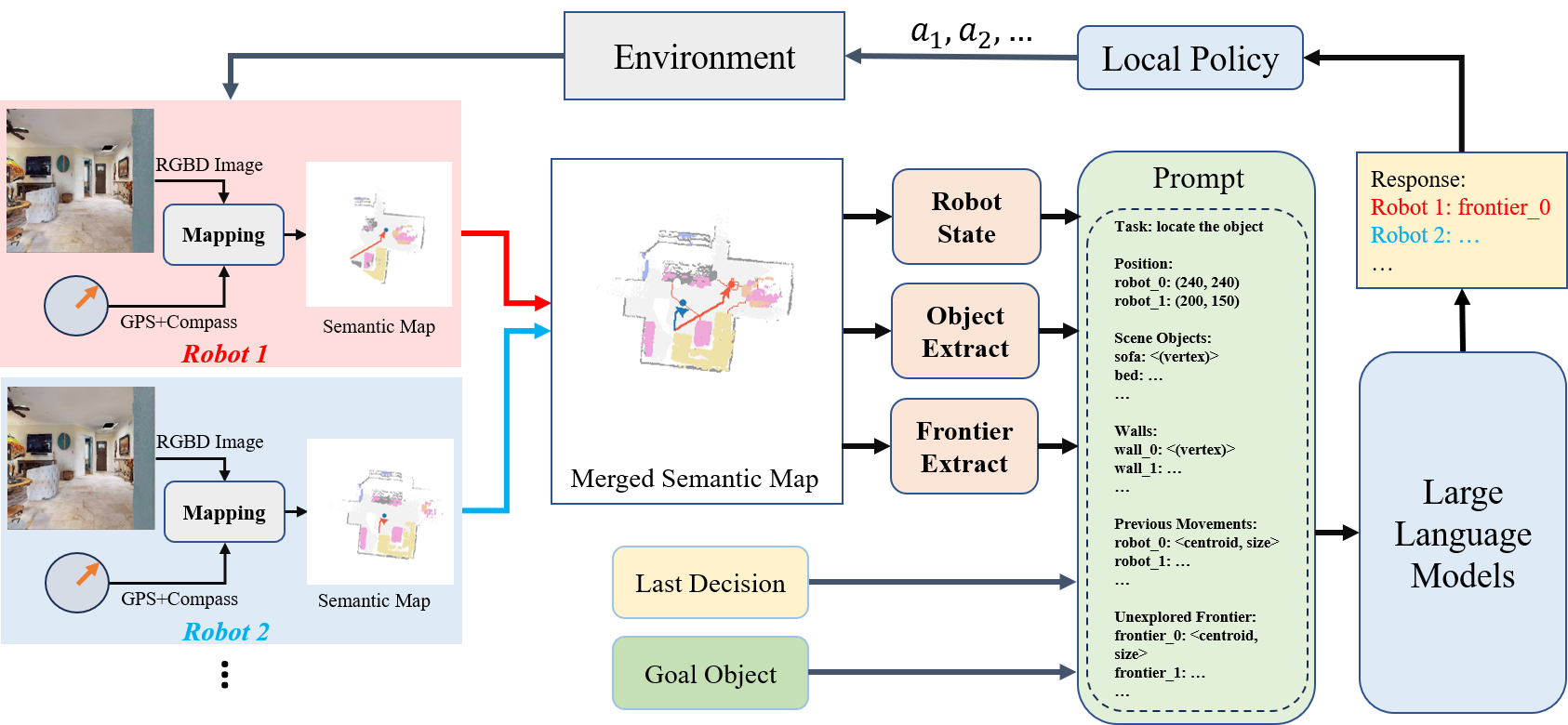

In advanced human-robot interaction tasks, visual target navigation is crucial for autonomous robots navigating unknown environments. While numerous approaches have been developed in the past, most are designed for single-robot operations, which often suffer from reduced efficiency and robustness due to environmental complexities. Furthermore, learning policies for multi-robot collaboration is resource-intensive. To address these challenges, we propose Co-NavGPT, an innovative framework that integrates Large Language Models (LLMs) as a global planner for multi-robot cooperative visual target navigation. Co-NavGPT encodes the explored environment data into prompts, enhancing LLMs’ scene comprehension. It then assigns exploration frontiers to each robot for efficient target search. Experimental results on Habitat-Matterport 3D (HM3D) demonstrate that Co-NavGPT surpasses existing models in success rates and efficiency without any learning process, demonstrating the vast potential of LLMs in multi-robot collaboration domains. The supplementary video, prompts, and code can be accessed via the following link: https://sites.google.com/view/co-navgpt.
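The prompt-and-assign loop described above can be illustrated with a minimal sketch. It is not the prompt format used in the paper (the actual prompts are on the project page); the function names `build_prompt` and `parse_assignment`, the text layout, and the nearest-frontier fallback are all assumptions made for illustration.

```python
import json
import math


def build_prompt(robots, frontiers, target):
    """Encode robot and frontier positions as a plain-text prompt (hypothetical format)."""
    lines = [f"Target object: {target}", "Robots (map cells):"]
    for name, (x, y) in robots.items():
        lines.append(f"- {name}: ({x}, {y})")
    lines.append("Frontiers (map cells):")
    for name, (x, y) in frontiers.items():
        lines.append(f"- {name}: ({x}, {y})")
    lines.append("Answer with a JSON object mapping each robot to one frontier.")
    return "\n".join(lines)


def parse_assignment(reply, robots, frontiers):
    """Parse the LLM reply; use the nearest frontier when an entry is missing or invalid."""
    try:
        proposed = json.loads(reply)
    except json.JSONDecodeError:
        proposed = {}
    if not isinstance(proposed, dict):
        proposed = {}
    assignment = {}
    for name, pos in robots.items():
        choice = proposed.get(name)
        if not isinstance(choice, str) or choice not in frontiers:
            # Assumed fallback: pick the frontier closest to this robot.
            choice = min(frontiers, key=lambda f: math.dist(pos, frontiers[f]))
        assignment[name] = choice
    return assignment
```

The string returned by `build_prompt` would be sent to the LLM, and its reply passed to `parse_assignment`. The fallback keeps every robot assigned to some frontier even when the reply is malformed.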

The code has been tested only with Python 3.10.8 and CUDA 11.7.

- Installing Dependencies

- We use adjusted versions of habitat-sim and habitat-lab as specified below:

- Installing habitat-sim:

git clone https://github.com/facebookresearch/habitat-sim.git

cd habitat-sim; git checkout tags/challenge-2022;

pip install -r requirements.txt;

python setup.py install --headless # (for headless machines; run this or the next line, not both)

python setup.py install # (for Mac OS)

- Installing habitat-lab:

git clone https://github.com/facebookresearch/habitat-lab.git

cd habitat-lab; git checkout tags/challenge-2022;

pip install -e .

Go back to the current repo and replace the habitat folder in the habitat-lab repo with the multi-robot version:

cp -r multi-robot-setting/habitat enter-your-path/habitat-lab

- Install PyTorch according to your system configuration. The code is tested on torch v2.0.1 and torchvision v0.15.2.
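To confirm that the installed packages match the tested versions, a small check like the following can help. This is a sketch using only the standard library; the helper name `check_versions` is hypothetical, and the comparison ignores local build tags such as `+cu117`.

```python
from importlib import metadata

# Versions this README reports as tested.
TESTED = {"torch": "2.0.1", "torchvision": "0.15.2"}


def check_versions(tested=TESTED):
    """Return {package: (installed_version_or_None, matches_tested_version)}."""
    report = {}
    for pkg, want in tested.items():
        try:
            have = metadata.version(pkg)
        except metadata.PackageNotFoundError:
            have = None
        # Compare only the part before any local build tag such as "+cu117".
        ok = have is not None and have.split("+")[0] == want
        report[pkg] = (have, ok)
    return report


if __name__ == "__main__":
    for pkg, (have, ok) in check_versions().items():
        status = "OK" if ok else "differs from tested version"
        print(f"{pkg}: installed={have} ({status})")
```

A mismatch does not necessarily mean the code will fail; it only means that combination was not the tested one.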

- Install detectron2 according to your system configuration. If you are using conda:

- Download HM3D_v0.2 datasets:

Download the HM3D dataset using the habitat-sim download utility and its instructions:

python -m habitat_sim.utils.datasets_download --username <api-token-id> --password <api-token-secret> --uids hm3d_minival_v0.2

- Download additional datasets

Download the segmentation model into the RedNet/model directory.

Clone the repository and install other requirements:

git clone https://github.com/ybgdgh/Co-NavGPT

cd Co-NavGPT/

pip install -r requirements.txt

The code requires the datasets in a data folder in the following format (same as habitat-lab):

    Co-NavGPT/
      data/
        scene_datasets/
        matterport_category_mappings.tsv
        object_norm_inv_perplexity.npy
        versioned_data
        objectgoal_hm3d/
          train/
          val/
          val_mini/
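Before running an evaluation, it can be useful to check that the data folder has the expected entries. The sketch below assumes that all listed entries sit directly under `data/` and that `train/`, `val/`, and `val_mini/` are nested under `objectgoal_hm3d/`; the helper name `missing_entries` is hypothetical.

```python
from pathlib import Path

# Expected entries relative to the data folder (nesting is an assumption).
EXPECTED = [
    "scene_datasets",
    "matterport_category_mappings.tsv",
    "object_norm_inv_perplexity.npy",
    "versioned_data",
    "objectgoal_hm3d/train",
    "objectgoal_hm3d/val",
    "objectgoal_hm3d/val_mini",
]


def missing_entries(data_dir):
    """Return the expected entries that do not exist under data_dir."""
    root = Path(data_dir)
    return [rel for rel in EXPECTED if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_entries("data")
    if missing:
        print("Missing entries:", ", ".join(missing))
    else:
        print("Data folder layout looks complete.")
```

Run it from the Co-NavGPT/ directory so that the relative path `data` resolves correctly.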

To evaluate the framework, set your OpenAI API key in exp_main_gpt.py, then run:

python exp_main_gpt.py
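As an alternative to writing the key directly into the script, the key can be read from an environment variable so that it never ends up in version control. This is a sketch only: how exp_main_gpt.py consumes the key is not shown in this README, and the helper name `load_openai_key` is hypothetical. `OPENAI_API_KEY` is the variable name conventionally read by the openai Python library.

```python
import os


def load_openai_key(env_var="OPENAI_API_KEY"):
    """Read the API key from the environment and fail early if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before running.")
    return key
```

The returned value could then be assigned wherever exp_main_gpt.py currently expects the hard-coded key.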