[arXiv] | [Codes]

Bin Ren1,2

1University of Pisa, Italy,

2University of Trento, Italy,

3Tencent AI Lab, China,

4University of Modena and Reggio Emilia, Italy,

5Beijing Jiaotong University, China

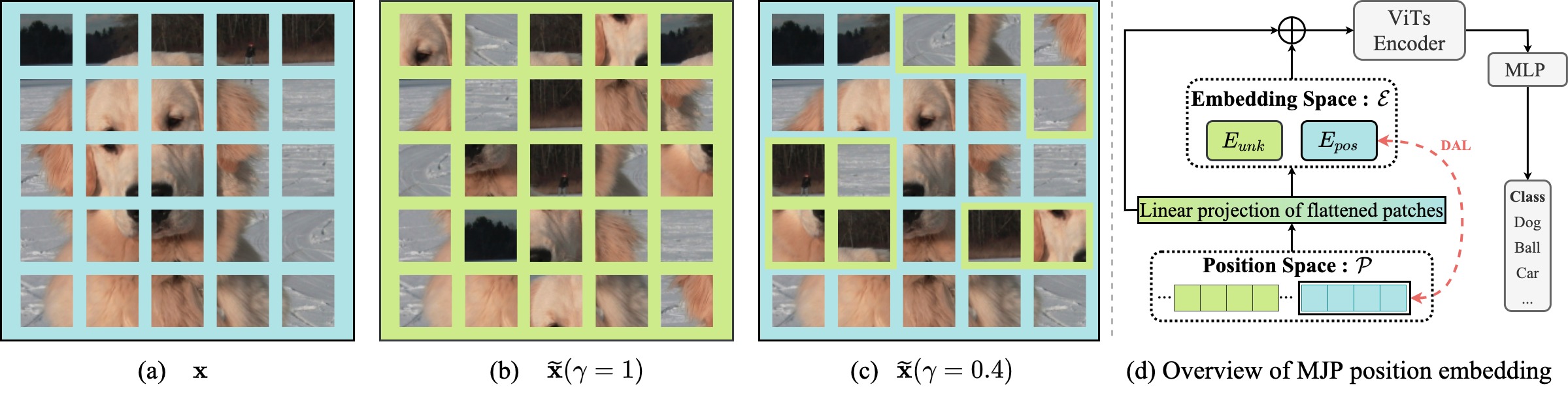

The main idea of the random jigsaw shuffle algorithm and an overview of the proposed MJP.

The repository offers the official implementation of our paper in PyTorch.

🦖 News (March 4, 2023): Our paper has been accepted to CVPR 2023!

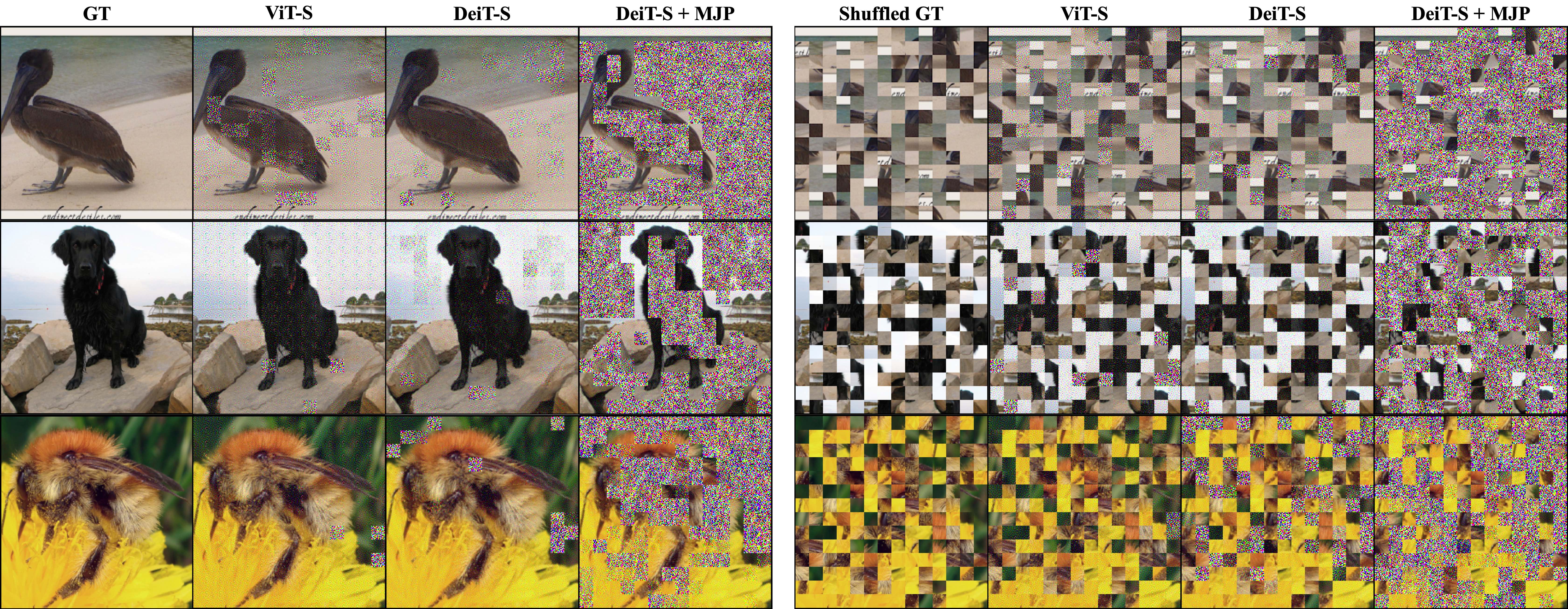

Position Embeddings (PEs), an arguably indispensable component in Vision Transformers (ViTs), have been shown to improve the performance of ViTs on many vision tasks. However, PEs have a potentially high risk of privacy leakage since the spatial information of the input patches is exposed. This caveat naturally raises a series of interesting questions about the impact of PEs on accuracy, privacy, prediction consistency, etc. To tackle these issues, we propose a Masked Jigsaw Puzzle (MJP) position embedding method. In particular, MJP first shuffles the selected patches via our block-wise random jigsaw puzzle shuffle algorithm, and their corresponding PEs are occluded. Meanwhile, for the non-occluded patches, the PEs remain the original ones but their spatial relation is strengthened via our dense absolute localization regressor. The experimental results reveal that 1) PEs explicitly encode the 2D spatial relationship and lead to severe privacy leakage problems under gradient inversion attack; 2) Training ViTs with the naively shuffled patches can alleviate the problem, but it harms the accuracy; 3) Under a certain shuffle ratio, the proposed MJP not only boosts the performance and robustness on large-scale datasets (i.e., ImageNet-1K and ImageNet-C, -A/O) but also improves the privacy preservation ability under typical gradient attacks by a large margin.
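To make the shuffle-and-occlude idea above more concrete, here is a minimal sketch in plain Python. It is not the repository's implementation: the function name `masked_jigsaw_shuffle` and its parameters are hypothetical, and it picks patches uniformly at random rather than block-wise as the paper describes. It only illustrates that a chosen fraction of patches is permuted among itself while the remaining patches keep their positions.

```python
import random


def masked_jigsaw_shuffle(num_patches, ratio, seed=None):
    """Simplified illustration (assumption: uniform, not block-wise, selection).

    Returns (order, occluded):
      order[i]    -- index of the source patch placed at position i
      occluded[i] -- True if position i took part in the shuffle, i.e. its
                     position embedding would be occluded
    """
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be in [0, 1]")
    rng = random.Random(seed)

    # Choose which patch positions take part in the shuffle.
    num_selected = int(num_patches * ratio)
    selected = sorted(rng.sample(range(num_patches), num_selected))

    # Permute the selected patches among the selected positions only.
    permuted = selected[:]
    rng.shuffle(permuted)

    order = list(range(num_patches))
    for dst, src in zip(selected, permuted):
        order[dst] = src

    occluded = [False] * num_patches
    for idx in selected:
        occluded[idx] = True
    return order, occluded


if __name__ == "__main__":
    # 14 x 14 = 196 patches, as for a 224 x 224 image with 16 x 16 patches.
    order, occluded = masked_jigsaw_shuffle(196, ratio=0.25, seed=0)
    print(sum(occluded), "of", len(order), "positions are shuffled and occluded")
```

In a real model, `order` would be used to reorder the patch tokens and `occluded` to decide which tokens do not receive their original position embedding.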

| Dataset | Download Link |
|---|---|
| ImageNet | train, val |

- Download the datasets using the code in the `scripts` folder.

    dataset_name
    |__train
    |  |__category1
    |  |  |__xxx.jpg
    |  |  |__...
    |  |__category2
    |  |  |__xxx.jpg
    |  |  |__...
    |  |__...
    |__val
       |__category1
       |  |__xxx.jpg
       |  |__...
       |__category2
       |  |__xxx.jpg
       |  |__...
       |__...
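Before starting a long training run, it can help to confirm that the dataset folder matches the layout above. The following is a small sketch, not part of the repository: the helper name `summarize_split` and the set of accepted image suffixes are assumptions made for illustration.

```python
from pathlib import Path

# Assumption: images use one of these extensions.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def summarize_split(root, split):
    """Count images per category folder under <root>/<split>."""
    split_dir = Path(root) / split
    if not split_dir.is_dir():
        raise FileNotFoundError(f"missing split folder: {split_dir}")

    counts = {}
    for class_dir in sorted(p for p in split_dir.iterdir() if p.is_dir()):
        counts[class_dir.name] = sum(
            1
            for f in class_dir.iterdir()
            if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts


if __name__ == "__main__":
    import sys

    # Usage: python check_dataset.py /path/to/dataset_name
    root = sys.argv[1] if len(sys.argv) > 1 else "dataset_name"
    for split in ("train", "val"):
        counts = summarize_split(root, split)
        print(f"{split}: {len(counts)} categories, {sum(counts.values())} images")
```

A category that reports zero images usually points to an incomplete download or an unexpected file extension.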

You can find our pretrained checkpoints and 999 images sampled from ImageNet for attacking here.

After preparing the datasets, start training on 8 NVIDIA V100 GPUs:

$ sh train.sh

- Accuracy on Masked Jigsaw Puzzle

$ python3 eval.py

- Consistency on Masked Jigsaw Puzzle

$ python3 consistency.py

- Evaluations on image reconstructions

See the code for MSE, PSNR/SSIM, FFT2D, and LPIPS.
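For readers unfamiliar with these metrics, the two simplest ones can be sketched in a few lines of plain Python. This is an illustration using the standard definitions, not the repository's evaluation code; the function names and the flat-list input format are assumptions. For a gradient attack, a higher MSE and a lower PSNR between the original and the recovered image indicate a less faithful reconstruction.

```python
import math


def mse(reference, reconstruction):
    """Mean squared error between two equally sized flat pixel sequences."""
    if len(reference) != len(reconstruction) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - b) ** 2 for a, b in zip(reference, reconstruction)) / len(reference)


def psnr(reference, reconstruction, max_value=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value^2 / MSE).

    max_value is the largest possible pixel value (1.0 for images scaled
    to [0, 1], 255 for 8-bit images).
    """
    error = mse(reference, reconstruction)
    if error == 0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(max_value ** 2 / error)


if __name__ == "__main__":
    original = [0.0, 1.0, 0.5, 0.25]
    recovered = [0.1, 0.9, 0.5, 0.30]
    print("MSE :", mse(original, recovered))
    print("PSNR:", psnr(original, recovered))
```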

Visual comparisons on image recovery with gradient attacks.

We refer to the public repo: JonasGeiping/breaching.

This repo is built on several existing projects:

If you use our code or find our paper useful, please cite our paper:

@article{ren2023masked,

author = {Ren, Bin and Liu, Yahui and Song, Yue and Bi, Wei and Cucchiara, Rita and Sebe, Nicu and Wang, Wei},

title = {Masked Jigsaw Puzzle: A Versatile Position Embedding for Vision Transformers},

journal = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2023}

}

If you have any questions, please feel free to contact us (yahui.cvrs AT gmail.com or bin.ren AT unitn.it).