University of Pennsylvania, CIS 565: GPU Programming and Architecture, Final Project

Chang Liu (LinkedIn | Personal Website), Alex Fu (LinkedIn | Personal Website) and Yilin Liu (LinkedIn | Personal Website)

Tested on a personal laptop: i7-12700 @ 4.90GHz with 16GB RAM, RTX 3070 Ti Laptop 8GB

This is our final project for UPenn's CIS 565 GPU Programming and Architecture course. Our motivation and goal is to explore the possibility of real-time global illumination based mainly on path tracing techniques. After one month of trial and effort, our renderer has taken shape as a real-time path tracer based on Vulkan RT and the ReSTIR algorithms. In our renderer, we have implemented ReSTIR DI, ReSTIR GI and a denoiser.

- Real-time direct illumination based on ReSTIR DI
- Real-time indirect illumination based on ReSTIR GI
- Denoiser
- Tessellation Free Displacement Mapping (independent implementation on branch displacement map)

Reservoir sampling is another technique for sampling a discrete distribution, besides rejection sampling, CDF inversion and alias tables. It allows a single sample to be drawn from multiple candidates at

Resampled Importance Sampling (RIS) can be regarded as an extension of importance sampling. For an estimator in Monte Carlo integration:

$$
I=\frac{1}{N}\sum_{i=1}^{N}\frac{f(X_i)}{p(X_i)}
$$

where

In real-time ray tracing, the most important factor is probably the number of samples: more samples give higher quality, but at the cost of lower performance. Because reservoirs can be easily merged, samples can be reused across frames or across space. If reservoirs are stored in screen space, we can operate on them just as we do in temporal anti-aliasing and filtering: we can find past reservoirs in previous frames using reprojection, or take reservoirs from neighboring pixels. ReSTIR is exactly the algorithm that does this. By combining reservoir reuse with RIS, ReSTIR (ReSTIR DI in particular) can account for the contribution of many candidate paths while performing only one intersection test.
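The streaming and merging operations described above can be sketched as follows. This is a minimal CPU-side illustration, not the renderer's shader code: the `Reservoir` struct, its field names and the convention of passing the uniform random number `u` explicitly are all assumptions made for the example. The merge follows the usual formulation in which a neighbor's sample re-enters the stream with weight `pHat * W * M`.

```cpp
#include <cassert>

// Hypothetical single-sample reservoir; names are illustrative only.
struct Reservoir {
    int   sample = -1;   // index of the currently selected candidate
    float wSum   = 0.0f; // running sum of resampling weights
    int   M      = 0;    // number of candidates seen so far
    float W      = 0.0f; // unbiased contribution weight of the kept sample

    // Stream one candidate with resampling weight w = pHat(x) / p(x).
    // u is a uniform random number in [0, 1).
    void update(int candidate, float w, float u) {
        assert(w >= 0.0f && u >= 0.0f && u < 1.0f);
        wSum += w;
        M += 1;
        // Keep the new candidate with probability w / wSum.
        if (u * wSum < w) sample = candidate;
    }

    // Compute W = wSum / (M * pHat(sample)) once streaming is done.
    void finalize(float pHat) {
        W = (pHat > 0.0f && M > 0) ? wSum / (static_cast<float>(M) * pHat) : 0.0f;
    }

    // Merge another (finalized) reservoir as if its M candidates had been
    // streamed here. pHatHere is the target function of other's sample
    // evaluated at the pixel that owns *this* reservoir.
    void merge(const Reservoir& other, float pHatHere, float u) {
        update(other.sample, pHatHere * other.W * static_cast<float>(other.M), u);
        M += other.M - 1; // update() already counted one candidate
    }
};
```

After merging, `finalize` would be called again with the target function of the surviving sample, and the pixel is shaded with that one sample weighted by `W`.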

Our ray tracing pipeline can be divided into three main stages: the direct stage, the indirect stage and the denoiser stage. In the direct stage, the renderer generates the G-Buffer and the reservoirs for direct illumination, and performs direct ReSTIR. In the indirect stage, we do path tracing to collect new indirect samples and evaluate indirect illumination. The last stage filters the results generated by the previous stages and finally composes the noise-free output.

All of our later ReSTIR and denoising passes require screen-space geometry information, so we need to generate a G-Buffer. Currently, our G-Buffer is generated together with the direct reservoirs in the same first pass. To reduce bandwidth usage, the G-Buffer components are compressed with different techniques. Here is our G-Buffer layout:

We keep the depth as a full 32-bit float for sufficient precision when it is used for indirect ReSTIR, and compress the remaining components that don't require much precision, such as albedo. The material ID is hashed to 8 bits. Also, our motion vector is stored as integer coordinates.

Compared to the uncompressed layout, this saves a total of 56 - 20 = 36 bytes per pixel.
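To make the kind of packing described above concrete, here is a small sketch. It is not our actual 20-byte layout: the choice to share one 32-bit word between an 8-bit-per-channel albedo and the 8-bit material hash, the hash function itself, and the 16-bit signed motion components are all assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Quantize a float in [0, 1] to 8 bits.
inline uint32_t toUnorm8(float v) {
    float c = std::min(std::max(v, 0.0f), 1.0f);
    return static_cast<uint32_t>(std::lround(c * 255.0f));
}

// Fold a 32-bit material ID into 8 bits (hypothetical hash; collisions are possible).
inline uint32_t hashMaterialId8(uint32_t id) {
    id ^= id >> 16;
    id *= 0x45d9f3bu;
    id ^= id >> 16;
    return id & 0xFFu;
}

// Albedo RGB in the low 24 bits, material hash in the top 8 bits.
inline uint32_t packAlbedoMaterial(float r, float g, float b, uint32_t materialId) {
    return toUnorm8(r) | (toUnorm8(g) << 8) | (toUnorm8(b) << 16) |
           (hashMaterialId8(materialId) << 24);
}

// Recover one albedo channel (0 = R, 1 = G, 2 = B) as a float in [0, 1].
inline float unpackChannel(uint32_t packed, int channel) {
    return static_cast<float>((packed >> (8 * channel)) & 0xFFu) / 255.0f;
}

// Motion vector in whole pixels, stored as two signed 16-bit integers.
inline uint32_t packMotion(int dx, int dy) {
    assert(dx >= -32768 && dx <= 32767 && dy >= -32768 && dy <= 32767);
    return (static_cast<uint32_t>(dx) & 0xFFFFu) |
           ((static_cast<uint32_t>(dy) & 0xFFFFu) << 16);
}

inline int unpackMotionX(uint32_t p) {
    return static_cast<int16_t>(static_cast<uint16_t>(p & 0xFFFFu));
}

inline int unpackMotionY(uint32_t p) {
    return static_cast<int16_t>(static_cast<uint16_t>(p >> 16));
}
```

With such a scheme, albedo plus material ID and the motion vector each fit in 4 bytes, while the depth stays an uncompressed 4-byte float.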

We implemented ReSTIR DI [B. Bitterli et al., SIG 2020] for our direct illumination.

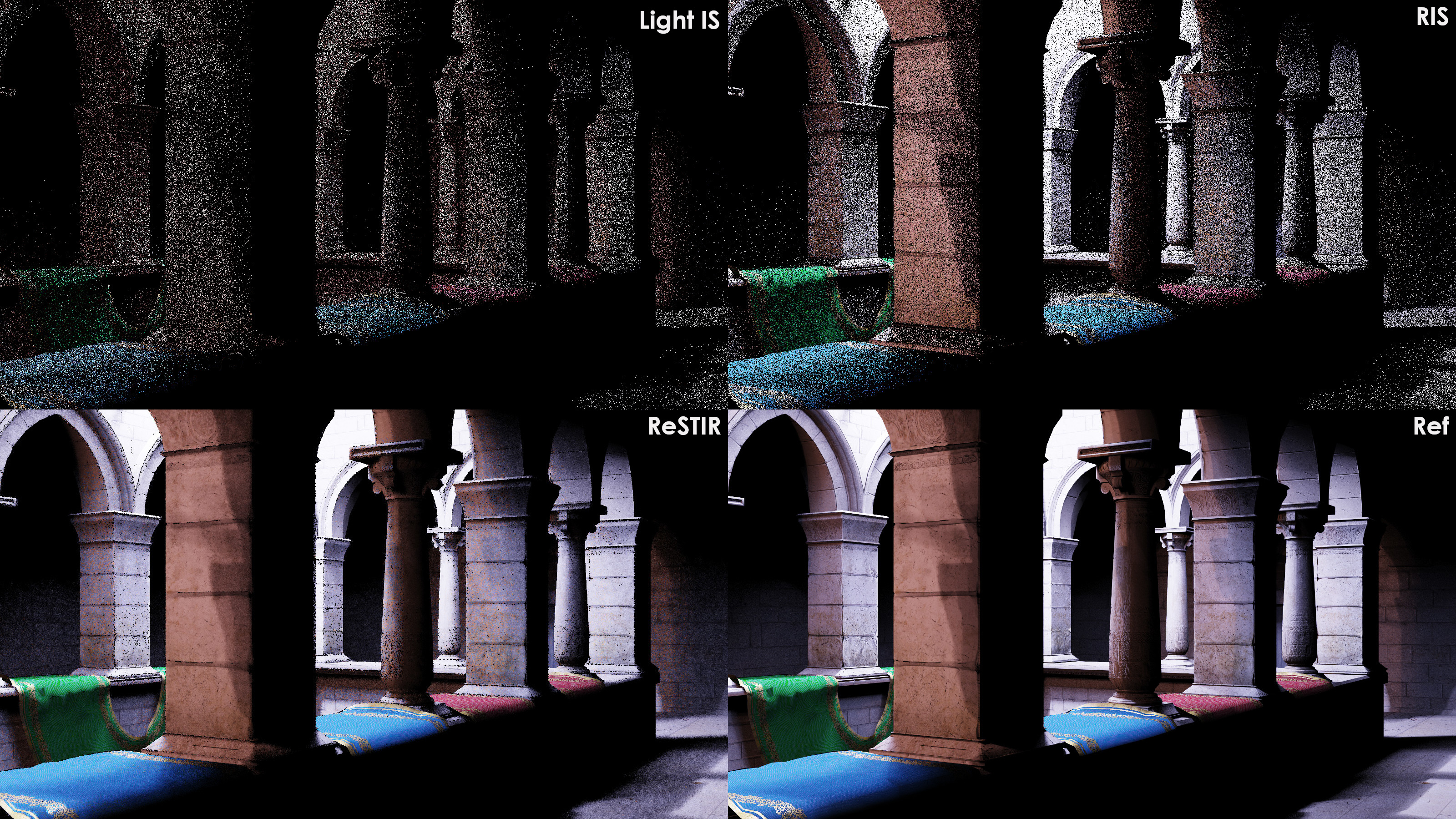

The effect of ReSTIR DI, rendered by a separate CUDA program we developed to validate ReSTIR before implementing it in this renderer.

In our Vulkan renderer, we traded quality for speed in order to achieve real-time performance.

However, unlike the original paper, we made several modifications to make it more suitable for real-time path tracing:

- First, we observed that, to keep the output of ReSTIR reasonably stable over time, the number of RIS candidates drawn in each ray tracing pass does not have to be very large (e.g., M = 32 in the paper). M = 4 is enough.

- Second, for reservoir clamping, we clamp the M of a valid temporally neighboring reservoir to about 80x that of the current one. This helps our direct illumination adapt quickly to camera changes while remaining stable.

- Last, we decided not to use spatial resampling in our final implementation. This is mostly because spatial resampling is time consuming, and our implementation of it didn't yield a significant improvement in quality. We would rather use a spatial denoiser after temporal reuse.
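The M clamping from the second point can be sketched as below. The struct and function names are hypothetical; only the 80x ratio and the idea of capping the history reservoir's M come from the text above. The second helper shows why the cap matters: M directly scales the weight that the history sample carries into the merge.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical minimal reservoir: only the fields needed for this illustration.
struct Reservoir {
    int   sample; // selected candidate (e.g. a light sample index)
    float W;      // unbiased contribution weight
    int   M;      // number of candidates this reservoir represents
};

// Cap the confidence of a reprojected history reservoir relative to the
// current frame's reservoir, so stale samples cannot dominate forever.
inline void clampHistory(Reservoir& history, const Reservoir& current, int maxRatio = 80) {
    assert(maxRatio > 0);
    history.M = std::min(history.M, maxRatio * current.M);
}

// Resampling weight the history sample carries when merged into the current
// reservoir: target function at the current pixel times W times M.
inline float historyMergeWeight(const Reservoir& history, float pHatAtCurrentPixel) {
    return pHatAtCurrentPixel * history.W * static_cast<float>(history.M);
}
```

With M = 4 new candidates per frame, a history reservoir is capped at M = 320 under these assumptions, so newly generated candidates always retain a non-negligible chance of replacing the reused sample.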

For indirect illumination, we implemented ReSTIR GI [S. Ouyang et al., HPG 2021]. The input samples to indirect reservoir are generated by standard path tracing with Multiple Importance Sampling. Similar to DI, we did not use spatial resampling.

ReSTIR GI is different from DI when generating samples to put into reservoir. In ReSTIR DI, sampling a light path is simple, because once we get the first intersection point from camera we can pick a point on light source and connect it to the intersection point to evaluate

| No ReSTIR | ReSTIR |
|---|---|
| | |

For indirect illumination, sample reuse greatly improves image quality, even though we only add one sample to the reservoir in each iteration.

Because indirect lighting requires tracing longer paths and performing more occlusion tests, we observed that tracing it is much slower than tracing direct lighting. For example, before we optimized indirect lighting, rendering the Bistro Exterior scene took 3.9 ms for direct and 25 ms for indirect.

Spending that much time on a relatively insignificant component is not efficient. Indirect illumination usually varies at a lower frequency, under the assumption that most surfaces in a scene are diffuse. Since the variation is not very sharp, we can trace fewer rays. In our path tracer, we reduce the resolution of indirect lighting to 1/4 that of direct lighting. The subsequent denoising of indirect lighting also runs at this resolution, which greatly reduces denoising time.

In addition, as suggested in the ReSTIR GI paper, we use Russian roulette at block level to decide whether to trace longer paths. Only 25% of rays are allowed to trace multiple bounces, while the remaining 75% trace a single bounce.
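A block-level decision of this kind could look like the sketch below. The tile size, the hash used to obtain a per-tile random number and the function names are assumptions for illustration; only the 25% probability and the idea that a whole block shares one decision come from the text.

```cpp
#include <cassert>
#include <cstdint>

// PCG-style integer hash, commonly used for per-pixel randomness in shaders.
inline uint32_t pcgHash(uint32_t v) {
    uint32_t state = v * 747796405u + 2891336453u;
    uint32_t word = ((state >> ((state >> 28u) + 4u)) ^ state) * 277803737u;
    return (word >> 22u) ^ word;
}

constexpr int   kTileSize = 8;                   // hypothetical block size in pixels
constexpr float kMultiBounceProbability = 0.25f; // 25% of blocks trace long paths

// All pixels in a tile share one roulette decision per frame, which keeps
// neighboring threads on similar path lengths.
inline bool tileTracesMultiBounce(int px, int py, uint32_t frame) {
    assert(px >= 0 && py >= 0);
    uint32_t tx = static_cast<uint32_t>(px / kTileSize);
    uint32_t ty = static_cast<uint32_t>(py / kTileSize);
    uint32_t h = pcgHash(tx + pcgHash(ty + pcgHash(frame)));
    float u = static_cast<float>(h >> 8) * (1.0f / 16777216.0f); // 24 bits -> [0, 1)
    return u < kMultiBounceProbability;
}

// Path depth budget for a pixel: the full depth for surviving tiles, one bounce otherwise.
inline int maxBouncesForPixel(int px, int py, uint32_t frame, int maxDepth) {
    return tileTracesMultiBounce(px, py, frame) ? maxDepth : 1;
}
```

In standard Russian roulette, the contribution gathered beyond the first bounce would be divided by the survival probability to keep the estimate unbiased; whether and where that compensation is applied is left out of this sketch.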

Both ReSTIR DI and GI already reuse temporally neighboring samples, which gives us reasonably stable results over time. Therefore, when it comes to denoising, we don't necessarily need a spatiotemporal denoiser like SVGF, not to mention that temporally reused outputs from ReSTIR are correlated and prone to artifacts if denoised temporally.

Just as in project 4, our denoising process is logically divided into three stages: demodulation, filtering and remodulation. We divide the output of ReSTIR by the screen-space albedo (this can be done with the simple trick of setting the surface albedo to 1), and apply tone mapping to compress radiance values into a range that the denoiser can handle well.
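The demodulation, tone mapping and remodulation steps can be sketched as follows. The text does not name the tone mapping operator, so the Reinhard-style curve `x / (1 + x)` used here is an assumption, as are the `Vec3` type, the epsilon guarding against black albedo and the function names.

```cpp
#include <algorithm>
#include <cassert>

struct Vec3 { float x, y, z; };

constexpr float kAlbedoEps = 1e-3f; // hypothetical guard against division by zero

// Remove surface texture detail so the filter only sees lighting.
inline Vec3 demodulate(Vec3 radiance, Vec3 albedo) {
    return { radiance.x / std::max(albedo.x, kAlbedoEps),
             radiance.y / std::max(albedo.y, kAlbedoEps),
             radiance.z / std::max(albedo.z, kAlbedoEps) };
}

// Multiply the albedo back in after filtering.
inline Vec3 remodulate(Vec3 filtered, Vec3 albedo) {
    return { filtered.x * std::max(albedo.x, kAlbedoEps),
             filtered.y * std::max(albedo.y, kAlbedoEps),
             filtered.z * std::max(albedo.z, kAlbedoEps) };
}

// Compress [0, inf) into [0, 1) before filtering (assumed Reinhard-style curve).
inline float toneMap(float v) {
    assert(v >= 0.0f);
    return v / (1.0f + v);
}

// Exact inverse of toneMap, applied after filtering.
inline float inverseToneMap(float v) {
    assert(v >= 0.0f && v < 1.0f);
    return v / (1.0f - v);
}
```

Per pixel, the order under these assumptions is: demodulate, tone map each channel, filter, inverse tone map, remodulate.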

The direct and indirect components are filtered separately and merged after filtering. For direct illumination we use a 4-level wavelet filter, since the input is already smooth. For indirect illumination we use a 5-level wavelet filter to reduce flickering. Also, because large radiance values are hard to filter, we apply tone mapping before filtering and invert it after filtering.
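As an illustration of the multi-level wavelet filter, here is a one-dimensional À-Trous sketch. The real filter runs in 2D on the GPU; the 5-tap B3-spline kernel, the depth-only edge-stopping term and the parameter names are assumptions made to keep the example short.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// One A-Trous pass: a 5-tap kernel whose taps are spaced 2^level pixels apart.
// Neighbors with a very different depth are down-weighted so edges survive.
inline std::vector<float> atrousPass(const std::vector<float>& color,
                                     const std::vector<float>& depth,
                                     int level, float sigmaDepth) {
    assert(color.size() == depth.size() && level >= 0 && sigmaDepth > 0.0f);
    static const float kernel[5] = {1.0f / 16, 1.0f / 4, 3.0f / 8, 1.0f / 4, 1.0f / 16};
    const int n = static_cast<int>(color.size());
    const int step = 1 << level;
    std::vector<float> out(color.size());
    for (int i = 0; i < n; ++i) {
        float sum = 0.0f, wSum = 0.0f;
        for (int k = -2; k <= 2; ++k) {
            int j = std::min(std::max(i + k * step, 0), n - 1); // clamp at borders
            float dz = depth[j] - depth[i];
            float w = kernel[k + 2] * std::exp(-(dz * dz) / (sigmaDepth * sigmaDepth));
            sum += w * color[j];
            wSum += w;
        }
        out[i] = sum / wSum; // wSum >= 3/8 because the center tap has dz = 0
    }
    return out;
}

// Run `levels` passes with doubling tap spacing (e.g. 4 for direct, 5 for indirect).
inline std::vector<float> atrousFilter(std::vector<float> color,
                                       const std::vector<float>& depth,
                                       int levels, float sigmaDepth) {
    for (int level = 0; level < levels; ++level)
        color = atrousPass(color, depth, level, sigmaDepth);
    return color;
}
```

Each extra level doubles the tap spacing, so a 5-level filter reaches a much wider neighborhood than a 4-level one at the cost of one more pass.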

| | Demodulated Input | Denoised + Remodulated |
|---|---|---|
| Direct | | |
| Indirect | | |
| Combined | | |

Towards the end of this project, we also tried a bilateral filter, which takes only one pass. It turned out that this filter worked well for direct illumination and ran faster, but at the cost of lower quality.

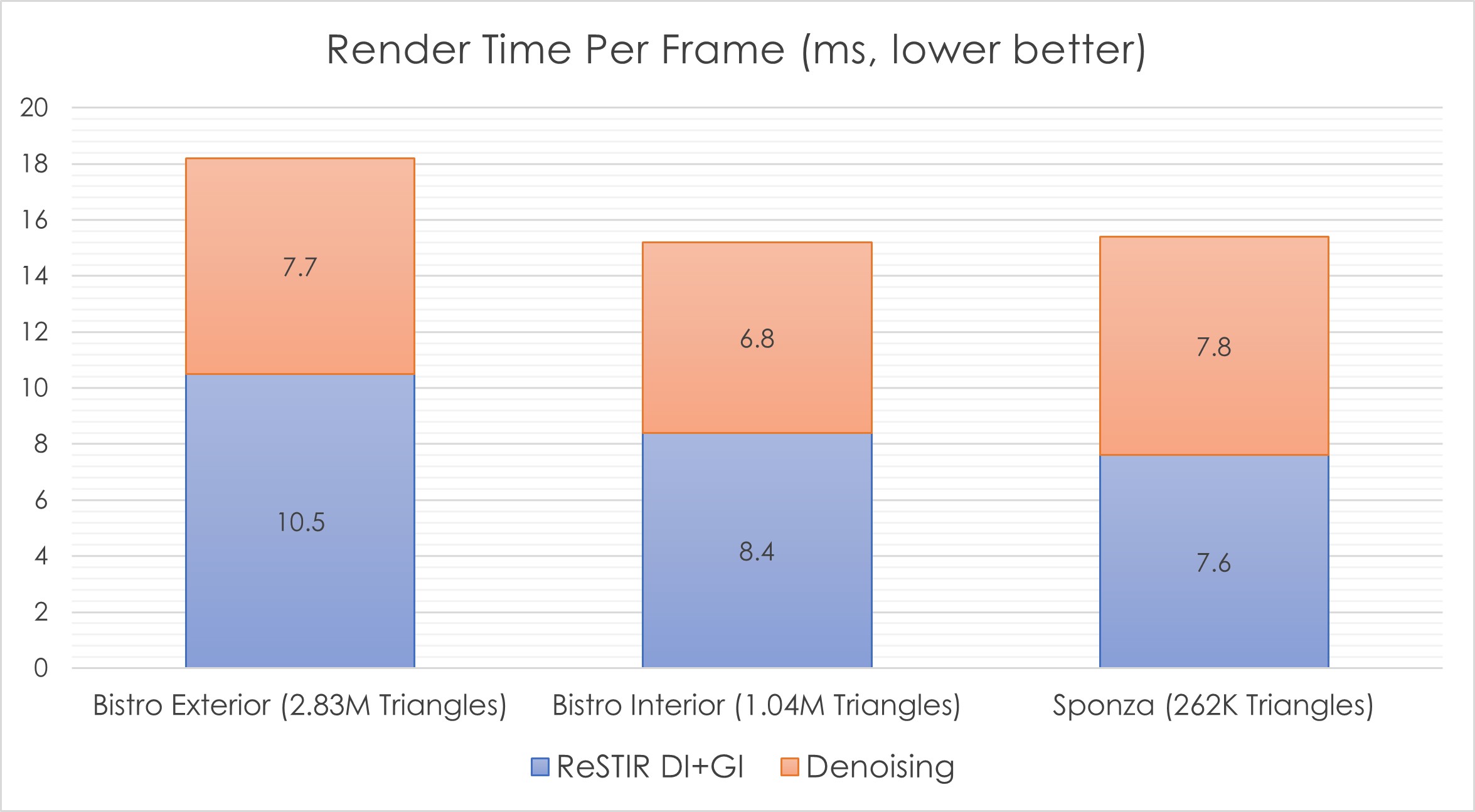

We tested our path tracer on three scenes at 1920x1080, with an indirect tracing depth of 4. Here is our result:

As the figure shows, our path tracer is able to render a large scene (such as Bistro Exterior, which has 2.83 million triangles) within 20 ms (~50 FPS), which meets our performance expectations. However, the denoiser takes about 8 ms, which still leaves room for optimization.

Even though we managed to implement so many features, our project is far from perfect. There is still a long way to go before it gets close to production level. Here are some ideas we have come up with to improve our path tracer.

Following ReSTIR DI, Rearchitecting Spatiotemporal Resampling for Production [C. Wyman et al., HPG 2021] set out to improve it. It points out that the bottleneck of the original resampling algorithm lies in the sampling of light sources, which relies on the use of an alias table. Although alias table has the advantage of

Our work is currently based on the earlier ReSTIR papers from 2020 and 2021. There is also more recent research on ReSTIR, Generalized Resampled Importance Sampling [D. Lin et al., SIG 2022], which extends ReSTIR DI from

We are looking forward to implementing this in our path tracer.

For this project, we did not have much time for the denoiser implementation. The A-Trous filter we chose works reasonably well, but we are still not fully satisfied with it.

For example, it is likely to produce an overly blurred indirect image because it treats all filter points as having the same spatiotemporal variance. As we know, the more advanced SVGF algorithm uses variance to drive the A-Trous kernel during filtering. So is it possible to derive a variance estimator from the ReSTIR process itself? This would be an interesting topic to work on.

In addition, NVIDIA has a post about a denoiser specially designed to fit the characteristics of ReSTIR: the ReLAX denoiser, which derives from SVGF but is carefully modified to handle the correlated patterns introduced by spatiotemporal reuse.

We wanted Tessellation Free Displacement Mapping to work with our ReSTIR PTGI to produce gorgeous, highly detailed frames, and we appreciated the novelty of its idea. However, since it cannot make good use of the current RTX pipeline, it would introduce a large performance cost in our ReSTIR pipeline. Therefore, we only implemented it as a separate feature. In the future, when more customized acceleration structures and shaders are allowed in the RTX pipeline, it could make a difference.