Project Page | ArXiv | Paper | Demo video

This repository includes download instructions for the datasets and the evaluation code used in the following papers.

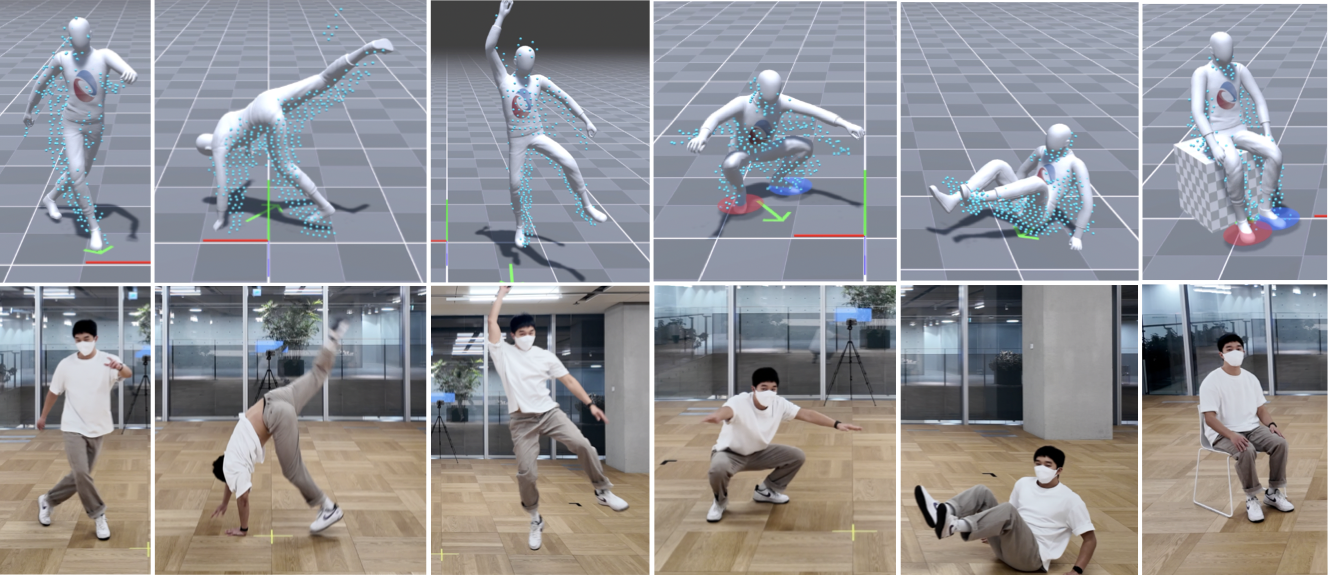

- ELMO: Enhanced Real-time LiDAR Motion Capture through Upsampling, ACM Transactions on Graphics (Proc. SIGGRAPH ASIA), 2024

- MOVIN: Real-time Motion Capture using a Single LiDAR, Computer Graphics Forum (Proc. Pacific Graphics), 2023

- Python 3.8+

- numpy

- scipy

- pandas

- h5py

- matplotlib

Clone this repository and create environment:

git clone https://github.com/MOVIN3D/ELMO_SIGASIA2024.git

cd ELMO_SIGASIA2024

conda create -n elmo python=3.9

conda activate elmo

Install the other dependencies:

pip install -r requirements.txt

We construct a high-quality, temporally and spatially synchronized single-LiDAR motion capture dataset.

- ELMO dataset: 20 subjects (12 males / 8 females). 17 subjects for train and 3 subjects for test.

- MOVIN dataset: 10 subjects (4 males / 6 females). 8 subjects for train and 2 subjects for test.

To download the ELMO dataset (17.44GB) and the MOVIN dataset (2.33GB), run the following commands:

bash download.sh ELMO

bash download.sh MOVIN

The file structure should look like this:

ELMO_SIGASIA2024/
└── datasets
    ├── ELMO_dataset
    │   ├── test
    │   │   ├── 1201_172_W
    │   │   │   ├── lidar
    │   │   │   │   ├── Locomotion.h5
    │   │   │   │   └── Static.h5
    │   │   │   └── mocap
    │   │   │       ├── Locomotion.bvh
    │   │   │       └── Static.bvh
    │   │   ├── ...
    │   │
    │   └── train
    │       ├── 0905_155_F
    │       ...
    │
    ├── MOVIN_dataset
    │   ├── test
    │   │   ├── 162_F
    │   │   │   ├── lidar
    │   │   │   │   ├── Locomotion.h5
    │   │   │   │   └── Static.h5
    │   │   │   └── mocap
    │   │   │       ├── Locomotion.bvh
    │   │   │       └── Static.bvh
    │   │   ├── ...
    │   │
    │   └── train
    │       ├── 157_F
    │       ...
    ...
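Given this layout, each LiDAR file has a mocap file with the same stem under the sibling `mocap` folder. The following is a minimal sketch, not part of the repository, that walks a dataset directory and pairs the two. The names `Clip` and `iter_clips` are hypothetical, and the sketch assumes every subject folder follows the `lidar/<name>.h5` and `mocap/<name>.bvh` pattern shown above.

```python
from pathlib import Path
from typing import Iterator, NamedTuple


class Clip(NamedTuple):
    """One paired recording (hypothetical helper type, not from the repo)."""
    split: str     # "train" or "test"
    subject: str   # subject folder name, e.g. "1201_172_W"
    name: str      # clip name, e.g. "Locomotion"
    lidar: Path    # <subject>/lidar/<name>.h5
    mocap: Path    # <subject>/mocap/<name>.bvh


def iter_clips(dataset_dir: Path) -> Iterator[Clip]:
    """Yield every clip that has both a LiDAR file and a mocap file."""
    for split in ("train", "test"):
        split_dir = dataset_dir / split
        if not split_dir.is_dir():
            continue
        subjects = sorted(p for p in split_dir.iterdir() if p.is_dir())
        for subject_dir in subjects:
            for lidar in sorted((subject_dir / "lidar").glob("*.h5")):
                # Assumed pairing rule: same file stem in the mocap folder.
                mocap = subject_dir / "mocap" / (lidar.stem + ".bvh")
                if mocap.is_file():
                    yield Clip(split, subject_dir.name, lidar.stem, lidar, mocap)


if __name__ == "__main__":
    for clip in iter_clips(Path("datasets/ELMO_dataset")):
        print(clip.split, clip.subject, clip.name)
```

LiDAR files without a matching BVH file are skipped silently, which keeps the iterator usable on a partially downloaded dataset.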

The dataset includes the following:

- *.bvh: 60 Hz motion capture files containing 21 joints.

- *.h5: LiDAR 3D point cloud data (HDF5).
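As a quick sanity check on a downloaded BVH file, the header can be read without any third-party library. The sketch below is not the repository's loader. `BvhSummary` and `summarize_bvh` are hypothetical names, and it assumes the standard BVH layout (`ROOT`/`JOINT` entries in the hierarchy, then `Frames:` and `Frame Time:` lines). Whether the 21 joints stated above count the root is also an assumption here.

```python
from typing import Iterable, NamedTuple


class BvhSummary(NamedTuple):
    joints: int        # number of ROOT + JOINT entries (End Sites excluded)
    frames: int        # value of the "Frames:" line
    frame_time: float  # seconds per frame, from the "Frame Time:" line


def summarize_bvh(lines: Iterable[str]) -> BvhSummary:
    """Read only the header fields; stop before the per-frame motion rows."""
    joints, frames, frame_time = 0, 0, 0.0
    for raw in lines:
        line = raw.strip()
        if line.startswith(("ROOT ", "JOINT ")):
            joints += 1
        elif line.startswith("Frames:"):
            frames = int(line.split(":", 1)[1])
        elif line.startswith("Frame Time:"):
            frame_time = float(line.split(":", 1)[1])
            break  # motion data follows; nothing more to read
    return BvhSummary(joints, frames, frame_time)
```

Passing an open file object, for example `summarize_bvh(open(path))`, works because files iterate line by line. For a 60 Hz recording the reported frame time should be close to 1/60 s.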

- ELMO dataset

| Range of Motion | |
|---|---|
| Freely rotating an individual joint/joints | in place & while moving |

| Static Movements | Locomotion |
|---|---|
| T-pose, A-pose, Idle, Look, Roll head | Normal walk, Walk with free upper-body motions |
| Elbows bent up & down, Stretch arms | Normal Jog, Jog with free upper-body motions |
| Bow, Touch toes, Lean, Rotate arms | Normal Run, Run with free upper-body motions |
| Hands on waist, Twist torso, Hula hoop | Normal Crouch, Crouch with free upper-body motions |
| Lunge, Squat, Jumping Jack, Kick, Lift knee | Transitions with changing pace |
| Turn in place, Walk in place, Run in place | Moving backward with changing pace |
| Sit on the floor | Jump (one-legged, both-legged, running, ...) |

- MOVIN dataset

| Static | Locomotion |
|---|---|
| T-pose, A-pose, Idle, Hands on waist | Walking |
| Elbows bent up, down | Jogging |
| Bow, Look, Roll head | Running |
| Windmill arms, Touch toes | Crouching |
| Twist torso, Hula hoop, Lean | Transitions |
| Lunge, Squat, Jumping Jack | Moving backward |
| Kick, Turn | Jumping |
| Walk / Run in place | Sitting on the floor |

To load and visualize the datasets, you can use the viz_mocap_pcd.py script.

This script allows you to load both the motion capture data (BVH) and the corresponding LiDAR point cloud data (HDF5), and visualize them together.

- Ensure you have the required datasets in the appropriate directories.

- Run the script using Python. For example:

python viz_mocap_pcd.py --bvh ./datasets/ELMO_dataset/test/1201_175_M/mocap/Locomotion.bvh --h5 ./datasets/ELMO_dataset/test/1201_175_M/lidar/Locomotion.h5

This repository provides scripts for evaluating motion data from different models and datasets. The evaluation compares each model's output against ground-truth data and computes errors in position, rotation, linear velocity, and angular velocity. Please refer to the paper for details.
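To make two of these quantities concrete, here is a dependency-free sketch of a mean joint position error and a mean linear velocity error. It is an illustration only and not the repository's evaluation code. The function names are hypothetical, velocities use a simple forward difference at an assumed 60 fps (the BVH rate), and the paper's exact definitions (units, root alignment, rotation and angular velocity terms) may differ.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Pose = Sequence[Vec3]    # one 3D position per joint
Motion = Sequence[Pose]  # one pose per frame


def mean_position_error(pred: Motion, gt: Motion) -> float:
    """Mean Euclidean distance between matching joints over all frames."""
    dists = [math.dist(p, g)
             for pred_frame, gt_frame in zip(pred, gt)
             for p, g in zip(pred_frame, gt_frame)]
    return sum(dists) / len(dists)


def linear_velocity(motion: Motion, fps: float) -> List[List[Vec3]]:
    """Forward finite difference per joint: (x[t+1] - x[t]) * fps."""
    return [[tuple((b - a) * fps for a, b in zip(j0, j1))
             for j0, j1 in zip(f0, f1)]
            for f0, f1 in zip(motion, motion[1:])]


def mean_linear_velocity_error(pred: Motion, gt: Motion,
                               fps: float = 60.0) -> float:
    """Mean Euclidean distance between predicted and true joint velocities."""
    return mean_position_error(linear_velocity(pred, fps),
                               linear_velocity(gt, fps))
```

Both functions expect the predicted and ground-truth motions to have the same frame count and joint order. With array data, the same computation is usually vectorized with numpy.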

To download the Evaluation dataset, run the following commands:

bash download.sh EVAL

The repository is organized as follows:

- evaluate_mELMO_dELMO.py: Evaluates ELMO model on ELMO dataset

- evaluate_mELMO_dMOVIN.py: Evaluates ELMO model on MOVIN dataset

- evaluate_mMOVIN_dELMO.py: Evaluates MOVIN model on ELMO dataset

- evaluate_mNIKI_dELMO.py: Evaluates NIKI model on ELMO dataset

- evaluate_mNIKI_dMOVN.py: Evaluates NIKI model on MOVIN dataset

- Ensure you have the required datasets in the appropriate directories.

- Run the evaluation scripts using Python. For example:

python evaluate_mELMO_dELMO.py

- The results are printed and also saved in the results/ directory, organized by dataset and model.

If you find this work useful for your research, please cite our papers:

@article{jang2024elmo,

title={ELMO: Enhanced Real-time LiDAR Motion Capture through Upsampling},

author={Jang, Deok-Kyeong and Yang, Dongseok and Jang, Deok-Yun and Choi, Byeoli and Shin, Donghoon and Lee, Sung-hee},

journal={arXiv preprint arXiv:2410.06963},

year={2024}

}

@inproceedings{jang2023movin,

title={MOVIN: Real-time Motion Capture using a Single LiDAR},

author={Jang, Deok-Kyeong and Yang, Dongseok and Jang, Deok-Yun and Choi, Byeoli and Jin, Taeil and Lee, Sung-Hee},

booktitle={Computer Graphics Forum},

volume={42},

number={7},

pages={e14961},

year={2023},

organization={Wiley Online Library}

}

This project is only for research or education purposes, and not freely available for commercial use or redistribution. The dataset is available only under the terms of the Attribution-NonCommercial 4.0 International (CC BY-NC 4.0) license.