Training-in-Simulator-Inference-in-Real-World

Motivation:

Deep learning for autonomous driving systems (ADS) is often limited by the cost of acquiring and annotating data. For newer sensing modalities such as Light Detection and Ranging (LiDAR), annotation takes even more effort because point clouds are difficult for humans to label. To tackle this issue, we explore the use of synthetic data to train a neural network for real-world inference.

Datasets used:

- Semantic KITTI

- Self-generated dataset using the Carla simulator

Requirements:

- Carla 0.9.12: see the Carla documentation for installation steps and system requirements. Method used here: package installation from GitHub.

- Conda 4.10.3

- Numpy 1.21.2

- TensorFlow 2.4.1 / PyTorch 1.11.0

- Carla maintains detailed documentation of its core concepts and a Python API reference.

Method to collect data with Carla:

- Open a terminal in the main folder of the installed `carla` package. Run the following command to execute the package file and start the simulation:

./CarlaUE4.sh

- Copy the file `src/utils/datacollector.py` (in this repo) into the `PythonAPI/examples` folder of your installed Carla package.

- Open another terminal inside `PythonAPI/examples` and run the following command to start collecting data:

python3 datacollector.py --sync -m Town01 -l

- Optional: run the following in parallel, each in a new terminal:

python3 generate_traffic.py -n 50 -w 50 # spawn 50 vehicles and 50 pedestrians

python3 dynamic_weather.py # vary the weather conditions while the dataset is collected

Folder structure:

/PythonAPI/examples/dataset/

└── sequences/

├── Town01/

│ ├── poses.txt

│ ├── camera_depth/

│ │ ├── images/

│ │ │ ├ WorldSnapshot(frame=26)_1.png

│ │ │ ├ WorldSnapshot(frame=27)_1.png

│ │ ├── raw_data/

│ │ │ ├── binary

│ │ │ │ ├ WorldSnapshot(frame=26).npy

│ │ │ │ ├ WorldSnapshot(frame=27).npy

│ ├── camera_rgb/

│ │ ├── images/

│ │ │ ├ WorldSnapshot(frame=26)_0.png

│ │ │ ├ WorldSnapshot(frame=27)_0.png

│ │ ├── raw_data/

│ │ │ ├── binary

│ │ │ │ ├ WorldSnapshot(frame=26).npy

│ │ │ │ ├ WorldSnapshot(frame=27).npy

│ ├── camera_semseg/

│ │ ├── images/

│ │ │ ├ WorldSnapshot(frame=26)_2.png

│ │ │ ├ WorldSnapshot(frame=27)_2.png

│ │ ├── raw_data/

│ │ │ ├── binary

│ │ │ │ ├ WorldSnapshot(frame=26).npy

│ │ │ │ ├ WorldSnapshot(frame=27).npy

│ ├── lidar_semseg/

│ │ ├── images/

│ │ │ ├ 00000026.png

│ │ │ ├ 00000027.png

│ │ ├── raw_data/

│ │ │ ├── binary_files

│ │ │ │ ├ WorldSnapshot(frame=26)_3.bin

│ │ │ │ ├ WorldSnapshot(frame=27)_3.bin

│ │ │ ├── ground_truth

│ │ │ │ ├ WorldSnapshot(frame=26)_3.label

│ │ │ │ ├ WorldSnapshot(frame=27)_3.label

│ │ │ ├── ply_files

│ │ │ │ ├ WorldSnapshot(frame=26)_3.ply

│ │ │ │ ├ WorldSnapshot(frame=27)_3.ply

│ │ │ ├── updated_ground_truth # Label remapping to be in accordance with Semantic KITTI labels

│ │ │ │ ├ WorldSnapshot(frame=26)_3_new.label

│ │ │ │ ├ WorldSnapshot(frame=27)_3_new.label

├── Town02/

.

.

.

└── Town05/
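Given the layout above, a training script needs to match each LiDAR scan with its remapped label file. The following is a minimal sketch of one way to do that, assuming the frame number can be read from the `frame=<n>` part of the file names shown in the tree. The helper names `index_by_frame` and `pair_scans_with_labels` are hypothetical and not part of this repo.

```python
import re
from pathlib import Path

# Frame numbers appear in file names as "frame=<n>", e.g. "WorldSnapshot(frame=26)_3.bin".
FRAME_RE = re.compile(r"frame=(\d+)")


def index_by_frame(folder, suffix):
    """Map frame number -> path for files in `folder` whose name ends with `suffix`."""
    index = {}
    for path in Path(folder).iterdir():
        match = FRAME_RE.search(path.name)
        if match and path.name.endswith(suffix):
            index[int(match.group(1))] = path
    return index


def pair_scans_with_labels(sequence_dir):
    """Return sorted (frame, scan_path, label_path) tuples for one town folder."""
    raw = Path(sequence_dir) / "lidar_semseg" / "raw_data"
    scans = index_by_frame(raw / "binary_files", ".bin")
    labels = index_by_frame(raw / "updated_ground_truth", "_new.label")
    # Keep only frames that have both a scan and a remapped label.
    common = sorted(scans.keys() & labels.keys())
    return [(frame, scans[frame], labels[frame]) for frame in common]
```

For example, `pair_scans_with_labels("dataset/sequences/Town01")` would list the usable frames of Town01 in ascending frame order.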

`lidar_semseg/images` contains spherically projected (range-view) images.
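As an illustration of what a spherical (range-view) projection does, here is a minimal NumPy sketch. It is not the code used by `datacollector.py`. The image size and the vertical field of view (+3° / -25°) are assumed defaults chosen for illustration and should be set to match your LiDAR configuration.

```python
import numpy as np


def spherical_projection(points, height=64, width=1024,
                         fov_up_deg=3.0, fov_down_deg=-25.0):
    """Project an (N, 3) array of x, y, z points to a (height, width) range image.

    Pixels that receive no point are filled with -1.
    """
    fov_up = np.radians(fov_up_deg)
    fov_down = np.radians(fov_down_deg)
    fov = fov_up - fov_down

    depth = np.linalg.norm(points, axis=1)
    keep = depth > 0                      # drop points at the sensor origin
    points, depth = points[keep], depth[keep]

    yaw = np.arctan2(points[:, 1], points[:, 0])   # azimuth in [-pi, pi]
    pitch = np.arcsin(points[:, 2] / depth)        # elevation angle

    u = 0.5 * (1.0 - yaw / np.pi) * width          # column index
    v = (1.0 - (pitch - fov_down) / fov) * height  # row index, row 0 = fov_up

    u = np.clip(np.floor(u), 0, width - 1).astype(np.int32)
    v = np.clip(np.floor(v), 0, height - 1).astype(np.int32)

    # Write far points first so the nearest point wins when several share a pixel.
    order = np.argsort(depth)[::-1]
    image = np.full((height, width), -1.0, dtype=np.float32)
    image[v[order], u[order]] = depth[order]
    return image
```

The same `u`, `v` indices can be reused to project per-point labels into a label image of the same shape.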

`lidar_semseg/raw_data/updated_ground_truth` contains labels remapped to only 3 classes:

- 0 - background

- 1 - car

- 2 - pedestrians
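A remapping like this can be done with a lookup table. The sketch below is an assumption about how it might look, not the repo's implementation. The source tag IDs (10 for vehicles, 4 for pedestrians) are assumed values for Carla's semantic tags and should be checked against the tag table of your Carla version.

```python
import numpy as np

# Assumed source tag IDs; verify them against your Carla version's semantic tag table.
ASSUMED_TAG_TO_CLASS = {
    10: 1,  # vehicle tag    -> class 1 (car)
    4: 2,   # pedestrian tag -> class 2 (pedestrians)
}


def remap_labels(labels, mapping=ASSUMED_TAG_TO_CLASS, background=0):
    """Remap per-point tags to the 3-class scheme; unmapped tags become background."""
    labels = np.asarray(labels, dtype=np.int64)
    # The lookup table covers every tag that occurs in the data or in the mapping.
    size = max(int(labels.max(initial=0)), max(mapping)) + 1
    lut = np.full(size, background, dtype=np.uint8)
    for src, dst in mapping.items():
        lut[src] = dst
    return lut[labels]
```

Every tag that is not listed in the mapping falls into class 0, which matches the background class described above.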

Semantic-KITTI modifier:

- Download the dataset: Semantic KITTI.

- Run `KITTI_data_modifer.py` to create the projected bin files, label files and images of a given sequence (`data_odometry_velodyne_proj`, `data_odometry_labels_projimg`, `data_odometry_labels_proj`).

- Semantic KITTI labels are remapped to correspond to the Carla labels.
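Before Semantic KITTI labels can be remapped, the semantic class has to be separated from the instance ID. Each `.label` file stores one unsigned 32-bit integer per point, with the semantic label in the lower 16 bits and the instance ID in the upper 16 bits. The sketch below shows that split. The function names are hypothetical and are not taken from `KITTI_data_modifer.py`.

```python
import numpy as np


def split_semantic_kitti_labels(raw):
    """Split packed uint32 labels into (semantic, instance) arrays."""
    raw = np.asarray(raw, dtype=np.uint32)
    semantic = raw & 0xFFFF   # lower 16 bits: semantic class ID
    instance = raw >> 16      # upper 16 bits: instance ID
    return semantic, instance


def load_semantic_kitti_labels(path):
    """Read a Semantic KITTI .label file and split it per point."""
    return split_semantic_kitti_labels(np.fromfile(path, dtype=np.uint32))
```

The `semantic` array is what a class remapping to background, car and pedestrians would then operate on.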

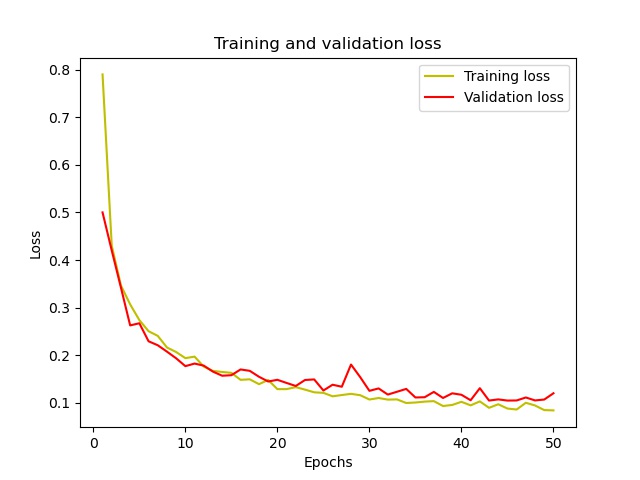

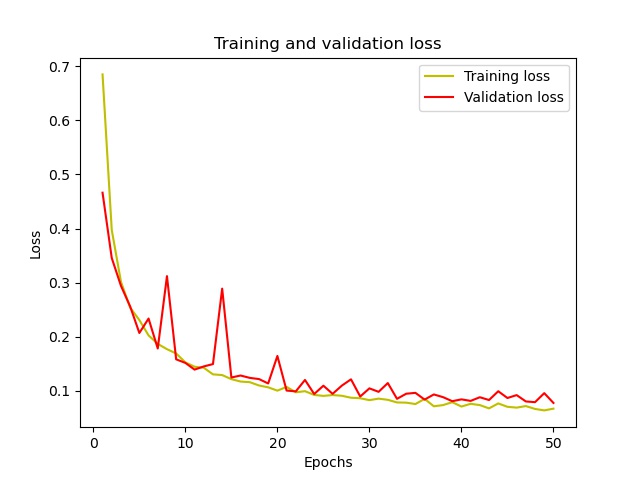

Training the Model:

- Make sure you are inside the `src` folder, then run the following command to add the `utils` folder to `PYTHONPATH`:

export PYTHONPATH=$PYTHONPATH:$(pwd)/utils

- To train the model, run the following command:

python3 nets/modified_SqueezeSeg_Tensorflow.py 3 0 6 50

You can change the parameters as needed:

- The first argument (3) is the number of classes the model learns to segment. It must match the number of classes in your ground-truth labels.

- The second argument selects the mode: set it to 1 to test the model (the training command above uses 0).

- The third argument sets the batch size.

- The fourth argument sets the number of epochs.

- For testing, run the following command:

python3 nets/modified_SqueezeSeg_Tensorflow.py 3 1 6 50
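To make the meaning of the four positional arguments concrete, here is a hypothetical `argparse` sketch that accepts the same command lines. How `modified_SqueezeSeg_Tensorflow.py` actually parses its arguments is not shown in this README, so the argument names below are assumptions.

```python
import argparse


def parse_args(argv=None):
    """Parse the four positional arguments described above (names are assumed)."""
    parser = argparse.ArgumentParser(description="Train or test the segmentation model.")
    parser.add_argument("num_classes", type=int,
                        help="number of classes in the ground-truth labels")
    parser.add_argument("mode", type=int, choices=[0, 1],
                        help="0 = train, 1 = test")
    parser.add_argument("batch_size", type=int, help="batch size")
    parser.add_argument("epochs", type=int, help="number of epochs")
    return parser.parse_args(argv)
```

With this sketch, `parse_args(["3", "1", "6", "50"])` corresponds to the testing command: 3 classes, test mode, batch size 6 and 50 epochs.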