This repository contains implementations of Q-Learning and Double Deep Q-Learning (DDQN) for apprenticeship learning, based on the paper "Apprenticeship Learning via Inverse Reinforcement Learning" by P. Abbeel and A. Y. Ng, applied to two classic control tasks: CartPole and Pendulum.

Apprenticeship learning via inverse reinforcement learning combines reinforcement learning and inverse reinforcement learning so that an agent can learn from expert demonstrations. The agent learns to perform a task by observing an expert, without being given an explicit reward signal. Instead of learning directly from rewards, the algorithm infers a reward function that explains the expert's demonstrations and then optimizes the agent's behavior with respect to this inferred reward.
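Abbeel and Ng assume the unknown reward is linear in a feature vector, R(s) = w · φ(s), so a policy's expected discounted return is w · μ(π), where μ(π) = E[Σ_t γ^t φ(s_t)] is its feature expectation. Matching the expert therefore reduces to matching feature expectations. The sketch below estimates μ from a set of demonstrations; the feature map `phi`, the discount `gamma` and the function name `feature_expectations` are illustrative assumptions, not code from this repository.

```python
import numpy as np


def feature_expectations(trajectories, phi, gamma=0.99):
    """Empirical discounted feature expectations averaged over demonstrations.

    trajectories: list of trajectories, each a list of states s_0, s_1, ...
    phi: callable mapping one state to a 1-D feature vector (an assumed choice).
    """
    per_trajectory = []
    for states in trajectories:
        feats = np.array([phi(s) for s in states], dtype=float)  # shape (T, k)
        discounts = gamma ** np.arange(len(states))              # gamma^t for t = 0..T-1
        per_trajectory.append(discounts @ feats)                 # sum_t gamma^t * phi(s_t)
    return np.mean(per_trajectory, axis=0)                       # average over trajectories


# Two toy demonstrations with identity features.
demos = [
    [np.array([1.0, 0.0]), np.array([0.0, 1.0])],
    [np.array([0.0, 1.0]), np.array([1.0, 0.0])],
]
mu_E = feature_expectations(demos, phi=lambda s: s, gamma=0.5)
print(mu_E)  # [0.75 0.75]
```

Given a weight vector `w`, the inferred reward of a state is then simply `w @ phi(s)`.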

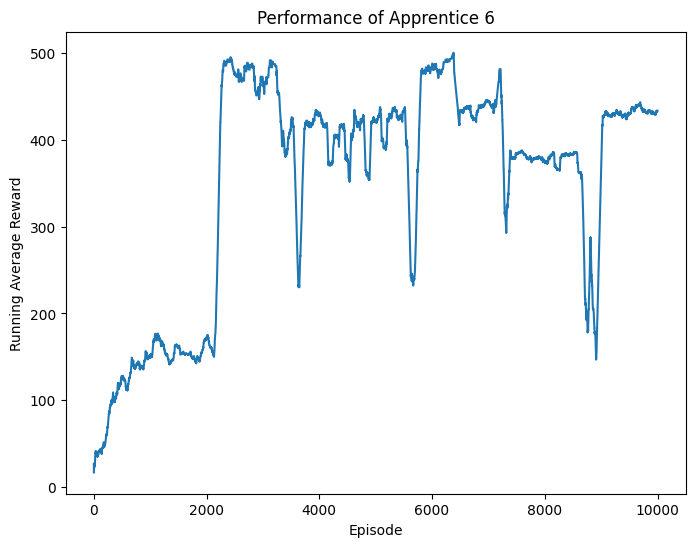

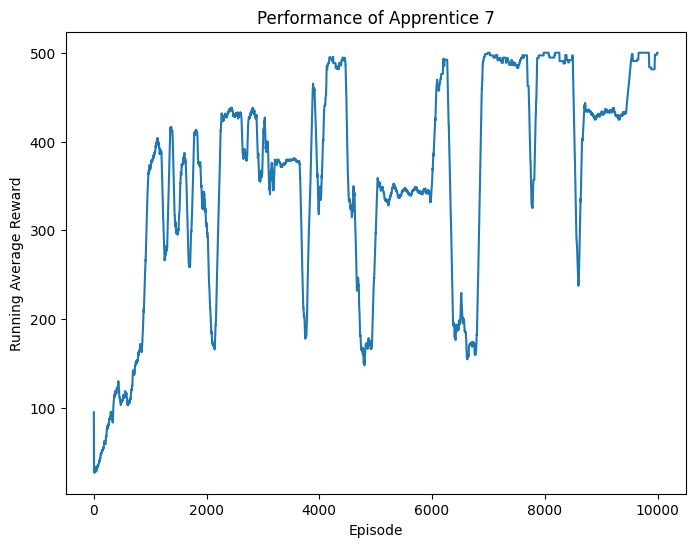

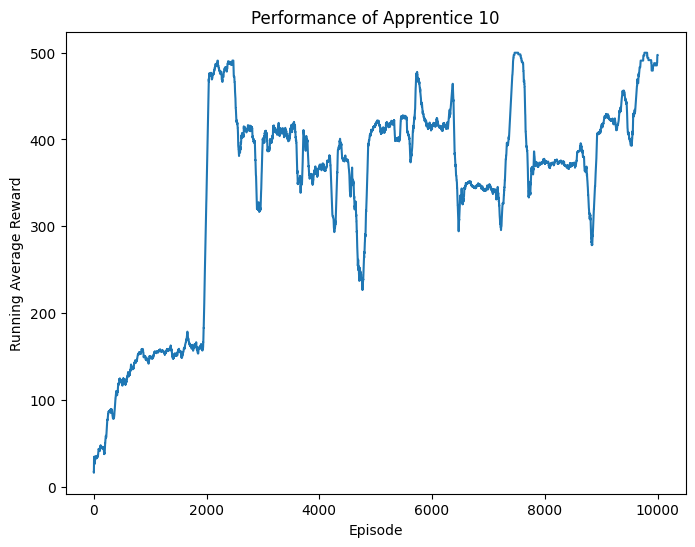

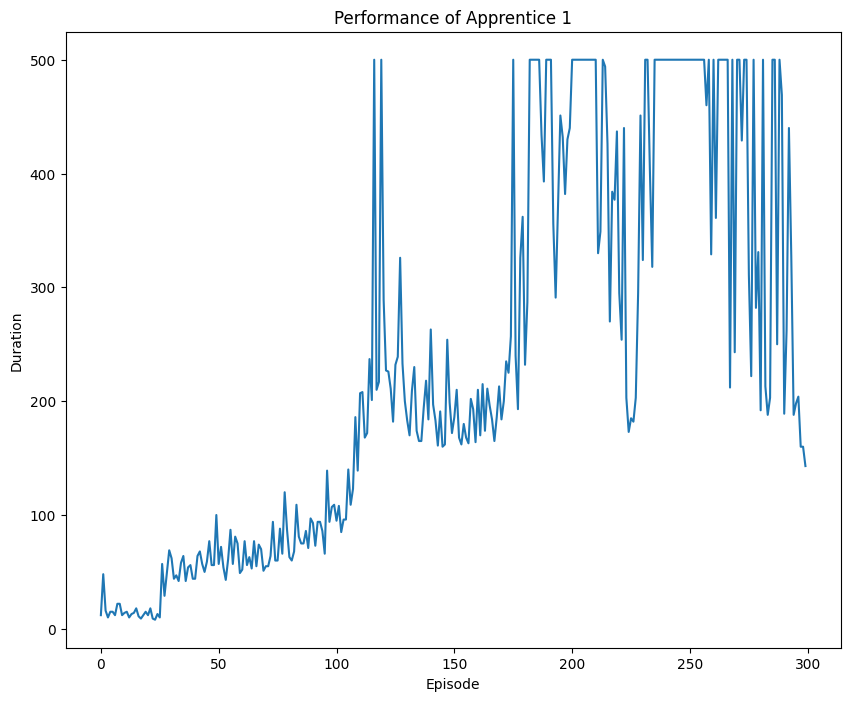

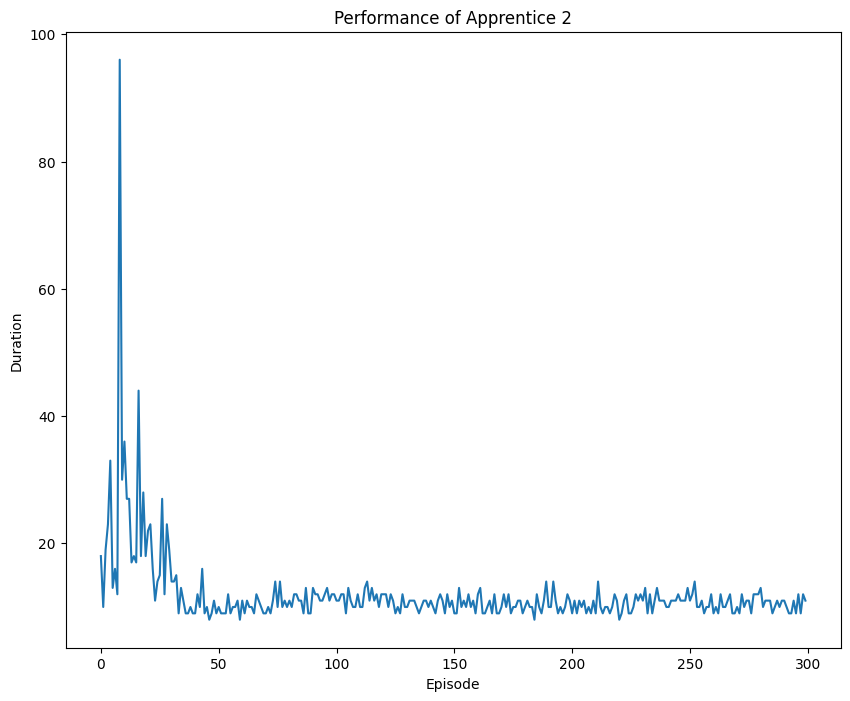

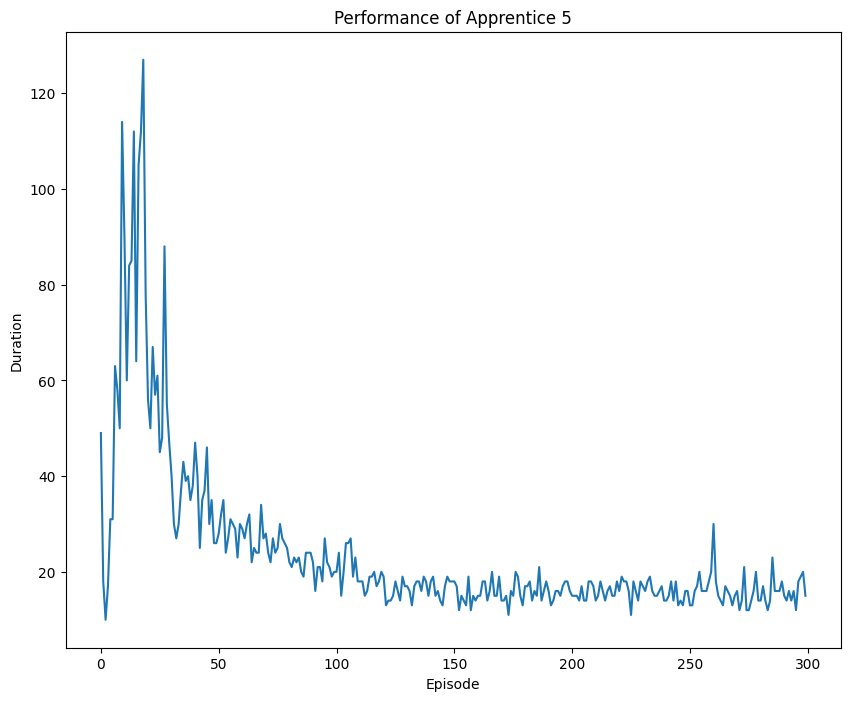

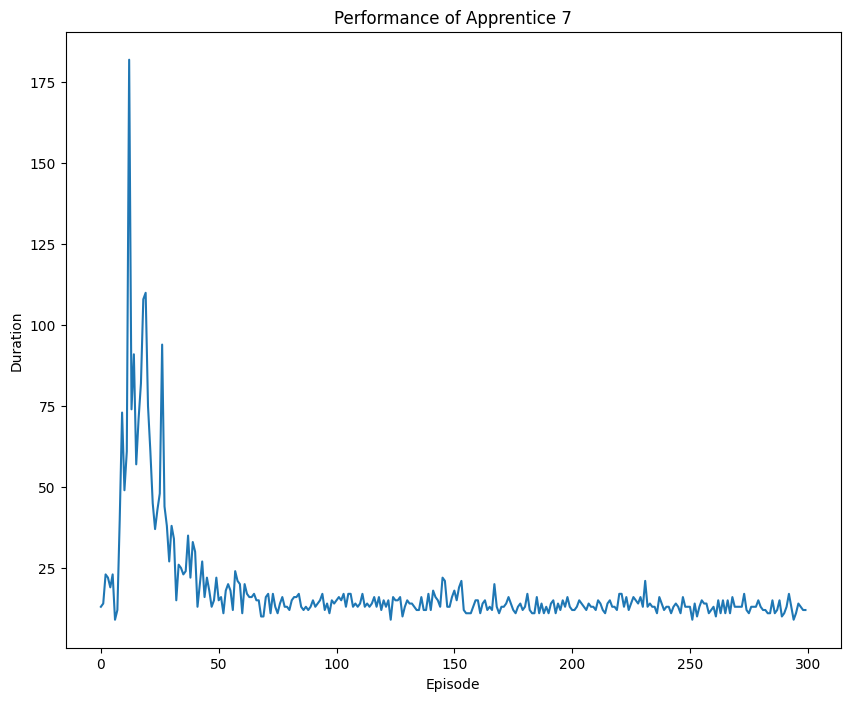

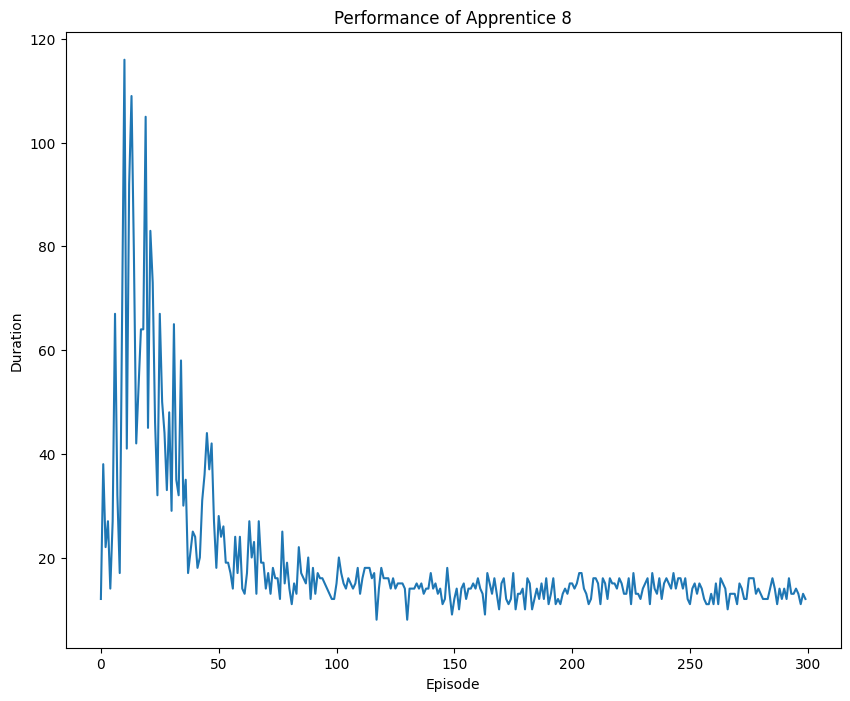

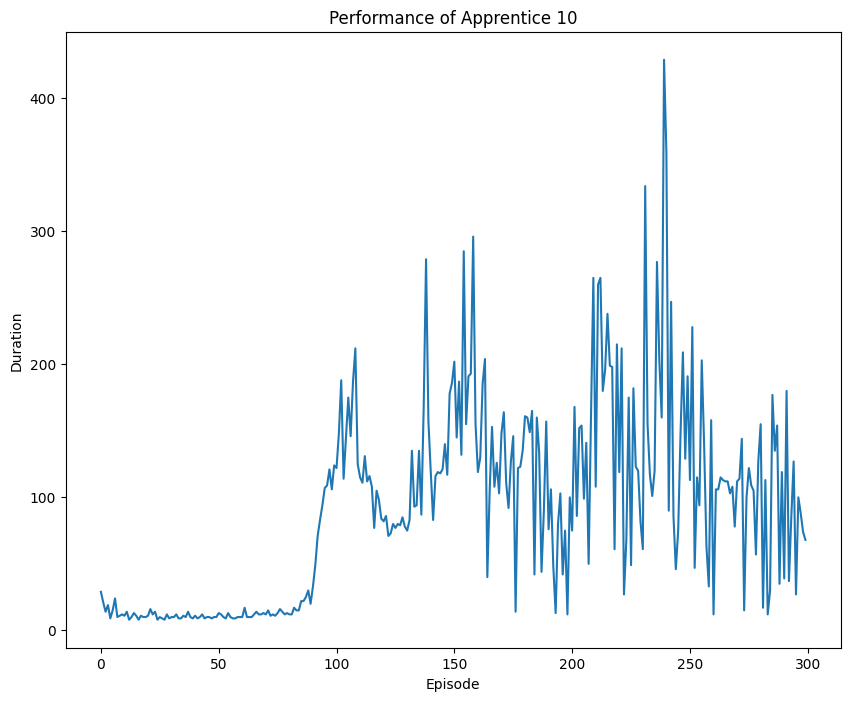

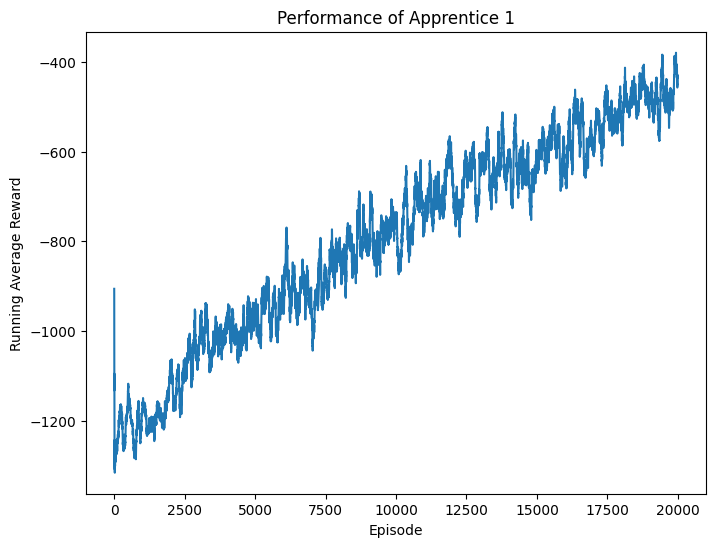

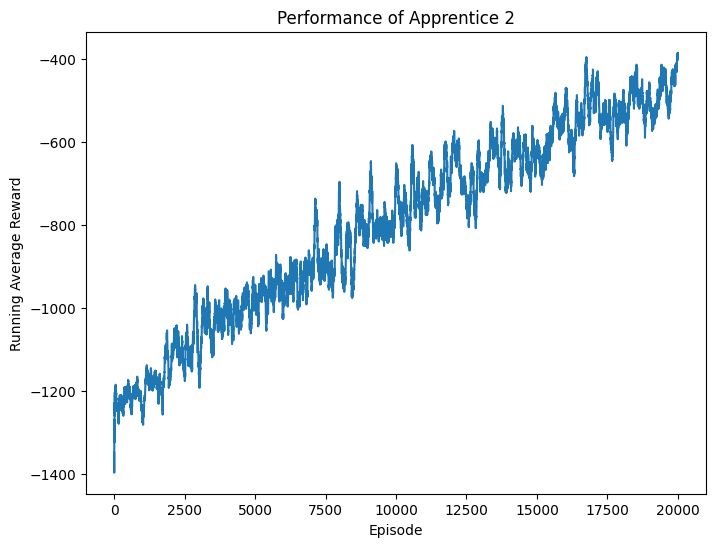

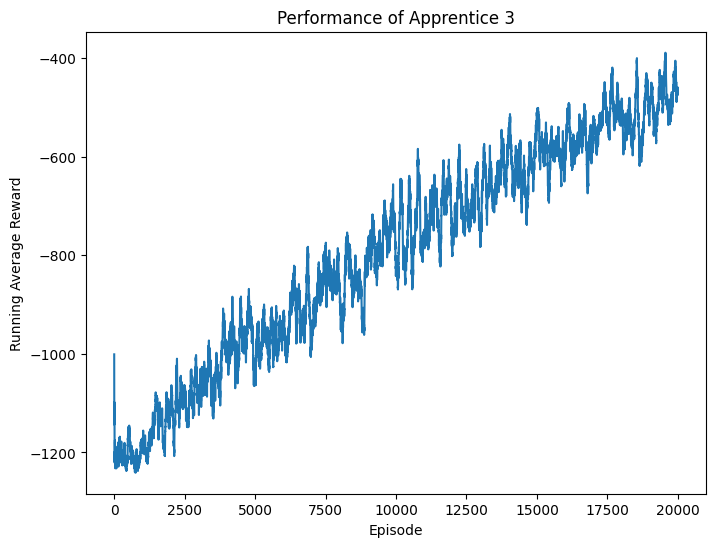

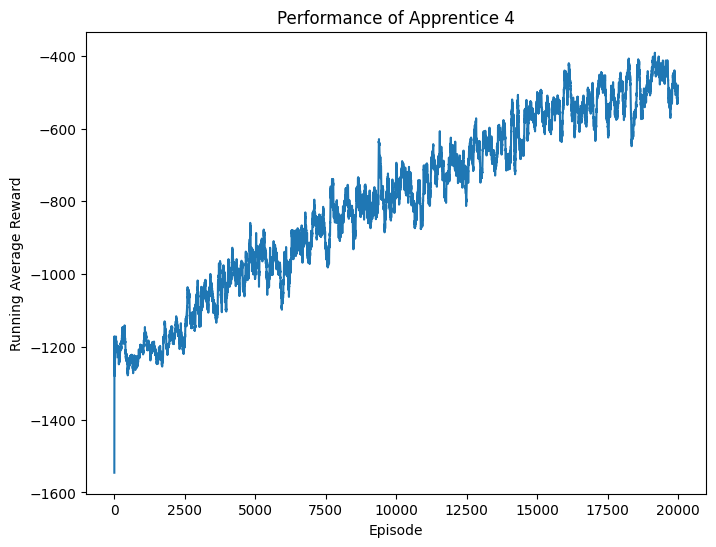

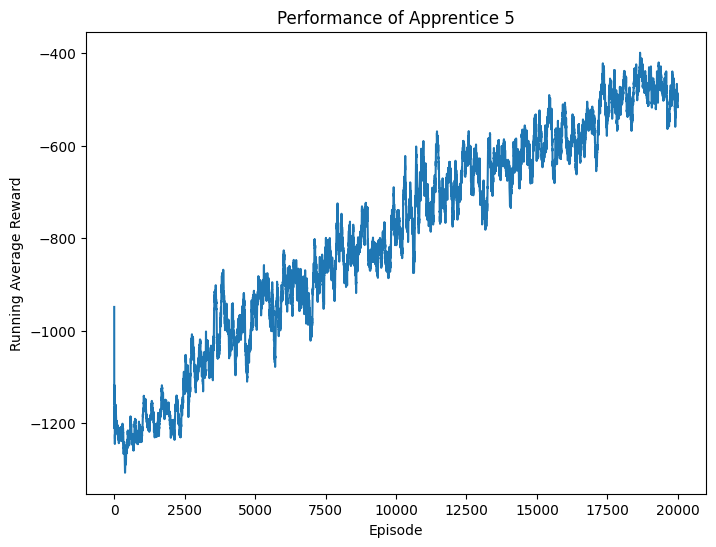

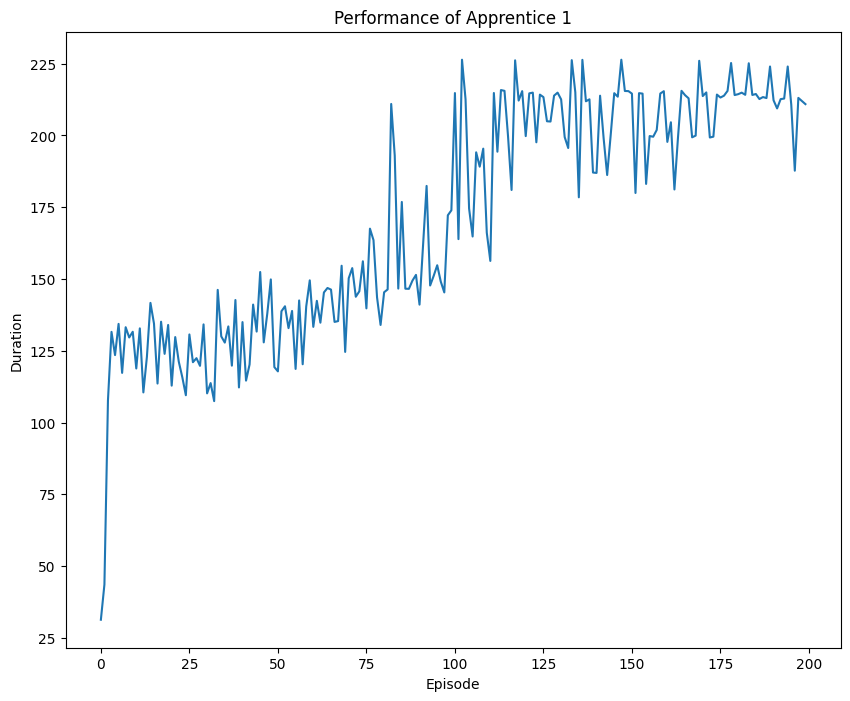

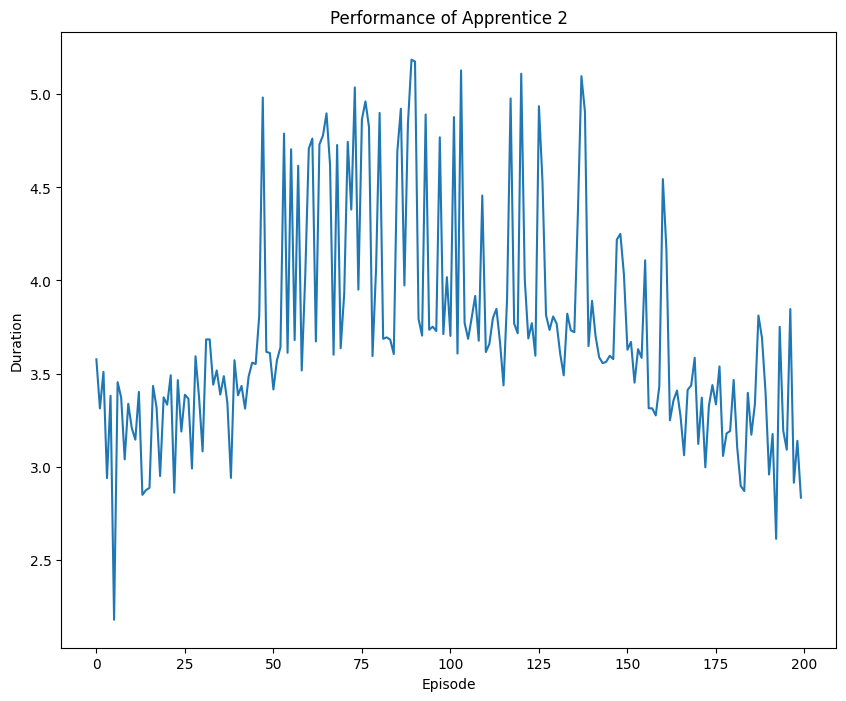

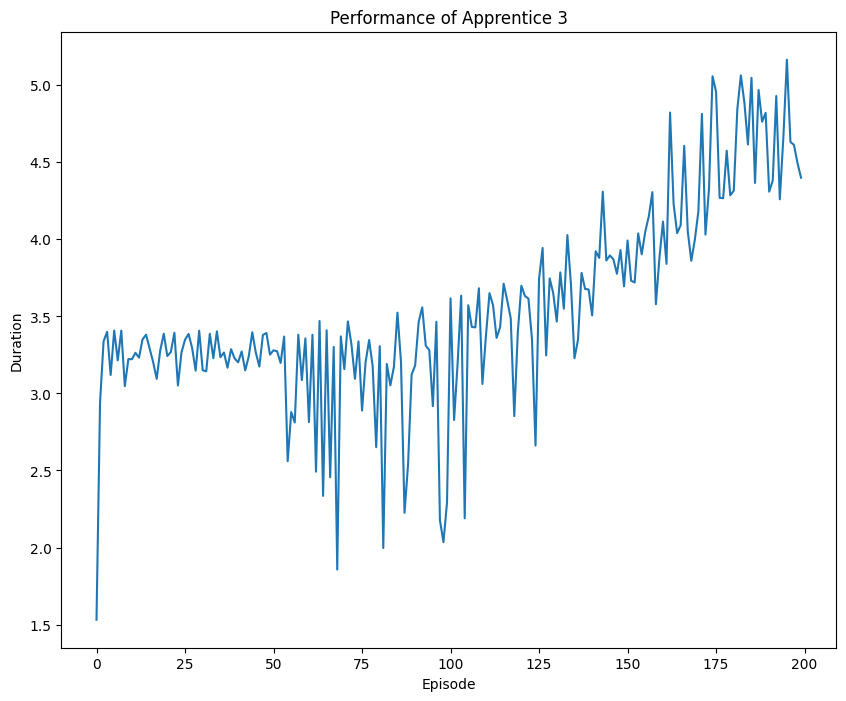

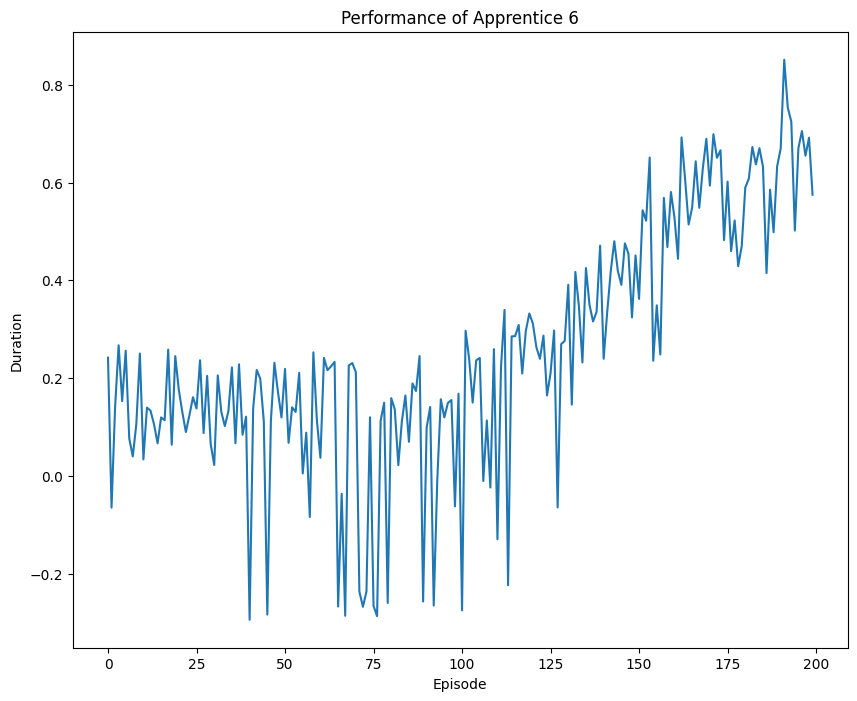

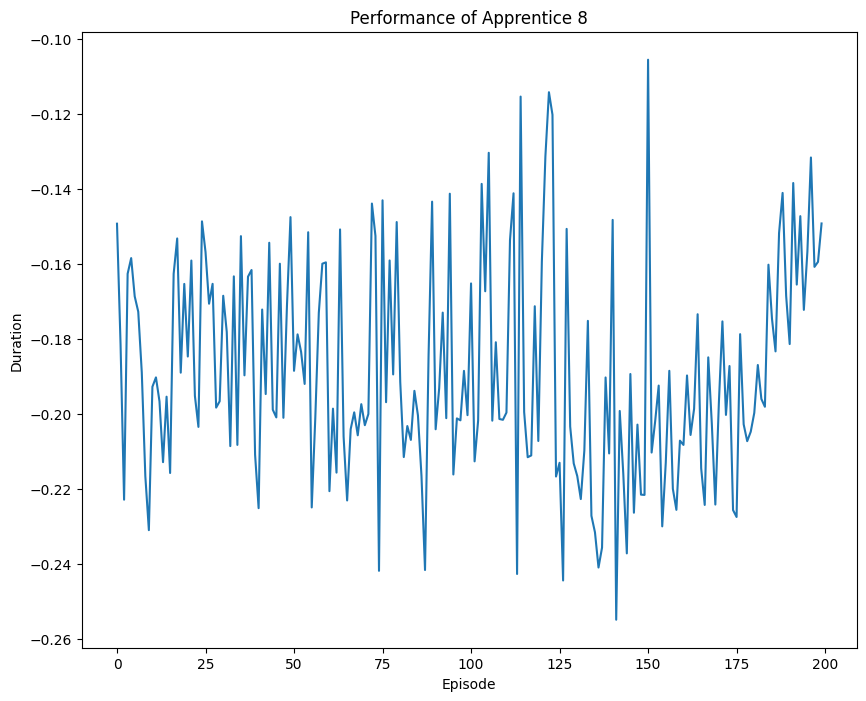

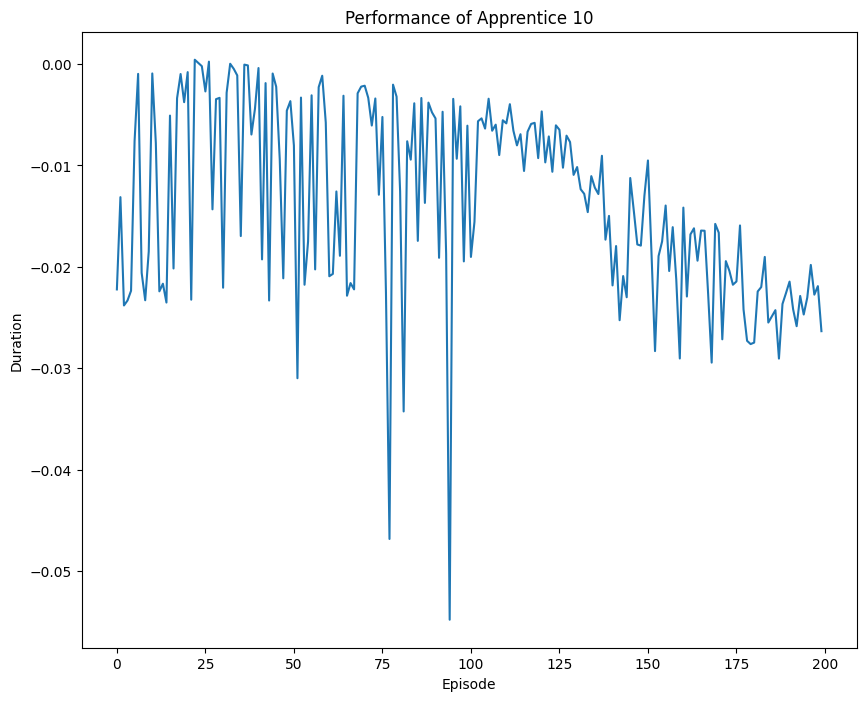

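One approach to implementing this is the projection method from Abbeel and Ng, which iteratively refines the agent's policy based on the difference between the expert's and the agent's feature expectations (the expected discounted sums of state features under each policy). At each iteration, the expert's feature expectations are orthogonally projected onto the line through the previous projection point and the latest apprentice's feature expectations, and the weight vector is set to the difference between the expert's feature expectations and this projected point. This avoids the quadratic program that the paper's max-margin variant solves to find the weight vector that maximally separates the expert from all previous apprentices under a norm constraint. The weight vector defines a reward that is linear in the state features, the agent's policy is trained on that reward, and the process repeats until the distance between the expert's feature expectations and the projected point falls below ϵ. On termination, at least one of the trained apprentices performs at least as well as the expert, to within ϵ.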
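In the paper's projection method, the weight vector at iteration i is w_i = μ_E − μ̄_{i−1}, where μ̄_{i−1} is the orthogonal projection of μ_E onto the line through μ̄_{i−2} (the previous projection point) and μ_{i−1} (the latest apprentice's feature expectations); the loop stops once t_i = ‖μ_E − μ̄_{i−1}‖ ≤ ϵ. The NumPy sketch below implements that loop. It assumes a hypothetical `train_apprentice(w)` callable standing in for the Q-learning or DDQN training used in this repository, and the usage example replaces it with a toy solver that picks the best of three fixed feature-expectation vectors.

```python
import numpy as np


def project(mu_E, mu_bar_prev, mu_new):
    """Orthogonal projection of mu_E onto the line through mu_bar_prev and mu_new."""
    d = mu_new - mu_bar_prev
    denom = d @ d
    if denom == 0.0:  # the new apprentice did not move the estimate
        return mu_bar_prev
    return mu_bar_prev + ((d @ (mu_E - mu_bar_prev)) / denom) * d


def projection_method(mu_E, mu_0, train_apprentice, eps=1e-3, max_iters=50):
    """Projection-method loop.

    train_apprentice(w) is a hypothetical callable that trains an agent on the
    reward R(s) = w . phi(s) and returns that agent's feature expectations.
    Returns the weight vectors, apprentice feature expectations and margins t.
    """
    mu_E = np.asarray(mu_E, dtype=float)
    mu_bar = np.asarray(mu_0, dtype=float)  # mu_bar_0 = mu_0 (initial random policy)
    weights, mus, margins = [], [mu_bar.copy()], []
    for _ in range(max_iters):
        w = mu_E - mu_bar                   # w_i = mu_E - mu_bar_{i-1}
        t = float(np.linalg.norm(w))        # t_i = ||mu_E - mu_bar_{i-1}||
        margins.append(t)
        if t <= eps:                        # expert matched to within eps
            break
        weights.append(w)
        mu_new = np.asarray(train_apprentice(w), dtype=float)  # mu_i
        mus.append(mu_new)
        mu_bar = project(mu_E, mu_bar, mu_new)
    return weights, mus, margins


# Toy "RL solver": the optimal policy for reward w is the candidate with the
# largest value w . mu among a fixed set of policies' feature expectations.
candidates = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])
weights, mus, margins = projection_method(
    mu_E=[0.5, 0.5],
    mu_0=np.zeros(2),
    train_apprentice=lambda w: candidates[np.argmax(candidates @ w)],
)
print([round(t, 4) for t in margins[:3]])  # [0.7071, 0.5, 0.2236]
```

Because each new μ̄ is the closest point to μ_E on a line that contains the previous μ̄, the margin t never increases; in the toy run it drops from about 0.707 to 0.224 within three iterations.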

- Since the states are continuous, they are discretized into 14641 state combinations.

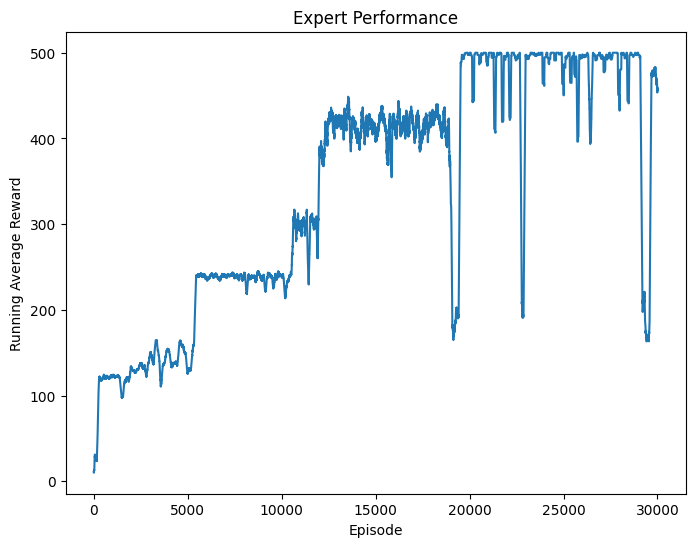
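Since 14641 = 11⁴, the count is consistent with splitting each of CartPole's four state variables (cart position, cart velocity, pole angle, pole angular velocity) into 11 bins. The sketch below assumes exactly that, with hypothetical clipping bounds and a hypothetical `discretize` helper; the repository's actual bounds and bin edges may differ.

```python
import numpy as np

N_BINS = 11  # assumed bins per variable: 11 ** 4 == 14641
# Hypothetical clipping bounds for (cart position, cart velocity,
# pole angle [rad], pole angular velocity).
LOWS = np.array([-2.4, -3.0, -0.21, -3.5])
HIGHS = np.array([2.4, 3.0, 0.21, 3.5])


def discretize(state):
    """Map a continuous CartPole state to one row index of the Q-table."""
    clipped = np.clip(state, LOWS, HIGHS)
    ratios = (clipped - LOWS) / (HIGHS - LOWS)                     # each in [0, 1]
    bins = np.minimum((ratios * N_BINS).astype(int), N_BINS - 1)  # each in 0..10
    return int(np.ravel_multi_index(tuple(bins), (N_BINS,) * 4))  # 0..14640


print(N_BINS ** 4)              # 14641
print(discretize(np.zeros(4)))  # 7320 (the centre cell)
```

Flattening the four bin indices into one integer lets the Q-table be a plain 2-D array with one row per state combination.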

- The expert is trained with the Q-learning algorithm using a 14641 × 2 Q-table (one row per discretized state, one column per action) for 30,000 iterations, within which it reaches the goal.
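The tabular update behind this expert is the standard Q-learning rule Q(s, a) ← Q(s, a) + α [r + γ max_a' Q(s', a') − Q(s, a)]. The sketch below applies it to a 14641 × 2 table (CartPole's two actions are push left and push right); the learning rate, discount, exploration rate and function names are hypothetical. When training an apprentice rather than the expert, r is the inferred reward w · φ(s) instead of the environment's reward.

```python
import numpy as np

N_STATES, N_ACTIONS = 14641, 2          # discretized states x {push left, push right}
ALPHA, GAMMA, EPSILON = 0.1, 0.99, 0.1  # hypothetical hyperparameters
rng = np.random.default_rng(0)


def choose_action(Q, s, epsilon=EPSILON):
    """Epsilon-greedy selection over row s of the Q-table."""
    if rng.random() < epsilon:
        return int(rng.integers(Q.shape[1]))  # explore: uniform random action
    return int(np.argmax(Q[s]))               # exploit: greedy action


def q_update(Q, s, a, r, s_next, done, alpha=ALPHA, gamma=GAMMA):
    """In-place tabular Q-learning update for the transition (s, a, r, s_next)."""
    target = r if done else r + gamma * np.max(Q[s_next])  # no bootstrap at episode end
    Q[s, a] += alpha * (target - Q[s, a])


Q = np.zeros((N_STATES, N_ACTIONS))
Q[7321, 0] = 1.0                            # pretend s' already has some value
q_update(Q, s=7320, a=1, r=1.0, s_next=7321, done=False)
print(round(Q[7320, 1], 4))                 # 0.199
print(choose_action(Q, 7320, epsilon=0.0))  # 1
```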

CartPole expert trained using Q-learning

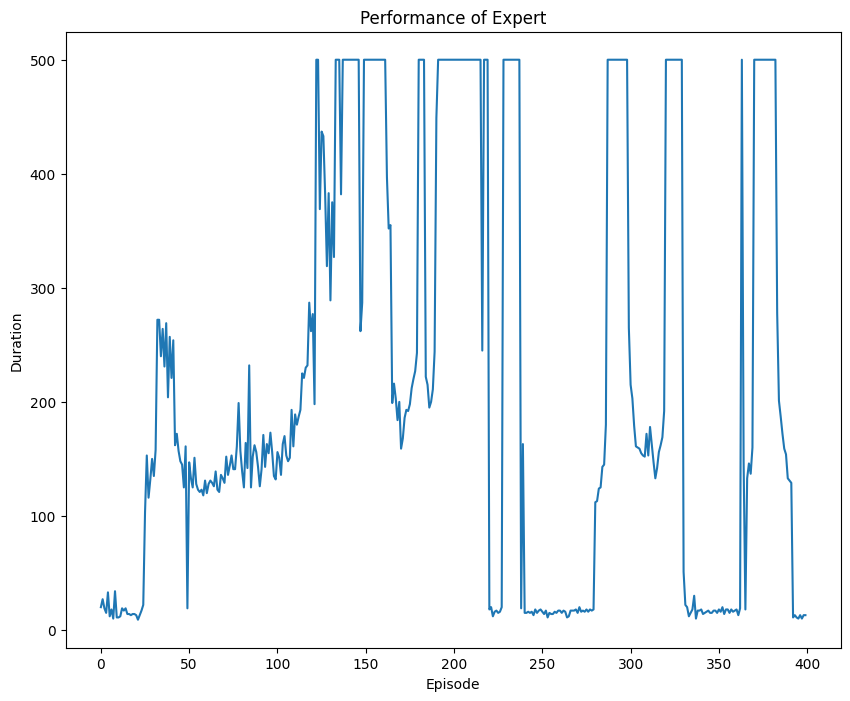

- We use Double Deep Q-Learning (DDQN) to train an expert CartPole agent for 400 iterations.

- The policy is represented by the Q-value function learned by the neural network.

- The Double DQN uses a policy network and a target network.
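The bullets do not state how the two networks interact. In the standard Double DQN target, the policy network selects the greedy next action and the target network evaluates it, y = r + γ (1 − done) Q_target(s', argmax_a Q_policy(s', a)), which reduces the overestimation caused by vanilla DQN's max over the target network alone. The NumPy sketch below computes these targets from precomputed Q-value arrays; in a PyTorch implementation the arrays would come from the two networks' forward passes. The function and argument names are hypothetical.

```python
import numpy as np


def double_dqn_targets(rewards, dones, q_next_policy, q_next_target, gamma=0.99):
    """Double DQN regression targets for a batch of B transitions with A actions.

    q_next_policy: Q(s', .) from the policy (online) network, shape (B, A)
    q_next_target: Q(s', .) from the target network, shape (B, A)
    dones: 1.0 where s' is terminal, else 0.0
    """
    best_actions = np.argmax(q_next_policy, axis=1)  # policy network selects
    next_values = q_next_target[np.arange(len(best_actions)), best_actions]  # target network evaluates
    return rewards + gamma * (1.0 - dones) * next_values


rewards = np.array([1.0, 1.0])
dones = np.array([0.0, 1.0])
q_next_policy = np.array([[1.0, 2.0], [3.0, 0.0]])
q_next_target = np.array([[5.0, 0.5], [4.0, 9.0]])

targets = double_dqn_targets(rewards, dones, q_next_policy, q_next_target, gamma=0.9)
# Row 0: action 1 is selected, so the target is 1 + 0.9 * 0.5 = 1.45
# (vanilla DQN would use max(q_next_target[0]) and get 1 + 0.9 * 5 = 5.5).
# Row 1: terminal transition, so the target is just the reward, 1.0.
print(targets)
```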

CartPole expert trained using DDQN

- Since both the states and the actions are continuous, the states are discretized on a 21 × 21 × 65 grid (28,665 state combinations) and the actions into 9 discrete values.

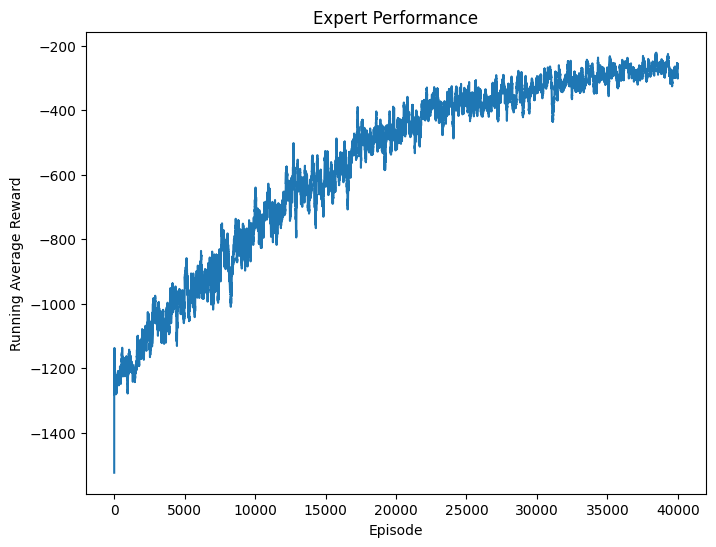
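Pendulum-v1 observes (cos θ, sin θ, θ̇), with θ̇ bounded to [−8, 8], and accepts a single torque in [−2, 2]. Assuming the 21 × 21 × 65 grid maps onto those three observations in that order and the 9 actions are evenly spaced torques, a discretizer and action mapping might look like the sketch below; the bin assignment, the spacing and the helper names are assumptions, not taken from the repository.

```python
import numpy as np

STATE_BINS = (21, 21, 65)               # grid sizes from the text (assumed order: cos, sin, velocity)
OBS_LOW = np.array([-1.0, -1.0, -8.0])  # Pendulum-v1 observation bounds
OBS_HIGH = np.array([1.0, 1.0, 8.0])
TORQUES = np.linspace(-2.0, 2.0, 9)     # assumed: 9 evenly spaced torques in [-2, 2]


def discretize_obs(obs):
    """Map a continuous observation to a (cos_bin, sin_bin, velocity_bin) index tuple."""
    sizes = np.array(STATE_BINS)
    ratios = (np.clip(obs, OBS_LOW, OBS_HIGH) - OBS_LOW) / (OBS_HIGH - OBS_LOW)
    bins = np.minimum((ratios * sizes).astype(int), sizes - 1)
    return tuple(int(b) for b in bins)


def action_to_torque(action_index):
    """Convert a discrete action index (0..8) into the 1-element array step() expects."""
    return np.array([TORQUES[action_index]], dtype=np.float32)


Q = np.zeros(STATE_BINS + (len(TORQUES),))  # shape (21, 21, 65, 9)
print(int(np.prod(STATE_BINS)))             # 28665 state combinations
print(TORQUES)                              # 9 torques from -2.0 to 2.0 in steps of 0.5
print(discretize_obs(np.array([1.0, 0.0, 0.0])))  # (20, 10, 32): pendulum upright, at rest
```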

- The expert is trained with the Q-learning algorithm for 40,000 iterations, within which it reaches the goal.

Pendulum expert trained using Q-learning

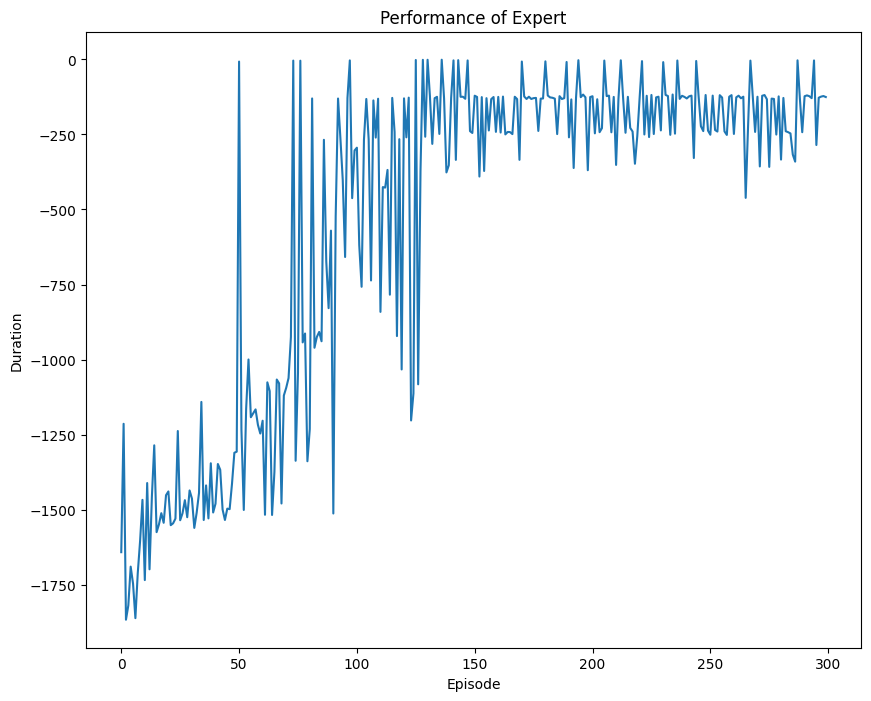

- The continuous actions are discretized into 9 actions.

- The expert agent is trained using Double Deep Q-Learning for 300 iterations.

Pendulum expert trained using DDQN

For an overview of the project and its implementation, refer to the presentation file.

- Abbeel, P., & Ng, A. Y. (2004). Apprenticeship learning via inverse reinforcement learning. In Proceedings of the 21st International Conference on Machine Learning (ICML).

- Brockman, G., Cheung, V., Pettersson, L., Schneider, J., Schulman, J., Tang, J., & Zaremba, W. (2016). OpenAI Gym. arXiv:1606.01540.

- Reinforcement Learning (DQN) Tutorial. (n.d.). PyTorch Tutorials 2.2.1+cu121 documentation.

- Amit, R., & Mataric, M. (2002). Learning movement sequences from demonstration. In Proceedings of the International Conference on Development and Learning (ICDL).

- Rhklite. (n.d.). Rhklite/apprenticeship_inverse_rl: Apprenticeship learning with inverse reinforcement learning.

- BhanuPrakashPebbeti. (n.d.). Bhanuprakashpebbeti/q-learning_and_double-Q-Learning.

- JM-Kim-94. (n.d.). JM-Kim-94/RL-Pendulum: Open ai gym - pendulum-V1 reinforcement learning (DQN, SAC).