This repo contains the source code for the project's web page.

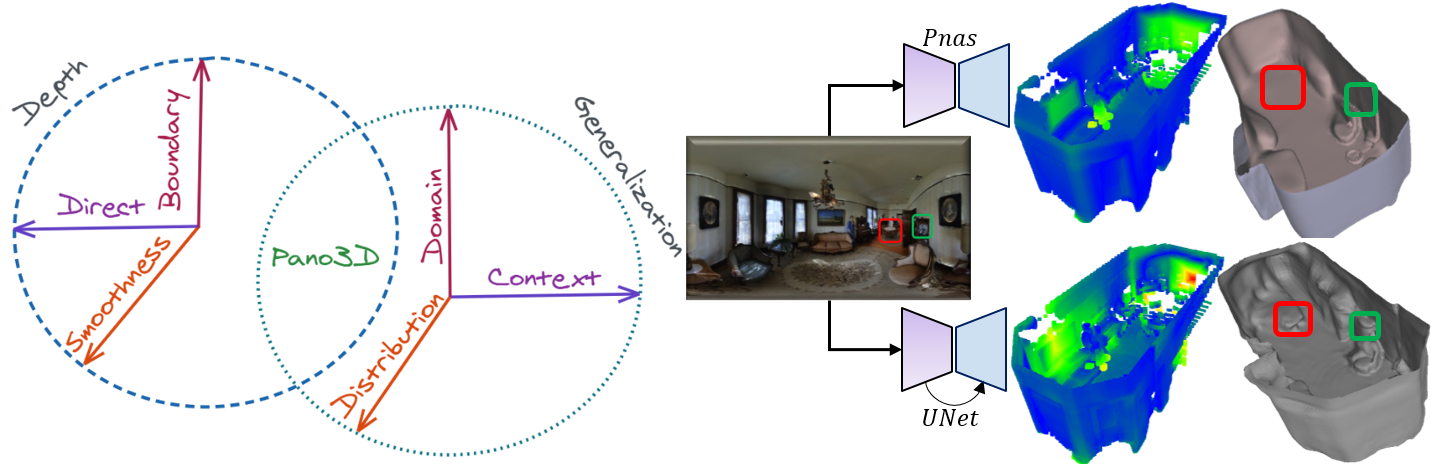

Pano3D is a new benchmark for depth estimation from spherical panoramas. We generate a dataset (using GibsonV2) and provide baselines for holistic performance assessment, offering:

- Primary and secondary traits metrics:
  - Direct depth performance:
    - (w)RMSE
    - (w)RMSLE
    - AbsRel
    - SqRel
    - (w)Relative accuracy ($\delta$) @ $\{1.05, 1.1, 1.25, 1.25^2, 1.25^3\}$
  - Boundary discontinuity preservation:
    - Precision @ {0.25, 0.5, 1.0}m
    - Recall @ {0.25, 0.5, 1.0}m
    - Depth boundary errors of accuracy and completeness
  - Surface smoothness:
    - RMSE°
    - Relative accuracy ($\alpha$) @ {11.25°, 22.5°, 30°}
- Out-of-distribution & Zero-shot cross dataset transfer:
  - Different depth distribution test set
  - Varying scene context test set
  - Shifted camera domain test set
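The direct depth and surface smoothness metrics listed above follow standard definitions. The sketch below computes them on flat lists of valid pixels using only the Python standard library; it is not the official metrics code, and the boundary metrics are omitted. Assumptions: the "(w)" variants are read here as weighting each pixel by the cosine of its latitude (its approximate share of the sphere), via the hypothetical helper `latitude_weights`; surface normals are given as unit vectors; all function names are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def latitude_weights(height: int, width: int) -> List[float]:
    # Hypothetical helper: cos(latitude) at each row center of an equirectangular
    # image, flattened row-major; approximates each pixel's share of the sphere.
    weights = []
    for row in range(height):
        lat = math.pi * (0.5 - (row + 0.5) / height)
        weights.extend([math.cos(lat)] * width)
    return weights


def _mean(values: Sequence[float], weights: Optional[Sequence[float]] = None) -> float:
    # Weighted arithmetic mean; plain mean when no weights are given.
    if weights is None:
        return sum(values) / len(values)
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def depth_metrics(
    pred: Sequence[float],
    gt: Sequence[float],
    weights: Optional[Sequence[float]] = None,
    thresholds: Sequence[float] = (1.05, 1.1, 1.25, 1.25 ** 2, 1.25 ** 3),
) -> Dict[str, object]:
    # pred/gt: flat lists of strictly positive depths (invalid pixels already removed).
    # Only the "(w)" metrics use the weights; AbsRel and SqRel are plain means.
    err = [p - g for p, g in zip(pred, gt)]
    log_err = [math.log(p) - math.log(g) for p, g in zip(pred, gt)]
    ratios = [max(p / g, g / p) for p, g in zip(pred, gt)]
    return {
        "rmse": math.sqrt(_mean([e * e for e in err], weights)),
        "rmsle": math.sqrt(_mean([e * e for e in log_err], weights)),
        "absrel": _mean([abs(e) / g for e, g in zip(err, gt)]),
        "sqrel": _mean([e * e / g for e, g in zip(err, gt)]),
        # Fraction of pixels whose depth ratio is below each threshold (delta).
        "delta": [_mean([1.0 if r < t else 0.0 for r in ratios], weights) for t in thresholds],
    }


def normal_metrics(
    pred_n: Sequence[Vec3],
    gt_n: Sequence[Vec3],
    thresholds_deg: Sequence[float] = (11.25, 22.5, 30.0),
) -> Dict[str, object]:
    # pred_n/gt_n: unit surface normals; error is the angle between them in degrees.
    angles = []
    for a, b in zip(pred_n, gt_n):
        dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))  # clamp for acos
        angles.append(math.degrees(math.acos(dot)))
    return {
        "rmse_deg": math.sqrt(_mean([x * x for x in angles])),
        # Fraction of pixels whose angular error is below each threshold (alpha).
        "alpha": [_mean([1.0 if x < t else 0.0 for x in angles]) for t in thresholds_deg],
    }
```

For example, `depth_metrics([2.0, 4.0], [2.0, 2.0])` gives an AbsRel of 0.5 and a $\delta < 1.25$ accuracy of 0.5, since one of the two pixels is off by a factor of 2.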

By disentangling generalization and assessing all depth properties, Pano3D aspires to drive progress in benchmarking 360° depth estimation.

Using Pano3D to search for a solid baseline reveals the benefits of exploiting complementary error terms, adding encoder-decoder skip connections, and using photometric augmentations.

- Web Demo

- Data Download

- Loader & Splits

- Model Code & Model Weights

- Model Serve Code

- Model Hub Code

- Metrics Code

A publicly hosted demo of the baseline models can be found here. Using the web app, you can upload a panorama and download a 3D mesh of the scene reconstructed from the estimated depth map.

Note that, due to caching issues on the external host, you may need to clear your browser's cache between runs for the 3D models to update.
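The web app's exact depth-to-mesh pipeline is not documented here. As a hedged sketch of the general idea, an equirectangular depth map can be lifted to 3D by mapping each pixel to a longitude/latitude direction and scaling it by its depth, then connecting neighboring pixels into triangles. Assumptions: depth values are radial distances from the camera center, the axes follow a y-up, z-forward convention, and `equirect_to_points` and `grid_faces` are hypothetical names, not functions from the project's code.

```python
import math
from typing import List, Sequence, Tuple


def equirect_to_points(depth: Sequence[float], height: int, width: int) -> List[Tuple[float, float, float]]:
    # depth: flat row-major list of height*width values, assumed to be the radial
    # distance from the camera center along each pixel's viewing ray.
    points = []
    for row in range(height):
        # Latitude at pixel centers: near +pi/2 at the top row, near -pi/2 at the bottom.
        lat = math.pi * (0.5 - (row + 0.5) / height)
        for col in range(width):
            # Longitude at pixel centers: from near -pi (left edge) to near +pi (right edge).
            lon = 2.0 * math.pi * ((col + 0.5) / width - 0.5)
            r = depth[row * width + col]
            # Assumed convention: y points up, z points forward at the image center.
            points.append((
                r * math.cos(lat) * math.sin(lon),
                r * math.sin(lat),
                r * math.cos(lat) * math.cos(lon),
            ))
    return points


def grid_faces(height: int, width: int) -> List[Tuple[int, int, int]]:
    # Two triangles per pixel-grid cell; columns wrap around so the 360° seam is closed.
    faces = []
    for row in range(height - 1):
        for col in range(width):
            nxt = (col + 1) % width
            a, b = row * width + col, row * width + nxt
            c, d = (row + 1) * width + col, (row + 1) * width + nxt
            faces.append((a, c, b))
            faces.append((b, c, d))
    return faces
```

A practical reconstruction would typically also drop triangles that span large depth discontinuities, which this sketch does not do.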

The model's code and weights are also available in our demo.

To download the data, follow the instructions at vcl3d.github.io/Pano3D/download/.

Please note that getting access to the data download links is a two-step process, because the dataset is a derivative work and requires compliance with the original datasets' terms of use:

- You first need to fill in this Google Form.

- Then, request access at each of the Zenodo repositories corresponding to the dataset partitions you need:

  - Matterport3D Train & Test (w/ Filmic) High Resolution (1024 x 512)
  - GibsonV2 Full (w/o normals) High Resolution (1024 x 512)
  - GibsonV2 Tiny, Medium & Fullplus (w/o normals) High Resolution (1024 x 512)
  - GibsonV2 Tiny & Fullplus Filmic High Resolution (1024 x 512)
  - Matterport3D Train & Test (w/ Filmic) Low Resolution (512 x 256)
  - GibsonV2 Full Low Resolution (512 x 256)
  - GibsonV2 Tiny, Medium & Fullplus (w/ Filmic) Low Resolution (512 x 256)

Once both steps are completed, you will soon receive the download links for each requested dataset partition.