BEVSimDet: Simulated Multi-modal Distillation in Bird’s-Eye View for Multi-view 3D Object Detection

This is the official repository of the paper BEVSimDet: Simulated Multi-modal Distillation in Bird’s-Eye View for Multi-view 3D Object Detection.

Haimei Zhao, Qiming Zhang, Shanshan Zhao, Jing Zhang, and Dacheng Tao

News | Abstract | Method | Results | Preparation | Code | Statement

News

- (2023/3/29) BEVSimDet is released on arXiv.

Other applications of ViTAE Transformer include: image classification | object detection | semantic segmentation | pose estimation | remote sensing | image matting | scene text spotting

Abstract

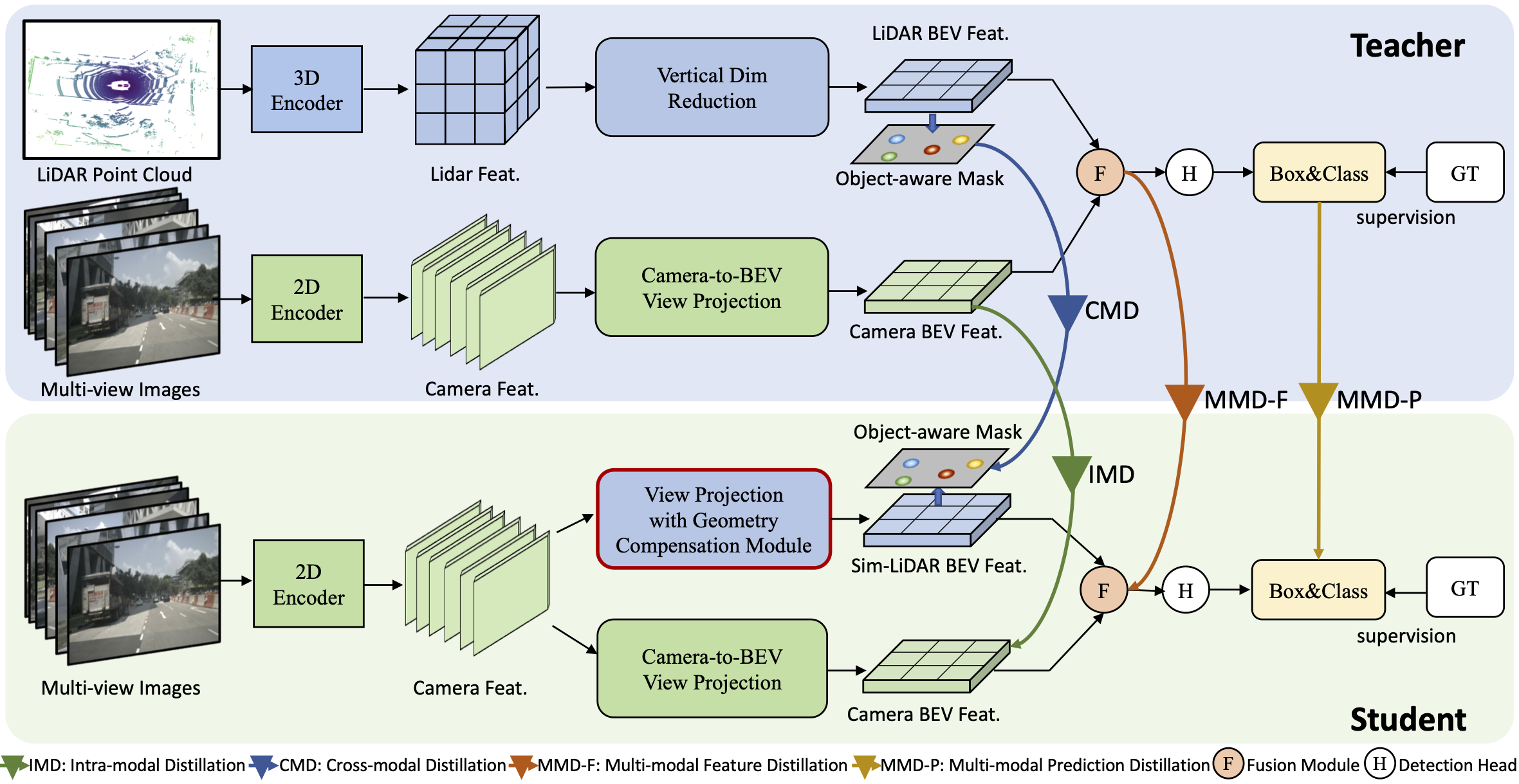

Multi-view camera-based 3D object detection has gained popularity due to its low cost. But accurately inferring 3D geometry solely from camera data remains challenging, which impacts model performance. One promising approach to address this issue is to distill precise 3D geometry knowledge from LiDAR data. However, transferring knowledge between different sensor modalities is hindered by the significant modality gap. In this paper, we approach this challenge from the perspective of both architecture design and knowledge distillation and present a new simulated multi-modal 3D object detection method named BEVSimDet. We first introduce a novel framework that includes a LiDAR and camera fusion-based teacher and a simulated multi-modal student, where the student simulates multi-modal features with image-only input. To facilitate effective distillation, we propose a simulated multi-modal distillation scheme that supports intra-modal, cross-modal, and multi-modal distillation simultaneously. By combining them together, BEVSimDet can learn better feature representations for 3D object detection while enjoying cost-effective camera-only deployment. Experimental results on the challenging nuScenes benchmark demonstrate the effectiveness and superiority of BEVSimDet over recent representative methods.
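To make the idea of combining intra-modal, cross-modal, and multi-modal distillation more concrete, here is a minimal sketch in plain Python. It is not the released implementation: the use of mean squared error, the feature pairings (student camera vs. teacher camera, student simulated-LiDAR vs. teacher LiDAR, student fused vs. teacher fused), the dictionary keys, and the loss weights are all assumptions made for illustration.

```python
def mse(a, b):
    """Mean squared error between two equally sized flat feature lists."""
    if len(a) != len(b):
        raise ValueError("feature lists must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def distillation_loss(student, teacher, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of three feature-matching terms.

    All pairings and keys below are hypothetical, chosen only to illustrate
    how three distillation terms could be combined into one objective.
    """
    w_intra, w_cross, w_multi = weights
    terms = {
        # Intra-modal: camera branch of the student vs. camera branch of the teacher.
        "intra": mse(student["camera"], teacher["camera"]),
        # Cross-modal: simulated LiDAR features of the student vs. real LiDAR features.
        "cross": mse(student["simulated_lidar"], teacher["lidar"]),
        # Multi-modal: fused features of the student vs. fused features of the teacher.
        "multi": mse(student["fused"], teacher["fused"]),
    }
    terms["total"] = (
        w_intra * terms["intra"]
        + w_cross * terms["cross"]
        + w_multi * terms["multi"]
    )
    return terms


# Toy flattened BEV features; real features would be multi-dimensional tensors.
student = {"camera": [1.0, 2.0], "simulated_lidar": [0.0, 0.0], "fused": [1.0, 1.0]}
teacher = {"camera": [1.0, 2.0], "lidar": [1.0, 1.0], "fused": [1.0, 3.0]}
losses = distillation_loss(student, teacher)
```

In this sketch the teacher features act as fixed targets, and only the student would be updated during training. At inference time only the image-fed student is needed, which matches the camera-only deployment described above.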

Method

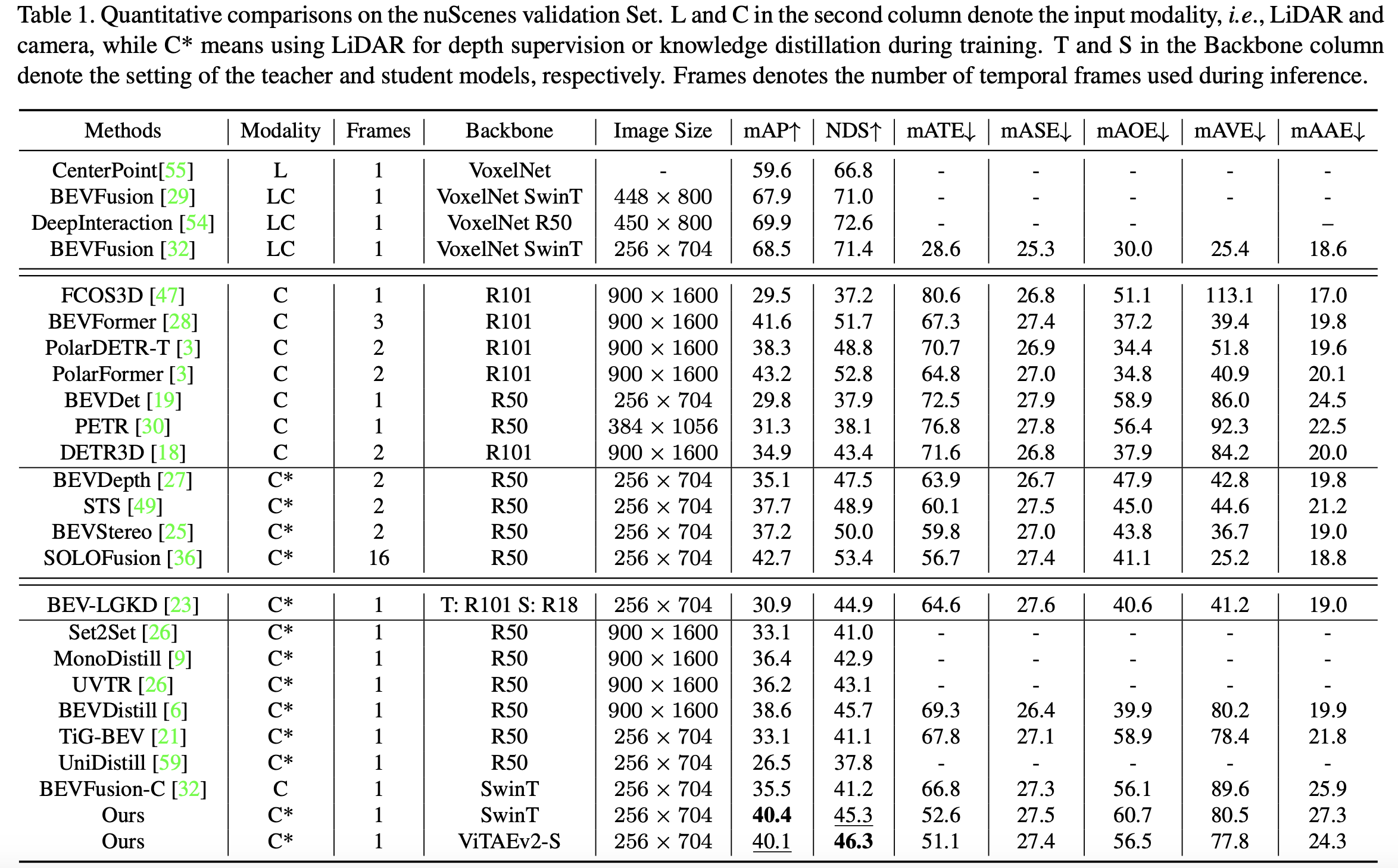

Results

Quantitative results on the nuScenes validation set

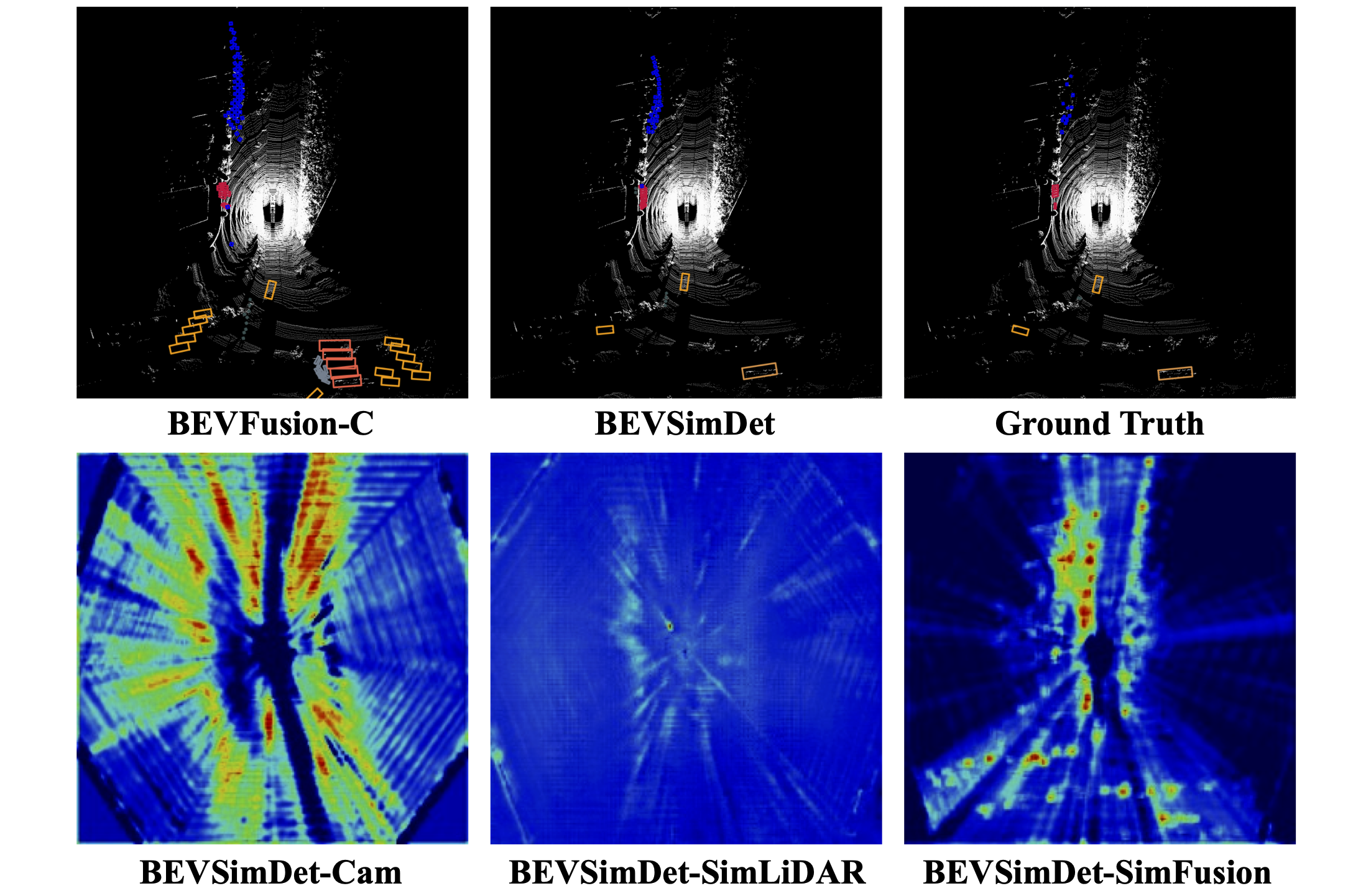

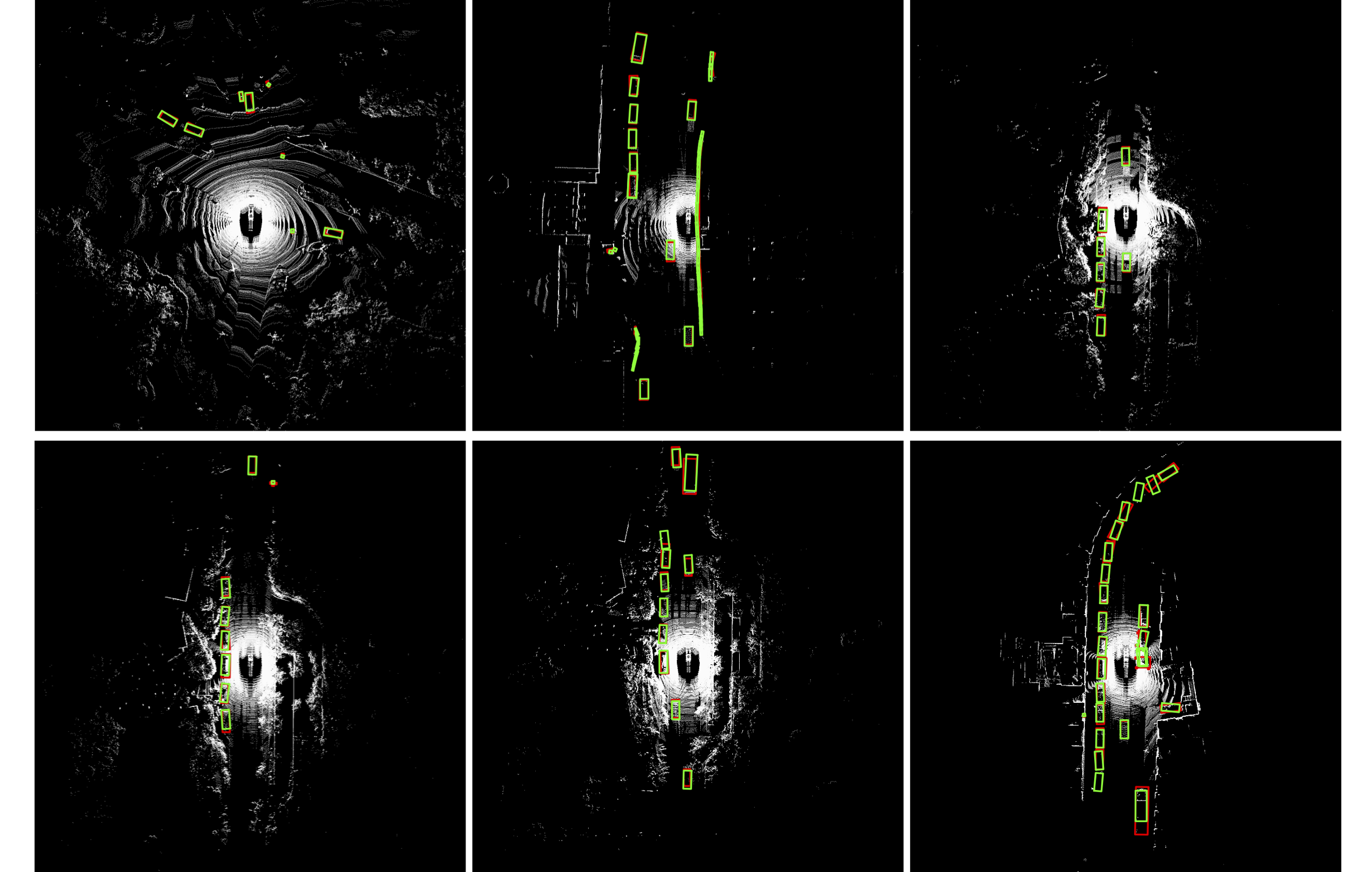

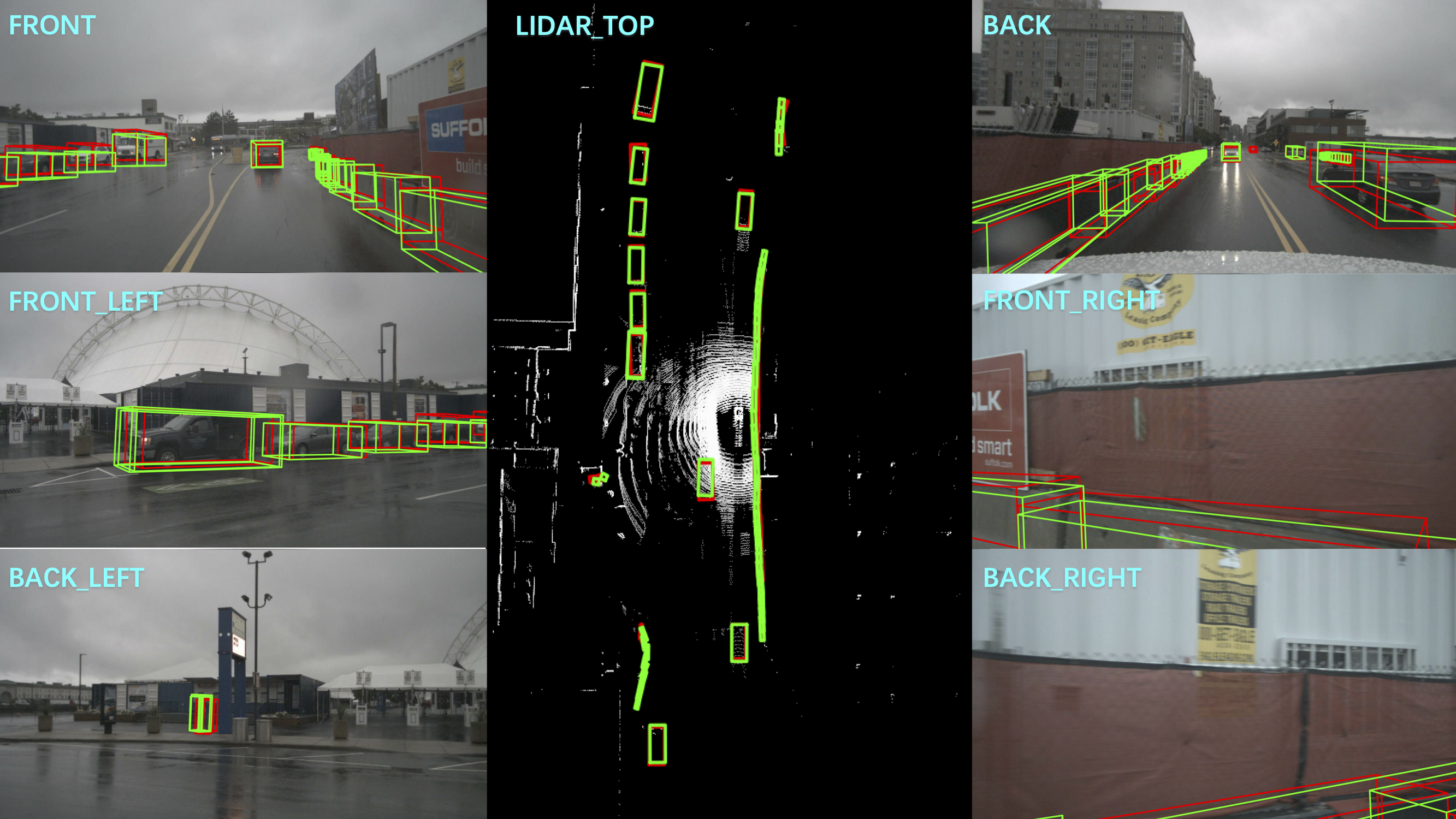

Qualitative results

Preparation

Prerequisites

The code is built with the following libraries:

- Python >= 3.8, <3.9

- OpenMPI = 4.0.4 and mpi4py = 3.0.3 (Needed for torchpack)

- Pillow = 8.4.0 (see here)

- PyTorch >= 1.9, <= 1.10.2

- tqdm

- torchpack

- mmcv = 1.4.0

- mmdetection = 2.20.0

- nuscenes-devkit
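The version constraints in the list above can be checked with a short script before installing the codebase. This is a sketch, not part of the repository: the distribution names `mmcv`, `mmdet`, and `torch` are assumptions about how the packages are registered in your environment (for example, mmcv is sometimes installed as `mmcv-full`), and OpenMPI is not covered because it is not a Python package.

```python
import re
import sys
from importlib import metadata

# Exact pins taken from the prerequisites list; distribution names are assumed.
EXACT = {"Pillow": "8.4.0", "mpi4py": "3.0.3", "mmcv": "1.4.0", "mmdet": "2.20.0"}


def parse_version(text):
    """Turn '1.10.2+cu113' into (1, 10, 2); stops at the first non-numeric part."""
    parts = []
    for piece in text.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def find_problems(installed, python_version):
    """Return human-readable messages for every unmet constraint."""
    problems = []
    if not ((3, 8) <= tuple(python_version[:2]) < (3, 9)):
        problems.append("Python must be >= 3.8 and < 3.9")
    for name, wanted in EXACT.items():
        found = installed.get(name)
        if found is None or parse_version(found) != parse_version(wanted):
            problems.append(f"{name} must be {wanted}, found {found}")
    torch = installed.get("torch")
    if torch is None or not ((1, 9) <= parse_version(torch) <= (1, 10, 2)):
        problems.append(f"torch must be >= 1.9 and <= 1.10.2, found {torch}")
    return problems


def installed_versions(names):
    """Look up installed versions, skipping packages that are not installed."""
    found = {}
    for name in names:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            pass
    return found


if __name__ == "__main__":
    current = installed_versions(list(EXACT) + ["torch"])
    for message in find_problems(current, sys.version_info):
        print(message)
```

An empty output means every checked constraint is satisfied. Packages without a pinned version in the list (tqdm, torchpack, the nuScenes devkit) are intentionally not checked.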

After installing these dependencies, please run this command to install the codebase:

python setup.py develop

Data Preparation

nuScenes

Please follow the instructions from here to download and preprocess the nuScenes dataset. Remember to download both the detection dataset and the map extension (for BEV map segmentation). After data preparation, you should see the following directory structure (as indicated in mmdetection3d):

mmdetection3d

├── mmdet3d

├── tools

├── configs

├── data

│ ├── nuscenes

│ │ ├── maps

│ │ ├── samples

│ │ ├── sweeps

│ │ ├── v1.0-test

│ │ ├── v1.0-trainval

│ │ ├── nuscenes_database

│ │ ├── nuscenes_infos_train.pkl

│ │ ├── nuscenes_infos_val.pkl

│ │ ├── nuscenes_infos_test.pkl

│ │ ├── nuscenes_dbinfos_train.pkl
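A quick way to confirm that data preparation produced the layout above is to check for each expected entry under `data/nuscenes`. The following is a minimal sketch; the function name `missing_entries` is hypothetical and the script is not part of the repository.

```python
from pathlib import Path

# Entries expected under data/nuscenes, copied from the directory tree above.
EXPECTED = [
    "maps",
    "samples",
    "sweeps",
    "v1.0-test",
    "v1.0-trainval",
    "nuscenes_database",
    "nuscenes_infos_train.pkl",
    "nuscenes_infos_val.pkl",
    "nuscenes_infos_test.pkl",
    "nuscenes_dbinfos_train.pkl",
]


def missing_entries(root):
    """Return the expected files or folders that do not exist under root."""
    root = Path(root)
    return [name for name in EXPECTED if not (root / name).exists()]


if __name__ == "__main__":
    # Run from the repository root so that the relative path resolves.
    missing = missing_entries("data/nuscenes")
    if missing:
        print("Missing entries:", ", ".join(missing))
    else:
        print("nuScenes directory layout looks complete.")
```

The check only verifies that the entries exist. It does not validate the contents of the `.pkl` info files or the completeness of the raw data.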

Code

Code coming soon...

Statement

@article{zhao2023bevsimdet,

title={BEVSimDet: Simulated Multi-modal Distillation in Bird's-Eye View for Multi-view 3D Object Detection},

author={Zhao, Haimei and Zhang, Qiming and Zhao, Shanshan and Zhang, Jing and Tao, Dacheng},

journal={arXiv preprint arXiv:2303.16818},

year={2023}

}