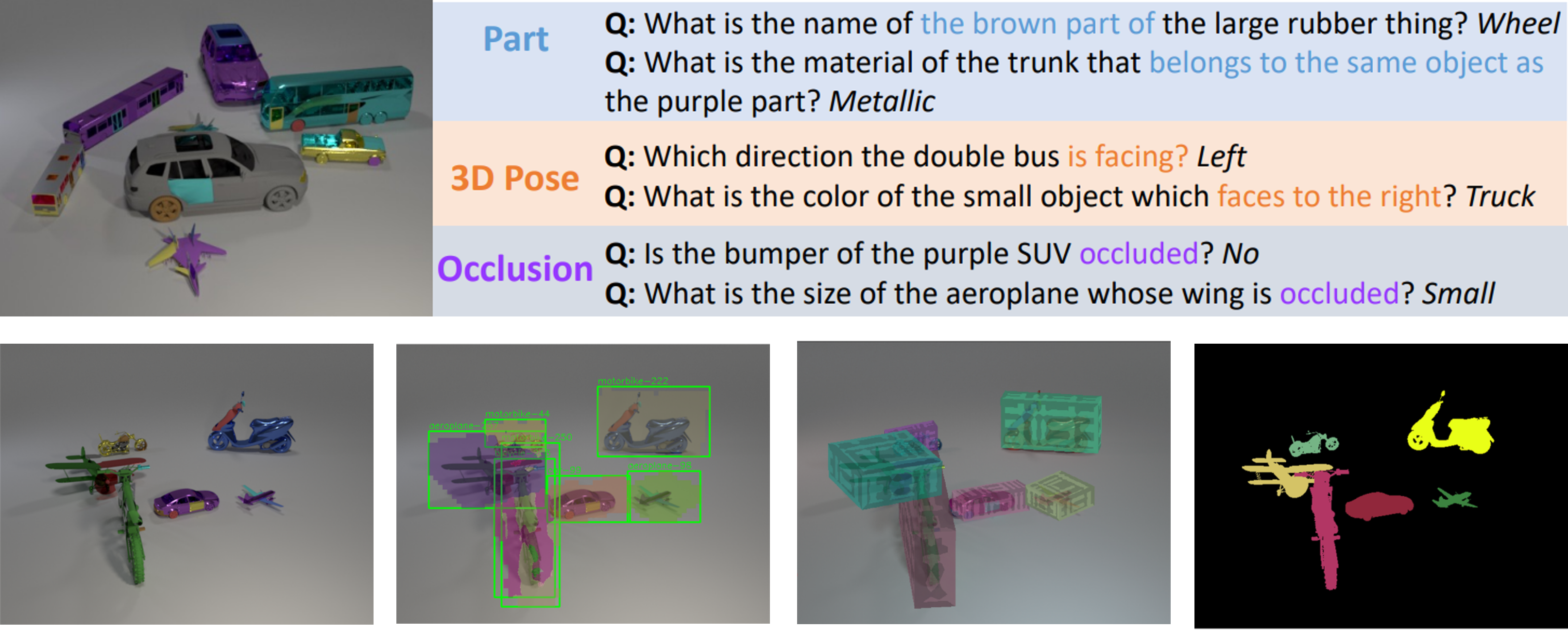

This repository contains the implementation of the NeurIPS'23 paper "3D-Aware Visual Question Answering about Parts, Poses and Occlusions". It consists of two parts:

- **Super-CLEVR-3D**: a compositional reasoning dataset that contains questions about object parts, their 3D poses, and occlusions. → [`./superclevr-3D-question`](./superclevr-3D-question)
- **PO3D-VQA**: a 3D-aware VQA model that combines 3D generative representations of objects for robust visual recognition with probabilistic neural symbolic program execution for reasoning. → [`./PO3D-VQA`](./PO3D-VQA)

```bibtex
@article{wang20233d,
  title={3D-Aware Visual Question Answering about Parts, Poses and Occlusions},
  author={Wang, Xingrui and Ma, Wufei and Li, Zhuowan and Kortylewski, Adam and Yuille, Alan},
  journal={arXiv preprint arXiv:2310.17914},
  year={2023}
}
```