[CVPR2020] BiFuse: Monocular 360 Depth Estimation via Bi-Projection Fusion

[Paper] [Project Page]

Getting Started

Requirements

- Python (tested on 3.7.4)

- PyTorch (tested on 1.4.0)

- Other dependencies

pip install -r requirements.txt

Usage

First clone our repo:

git clone https://github.com/Yeh-yu-hsuan/BiFuse.git

cd BiFuse

Step 1

Download our pretrained model and create a save folder:

mkdir save

Then put BiFuse_Pretrained.pkl into the save folder.

Step 2

The My_Test_Data folder already contains an example RGB image, Sample.jpg.

To test your own data, put your RGB images into the My_Test_Data folder and run:

python main.py --path './My_Test_Data'

Arguments:

--path: path to the folder containing your test images.

--nocrop: pass this flag if you don't want the original images to be cropped.

After testing, you can find the results in the My_Test_Result folder.
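Before running main.py, it can help to confirm that the folder layout from the steps above is in place. The following is a minimal sketch, not part of the repository: the helper name check_setup and the list of image suffixes are assumptions made for illustration, since the formats accepted by main.py are not documented here.

```python
from pathlib import Path

# Assumption: the image formats main.py accepts are not documented; adjust as needed.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def check_setup(repo_root=".", data_dir="My_Test_Data"):
    """Return a list of problems; an empty list means the layout looks ready."""
    root = Path(repo_root)
    problems = []

    # Step 1: the pretrained model should sit in the save folder.
    weights = root / "save" / "BiFuse_Pretrained.pkl"
    if not weights.is_file():
        problems.append(f"missing pretrained model: {weights}")

    # Step 2: the test folder should contain at least one image.
    data = root / data_dir
    images = []
    if data.is_dir():
        images = [p for p in data.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES]
    if not images:
        problems.append(f"no images found in: {data}")

    return problems


if __name__ == "__main__":
    for problem in check_setup():
        print(problem)
```

Running the script from the repository root prints one line per problem and nothing if both checks pass.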

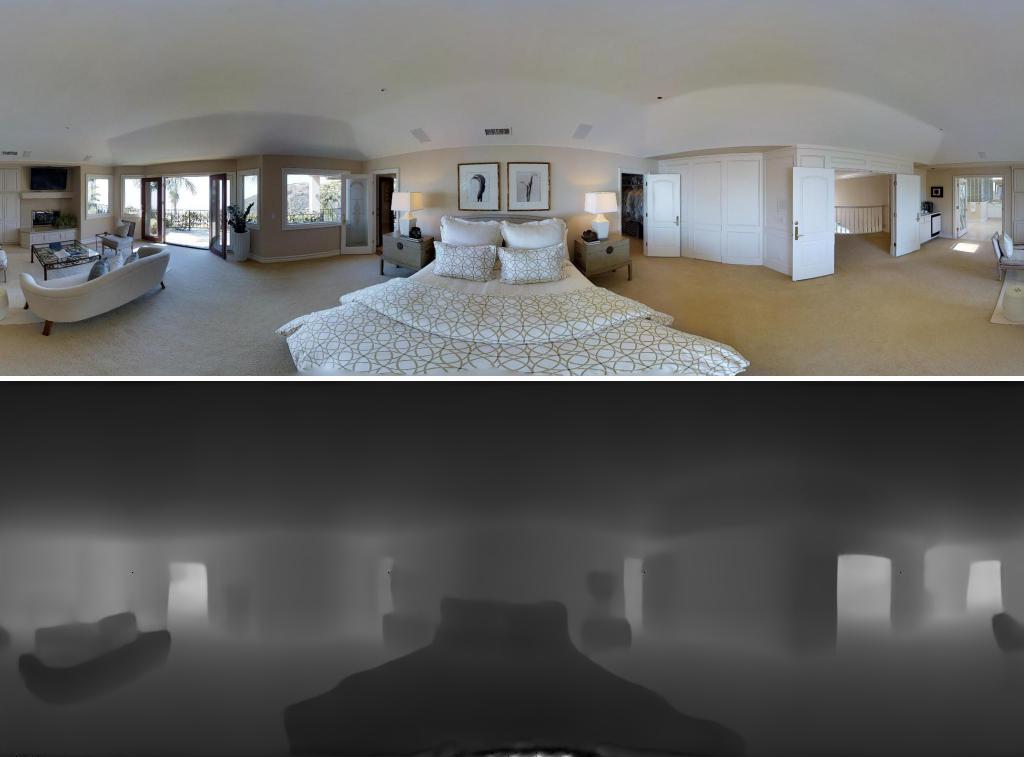

- Sample results are shown above.

The results contain Combine.jpg, Depth.jpg, and Data.npy.

Combine.jpg is the RGB image concatenated with its corresponding depth map prediction.

Depth.jpg is the depth map prediction only.

Data.npy contains the raw data: both the RGB image and the predicted depth values.
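To work with Data.npy in your own code, you can load it with NumPy. The exact layout of the file is not described here, so the sketch below makes no assumption about key names: it loads the file, unwraps it if it turns out to be a pickled Python dict (which is how np.save stores a dict), and reports the shape and dtype of what it finds. The helper names load_result and describe are hypothetical.

```python
import numpy as np


def load_result(path):
    """Load a saved result file.

    allow_pickle=True is needed if the file holds a Python object such as a
    dict; only use it on files you trust.
    """
    data = np.load(path, allow_pickle=True)
    # np.save wraps a dict in a 0-d object array; unwrap it if so.
    if data.dtype == object and data.shape == ():
        data = data.item()
    return data


def describe(data):
    """Map each entry to its (shape, dtype) so the layout can be inspected."""
    if isinstance(data, dict):
        return {k: (np.asarray(v).shape, np.asarray(v).dtype) for k, v in data.items()}
    return {"array": (data.shape, data.dtype)}


if __name__ == "__main__":
    import sys

    for name, (shape, dtype) in describe(load_result(sys.argv[1])).items():
        print(name, shape, dtype)
```

Saved as a script (the file name is up to you), it takes the path to a Data.npy file as its only argument and prints one line per entry.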

Point Cloud Visualization

If you want to visualize the predicted depth as a point cloud, we provide a script to render it. Have a look at tools/.
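For readers who want to see the underlying geometry, an equirectangular depth map can be back-projected to 3D points by treating each pixel as a direction on the unit sphere and scaling it by the depth. The following is a generic sketch of that idea, not the script in tools/. It assumes the depth value is the distance along the viewing ray and uses an arbitrary axis convention (x right, y up, z forward); the function name equirect_depth_to_points is hypothetical.

```python
import numpy as np


def equirect_depth_to_points(depth):
    """Back-project an equirectangular depth map (H, W) to an (H*W, 3) array.

    Assumptions: depth is the distance along each viewing ray, and the axes
    are x right, y up, z forward. Other conventions are equally valid.
    """
    depth = np.asarray(depth, dtype=np.float64)
    h, w = depth.shape

    # Pixel centres mapped to longitude in (-pi, pi) and latitude in (-pi/2, pi/2).
    lon = ((np.arange(w) + 0.5) / w - 0.5) * 2.0 * np.pi
    lat = (0.5 - (np.arange(h) + 0.5) / h) * np.pi
    lon, lat = np.meshgrid(lon, lat)

    # Unit ray direction for every pixel.
    x = np.cos(lat) * np.sin(lon)
    y = np.sin(lat)
    z = np.cos(lat) * np.cos(lon)
    rays = np.stack([x, y, z], axis=-1)

    # Scale each ray by its depth and flatten to a point list.
    return (rays * depth[..., None]).reshape(-1, 3)
```

With a constant depth map every point lies on a sphere of that radius, which is a quick sanity check for the convention in use.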

License

This work is licensed under the MIT License. See LICENSE for details.

If you find our code/models useful, please consider citing our paper:

@InProceedings{BiFuse20,

author = {Wang, Fu-En and Yeh, Yu-Hsuan and Sun, Min and Chiu, Wei-Chen and Tsai, Yi-Hsuan},

title = {BiFuse: Monocular 360 Depth Estimation via Bi-Projection Fusion},

booktitle = {The IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2020}

}