Author: Guan Weipeng, Chen Peiyu

This repository collects the datasets used in our papers and summarizes our work in the field of event-based vision. We hope it contributes to the development of event-based vision in robotics.

If you find this repository helpful in your research, a simple star or a citation of our work would be the best affirmation for us. 😊

- Data Sequence for Event-based Stereo Visual-inertial Odometry

- Data Sequence for Event-based Monocular Visual-inertial Odometry

- Our Works in Event-based Vision

- Using Our Methods as Comparison

- Recommendation

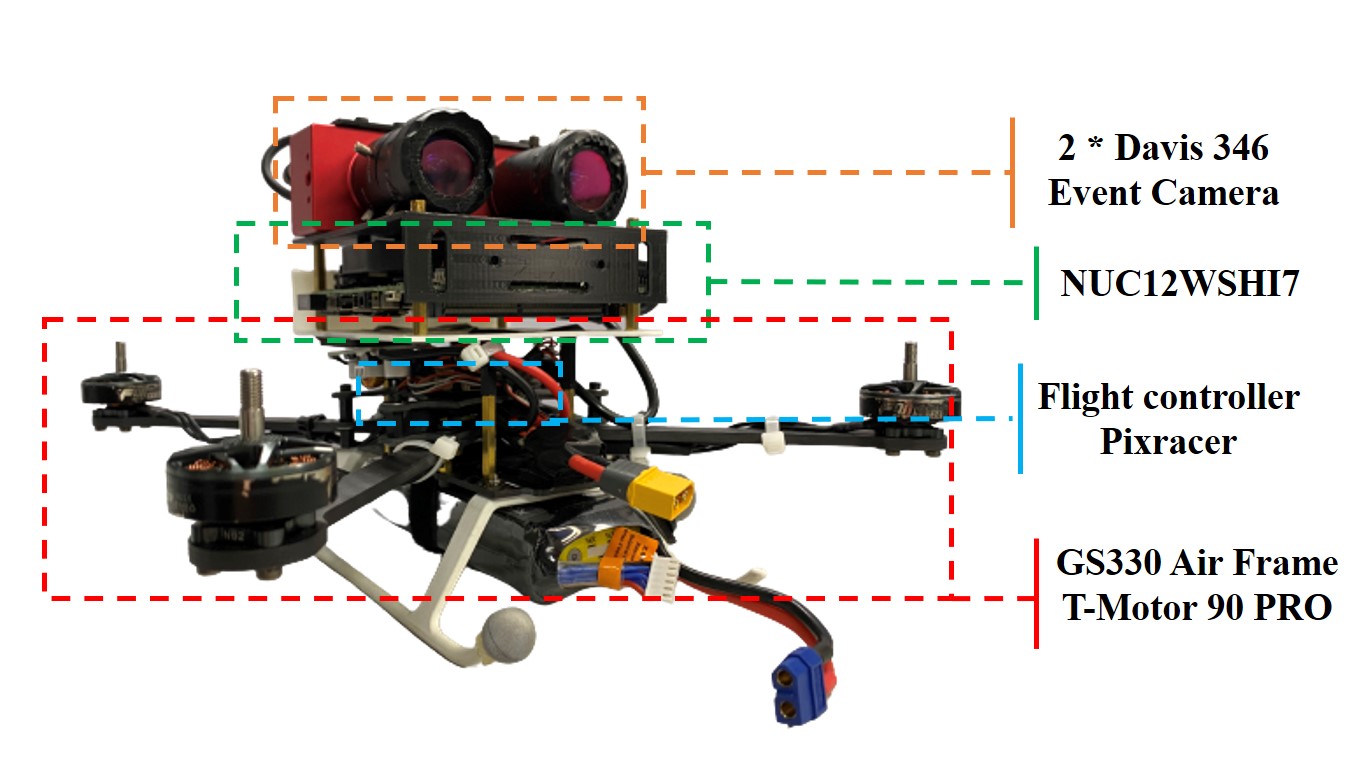

This dataset contains stereo event data at 60Hz and stereo image frames at 30Hz with a resolution of 346 × 260, as well as IMU data at 1000Hz. Timestamps between all sensors are synchronized in hardware. We also provide ground-truth poses from a VICON motion capture system at 50Hz at the beginning and end of each sequence, which can be used for trajectory evaluation. To reduce interference from the motion capture system's infrared light on the event camera, we added an infrared filter on the lens surface of the DAVIS346 camera. Note that this may degrade perception for both the event and image cameras during evaluation, but it also makes our dataset more challenging for purely image-based methods.
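To sanity-check the rates listed above after downloading a sequence, you can estimate each topic's average publishing rate from its message timestamps. For event topics this is the rate of event-array messages, not of individual events. The minimal sketch below works on a plain list of timestamps in seconds; extracting them from the rosbag (e.g. with the ROS1 `rosbag` Python API) is left out, and the helper names and the 10% tolerance are our own illustrative choices.

```python
from statistics import median


def estimate_rate_hz(timestamps):
    """Estimate a topic's publishing rate (Hz) from sorted timestamps in seconds.

    The median inter-message interval is used so that a few dropped or
    delayed messages do not skew the estimate.
    """
    if len(timestamps) < 2:
        raise ValueError("need at least two timestamps")
    intervals = [b - a for a, b in zip(timestamps, timestamps[1:])]
    if any(dt <= 0 for dt in intervals):
        raise ValueError("timestamps must be strictly increasing")
    return 1.0 / median(intervals)


def matches_nominal(timestamps, nominal_hz, tolerance=0.1):
    """True if the estimated rate is within a fractional `tolerance` of nominal_hz."""
    return abs(estimate_rate_hz(timestamps) - nominal_hz) <= tolerance * nominal_hz


# Synthetic timestamps: 1 s of IMU data at 1000Hz and of image frames at 30Hz.
imu_stamps = [i / 1000.0 for i in range(1000)]
frame_stamps = [i / 30.0 for i in range(30)]
print(round(estimate_rate_hz(imu_stamps)))  # 1000
print(matches_nominal(frame_stamps, 30.0))  # True
```

The median is used instead of the mean so that a single long gap (e.g. a short recording hiccup) does not pull the estimate far from the nominal rate.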

This is a very challenging dataset for event-based VO/VIO, featuring aggressive motion and HDR scenarios. EVO, ESVO, and Ultimate SLAM fail in most of the sequences. Since we consider tuning parameters for each sequence infeasible in practice, we suggest that users use the same set of parameters across all sequences during evaluation. We hope our dataset helps push the boundary of future research on stereo event-based VO/VIO algorithms, especially ones that are useful and can be applied in practice.

- The configuration file is in link

- The DAVIS comprises an image camera and an event camera sharing the same pixel array, so calibration can be done with standard image-based methods such as Kalibr

- We also provide the rosbag for stereo camera and IMU calibration: Calibration_bag.

- Event Camera Calibration using Kalibr and imu_utils

We thank rpg_dvs_ros for the instructions on the event camera driver.

We added hardware synchronization for the stereo setup; the source code is available in link. After installing the driver, you can run the following command to start your stereo event camera:

```bash
roslaunch stereo_davis_open.launch
```

Tips:

- Adjust the camera lens, e.g., the focal length and aperture.

- Use filters to avoid interference from the infrared light of the motion capture system.

- For the DVXplorer, set the sensitivity of event generation, e.g., bias_sensitivity.

- Visualize the event streams to check whether they resemble the edge map of the test environment, and then fine-tune the settings.

Otherwise, the event sensor may output mostly noise and become useless, as in the M2DGR dataset.
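A simple way to perform the visual check from the tips above is to accumulate a short time window of events into a per-pixel count image: with reasonable lens and bias settings, the active pixels should trace the scene edges, while a poorly tuned sensor gives a salt-and-pepper pattern. The sketch below assumes events are available as `(x, y, t, polarity)` tuples (mirroring the `x`, `y`, `ts`, `polarity` fields of `dvs_msgs/Event`); the function names and the ASCII rendering are illustrative only, and for real inspection you would display the array with an image library.

```python
def accumulate_events(events, width, height, t_start, t_end):
    """Count events per pixel within [t_start, t_end), ignoring polarity.

    events: iterable of (x, y, t, polarity) tuples, t in seconds.
    Returns a height x width list of lists of event counts.
    """
    image = [[0] * width for _ in range(height)]
    for x, y, t, _polarity in events:
        if t_start <= t < t_end and 0 <= x < width and 0 <= y < height:
            image[y][x] += 1
    return image


def to_ascii(image, threshold=1):
    """Render pixels with at least `threshold` events as '#', others as '.'."""
    return "\n".join(
        "".join("#" if count >= threshold else "." for count in row) for row in image
    )


# Synthetic events along a vertical edge at x = 2 on a tiny 5x3 sensor,
# plus one event outside the time window that must be ignored.
events = [(2, y, 0.001 * y, True) for y in range(3)] + [(0, 0, 0.5, False)]
img = accumulate_events(events, width=5, height=3, t_start=0.0, t_end=0.1)
print(to_ascii(img))
# ..#..
# ..#..
# ..#..
```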

In our VICON room:

| Sequence Name | Collection Date | Total Size | Duration | Features | Rosbag |
|---|---|---|---|---|---|
| hku_agg_translation | 2022-10 | 3.63 GB | --- | aggressive | Rosbag |
| hku_agg_rotation | 2022-10 | 3.70 GB | --- | aggressive | Rosbag |
| hku_agg_flip | 2022-10 | 3.71 GB | --- | aggressive | Rosbag |
| hku_agg_walk | 2022-10 | 4.52 GB | --- | aggressive | Rosbag |
| hku_hdr_circle | 2022-10 | 2.91 GB | --- | hdr | Rosbag |
| hku_hdr_slow | 2022-10 | 4.61 GB | --- | hdr | Rosbag |
| hku_hdr_tran_rota | 2022-10 | 3.37 GB | --- | aggressive & hdr | Rosbag |
| hku_hdr_agg | 2022-10 | 4.43 GB | --- | aggressive & hdr | Rosbag |
| hku_dark_normal | 2022-10 | 4.24 GB | --- | dark & hdr | Rosbag |

Outdoor large-scale (no ground truth):

The path length of this sequence is about 1866 m, covering an area of about 310 m in length, 170 m in width, and 55 m in height change, from Loke Yew Hall to Eliot Hall and back to Loke Yew Hall on the HKU campus. It makes a nice tour if you are visiting HKU 😍 Try it!
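Since this sequence has no ground truth, a coarse sanity check for an estimated trajectory is to compare its total path length with the roughly 1866 m quoted above and, because the route returns to Loke Yew Hall, to look at how far the last estimated position lies from the first. The sketch below assumes you already have the estimated positions as `(x, y, z)` tuples in metres; both helper names are hypothetical, and the end-to-start distance is only a rough drift indicator, since the recording may not end at exactly the starting point.

```python
import math


def path_length(positions):
    """Sum of Euclidean distances between consecutive (x, y, z) positions, in metres."""
    return sum(math.dist(p, q) for p, q in zip(positions, positions[1:]))


def end_to_start_distance(positions):
    """Distance between the first and last positions (rough drift for a loop route)."""
    return math.dist(positions[0], positions[-1])


# Toy trajectory: a 3-4-5 horizontal leg followed by a 10 m climb.
traj = [(0, 0, 0), (3, 4, 0), (3, 4, 10)]
print(path_length(traj))  # 15.0
```

`math.dist` requires Python 3.8 or later.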

| Sequence Name | Collection Date | Total Size | Duration | Features | Rosbag |
|---|---|---|---|---|---|
| hku_outdoor_large-scale | 2022-11 | 67.4 GB | 34.9 min | Indoor+outdoor; large-scale | Rosbag |

Modified VECtor Dataset:

The VECtor dataset covers the full spectrum of motion dynamics, environment complexities, and illumination conditions for both small- and large-scale scenarios. We changed the publishing frequency of event_left and event_right to 60Hz and converted the message format from "prophesee_event_msgs/EventArray" to "dvs_msgs/EventArray", so that each event message carries more events and effective point and line features can be extracted from the event stream. We release this modified VECtor dataset to facilitate research on event cameras. For convenience, we also merged the individual rosbags of the different sensors (left_camera, right_camera, left_event, right_event, imu, groundtruth) into a single rosbag per sequence.
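Re-packaging an event stream at 60Hz essentially means grouping the events into consecutive time windows of 1/60 s, each window becoming one event-array message. The following is a minimal sketch of that grouping on plain `(x, y, t, polarity)` tuples under our own assumptions (windows aligned to the first event, empty windows skipped); it is not the conversion script we used, and reading `prophesee_event_msgs/EventArray` or writing `dvs_msgs/EventArray` messages is omitted.

```python
def batch_events(events, rate_hz=60.0):
    """Split time-sorted events into consecutive windows of length 1 / rate_hz.

    events: list of (x, y, t, polarity) tuples sorted by t (seconds).
    Returns a list of (window_start_time, [events]) pairs; windows that
    contain no events are skipped.
    """
    if not events:
        return []
    period = 1.0 / rate_hz
    t0 = events[0][2]  # align windows to the first event timestamp
    batches = []
    current_idx = None
    for ev in events:
        idx = int((ev[2] - t0) // period)  # window this event falls into
        if idx != current_idx:
            batches.append((t0 + idx * period, []))
            current_idx = idx
        batches[-1][1].append(ev)
    return batches


# Synthetic stream with timestamps chosen away from window boundaries.
stream = [(0, 0, t, True) for t in (0.0, 0.004, 0.010, 0.020, 0.030, 0.040)]
batches = batch_events(stream, rate_hz=60.0)
print(len(batches), [len(b) for _, b in batches])  # 3 [3, 2, 1]
```

Whether empty windows should still produce (empty) messages depends on the downstream consumer; the sketch drops them for simplicity.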

| Sequence Name | Collection Date | Total Size | Duration | Features | Rosbag |
|---|---|---|---|---|---|
| board-slow | --- | 3.18 GB | --- | --- | Rosbag |
| corner-slow | --- | 3.51 GB | --- | --- | Rosbag |
| robot-normal | --- | 3.39 GB | --- | --- | Rosbag |
| robot-fast | --- | 4.23 GB | --- | --- | Rosbag |
| desk-normal | --- | 8.82 GB | --- | --- | Rosbag |
| desk-fast | --- | 10.9 GB | --- | --- | Rosbag |
| sofa-normal | --- | 10.8 GB | --- | --- | Rosbag |
| sofa-fast | --- | 6.7 GB | --- | --- | Rosbag |
| mountain-normal | --- | 10.9 GB | --- | --- | Rosbag |
| mountain-fast | --- | 16.6 GB | --- | --- | Rosbag |
| hdr-normal | --- | 7.73 GB | --- | --- | Rosbag |
| hdr-fast | --- | 13.1 GB | --- | --- | Rosbag |
| corridors-dolly | --- | 7.78 GB | --- | --- | Rosbag |
| corridors-walk | --- | 8.56 GB | --- | --- | Rosbag |
| school-dolly | --- | 12.0 GB | --- | --- | Rosbag |
| school-scooter | --- | 5.91 GB | --- | --- | Rosbag |
| units-dolly | --- | 18.5 GB | --- | --- | Rosbag |
| units-scooter | --- | 11.6 GB | --- | --- | Rosbag |

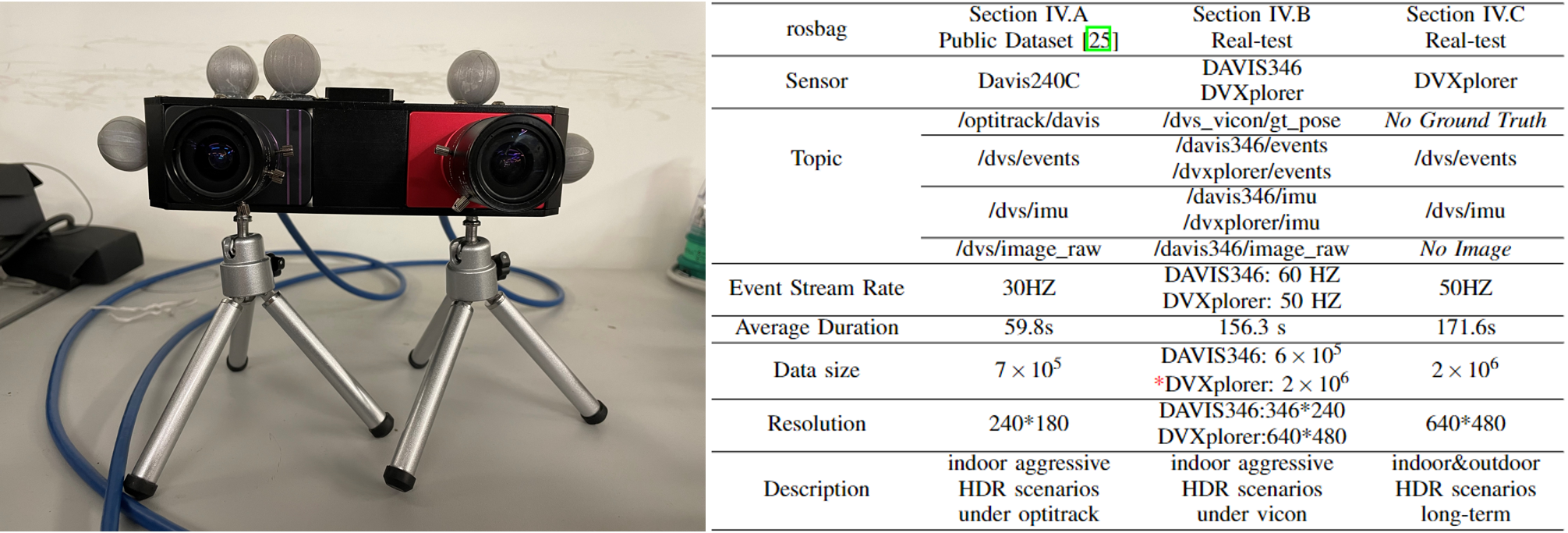

You can use these sequences to test your monocular EVIO with event cameras of different resolutions.

The DAVIS346 (346×260) and DVXplorer (640×480) are mounted together (shown in the figure) to facilitate comparison.

All sequences are recorded in HDR scenarios with very low illumination or strong illumination changes, produced by switching a strobe flash on and off.

We also provide indoor and outdoor large-scale sequences.

- The configuration file is in link

With VICON as ground truth:

| Sequence Name | Collection Date | Total Size | Duration | Features | Rosbag |
|---|---|---|---|---|---|
| vicon_aggressive_hdr | 2021-12 | 23.0 GB | --- | HDR, Aggressive Motion | Rosbag |
| vicon_dark1 | 2021-12 | 10.5 GB | --- | HDR | Rosbag |
| vicon_dark2 | 2021-12 | 16.6 GB | --- | HDR | Rosbag |
| vicon_darktolight1 | 2021-12 | 17.2 GB | --- | HDR | Rosbag |
| vicon_darktolight2 | 2021-12 | 14.4 GB | --- | HDR | Rosbag |
| vicon_hdr1 | 2021-12 | 13.7 GB | --- | HDR | Rosbag |
| vicon_hdr2 | 2021-12 | 16.9 GB | --- | HDR | Rosbag |
| vicon_hdr3 | 2021-12 | 11.0 GB | --- | HDR | Rosbag |
| vicon_hdr4 | 2021-12 | 19.6 GB | --- | HDR | Rosbag |
| vicon_lighttodark1 | 2021-12 | 17.0 GB | --- | HDR | Rosbag |
| vicon_lighttodark2 | 2021-12 | 12.0 GB | --- | HDR | Rosbag |

Indoor (no ground truth):

| Sequence Name | Collection Date | Total Size | Duration | Features | Rosbag |
|---|---|---|---|---|---|
| indoor_aggressive_hdr_1 | 2021-12 | 16.62 GB | --- | HDR, Aggressive Motion | Rosbag |
| indoor_aggressive_hdr_2 | 2021-12 | 15.66 GB | --- | HDR, Aggressive Motion | Rosbag |
| indoor_aggressive_test_1 | 2021-12 | 17.94 GB | --- | Aggressive Motion | Rosbag |
| indoor_aggressive_test_2 | 2021-12 | 8.385 GB | --- | Aggressive Motion | Rosbag |
| indoor_1 | 2021-12 | 3.45 GB | --- | --- | Rosbag |
| indoor_2 | 2021-12 | 5.31 GB | --- | --- | Rosbag |
| indoor_3 | 2021-12 | 5.28 GB | --- | --- | Rosbag |
| indoor_4 | 2021-12 | 6.72 GB | --- | --- | Rosbag |
| indoor_5 | 2021-12 | 13.79 GB | --- | --- | Rosbag |
| indoor_6 | 2021-12 | 20.39 GB | --- | --- | Rosbag |

Outdoor (no ground truth):

| Sequence Name | Collection Date | Total Size | Duration | Features | Rosbag |
|---|---|---|---|---|---|
| indoor_outdoor_1 | 2021-12 | 20.87 GB | --- | ****** | Rosbag |
| indoor_outdoor_2 | 2021-12 | 39.5 GB | --- | ****** | Rosbag |
| outdoor_1 | 2021-12 | 5.52 GB | --- | ****** | Rosbag |
| outdoor_2 | 2021-12 | 5.27 GB | --- | ****** | Rosbag |
| outdoor_3 | 2021-12 | 6.83 GB | --- | ****** | Rosbag |
| outdoor_4 | 2021-12 | 7.28 GB | --- | ****** | Rosbag |
| outdoor_5 | 2021-12 | 7.26 GB | --- | ****** | Rosbag |
| outdoor_6 | 2021-12 | 5.38 GB | --- | ****** | Rosbag |
| outdoor_round1 | 2021-12 | 11.27 GB | --- | ****** | Rosbag |
| outdoor_round2 | 2021-12 | 13.34 GB | --- | ****** | Rosbag |
| outdoor_round3 | 2021-12 | 37.26 GB | --- | ****** | Rosbag |

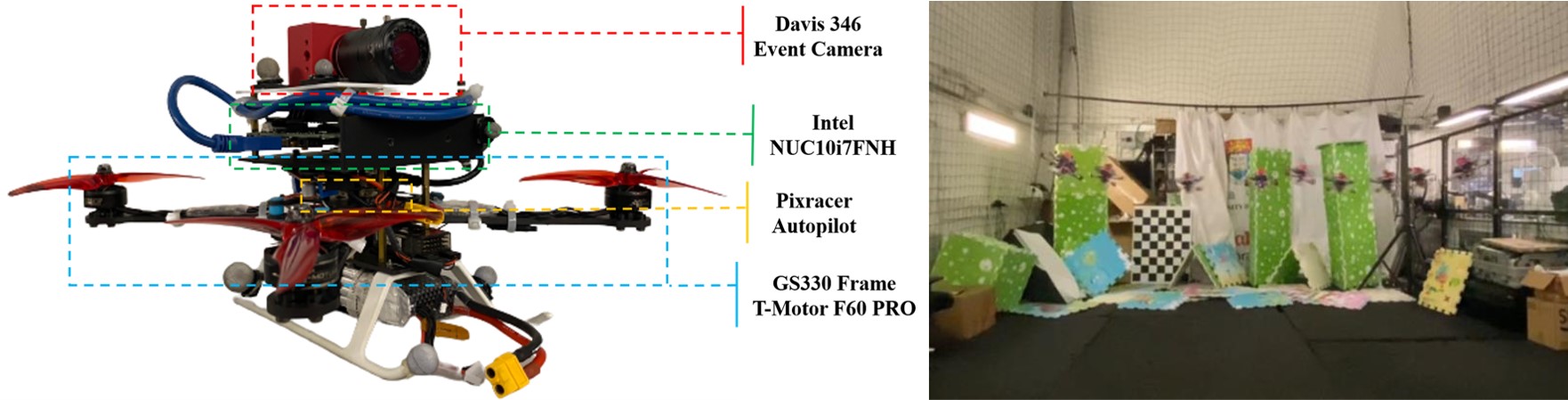

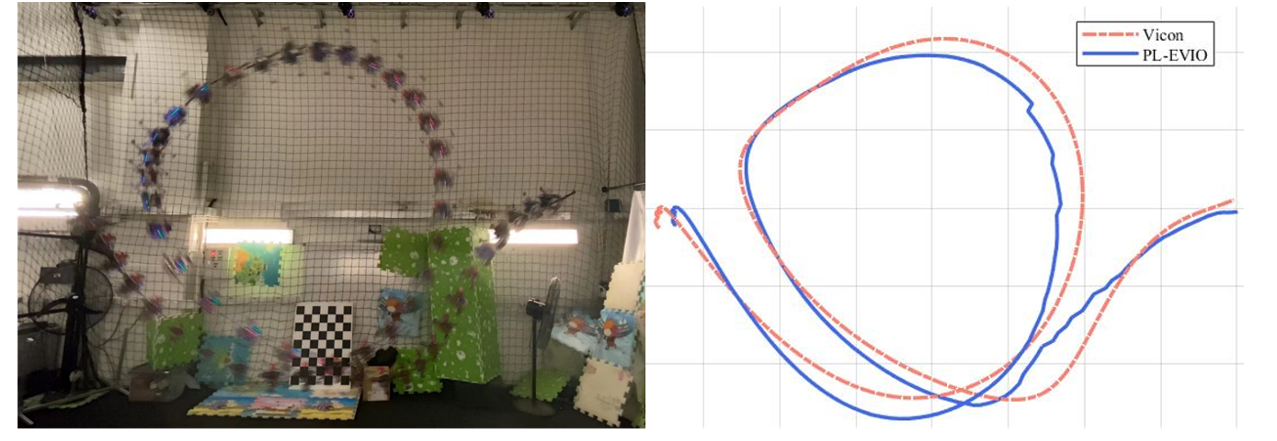

On a quadrotor platform (sample sequences from our PL-EVIO work):

We also provide sequences collected on a flying quadrotor platform using a DAVIS346.

- The configuration file is in link

| Sequence Name | Collection Date | Total Size | Duration | Features | Rosbag |
|---|---|---|---|---|---|
| Vicon_dvs_fix_eight | 2022-08 | 1.08 GB | --- | quadrotor flight | Rosbag |
| Vicon_dvs_varing_eight | 2022-08 | 1.48 GB | --- | quadrotor flight | Rosbag |
| outdoor_large_scale1 | 2022-08 | 9.38 GB | 16 min | ****** | Rosbag |
| outdoor_large_scale2 | 2022-08 | 9.34 GB | 16 min | ****** | Rosbag |

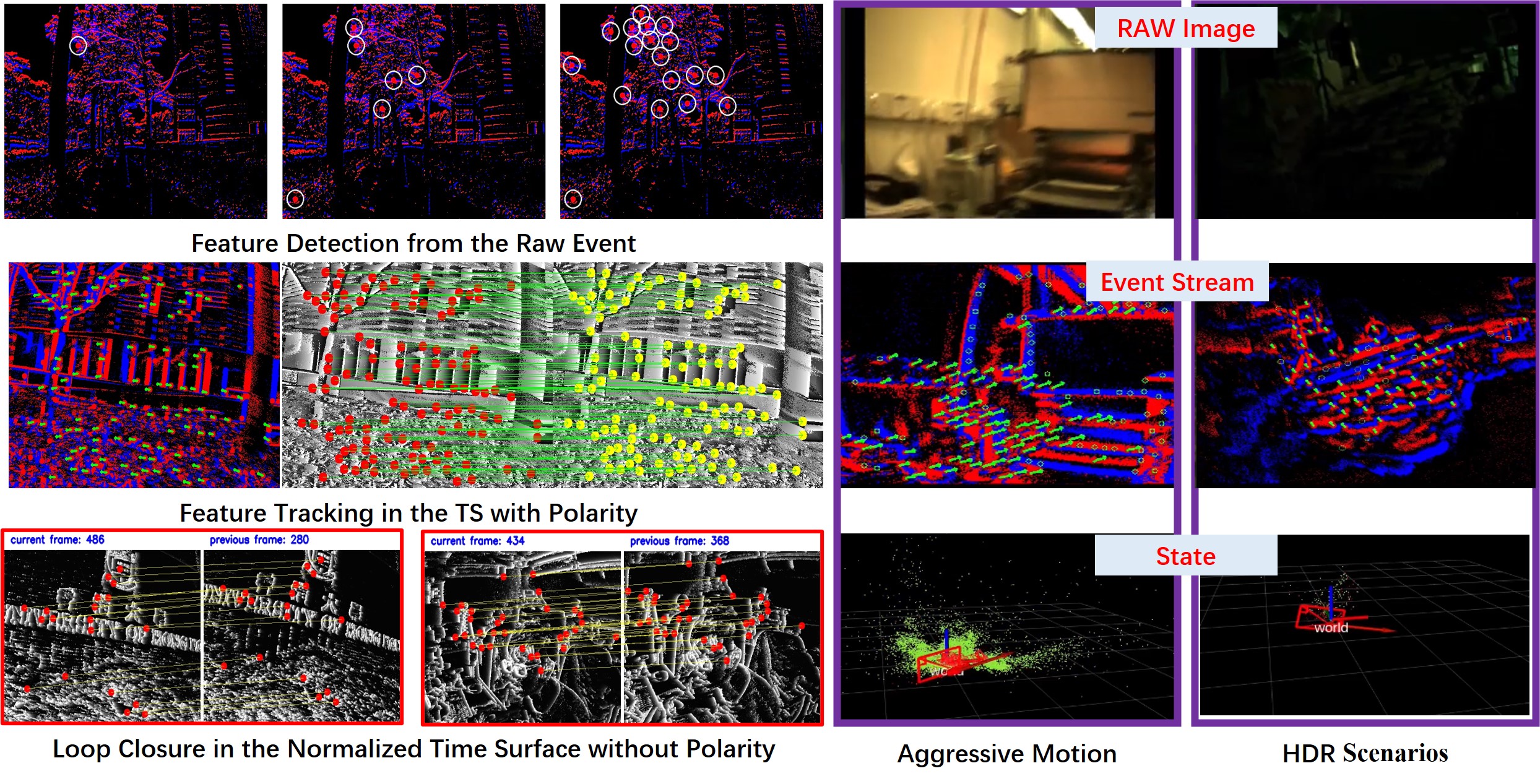

This work proposes a purely event-based visual-inertial odometry (VIO). Instead of relying on image-based corner detection, we design an asynchronous event-corner detector that produces uniformly distributed corners from event-only data. The event-corner feature tracker is then integrated into a sliding-window graph-based optimization framework that tightly fuses the event-corner features with IMU measurements to estimate the 6-DoF ego-motion.
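The detector above is designed to yield uniformly distributed event corners. A common, generic way to enforce spatial uniformity, shown here only for illustration and not necessarily the mechanism used in our detector, is grid bucketing: split the image into cells and keep only the strongest corner(s) per cell. The sketch assumes each candidate is an `(x, y, score)` tuple; the cell size, the per-cell limit, and the function name are hypothetical.

```python
def bucket_corners(corners, width, height, cell=32, max_per_cell=1):
    """Keep at most `max_per_cell` highest-score corners in each cell x cell grid cell.

    corners: iterable of (x, y, score) tuples.
    Returns the selected corners sorted by descending score.
    """
    cells = {}
    for x, y, score in corners:
        if not (0 <= x < width and 0 <= y < height):
            continue  # discard candidates outside the image
        key = (int(x) // cell, int(y) // cell)
        cells.setdefault(key, []).append((x, y, score))
    selected = []
    for candidates in cells.values():
        candidates.sort(key=lambda c: c[2], reverse=True)
        selected.extend(candidates[:max_per_cell])
    return sorted(selected, key=lambda c: c[2], reverse=True)


# Three candidates crowd the top-left cell; one lies in a different cell.
cands = [(5, 5, 0.9), (10, 12, 0.7), (20, 3, 0.95), (300, 200, 0.4)]
print(bucket_corners(cands, width=346, height=260))
# [(20, 3, 0.95), (300, 200, 0.4)]
```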

- The PDF can be downloaded here

- Results (raw trajectories)

- Code is available via an internal-access link

```bibtex
@inproceedings{GWPHKU:EVIO,
  title={Monocular Event Visual Inertial Odometry based on Event-corner using Sliding Windows Graph-based Optimization},
  author={Guan, Weipeng and Lu, Peng},
  booktitle={2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  pages={2438-2445},
  year={2022},
  organization={IEEE}
}
```

This work proposes an event-based VIO framework with point and line features in two variants: purely event-based (PL-EIO) and event+image (PL-EVIO). It is reliable and accurate enough to provide onboard pose feedback control for a quadrotor performing aggressive maneuvers, e.g., flips.

- The PDF can be downloaded here

- Results (raw trajectories)

- Code is available via an internal-access link

- An extended version of PL-EVIO, achieving highly accurate 6-DoF pose tracking and 3D semi-dense mapping (monocular event only), can be seen in Link

```bibtex
@article{PL-EVIO,
  title={PL-EVIO: Robust Monocular Event-based Visual Inertial Odometry with Point and Line Features},
  author={Guan, Weipeng and Chen, Peiyu and Xie, Yuhan and Lu, Peng},
  journal={arXiv preprint arXiv:2209.12160},
  year={2022}
}
```

This work proposes the first stereo event-based visual-inertial odometry framework, in two variants: ESIO (purely event-based) and ESVIO (image-aided). The stereo event-corner features are temporally and spatially associated through an event-based representation with a spatio-temporal, exponential-decay kernel. The stereo event trackers are then tightly coupled into a sliding-window graph-based optimization framework to estimate the ego-motion.
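The exponential-decay representation mentioned above follows the general idea of a time surface: each pixel stores the timestamp t_last of its most recent event, and its value at query time t is exp(-(t - t_last) / τ), so recently active pixels are close to 1 and stale pixels fade toward 0. The sketch below shows only this generic decay kernel for a single sensor, under our own assumptions (pure Python, polarity ignored, a hypothetical τ of 30 ms); it does not reproduce the exact spatio-temporal kernel or the stereo association used in ESVIO.

```python
import math


class TimeSurface:
    """Per-pixel latest-event timestamps with an exponential-decay readout."""

    def __init__(self, width, height, tau=0.03):
        self.tau = tau  # decay constant in seconds (hypothetical value)
        # None marks pixels that have not fired yet.
        self.last_t = [[None] * width for _ in range(height)]

    def update(self, x, y, t):
        """Record an event at pixel (x, y) with timestamp t (seconds)."""
        self.last_t[y][x] = t

    def value(self, x, y, t):
        """exp(-(t - t_last) / tau); 0.0 if the pixel never fired or t precedes t_last."""
        t_last = self.last_t[y][x]
        if t_last is None or t < t_last:
            return 0.0
        return math.exp(-(t - t_last) / self.tau)


ts = TimeSurface(346, 260, tau=0.03)
ts.update(10, 20, t=1.00)
ts.update(11, 20, t=1.09)
print(ts.value(10, 20, t=1.10))  # older event, strongly decayed: exp(-0.10 / 0.03)
print(ts.value(11, 20, t=1.10))  # recent event, close to 1: exp(-0.01 / 0.03)
```

A smaller τ emphasizes only the most recent edges, which helps under fast motion; a larger τ keeps more history but blurs edges.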

- The PDF can be downloaded here

- Results (raw trajectories)

- Code is available via an internal-access link

```bibtex
@article{ESVIO,
  title={ESVIO: Event-based Stereo Visual Inertial Odometry},
  author={Chen, Peiyu and Guan, Weipeng and Lu, Peng},
  journal={arXiv preprint arXiv:2212.13184},
  year={2022}
}
```

❗ We strongly encourage peers to evaluate their methods on our dataset and to compare against the raw results of our methods using their own accuracy criteria. ❗

The raw results/trajectories of our methods can be obtained in 👉 here.
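When comparing against the raw trajectories with your own accuracy criterion, a common choice is the absolute trajectory error (ATE) RMSE on positions. The minimal sketch below assumes both trajectories are given as TUM-style lines `timestamp tx ty tz qx qy qz qw` already expressed in the same frame; please check the actual format of the released files, as this is an assumption. It associates poses by nearest timestamp within a tolerance and deliberately skips the SE(3)/Sim(3) alignment that standard evaluation tools such as evo perform, so treat it as an illustration rather than a replacement for those tools. The function names and the 20 ms tolerance are hypothetical.

```python
import bisect
import math


def parse_tum(lines):
    """Parse 'timestamp tx ty tz qx qy qz qw' lines into sorted (t, (x, y, z)) pairs."""
    poses = []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        vals = [float(v) for v in line.split()]
        poses.append((vals[0], (vals[1], vals[2], vals[3])))
    poses.sort()
    return poses


def ate_rmse(estimate, groundtruth, max_dt=0.02):
    """Position RMSE against the nearest-in-time ground truth (no alignment)."""
    gt_times = [t for t, _ in groundtruth]
    sq_errors = []
    for t, p in estimate:
        i = bisect.bisect_left(gt_times, t)
        # Candidates: the ground-truth poses just before and just after t.
        best = min((j for j in (i - 1, i) if 0 <= j < len(gt_times)),
                   key=lambda j: abs(gt_times[j] - t), default=None)
        if best is None or abs(gt_times[best] - t) > max_dt:
            continue  # no ground-truth pose close enough in time
        q = groundtruth[best][1]
        sq_errors.append(sum((a - b) ** 2 for a, b in zip(p, q)))
    if not sq_errors:
        raise ValueError("no associated pose pairs")
    return math.sqrt(sum(sq_errors) / len(sq_errors))


gt = parse_tum(["0.00 0 0 0 0 0 0 1", "0.02 1 0 0 0 0 0 1"])
est = parse_tum(["0.001 0 0 0.1 0 0 0 1", "0.021 1 0 -0.1 0 0 0 1"])
print(round(ate_rmse(est, gt), 3))  # 0.1
```

Because the ground truth in our VICON sequences covers only the beginning and end of each sequence, estimated poses in the middle simply find no match within `max_dt` and are skipped.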

- Survey on Event-based 3D Reconstruction

- Event Camera Calibration using dv-gui

- Event Camera Calibration using Kalibr and imu_utils

- Event Camera Simulation in Gazebo

- The survey I wrote when I first encountered "Event Camera" can be seen in Blog

- Event-based Vision Resources

- The course: Event-based Robot Vision, by Prof. Guillermo Gallego

- Useful tools:

This repository is licensed under the MIT License and is provided for academic purposes. If you are interested in our project for commercial purposes, please contact Dr. Peng LU for further communication.