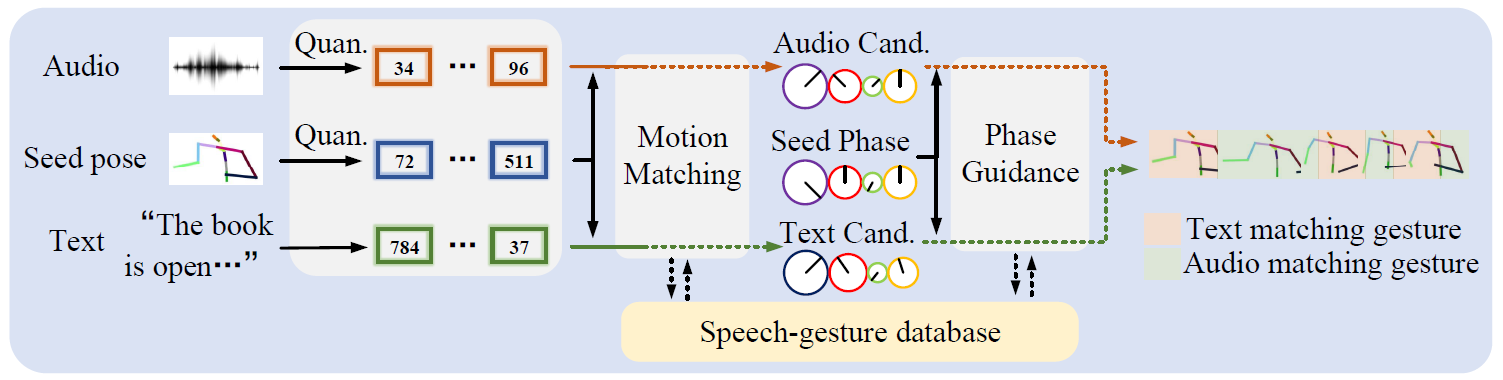

QPGesture: Quantization-Based and Phase-Guided Motion Matching for Natural Speech-Driven Gesture Generation

📢 DiffuseStyleGesture/DiffuseStyleGesture+: full-body gesture generation based on diffusion models.

📢 UnifiedGesture: trains on multiple gesture datasets and refines the gestures.

This code was tested on an NVIDIA GeForce RTX 2080 Ti and requires conda or miniconda.

conda create -n QPGesture python=3.7

conda activate QPGesture

pip install torch==1.8.0+cu111 -f https://download.pytorch.org/whl/torch_stable.html

pip install -r requirements.txt

Download our processed database and pre-trained models from Tsinghua Cloud or Google Cloud and place them in the data folder and the pretrained_model folder in the project path.

cd ./codebook/Speech2GestureMatching/

bash GestureKNN.sh

The example audio clip is about 24 seconds long, and matching it takes about 5 minutes.

You will get the results in ./codebook/Speech2GestureMatching/output/result.npz
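The result is a NumPy .npz archive (a zip of .npy files). The array names inside result.npz are not documented here, so rather than guess them, the minimal sketch below lists every array with its shape and dtype by reading the .npy headers directly, following the published .npy file format. It uses only the standard library; describe_npz and read_npy_header are our own hypothetical helper names, not part of this repository.

```python
import ast
import struct
import zipfile


def read_npy_header(fp):
    """Parse the header of a .npy stream and return (shape, dtype_descr)."""
    if fp.read(6) != b"\x93NUMPY":
        raise ValueError("not a .npy file")
    major, _minor = fp.read(2)  # format version bytes
    if major == 1:
        (header_len,) = struct.unpack("<H", fp.read(2))
    else:  # format versions 2.0 and 3.0 use a 4-byte header length
        (header_len,) = struct.unpack("<I", fp.read(4))
    # The header is a Python dict literal: descr, fortran_order, shape.
    header = ast.literal_eval(fp.read(header_len).decode("latin1"))
    return header["shape"], header["descr"]


def describe_npz(path):
    """Map each array name in an .npz archive to its (shape, dtype)."""
    info = {}
    with zipfile.ZipFile(path) as archive:
        for member in archive.namelist():
            with archive.open(member) as fp:  # also handles compressed archives
                name = member[:-4] if member.endswith(".npy") else member
                info[name] = read_npy_header(fp)
    return info
```

For example, `describe_npz("./codebook/Speech2GestureMatching/output/result.npz")` returns a dict keyed by array name; the same helper works for the wavvq_240.npz files built later. If NumPy is available, `np.load(path).files` lists the same names.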

cd ..

python VisualizeCodebook.py --config=./configs/codebook.yml --gpu 0 --code_path "./Speech2GestureMatching/output/result.npz" --VQVAE_model_path "../pretrained_model/codebook_checkpoint_best.bin" --stage inference

Then you will get .bvh, .mp4 and other intermediate files in ./codebook/Speech2GestureMatching/output/knn_pred_wavvq/


You can use Blender to visualize the .bvh file.
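Before opening a generated .bvh in Blender, a quick header check can confirm the clip has the expected length. A BVH file lists its skeleton (with one CHANNELS line per joint) and then a MOTION section starting with `Frames:` and `Frame Time:` lines. The sketch below, using a hypothetical helper name, reads just those lines; it does not parse joint offsets or per-frame values.

```python
def bvh_summary(text):
    """Return (num_channels, num_frames, frame_time) from BVH file contents."""
    num_channels = 0
    num_frames = None
    frame_time = None
    for line in text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        if tokens[0] == "CHANNELS":
            # e.g. "CHANNELS 3 Zrotation Xrotation Yrotation"
            num_channels += int(tokens[1])
        elif tokens[0] == "Frames:":
            num_frames = int(tokens[1])
        elif tokens[0] == "Frame" and len(tokens) >= 3 and tokens[1] == "Time:":
            frame_time = float(tokens[2])
            break  # everything after this line is per-frame motion data
    if num_frames is None or frame_time is None:
        raise ValueError("missing MOTION header (Frames / Frame Time)")
    return num_channels, num_frames, frame_time
```

The clip duration in seconds is `num_frames * frame_time`, and each motion line should contain `num_channels` values.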


We also provide a processed database for speaker ID 1, which can be downloaded from Tsinghua Cloud and Baidu Cloud. Using it is optional, but we recommend trying speaker 1: its database is larger and performs better.

To run on your own audio, first build the test set. We use ./data/Example3/4.wav as an example. Note that no text is used here.
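If you substitute your own recording for 4.wav, it can help to check its sample rate, channel count and length first. This sketch uses only Python's built-in wave module, which reads PCM WAV files; wav_info is a hypothetical helper name, and we do not assume any particular sample rate required by make_test_data.py.

```python
import wave


def wav_info(path):
    """Return (sample_rate, num_channels, duration_seconds) of a PCM WAV file."""
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
        return rate, wav.getnchannels(), wav.getnframes() / rate
```

For example, `wav_info("./data/Example3/4.wav")` reports the clip's length in seconds, which gives a rough idea of how long matching will take.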

Download vq-wav2vec Gumbel from fairseq and put it in ./process/.

Modify the fairseq code installed in your conda or miniconda environment according to this issue.

Then run:

cd ./process/

python make_test_data.py --audio_path "../data/Example3/4.wav" --save_path "../data/Example3/4"

You will get ./data/Example3/4/wavvq_240.npz

Then, as in the previous step, run:

cd ../codebook/Speech2GestureMatching/

bash GestureKNN.sh "../../data/Example3/4/wavvq_240.npz" 0 "./output/result_Example3.npz"
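GestureKNN.sh performs the speech-to-gesture matching. The sketch below is not the repository's implementation; it only illustrates the general idea of nearest-neighbour matching on quantized audio, using Levenshtein (edit) distance between discrete code sequences as the similarity measure. The (audio_codes, gesture_codes) database layout and the names levenshtein and k_nearest are hypothetical, and the phase guidance used by QPGesture is omitted.

```python
def levenshtein(a, b):
    """Edit distance between two sequences of discrete codes."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,              # deletion
                            curr[j - 1] + 1,          # insertion
                            prev[j - 1] + (x != y)))  # substitution
        prev = curr
    return prev[-1]


def k_nearest(query, database, k=1):
    """Return the k entries whose audio codes are closest to `query`.

    `database` is a list of (audio_codes, gesture_codes) pairs
    (a hypothetical layout chosen for this illustration).
    """
    ranked = sorted(database, key=lambda item: levenshtein(query, item[0]))
    return ranked[:k]
```

Because the distance is computed between every query window and every database entry, the cost grows with database size, which is consistent with matching taking minutes rather than seconds.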

Install gentle, as in Trimodal, to align the text and audio (this takes a few minutes):

cd ./process/

git clone https://github.com/lowerquality/gentle.git

cd gentle

./install.sh

You can verify whether gentle is installed successfully with the following command:

python align.py './examples/data/lucier.mp3' './examples/data/lucier.txt'
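gentle reports its alignment as JSON. The sketch below pulls out word-level timings from that JSON; the field names (`words`, `word`, `case`, `start`, `end`) follow gentle's output format as we understand it, so check them against your installed version. word_timings is a hypothetical helper, and how make_beat_dataset.py consumes the alignment is not shown here.

```python
import json


def word_timings(gentle_json):
    """Return (word, start_sec, end_sec) for every word gentle aligned successfully."""
    result = json.loads(gentle_json)
    return [
        (w["word"], w["start"], w["end"])
        for w in result.get("words", [])
        if w.get("case") == "success"  # unaligned words carry no timestamps
    ]
```

Words gentle could not place in the audio are skipped, since they have no start or end time.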

Download WavLM Large and put it in ./pretrained_model/.

Download the data of the speaker you want to build from BEAT. You can put it in ./dataset/orig_BEAT/ or anywhere else.

Here is an example for speaker ID 10:

python make_beat_dataset.py --BEAT_path "../dataset/orig_BEAT/speakers/" --save_dir "../dataset/BEAT" --prefix "speaker_10_state_0" --step 1

cd ../codebook/Speech2GestureMatching/

python normalize_audio.py

python mfcc.py

cd ../../process/

python make_beat_dataset.py --BEAT_path "../dataset/orig_BEAT/speakers/" --save_dir "../dataset/BEAT" --prefix "speaker_10_state_0" --step 2

This gives a basic database. Next, compute the phase, WavLM and wavvq features:

cd ../codebook/

python PAE.py --config=./configs/codebook.yml --gpu 0 --stage inference

cd ../process/

python make_beat_dataset.py --config "../codebook/configs/codebook.yml" --BEAT_path "../dataset/orig_BEAT/speakers/" --save_dir "../dataset/BEAT" --prefix "speaker_10_state_0" --gpu 0 --step 3

python make_beat_dataset.py --config "../codebook/configs/codebook.yml" --BEAT_path "../dataset/orig_BEAT/speakers/" --save_dir "../dataset/BEAT" --prefix "speaker_10_state_0" --gpu 0 --step 4

You now have all the databases used in Quick Start.

This is just an example for speaker ID 10; in practice, we use all speakers to train these models.

pip install numpy==1.19.5  # We ran into numpy version conflicts (with pyarrow), so we pin this version.

python beat_data_to_lmdb.py --config=../codebook/configs/codebook.yml --gpu 0

Then you will get the data mean/std, which you can copy into ./codebook/configs/codebook.yml.
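beat_data_to_lmdb.py computes these statistics itself. The sketch below only illustrates what per-dimension mean/std over a set of feature frames means and how such values are typically used for z-score normalization. Assumptions: frames are plain lists of floats, the population standard deviation is used, and the eps guard is our own choice; the key names in codebook.yml are not shown here, and feature_mean_std and normalize are hypothetical helpers.

```python
import statistics


def feature_mean_std(frames):
    """Per-dimension mean and population standard deviation of feature frames."""
    columns = list(zip(*frames))  # one tuple per feature dimension
    mean = [float(statistics.mean(col)) for col in columns]
    std = [statistics.pstdev(col) for col in columns]
    return mean, std


def normalize(frame, mean, std, eps=1e-8):
    """Z-score one frame; eps guards against zero-variance dimensions."""
    return [(x - m) / (s + eps) for x, m, s in zip(frame, mean, std)]
```

Normalizing with the training-set statistics keeps all pose dimensions on a comparable scale, which is why the same mean/std must be used at training and inference time.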

cd ../codebook/

python train.py --config=./configs/codebook.yml --gpu 0

The gesture VQ-VAE will be saved in ./codebook/output/train_codebook/codebook_checkpoint_best.bin.

To further calculate the distance between codes, run:

python VisualizeCodebook.py --config=./configs/codebook.yml --gpu 0 --code_path "./Speech2GestureMatching/output/result.npz" --VQVAE_model_path "./output/train_codebook/codebook_checkpoint_best.bin" --stage train

Then you will get the absolute pose of each code in ./codebook/output/code.npz, which is used in Quick Start.
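VisualizeCodebook.py computes the code-to-code distances itself. As a hedged illustration of what such a distance table looks like, the sketch below uses plain Euclidean distance between flattened per-code poses; the actual metric and pose layout used by the repository are not specified in this section, and pairwise_distances is a hypothetical name.

```python
import math


def pairwise_distances(codes):
    """Symmetric matrix of Euclidean distances between code vectors."""
    n = len(codes)
    dist = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):  # fill the upper triangle, mirror to the lower
            d = math.sqrt(sum((a - b) ** 2 for a, b in zip(codes[i], codes[j])))
            dist[i][j] = dist[j][i] = d
    return dist
```

Precomputing this table once lets a matcher look up the distance between any two codes instead of recomputing it during search.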

To train the PAE, run:

python PAE.py --config=./configs/codebook.yml --gpu 0 --stage train

The PAE will be saved in ./codebook/output/train_PAE/PAE_checkpoint_best.bin

This work is highly inspired by Bailando, KNN and DeepPhase.

If you find this work useful, please consider citing our work with the following BibTeX:

@inproceedings{yang2023QPGesture,
  author = {Sicheng Yang and Zhiyong Wu and Minglei Li and Zhensong Zhang and Lei Hao and Weihong Bao and Haolin Zhuang},
  title = {QPGesture: Quantization-Based and Phase-Guided Motion Matching for Natural Speech-Driven Gesture Generation},
  booktitle = {{IEEE/CVF} Conference on Computer Vision and Pattern Recognition, {CVPR}},
  publisher = {{IEEE}},
  month = {June},
  year = {2023},
  pages = {2321-2330}
}

Please feel free to contact us at yangsc21@mails.tsinghua.edu.cn with any questions or concerns.