A Survey on Interpretable Cross-modal Reasoning

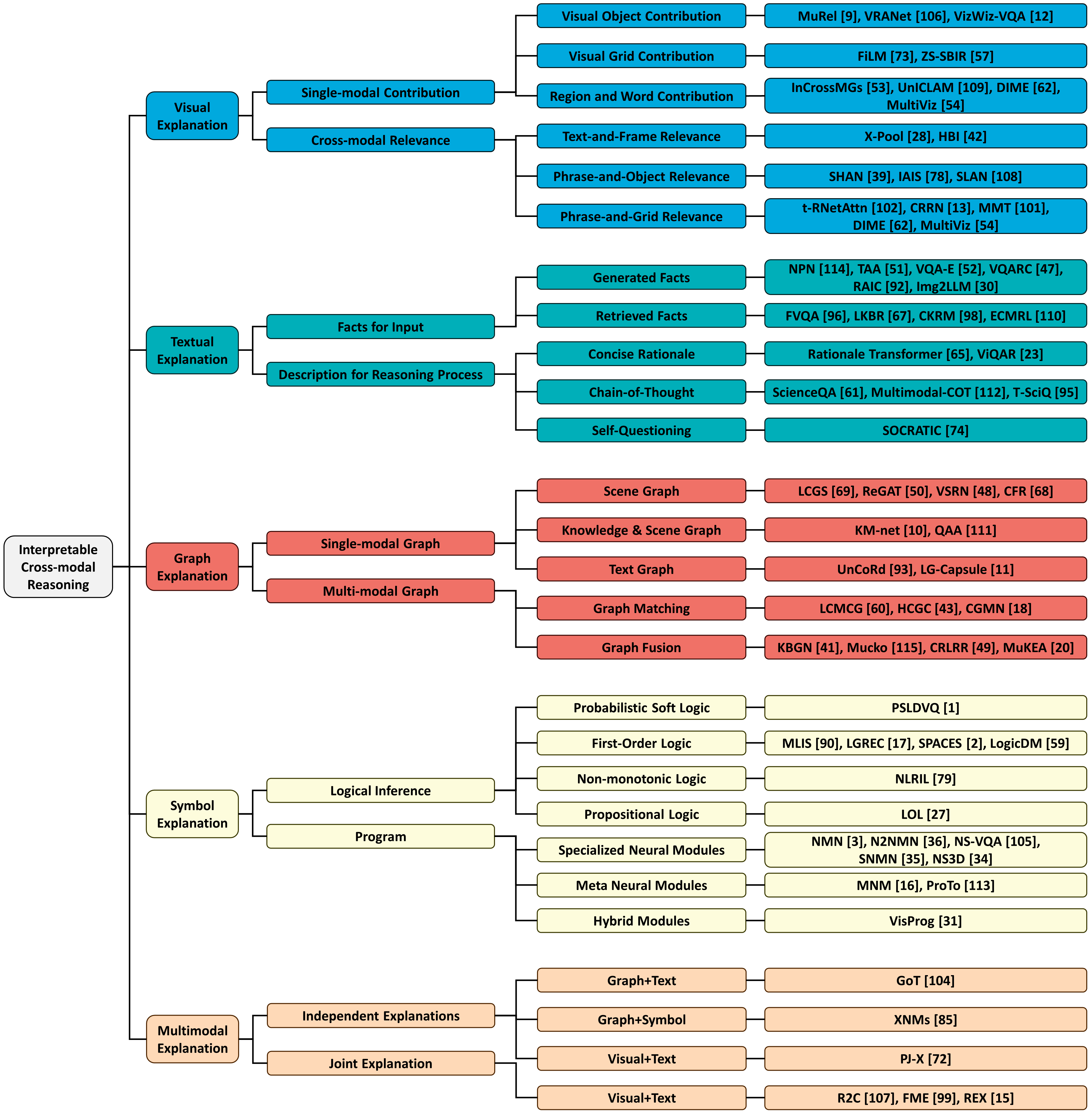

Dizhan Xue, Shengsheng Qian, Zuyi Zhou, and Changsheng Xu

Abstract: In recent years, cross-modal reasoning (CMR), the process of understanding and reasoning across different modalities, has emerged as a pivotal area with applications spanning from multimedia analysis to healthcare diagnostics. As the deployment of AI systems becomes more ubiquitous, the demand for transparency and comprehensibility in these systems’ decision-making processes has intensified. This survey delves into the realm of interpretable cross-modal reasoning (I-CMR), where the objective is not only to achieve high predictive performance but also to provide human-understandable explanations for the results. This survey presents a comprehensive overview of the typical methods with a three-level taxonomy for I-CMR. Furthermore, this survey reviews the existing CMR datasets with annotations for explanations. Finally, this survey summarizes the challenges for I-CMR and discusses potential future directions. In conclusion, this survey aims to catalyze the progress of this emerging research area by providing researchers with a panoramic and comprehensive perspective, illuminating the state of the art and discerning the opportunities.

If you find our paper or this repo helpful for your research, please cite it as below. Thanks!

    @article{xue2023survey,
      title={A Survey on Interpretable Cross-modal Reasoning},
      author={Xue, Dizhan and Qian, Shengsheng and Zhou, Zuyi and Xu, Changsheng},
      journal={arXiv preprint arXiv:2309.01955},
      year={2023}
    }

In this survey, we present a three-level taxonomy for interpretable cross-modal reasoning methods, as follows:

MUREL: Multimodal Relational Reasoning for Visual Question Answering [CVPR 2019]

Remi Cadene, Hedi Ben-Younes, Matthieu Cord, and Nicolas Thome.

[Paper] [Code]

Reasoning on the Relation: Enhancing Visual Representation for Visual Question Answering and Cross-Modal Retrieval [TMM 2022]

Jing Yu, Weifeng Zhang, Yuhang Lu, Zengchang Qin, Yue Hu, Jianlong Tan, and Qi Wu.

[Paper] [Code]

Grounding Answers for Visual Questions Asked by Visually Impaired People [CVPR 2022]

Chongyan Chen, Samreen Anjum, and Danna Gurari.

[Paper] [Code]

FiLM: Visual Reasoning with a General Conditioning Layer [AAAI 2018]

Ethan Perez, Florian Strub, Harm De Vries, Vincent Dumoulin, and Aaron Courville.

[Paper] [Code]

Zero-Shot Everything Sketch-Based Image Retrieval, and in Explainable Style [CVPR 2023]

Fengyin Lin, Mingkang Li, Da Li, Timothy Hospedales, Yi-Zhe Song, and Yonggang Qi.

[Paper] [Code]

Multi-Modal Sarcasm Detection with Interactive In-Modal and Cross-Modal Graphs [ACM MM 2021]

Bin Liang, Chenwei Lou, Xiang Li, Lin Gui, Min Yang, and Ruifeng Xu.

[Paper]

UnICLAM: Contrastive Representation Learning with Adversarial Masking for Unified and Interpretable Medical Vision Question Answering [arXiv 2022]

Chenlu Zhan, Peng Peng, Hongsen Wang, Tao Chen, and Hongwei Wang.

[Paper]

DIME: Fine-grained Interpretations of Multimodal Models via Disentangled Local Explanations [AIES 2022]

Yiwei Lyu, Paul Pu Liang, Zihao Deng, Ruslan Salakhutdinov, and Louis-Philippe Morency.

[Paper] [Code]

MultiViz: Towards Visualizing and Understanding Multimodal Models [ICLR 2023]

Paul Pu Liang, Yiwei Lyu, Gunjan Chhablani, Nihal Jain, Zihao Deng, Xingbo Wang, Louis-Philippe Morency, and Ruslan Salakhutdinov.

[Paper] [Code]

X-Pool: Cross-Modal Language-Video Attention For Text-Video Retrieval [CVPR 2022]

Satya Krishna Gorti, Noël Vouitsis, Junwei Ma, Keyvan Golestan, Maksims Volkovs, Animesh Garg, and Guangwei Yu.

[Paper] [Code]

Video-Text as Game Players: Hierarchical Banzhaf Interaction for Cross-Modal Representation Learning [CVPR 2023]

Peng Jin, Jinfa Huang, Pengfei Xiong, Shangxuan Tian, Chang Liu, Xiangyang Ji, Li Yuan, and Jie Chen.

[Paper] [Code]

Step-Wise Hierarchical Alignment Network for Image-Text Matching [IJCAI 2021]

Zhong Ji, Kexin Chen, and Haoran Wang.

[Paper]

Learning Relation Alignment for Calibrated Cross-modal Retrieval [ACL-IJCNLP 2021]

Shuhuai Ren, Junyang Lin, Guangxiang Zhao, Rui Men, An Yang, Jingren Zhou, Xu Sun, and Hongxia Yang.

[Paper] [Code]

SLAN: Self-Locator Aided Network for Cross-Modal Understanding [arXiv 2022]

Jiang-Tian Zhai, Qi Zhang, Tong Wu, Xing-Yu Chen, Jiang-Jiang Liu, Bo Ren, and Ming-Ming Cheng.

[Paper]

Robust and Interpretable Grounding of Spatial References with Relation Networks [EMNLP 2020]

Tsung-Yen Yang, Andrew Lan, and Karthik Narasimhan.

[Paper] [Code]

Cross-modal Relational Reasoning Network for Visual Question Answering [ICCV 2021]

Hongyu Chen, Ruifang Liu, and Bo Peng.

[Paper]

MMT: Image-guided Story Ending Generation with Multimodal Memory Transformer [ACM MM 2023]

Dizhan Xue, Shengsheng Qian, Quan Fang, and Changsheng Xu.

[Paper] [Code]

More Than An Answer: Neural Pivot Network for Visual Qestion Answering [ACM MM 2017]

Yiyi Zhou, Rongrong Ji, Jinsong Su, Yongjian Wu, and Yunsheng Wu.

[Paper]

Tell-and-Answer: Towards Explainable Visual Question Answering using Attributes and Captions [EMNLP 2018]

Qing Li, Jianlong Fu, Dongfei Yu, Tao Mei, and Jiebo Luo.

[Paper]

VQA-E: Explaining, Elaborating, and Enhancing Your Answers for Visual Questions [ECCV 2018]

Qing Li, Qingyi Tao, Shafiq Joty, Jianfei Cai, and Jiebo Luo.

[Paper]

Visual Question Answering as Reading Comprehension [CVPR 2019]

Hui Li, Peng Wang, Chunhua Shen, and Anton van den Hengel.

[Paper]

Relation-Aware Image Captioning for Explainable Visual Question Answering [TAAI 2022]

Ching-Shan Tseng, Ying-Jia Lin, and Hung-Yu Kao.

[Paper]

From Images to Textual Prompts: Zero-shot Visual Question Answering with Frozen Large Language Models [CVPR 2023]

Jiaxian Guo, Junnan Li, Dongxu Li, Anthony Meng Huat Tiong, Boyang Li, Dacheng Tao, and Steven Hoi.

[Paper] [Code]

FVQA: Fact-based Visual Question Answering [T-PAMI 2017]

Peng Wang, Qi Wu, Chunhua Shen, Anthony Dick, and Anton Van Den Hengel.

[Paper]

Straight to the Facts: Learning Knowledge Base Retrieval for Factual Visual Question Answering [ECCV 2018]

Medhini Narasimhan and Alexander G Schwing.

[Paper]

Multi-Level Knowledge Injecting for Visual Commonsense Reasoning [TCSVT 2020]

Zhang Wen and Yuxin Peng.

[Paper]

Explicit Cross-Modal Representation Learning For Visual Commonsense Reasoning [TMM 2022]

Xi Zhang, Feifei Zhang, and Changsheng Xu.

[Paper]

Natural Language Rationales with Full-Stack Visual Reasoning: From Pixels to Semantic Frames to Commonsense Graphs [EMNLP 2020]

Ana Marasović, Chandra Bhagavatula, Jae Sung Park, Ronan Le Bras, Noah A Smith, and Yejin Choi.

[Paper] [Code]

Beyond VQA: Generating Multi-word Answers and Rationales to Visual Questions [CVPR 2021]

Radhika Dua, Sai Srinivas Kancheti, and Vineeth N Balasubramanian.

[Paper]

Learn to Explain: Multimodal Reasoning via Thought Chains for Science Question Answering [NeurIPS 2022]

Pan Lu, Swaroop Mishra, Tanglin Xia, Liang Qiu, Kai-Wei Chang, Song-Chun Zhu, Oyvind Tafjord, Peter Clark, and Ashwin Kalyan.

[Paper] [Code]

Multimodal Chain-of-Thought Reasoning in Language Models [arXiv 2023]

Zhuosheng Zhang, Aston Zhang, Mu Li, Hai Zhao, George Karypis, and Alex Smola.

[Paper] [Code]

T-SciQ: Teaching Multimodal Chain-of-Thought Reasoning via Large Language Model Signals for Science Question Answering [arXiv 2023]

Lei Wang, Yi Hu, Jiabang He, Xing Xu, Ning Liu, Hui Liu, and Heng Tao Shen.

[Paper]

The Art of SOCRATIC QUESTIONING: Zero-shot Multimodal Reasoning with Recursive Thinking and Self-Questioning [arXiv 2023]

Jingyuan Qi, Zhiyang Xu, Ying Shen, Minqian Liu, Di Jin, Qifan Wang, and Lifu Huang.

[Paper]

Learning Conditioned Graph Structures for Interpretable Visual Question Answering [NeurIPS 2018]

Will Norcliffe-Brown, Stathis Vafeias, and Sarah Parisot.

[Paper] [Code]

Relation-Aware Graph Attention Network for Visual Question Answering [ICCV 2019]

Linjie Li, Zhe Gan, Yu Cheng, and Jingjing Liu.

[Paper] [Code]

Visual Semantic Reasoning for Image-Text Matching [ICCV 2019]

Kunpeng Li, Yulun Zhang, Kai Li, Yuanyuan Li, and Yun Fu.

[Paper] [Code]

Coarse-to-Fine Reasoning for Visual Question Answering [CVPR 2023]

Binh X Nguyen, Tuong Do, Huy Tran, Erman Tjiputra, Quang D Tran, and Anh Nguyen.

[Paper] [Code]

Explainable High-Order Visual Question Reasoning: A New Benchmark and Knowledge-routed Network [arXiv 2019]

Qingxing Cao, Bailin Li, Xiaodan Liang, and Liang Lin.

[Paper]

Query and Attention Augmentation for Knowledge-Based Explainable Reasoning [CVPR 2022]

Yifeng Zhang, Ming Jiang, and Qi Zhao.

[Paper] [Code]

VQA with No Questions-Answers Training [CVPR 2020]

Ben-Zion Vatashsky and Shimon Ullman.

[Paper] [Code]

Linguistically Driven Graph Capsule Network for Visual Question Reasoning [arXiv 2020]

Qingxing Cao, Xiaodan Liang, Keze Wang, and Liang Lin.

[Paper]

Learning Cross-Modal Context Graph for Visual Grounding [AAAI 2020]

Yongfei Liu, Bo Wan, Xiaodan Zhu, and Xuming He.

[Paper] [Code]

Hierarchical Cross-Modal Graph Consistency Learning for Video-Text Retrieval [SIGIR 2021]

Weike Jin, Zhou Zhao, Pengcheng Zhang, Jieming Zhu, Xiuqiang He, and Yueting Zhuang.

[Paper]

Cross-modal Graph Matching Network for Image-Text Retrieval [TOMM 2022]

Yuhao Cheng, Xiaoguang Zhu, Jiuchao Qian, Fei Wen, and Peilin Liu.

[Paper] [Code]

KBGN: Knowledge-Bridge Graph Network for Adaptive Vision-Text Reasoning in Visual Dialogue [ACM MM 2020]

Xiaoze Jiang, Siyi Du, Zengchang Qin, Yajing Sun, and Jing Yu.

[Paper]

Mucko: Multi-Layer Cross-Modal Knowledge Reasoning for Fact-Based Visual Question Answering [IJCAI 2021]

Zihao Zhu, Jing Yu, Yujing Wang, Yajing Sun, Yue Hu, and Qi Wu.

[Paper]

Cross-modal Representation Learning and Relation Reasoning for Bidirectional Adaptive Manipulation [IJCAI 2022]

Lei Li, Kai Fan, and Chun Yuan.

[Paper]

MuKEA: Multimodal Knowledge Extraction And Accumulation For Knowledge-based Visual Question Answering [CVPR 2022]

Yang Ding, Jing Yu, Bang Liu, Yue Hu, Mingxin Cui, and Qi Wu.

[Paper] [Code]

Explicit Reasoning over End-to-End Neural Architectures for Visual Question Answering [AAAI 2018]

Somak Aditya, Yezhou Yang, and Chitta Baral.

[Paper]

Multimodal Logical Inference System for Visual-Textual Entailment [ACL SRW 2019]

Riko Suzuki, Hitomi Yanaka, Masashi Yoshikawa, Koji Mineshima, and Daisuke Bekki.

[Paper]

Exploring Logical Reasoning for Referring Expression Comprehension [ACM MM 2021]

Ying Cheng, Ruize Wang, Jiashuo Yu, Rui-Wei Zhao, Yuejie Zhang, and Rui Feng.

[Paper]

SPACES: Explainable Multimodal AI for Active Surveillance, Diagnosis, and Management of Adverse Childhood Experiences (ACEs) [Big Data 2021]

Nariman Ammar, Parya Zareie, Marion E Hare, Lisa Rogers, Sandra Madubuonwu, Jason Yaun, and Arash Shaban-Nejad.

[Paper]

Interpretable Multimodal Misinformation Detection with Logic Reasoning [arXiv 2023]

Hui Liu, Wenya Wang, and Haoliang Li.

[Paper] [Code]

Integrating Non-monotonic Logical Reasoning and Inductive Learning with Deep Learning for Explainable Visual Question Answering [FRAI 6 2019]

Heather Riley and Mohan Sridharan.

[Paper] [Code]

VQA-LOL: Visual Question Answering Under the Lens of Logic [ECCV 2020]

Tejas Gokhale, Pratyay Banerjee, Chitta Baral, and Yezhou Yang.

[Paper]

Neural Module Networks [CVPR 2016]

Jacob Andreas, Marcus Rohrbach, Trevor Darrell, and Dan Klein.

[Paper]

Learning to Reason: End-to-End Module Networks for Visual Question Answering [ICCV 2017]

Ronghang Hu, Jacob Andreas, Marcus Rohrbach, Trevor Darrell, and Kate Saenko.

[Paper]

Neural-Symbolic VQA: Disentangling Reasoning from Vision and Language Understanding [NeurIPS 2018]

Kexin Yi, Jiajun Wu, Chuang Gan, Antonio Torralba, Pushmeet Kohli, and Josh Tenenbaum.

[Paper] [Code]

Explainable Neural Computation via Stack Neural Module Networks [ECCV 2018]

Ronghang Hu, Jacob Andreas, Trevor Darrell, and Kate Saenko.

[Paper]

NS3D: Neuro-Symbolic Grounding of 3D Objects and Relations [CVPR 2023]

Joy Hsu, Jiayuan Mao, and Jiajun Wu.

[Paper] [Code]

Meta Module Network for Compositional Visual Reasoning [WACV 2021]

Wenhu Chen, Zhe Gan, Linjie Li, Yu Cheng, William Wang, and Jingjing Liu.

[Paper] [Code]

ProTo: Program-Guided Transformer for Program-Guided Tasks [NeurIPS 2021]

Zelin Zhao, Karan Samel, Binghong Chen, et al.

[Paper] [Code]

Visual Programming: Compositional Visual Reasoning without Training [CVPR 2023]

Tanmay Gupta and Aniruddha Kembhavi.

[Paper] [Code]

Beyond Chain-of-Thought, Effective Graph-of-Thought Reasoning in Large Language Models [arXiv 2023]

Yao Yao, Zuchao Li, and Hai Zhao.

[Paper]

Explainable and Explicit Visual Reasoning over Scene Graphs [CVPR 2019]

Jiaxin Shi, Hanwang Zhang, and Juanzi Li.

[Paper] [Code]

Multimodal Explanations: Justifying Decisions and Pointing to the Evidence [CVPR 2018]

Dong Huk Park, Lisa Anne Hendricks, Zeynep Akata, Anna Rohrbach, Bernt Schiele, Trevor Darrell, and Marcus Rohrbach.

[Paper]

From Recognition to Cognition: Visual Commonsense Reasoning [CVPR 2019]

Rowan Zellers, Yonatan Bisk, Ali Farhadi, and Yejin Choi.

[Paper] [Code]

Faithful Multimodal Explanation for Visual Question Answering [arXiv 2019]

Jialin Wu and Raymond J. Mooney.

[Paper]

REX: Reasoning-Aware and Grounded Explanation [CVPR 2022]

Shi Chen and Qi Zhao.

[Paper] [Code]

Visual Genome: Connecting Language and Vision Using Crowdsourced Dense Image Annotations [IJCV 2017]

Ranjay Krishna, Yuke Zhu, Oliver Groth, Justin Johnson, Kenji Hata, Joshua Kravitz, Stephanie Chen, Yannis Kalantidis, Li-Jia Li, David A. Shamma, Michael S. Bernstein, and Fei-Fei Li.

[Paper] [Dataset] Visual Genome

GQA: A New Dataset for Real-World Visual Reasoning and Compositional Question Answering [CVPR 2019]

Drew A Hudson and Christopher D Manning.

[Paper] [Dataset] GQA

REX: Reasoning-Aware And Grounded Explanation [CVPR 2022]

Shi Chen and Qi Zhao.

[Paper] [Dataset] GQA-REX

FVQA: Fact-based Visual Question Answering [T-PAMI 2017]

Peng Wang, Qi Wu, Chunhua Shen, Anthony Dick, and Anton Van Den Hengel.

[Paper] [Dataset] FVQA

VQA-E: Explaining, Elaborating, and Enhancing Your Answers for Visual Questions [ECCV 2018]

Qing Li, Qingyi Tao, Shafiq Joty, Jianfei Cai, and Jiebo Luo.

[Paper] [Dataset] VQA-E

OK-VQA: A Visual Question Answering Benchmark Requiring External Knowledge [CVPR 2019]

Kenneth Marino, Mohammad Rastegari, Ali Farhadi, and Roozbeh Mottaghi.

[Paper] [Dataset] OK-VQA

KVQA: Knowledge-Aware Visual Question Answering [AAAI 2019]

Sanket Shah, Anand Mishra, Naganand Yadati, and Partha Pratim Talukdar.

[Paper] [Dataset] KVQA

From Recognition to Cognition: Visual Commonsense Reasoning [CVPR 2019]

Rowan Zellers, Yonatan Bisk, Ali Farhadi, and Yejin Choi.

[Paper] [Dataset] VCR

Modular Multitask Reinforcement Learning with Policy Sketches [PMLR 2017]

Jacob Andreas, Dan Klein, and Sergey Levine.

[Paper] [Dataset] 2D Minecraft

End-to-End Multimodal Fact-Checking And Explanation Generation: A Challenging Dataset and Models [SIGIR 2023]

Barry Menglong Yao, Aditya Shah, Lichao Sun, Jin-Hee Cho, and Lifu Huang.

[Paper] [Dataset] Mocheg

Multimodal Explanations: Justifying Decisions and Pointing to the Evidence [CVPR 2018]

Dong Huk Park, Lisa Anne Hendricks, Zeynep Akata, Anna Rohrbach, Bernt Schiele, Trevor Darrell, and Marcus Rohrbach.

[Paper] [Dataset] VQA-X, ACT-X

Learn to Explain: Multimodal Reasoning via Thought Chains for Science Question Answering [NeurIPS 2022]

Pan Lu, Swaroop Mishra, Tanglin Xia, Liang Qiu, Kai-Wei Chang, Song-Chun Zhu, Oyvind Tafjord, Peter Clark, and Ashwin Kalyan.

[Paper] [Dataset] ScienceQA

Decoding the Underlying Meaning of Multimodal Hateful Memes [arXiv 2023]

Ming Shan Hee, Wen-Haw Chong, and Roy Ka-Wei Lee.

[Paper] [Dataset] HatReD

WAX: A New Dataset for Word Association eXplanations [ACL-IJCNLP 2023]

Chunhua Liu, Trevor Cohn, Simon De Deyne, and Lea Frermann.

[Paper] [Dataset] WAX

Please contact us by e-mail:

xuedizhan17@mails.ucas.ac.cn, shengsheng.qian@nlpr.ia.ac.cn, zhouzuyi2023@ia.ac.cn