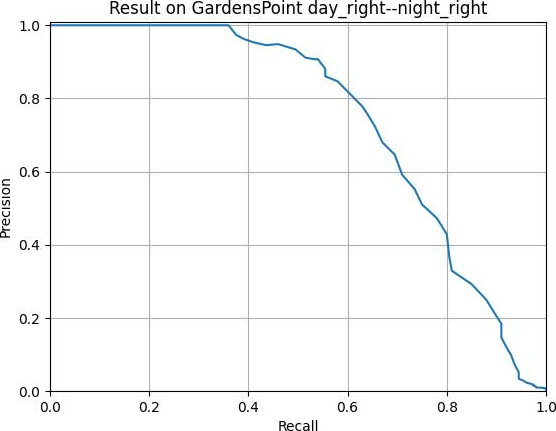

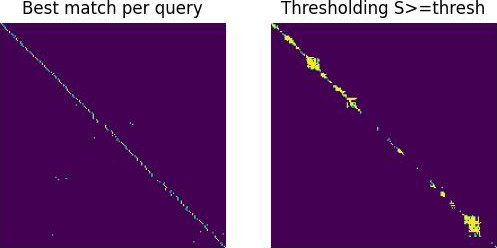

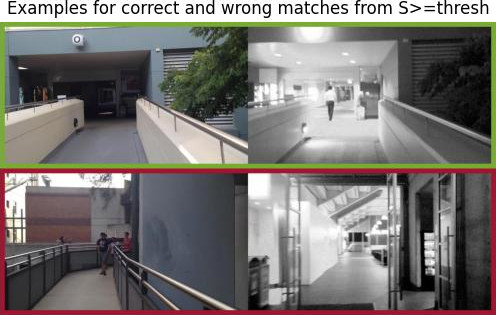

Work in progress: This repository provides the example code from our paper "Visual Place Recognition: A Tutorial". The code performs VPR on the GardensPoint day_right--night_right dataset. The output is a plotted precision-recall curve, the matching decisions, one example each of a true-positive and a false-positive match, and the AUC performance, as shown below.

If you use our work for your academic research, please refer to the following paper:

    @article{SchubertRAM2023ICRA2024,
      title={Visual Place Recognition: A Tutorial},
      author={Schubert, Stefan and Neubert, Peer and Garg, Sourav and Milford, Michael and Fischer, Tobias},
      journal={IEEE Robotics \& Automation Magazine},
      year={2023},
      doi={10.1109/MRA.2023.3310859}
    }

This repository is configured for use with GitHub Codespaces, a service that provides you with a fully-configured Visual Studio Code environment in the cloud, directly from your GitHub repository.

To open this repository in a Codespace:

- Click on the green "Code" button near the top-right corner of the repository page.

- In the dropdown, select "Open with Codespaces", and then click on "+ New codespace".

- Your Codespace will be created and will start automatically. This process may take a few minutes.

Once your Codespace is ready, it will open in a new browser tab. This is a full-featured version of VS Code running in your browser, and it has access to all the files in your repository and all the tools installed in your Docker container.

You can run commands in the terminal, edit files, debug code, commit changes, and do anything else you would normally do in VS Code. When you're done, you can close the browser tab, and your Codespace will automatically stop after a period of inactivity.

To run the demo, execute:

    python3 demo.py

The GardensPoint Walking dataset is downloaded automatically. You should get output similar to this:

    python3 demo.py
    ===== Load dataset
    ===== Load dataset GardensPoint day_right--night_right
    ===== Compute local DELF descriptors
    ===== Compute holistic HDC-DELF descriptors
    ===== Compute cosine similarities S
    ===== Match images
    ===== Evaluation
    ===== AUC (area under curve): 0.74
    ===== R@100P (maximum recall at 100% precision): 0.36
    ===== recall@K (R@K) -- R@1: 0.85 , R@5: 0.925 , R@10: 0.945
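
The similarity, matching, and recall@K steps named in the log above can be sketched as follows. This is a minimal illustration under stated assumptions, not the code from `demo.py`: the function names, the orientation of `S` (database images as rows, query images as columns), the single-best-match rule, and the random toy descriptors are all hypothetical.

```python
import numpy as np


def cosine_similarity_matrix(db_desc, query_desc):
    """Return S with S[i, j] = cosine similarity of database image i and query image j."""
    db = db_desc / np.linalg.norm(db_desc, axis=1, keepdims=True)
    q = query_desc / np.linalg.norm(query_desc, axis=1, keepdims=True)
    return db @ q.T


def best_match_per_query(S):
    """Boolean matrix M that marks, for each query (column), the most similar database image."""
    M = np.zeros(S.shape, dtype=bool)
    M[np.argmax(S, axis=0), np.arange(S.shape[1])] = True
    return M


def recall_at_k(S, GT, k):
    """Fraction of queries with a ground-truth match among their k most similar database images."""
    GT = GT.astype(bool)
    top_k = np.argsort(-S, axis=0)[:k]               # shape (k, n_queries)
    hits = np.take_along_axis(GT, top_k, axis=0).any(axis=0)
    has_gt = GT.any(axis=0)                          # ignore queries without any true match
    return hits[has_gt].mean()


# Toy data (assumption): each query is a slightly perturbed copy of one database descriptor.
rng = np.random.default_rng(0)
db_desc = rng.standard_normal((5, 64))
query_desc = db_desc + 0.01 * rng.standard_normal((5, 64))
GT = np.eye(5, dtype=bool)

S = cosine_similarity_matrix(db_desc, query_desc)
M = best_match_per_query(S)
print("R@1:", recall_at_k(S, GT, 1))
```

Normalizing the descriptors first reduces the cosine similarity of all image pairs to a single matrix product, which is convenient for small datasets; for large databases an approximate nearest-neighbor index would typically replace the dense product.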

[Figures: precision-recall curve | matchings M | examples for a true positive and a false positive]
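
A precision-recall curve and the two summary numbers reported in the log (AUC and R@100P) can be derived from a similarity matrix and a ground-truth matrix roughly as follows. This is a hedged sketch rather than the repository's evaluation code: the function names, the threshold sweep with `n_thresh` steps, and the convention of starting the curve at precision 1 and recall 0 are assumptions.

```python
import numpy as np


def precision_recall_curve(S, GT, n_thresh=100):
    """Sweep a similarity threshold from high to low and return (precision, recall) arrays."""
    GT = GT.astype(bool)
    n_pos = GT.sum()
    precision, recall = [1.0], [0.0]                 # conventional starting point of the curve
    for t in np.linspace(S.max(), S.min(), n_thresh):
        B = S >= t                                   # pairs accepted as matches at this threshold
        tp = np.count_nonzero(B & GT)
        fp = np.count_nonzero(B & ~GT)
        precision.append(tp / (tp + fp))             # tp + fp >= 1 because t <= S.max()
        recall.append(tp / n_pos)
    return np.array(precision), np.array(recall)


def area_under_curve(precision, recall):
    """Trapezoidal area under the precision-recall curve."""
    return float(np.sum(np.diff(recall) * (precision[1:] + precision[:-1]) / 2))


def recall_at_100_precision(precision, recall):
    """Maximum recall among the curve points whose precision is exactly 1."""
    return float(recall[precision == 1.0].max())


# Toy example (assumption): every correct pair scores higher than every incorrect pair.
S = np.eye(4) * 0.9 + 0.05
GT = np.eye(4, dtype=bool)
P, R = precision_recall_curve(S, GT)
print("AUC:", area_under_curve(P, R))
print("R@100P:", recall_at_100_precision(P, R))
```

Sweeping the threshold over all entries of `S` allows several matches per query; evaluating only the single best match per query instead would change the curve.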

The code was tested with the library versions listed in requirements.txt. Note that TensorFlow or PyTorch is only required if the corresponding image descriptor is used. If you use pip, install them with:

    pip install -r requirements.txt

Alternatively, you can create a conda environment containing these libraries as follows (or use the provided environment.yml):

    mamba create -n vprtutorial python numpy pytorch torchvision natsort tqdm opencv pillow scikit-learn faiss matplotlib-base tensorflow tensorflow-hub tqdm scikit-image patchnetvlad -c conda-forge

| Method | Code | Paper |
|---|---|---|
| AlexNet | code* | paper |
| AMOSNet | code | paper |
| DELG | code | paper |
| DenseVLAD | code | paper |
| HDC-DELF | code | paper |
| HybridNet | code | paper |
| NetVLAD | code | paper |
| CosPlace | code | paper |
| EigenPlaces | code | paper |
| D2-Net | code | paper |
| DELF | code | paper |
| LIFT | code | paper |
| Patch-NetVLAD | code | paper |
| R2D2 | code | paper |
| SuperPoint | code | paper |
| DBoW2 | code | paper |
| HDC (Hyperdimensional Computing) | code | paper |
| iBoW (Incremental Bag-of-Words) / OBIndex2 | code | paper |
| VLAD (Vector of Locally Aggregated Descriptors) | code* | paper |
| Delta Descriptors | code | paper |
| MCN | code | paper |
| OpenSeqSLAM | code* | paper |
| OpenSeqSLAM 2.0 | code | paper |
| OPR | code | paper |
| SeqConv | code | paper |
| SeqNet | code | paper |
| SeqVLAD | code | paper |
| VPR | code | paper |

| Method | Code | Paper | Notes |
|---|---|---|---|
| ICM | code | paper | Graph optimization of the similarity matrix S |
| SuperGlue | code | paper | Local descriptor matching |

*Third-party code, not provided by the authors. The code implements the authors' idea or can be used to implement it.