A Survey on Leveraging Pre-trained Generative Adversarial Networks for Image Editing and Restoration

Generative adversarial networks (GANs) have drawn enormous attention due to the simple yet effective training mechanism and superior image generation quality. With the ability to generate photo-realistic high-resolution (e.g.,

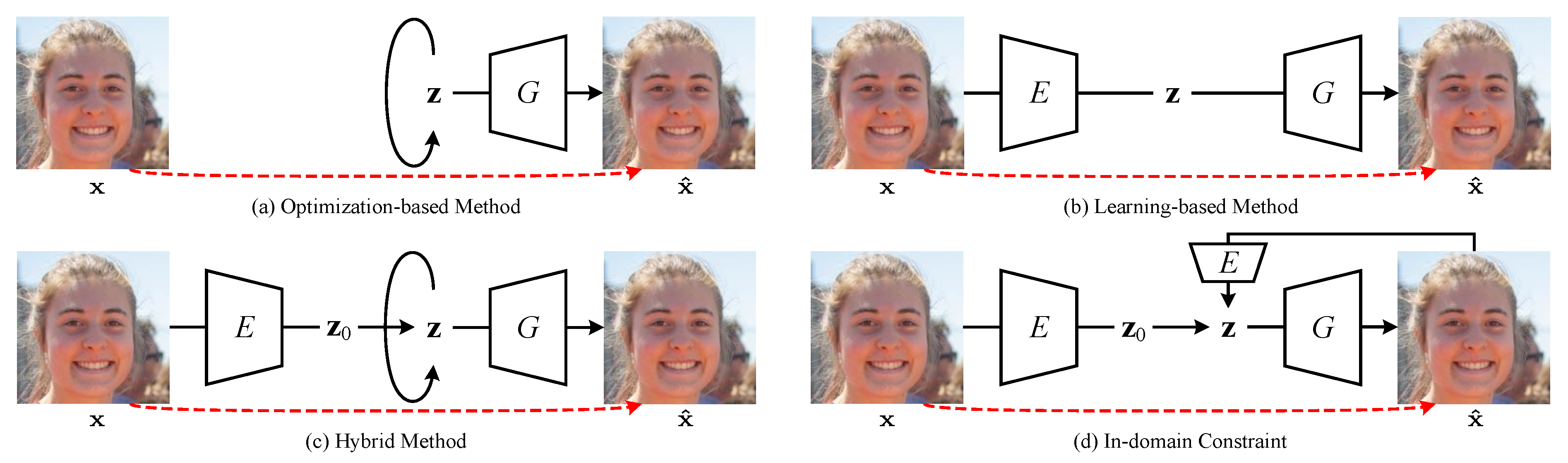

Figure 1. Illustration of GAN inversion methods.



Figure 2. A Summary of Relevant Papers

If you want to get the raw file, please refer to ProcessOn.com (passcode: 1qaz)

Figure 3. Illustration of recent GAN models (see (a)$\sim$(d)) and the latent spaces of StyleGAN series (see (e)).

Illustration

(a) For PGGAN, the blue part denotes the progressive growing procedure from

Table 1. A summary of GAN inversion and methods leveraging pre-trained GANs for image editing and restoration.

Illustration

In the Inversion Method column, "O", "L", and "T" denote optimization-based, learning-based, and training-based (or fine-tuning) methods, respectively; "/" means the method performs no inversion; and numbers (without square brackets) are the indices of the methods in this table that are used for inversion. Note that the methods are ordered roughly by the date they became publicly accessible (e.g., first appearance on ArXiv, openreview.net, or CVF Open Access).
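The legend above can be read mechanically. The following is a minimal sketch, not part of the survey's tooling, of how an Inversion Method cell might be decoded in Python. The function name `parse_inversion_cell` and the returned dictionary layout are hypothetical, and any token that is neither a letter code, "/", nor a row index is simply passed through under `other`.

```python
import re

# Letter codes as defined in the table legend.
METHOD_CODES = {
    "O": "optimization-based",
    "L": "learning-based",
    "T": "training-based (or fine-tuning)",
}


def parse_inversion_cell(cell):
    """Decode one 'Inversion Method' cell (hypothetical helper).

    Returns a dict with:
      methods   - names for the letter codes O / L / T
      uses_rows - indices of other table rows whose inversion is reused
      other     - tokens that match neither pattern, kept verbatim
    """
    result = {"methods": [], "uses_rows": [], "other": []}
    cell = cell.strip()
    if cell == "/":
        # "/" means no inversion is performed in this method.
        return result
    for token in (part.strip() for part in cell.split(",")):
        if not token:
            continue
        if token in METHOD_CODES:
            result["methods"].append(METHOD_CODES[token])
        elif re.fullmatch(r"\d+", token):
            result["uses_rows"].append(int(token))
        else:
            result["other"].append(token)
    return result


if __name__ == "__main__":
    for example in ["L, O", "47, O", "3, 8", "/"]:
        print(example, "->", parse_inversion_cell(example))
```

For instance, a cell reading `47, O` would be decoded as reusing the inversion of row 47 in addition to an optimization-based step.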

Abbreviations

Table Content

| No. | Method | Publication | Backbone | Latent Space | Inversion Method | Dataset$^\ast$ | Application$^\dagger$ |
|---|---|---|---|---|---|---|---|
| 1 | BiGAN (Link) (Code) | ICLR 2017 | / | | T | MN, IN | Inv |
| 2 | ALI (Link) (Code) | ICLR 2017 | / | | T | CF, SV, CA, IN | Inv, Int |
| 3 | Zhu et al. (Link) (Code) | ECCV 2016 | DCGAN | | L, O | SH, LS, PL$^\ddagger$ | Inv, Int, AE |
| 4 | IcGAN (Link) (Code) | NeurIPSw 2016 | cGAN | | L | MN, CA | Inv, AT, AE |
| 5 | Creswell et al. (Link) (Code) | T-NNLS 2018 | DCGAN, WGAN-GP | | O | OM, UT, CA | Inv |
| 6 | Lipton et al. (Link) (Code) | ICLRw 2017 | DCGAN | | O | CA | Inv |
| 7 | PGD-GAN (Link) (Code) | ICASSP 2018 | DCGAN | | O | MN, CA | Inv |
| 8 | Ma et al. (Link) (Code) | NeurIPS 2018 | DCGAN | | O | MN, CA | Inv, IP |
| 9 | Suzuki et al. (Link) (Code) | ArXiv 2018 | SNGAN, BigGAN, StyleGAN | | 3 | IN, FL, FF, DA | CO |
| 10 | GANDissection (Link) (Code) | ICLR 2019 | PGGAN | | / | LS, AD | AE, AR |
| 11 | NPGD (Link) (Code) | ICCV 2019 | DCGAN, SAGAN | | L, O | MN, CA, LS | Inv, SR, IP |
| 12 | Image2StyleGAN (Link) (Code) | ICCV 2019 | StyleGAN | | O | FF$^\ddagger$ | Inv, Int, AE, ST |
| 13 | Bau et al. (Link) (Code) | ICLRw 2019 | PGGAN, WGAN-GP, StyleGAN | | L, O | LS | Inv |
| 14 | GANPaint (Link) (Demo) | ToG 2019 | PGGAN | | L, O, T | LS | Inv, AE |
| 15 | InterFaceGAN (Link) (Code) | CVPR 2020 | PGGAN, StyleGAN | | 3, 8 | CH | AE, AR |
| 16 | GANSeeing (Link) (Code) | ICCV 2019 | PGGAN, WGAN-GP, StyleGAN | | 13 | LS | Inv |
| 17 | YLG (Link) (Code) | CVPR 2020 | SAGAN | | O | IN | Inv |
| 18 | Image2StyleGAN++ (Link) (Video) | CVPR 2020 | StyleGAN | | O | LS, FF | Inv, CO, IP, AE, ST |
| 19 | mGANPrior (Link) (Code) | CVPR 2020 | PGGAN, StyleGAN | | O | FF, CH, LS | Inv, IC, SR, IP, DN, AE |
| 20 | MimicGAN (Link) | IJCV 2020 | DCGAN | | O | CA, FF, LF | Inv, UDA, AD, AN |
| 21 | PULSE (Link) (Code) | CVPR 2020 | StyleGAN | | O | FF, CH | Inv, SR |
| 22 | DGP (Link) (Code) | ECCV 2020 | BigGAN | | O, T | IN, P3 | Inv, Int, IC, IP, SR, AD, TR, AE |
| 23 | StyleGAN2Distillation (Link) (Code) | ECCV 2020 | StyleGAN2, pix2pixHD | | / | FF | AT, AE |
| 24 | EditingInStyle (Link) (Code) | CVPR 2020 | PGGAN, StyleGAN, StyleGAN2 | | / | FF, LS | AT |
| 25 | StyleRig (Link) (Video) | CVPR 2020 | StyleGAN | | / | FF | AT |
| 26 | ALAE (Link) (Code) | CVPR 2020 | StyleGAN | | T | MN, FF, LS, CH | Inv, AT |
| 27 | IDInvert (Link) (Code) | ECCV 2020 | StyleGAN | | L, O | FF, LS | Inv, Int, AE, CO |
| 28 | pix2latent (Link) (Code) | ECCV 2020 | BigGAN, StyleGAN2 | | O | IN, CO, CF, LS | Inv, TR, AE |
| 29 | IDDistanglement (Link) (Code) | ToG 2020 | StyleGAN | | L | FF | Inv, AT |
| 30 | WhenAndHow (Link) | ArXiv 2020 | MLP | | O | MN | Inv, IP |
| 31 | Guan et al. (Link) | ArXiv 2020 | StyleGAN | | L, O | CH, CD | Inv, Int, AT, IC |
| 32 | SeFa (Link) (Code) | CVPR 2021 | PGGAN, BigGAN, StyleGAN | | 19, 27 | FF, CH, LS, IN, SS, DA | AE |
| 33 | GH-Feat (Link) (Code) | CVPR 2021 | StyleGAN | | L | MN, FF, LS, IN | Inv, AT, AE |
| 34 | pSp (Link) (Code) | CVPR 2021 | StyleGAN2 | | L | FF, AF, CH, CM | Inv, FF, SI, SR |
| 35 | StyleFlow (Link) (Code) | ToG 2021 | StyleGAN, StyleGAN2 | | 12 | FF, LS | AT, AE |
| 36 | PIE (Link) (Code) | ToG 2020 | StyleGAN | | O | FF | AT, AE |
| 37 | Bartz et al. (Link) (Code) | BMVC 2020 | StyleGAN, StyleGAN2 | | L | FF, LS | Inv, DN |
| 38 | StyleIntervention (Link) | ArXiv 2020 | StyleGAN2 | | O | FF | Inv, AE |
| 39 | StyleSpace (Link) (Code) | CVPR 2021 | StyleGAN2 | | O | FF, LS | Inv, AE |
| 40 | Hijack-GAN (Link) (Code) | CVPR 2021 | PGGAN, StyleGAN | | / | CH | AE |
| 41 | NaviGAN (Link) (Code) | CVPR 2021 | pix2pixHD, BigGAN, StyleGAN2 | | StyleGAN2 | FF, LS, CS, IN | AE |
| 42 | GLEAN (Link) (Code) | CVPR 2021 | StyleGAN | | L | FF, LS | Inv, SR |
| 43 | ImprovedGANEmbedding (Link) (Code) | ArXiv 2020 | StyleGAN, StyleGAN2 | | O | FF, MF$^\ddagger$ | Inv, IC, IP, SR |
| 44 | GFPGAN (Link) (Code) | CVPR 2021 | StyleGAN2 | | L | FF | Inv, SR |
| 45 | EnjoyEditing (Link) (Code) | ICLR 2021 | PGGAN, StyleGAN2 | | 12 | FF, CA, CH, P3, TR | Inv, AE |
| 46 | SAM (Link) (Code) | ToG 2021 | StyleGAN | | L | CA, CH | AE |
| 47 | e4e (Link) (Code) | ToG 2021 | StyleGAN2 | | L | FF, CH, LS, SC | Inv, AE |
| 48 | StyleCLIP (Link) (Code) | ICCV 2021 | StyleGAN2 | | 47, O | FF, CH, LS, AF | AE |
| 49 | LatentComposition (Link) (Code) | ICLR 2021 | PGGAN, StyleGAN2 | | L | FF, CH, LS | Inv, IP, AT |
| 50 | GANEnsembling (Link) (Code) | CVPR 2021 | StyleGAN2 | | L, O | CH, SC, PT | Inv, AT |
| 51 | ReStyle (Link) (Code) | ICCV 2021 | StyleGAN2 | | L | FF, CH, SC, LS, AF | Inv, AE |
| 52 | E2Style (Link) (Code) | T-IP 2022 | StyleGAN2 | | L | FF, CH | Inv, SI, PI, AT, IP, SR, AE, IH |
| 53 | GPEN (Link) (Code) | CVPR 2021 | StyleGAN2 | | L | FF, CH | Inv, SR |
| 54 | Consecutive (Link) (Code) | ICCV 2021 | StyleGAN | | O | FF, RA | Inv, Int, AE |
| 55 | BDInvert (Link) (Code) | ICCV 2021 | StyleGAN, StyleGAN2 | | O | FF, CH, LS | Inv, AE |
| 56 | HFGI (Link) (Code) | CVPR 2022 | StyleGAN2 | | L | FF, CH, SC | Inv, AE |
| 57 | VisualVocab (Link) (Code) | ICCV 2021 | BigGAN | | / | P3, IN | AE |
| 58 | HyperStyle (Link) (Code) | CVPR 2022 | StyleGAN2 | | L | FF, CH, AF | Inv, AE, ST |
| 59 | GANGealing (Link) (Code) | CVPR 2022 | StyleGAN2 | | / | LS, FF, AF, CH, CU | TR |
| 60 | HyperInverter (Link) (Code) | CVPR 2022 | StyleGAN2 | | L | FF, CH, LS | Inv, Int, AE |
| 61 | InsetGAN (Link) (Code) | CVPR 2022 | StyleGAN2 | | O | FF, DF$^\ddagger$ | CO, IG |
| 62 | HairMapper (Link) (Code) | CVPR 2022 | StyleGAN2 | | 47 | FF, CM$^\ddagger$ | AE |
| 63 | SAMInv (Link) (Code) | CVPR 2022 | BigGAN-deep, StyleGAN2 | | L | FF, LS, IN | Inv, AE |

Pull requests are welcome for error correction and content expansion!

Tips:

- Tables in LaTeX and Markdown can be generated with tablesgenerator.com

- You can download our table content from here and load it (or your own CSV files) into tablesgenerator.com
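If you prefer to convert a CSV file locally, the following is a minimal sketch using only the Python standard library. It is not the tool we used, and the helper name `csv_to_markdown` is hypothetical. The column names in the sample are taken from the table header above; the layout of the downloadable CSV is assumed to have a header row followed by one row per method.

```python
import csv
import io


def csv_to_markdown(csv_text):
    """Render CSV text as a Markdown table (hypothetical helper).

    Assumes the first CSV row is the header. Short rows are padded
    with empty cells and long rows are truncated to the header width.
    """
    rows = [row for row in csv.reader(io.StringIO(csv_text)) if row]
    if not rows:
        return ""
    header, body = rows[0], rows[1:]
    width = len(header)

    def format_row(row):
        # Escape pipes so cell text cannot break the table layout.
        cells = [cell.strip().replace("|", "\\|") for cell in row[:width]]
        cells += [""] * (width - len(cells))
        return "| " + " | ".join(cells) + " |"

    lines = [format_row(header), "|" + "---|" * width]
    lines.extend(format_row(row) for row in body)
    return "\n".join(lines)


if __name__ == "__main__":
    sample = (
        "No.,Method,Backbone,Inversion Method\n"
        "3,Zhu et al.,DCGAN,\"L, O\"\n"
        "12,Image2StyleGAN,StyleGAN,O\n"
    )
    print(csv_to_markdown(sample))
```

Cells that contain commas, such as `L, O`, need to be quoted in the CSV so that they stay in one column.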

Please find more details in our paper. If you find it useful, please consider citing it:

@article{liu2022pretrainedGANs,
  title={A Survey on Leveraging Pre-trained Generative Adversarial Networks for Image Editing and Restoration},
  author={Liu, Ming and Wei, Yuxiang and Wu, Xiaohe and Zuo, Wangmeng and Zhang, Lei},
  journal={arXiv preprint arXiv:2207.10309},
  year={2022}
}