Few-shot learning has gained considerable momentum in the past few years, thanks to its ability to learn from small labeled datasets. This is especially relevant in medical imaging, where datasets often have few high-quality annotations, as is the case for CT scans of COVID-19 infection.

This project aims to provide an intuitive framework, with detailed documentation and easy-to-use code, that shows the benefits of few-shot learning for semantic segmentation of COVID-19 lung infection. By the very nature of few-shot learning, the framework is also readily applicable to automatic detection/segmentation of other types of lung infection, without the need for re-training on more specific datasets.

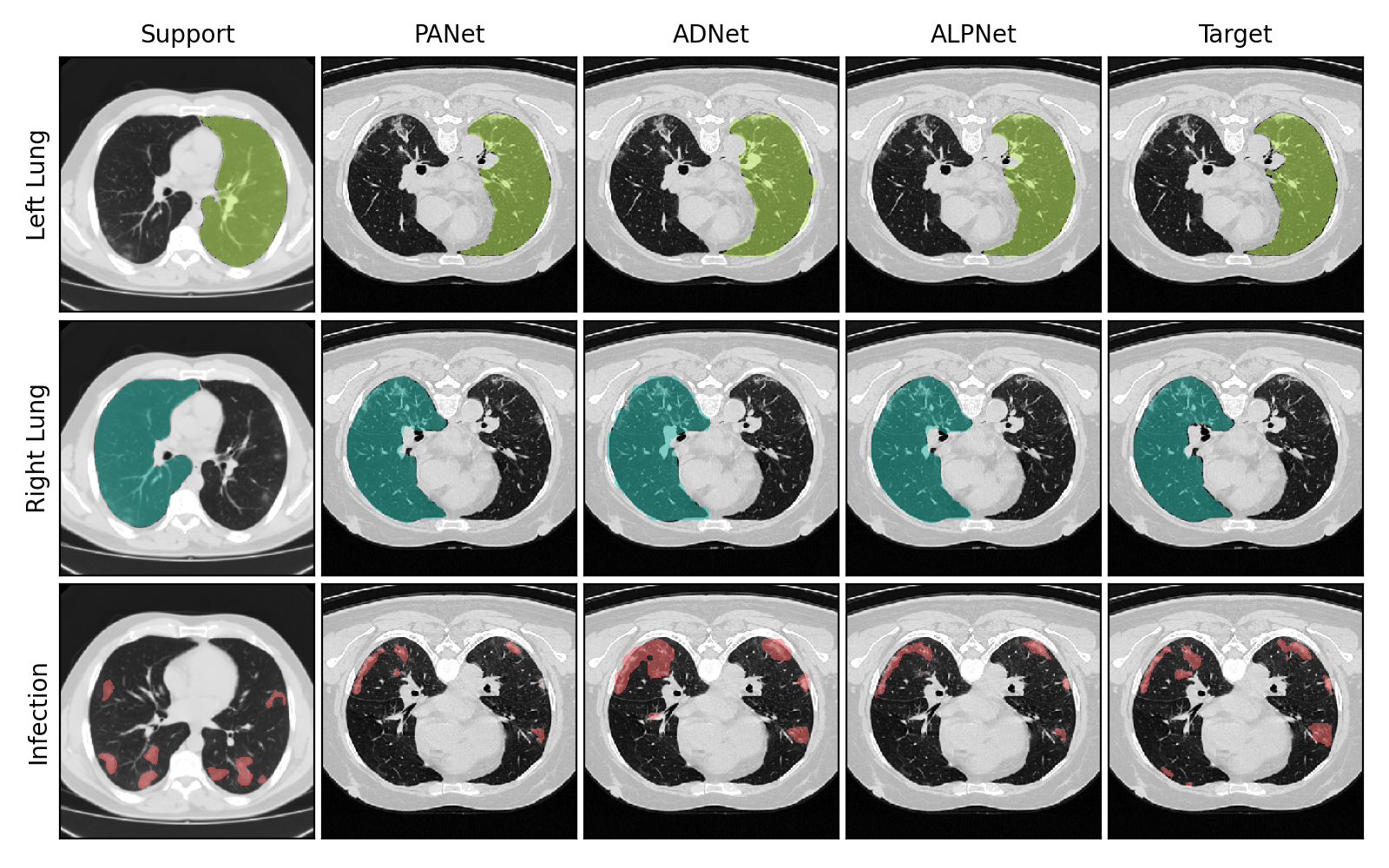

The few-shot models used in this project are based on PANet, ADNet and ALPNet, which are popular in the medical image segmentation landscape. Have a look at their papers!

Thanks for your interest. 🙂

- Clone/fork the repository.
- Install the required dependencies, listed in `requirements.txt`.
- Change the paths in the script files `./experiments/<...>.sh` according to your root directory.

- Download the COVID-19-CT-Seg dataset.
- Put the CT scans under `./data/COVID-19-CT-Seg/Data` and the segmentation masks under `./data/COVID-19-CT-Seg/<Infection_Mask/Lung_Mask/Lung_and_Infection_Mask>`.
- Run the data pre-processing notebook.

  ☝ This pre-processing pipeline can be used for other medical imaging datasets with minor adjustments, feel free to experiment!
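Before running the pre-processing notebook, it can help to confirm that the files landed in the folders listed above. The following is a minimal sketch, not part of the repository: `check_dataset_layout` is a hypothetical helper, and it only counts files in each folder without inspecting their format.

```python
from pathlib import Path

# Sub-folders named in the steps above.
EXPECTED_SUBDIRS = ("Data", "Infection_Mask", "Lung_Mask", "Lung_and_Infection_Mask")


def check_dataset_layout(root="./data/COVID-19-CT-Seg"):
    """Return {subdir: number of files}, with -1 marking a missing folder.

    Hypothetical helper for illustration; the repository may organise
    its own checks differently.
    """
    root = Path(root)
    counts = {}
    for name in EXPECTED_SUBDIRS:
        folder = root / name
        if folder.is_dir():
            counts[name] = sum(1 for p in folder.iterdir() if p.is_file())
        else:
            counts[name] = -1
    return counts


if __name__ == "__main__":
    for name, n in check_dataset_layout().items():
        status = "missing" if n < 0 else f"{n} files"
        print(f"{name}: {status}")
```

A folder reported as `missing`, or mask folders whose file counts differ from `Data`, usually points to a misplaced download.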

- Adjust the experimental settings in `./configs/config_<panet/adnet/alpnet>.yaml`.
- Each model is trained and evaluated in a five-fold cross-validation fashion. For each fold:
  - Train on the training split by running `./experiments/train_<panet/adnet/alpnet>.sh`.
  - Evaluate on the validation split by running `./experiments/validate_<panet/adnet/alpnet>.sh`.
  - Remember to change `FOLD` in the scripts after each cycle.

- ☝ If you have a computer cluster or a machine with multiple GPUs, you can try distributed training. Just change the last command in the train scripts, e.g. `torchrun --nproc_per_node=2 distributed_train.py` (using 2 GPUs), to run an equivalent training schedule but with distributed settings.

  Do note that this feature is still under development, so it might not work on every machine.
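The five-fold procedure described above can be sketched as follows. This is only an illustration of the idea: `k_fold_splits` is a hypothetical function, the contiguous split is an assumption (the repository may assign scans to folds differently), and the dataset size of 20 scans is assumed for the example.

```python
def k_fold_splits(n_scans, k=5):
    """Yield (fold, train_ids, val_ids) with contiguous, near-equal validation folds.

    Hypothetical helper: each scan index appears in exactly one validation fold.
    """
    ids = list(range(n_scans))
    base, extra = divmod(n_scans, k)
    start = 0
    for fold in range(k):
        # The first `extra` folds take one additional scan when n_scans % k != 0.
        size = base + (1 if fold < extra else 0)
        val_ids = ids[start:start + size]
        train_ids = ids[:start] + ids[start + size:]
        yield fold, train_ids, val_ids
        start += size


if __name__ == "__main__":
    # 20 scans is an assumed dataset size, used only for illustration.
    for fold, train_ids, val_ids in k_fold_splits(20):
        print(f"FOLD={fold}: train on {len(train_ids)} scans, validate on {val_ids}")
```

Each pass of the loop corresponds to one train/validate cycle with a different `FOLD` value in the scripts.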

- You can use this notebook to visualise the results of your experiments.
- The table below shows a summary of the evaluation scores obtained by training for 50,000 iterations with a ResNet50 backbone.

| | PANet | ADNet | ALPNet |
|---|---|---|---|
| Left Lung | | | |
| Right Lung | | | |
| Infection | | | |
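The text above does not name the metric behind these evaluation scores. The Dice coefficient is a common choice for segmentation, so the sketch below assumes it purely for illustration. `dice_score` is a hypothetical function operating on flattened binary masks, not the repository's evaluation code.

```python
def dice_score(pred, target):
    """Dice coefficient between two equally sized binary masks (flat sequences of 0/1)."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of voxels")
    # Voxels labelled foreground in both masks.
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in target if t)
    # Convention assumed here: two empty masks count as a perfect match.
    return 1.0 if total == 0 else 2.0 * intersection / total


if __name__ == "__main__":
    pred = [0, 1, 1, 0, 1]
    target = [0, 1, 0, 0, 1]
    print(dice_score(pred, target))  # 2 * 2 / (3 + 2) = 0.8
```

A score of 1.0 means the predicted and reference masks overlap perfectly, while 0.0 means they share no foreground voxels.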