VAD: Vectorized Scene Representation for Efficient Autonomous Driving

Bo Jiang1*, Shaoyu Chen1*, Qing Xu2, Bencheng Liao1, Jiajie Chen2, Helong Zhou2, Qian Zhang2, Wenyu Liu1, Chang Huang2, Xinggang Wang1,†

1 Huazhong University of Science and Technology, 2 Horizon Robotics

*: equal contribution, †: corresponding author.

arXiv Paper, ICCV 2023

- 30 Oct, 2024: Check out our new work Senna 🥰, which combines VAD/VADv2 with large vision-language models to achieve more accurate, robust, and generalizable autonomous driving planning.

- 20 Sep, 2024: Core code of VADv2 (config and model) is available in the VADv2 folder. It is easy to integrate into the VADv1 framework for training and inference.

- 17 June, 2024: CARLA implementation of VADv1 is available on Bench2Drive.

- 20 Feb, 2024: VADv2 is available on arXiv (paper, project page).

- 1 Aug, 2023: Code & models are released!

- 14 July, 2023: VAD is accepted by ICCV 2023 🎉! Code and models will be open source soon!

- 21 Mar, 2023: We release the VAD paper on arXiv. Code/Models are coming soon. Please stay tuned! ☕️

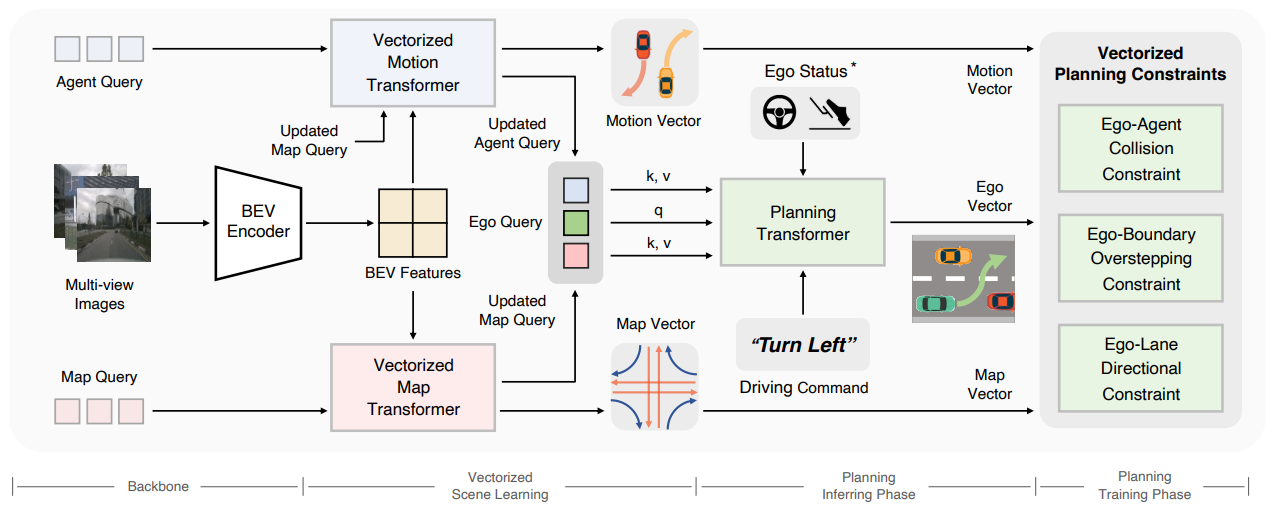

VAD is a vectorized paradigm for end-to-end autonomous driving.

- We propose VAD, an end-to-end unified vectorized paradigm for autonomous driving. VAD models the driving scene as a fully vectorized representation, dispensing with computationally intensive dense rasterized representations and hand-designed post-processing steps.

- VAD implicitly and explicitly utilizes the vectorized scene information to improve planning safety, via query interaction and vectorized planning constraints.

- VAD achieves SOTA end-to-end planning performance, outperforming previous methods by a large margin. Thanks to the vectorized scene representation and a concise model design, VAD also greatly improves inference speed, which is critical for real-world deployment of an autonomous driving system.
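To make the idea of a vectorized planning constraint more concrete, here is a minimal sketch of a collision-style penalty computed directly on vectors (waypoints), with no rasterized map involved. It is an illustration only and not the repository's implementation: the function name `collision_penalty`, the radial distance check, and the `safety_dist` value are all assumptions, and the constraints actually used in VAD may be defined differently.

```python
import math


def collision_penalty(ego_plan, agent_futures, safety_dist=2.0):
    """Hinge penalty on ego-agent proximity (hypothetical helper).

    ego_plan:      list of (x, y) planned ego waypoints, one per time step.
    agent_futures: list of predicted agent trajectories, each a list of
                   (x, y) points aligned with ego_plan in time.
    safety_dist:   assumed safety radius in metres (illustrative value).
    """
    if not ego_plan:
        return 0.0
    total = 0.0
    for t, (ex, ey) in enumerate(ego_plan):
        # Distance from the planned waypoint to the closest agent at step t.
        distances = [math.hypot(ex - traj[t][0], ey - traj[t][1])
                     for traj in agent_futures]
        closest = min(distances, default=math.inf)
        # Penalize only when the closest agent is inside the safety radius.
        total += max(0.0, safety_dist - closest)
    return total / len(ego_plan)


ego_plan = [(0.0, 1.0), (0.0, 2.0), (0.0, 3.0)]
agent_far = [[(5.0, 1.0), (5.0, 2.0), (5.0, 3.0)]]
agent_cut_in = [[(5.0, 1.0), (2.0, 2.0), (0.5, 3.0)]]

print(collision_penalty(ego_plan, agent_far))     # 0.0
print(collision_penalty(ego_plan, agent_cut_in))  # 0.5
```

Because both the plan and the predicted agent motion are short lists of points, a penalty like this costs a handful of distance computations per time step, which is the kind of saving a vectorized representation offers over dense grids.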

| Method | Backbone | avg. L2 | avg. Col. | FPS | Config | Download |
|---|---|---|---|---|---|---|
| VAD-Tiny | R50 | 0.78 | 0.38 | 16.8 | config | model |
| VAD-Base | R50 | 0.72 | 0.22 | 4.5 | config | model |

| Method | L2 (m) 1s | L2 (m) 2s | L2 (m) 3s | Col. (%) 1s | Col. (%) 2s | Col. (%) 3s | FPS |
|---|---|---|---|---|---|---|---|
| ST-P3 | 1.33 | 2.11 | 2.90 | 0.23 | 0.62 | 1.27 | 1.6 |
| UniAD | 0.48 | 0.96 | 1.65 | 0.05 | 0.17 | 0.71 | 1.8 |
| VAD-Tiny | 0.46 | 0.76 | 1.12 | 0.21 | 0.35 | 0.58 | 16.8 |
| VAD-Base | 0.41 | 0.70 | 1.05 | 0.07 | 0.17 | 0.41 | 4.5 |
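The `avg. L2` and `avg. Col.` columns in the first table appear to be the plain mean of the per-horizon values (1s, 2s, 3s) listed above. The short script below checks that reading, under the assumption that no other weighting is applied, and also derives the FPS ratio between VAD-Tiny and UniAD. All numbers are copied from the tables; the variable and function names are illustrative.

```python
# Per-horizon open-loop numbers copied from the table above (1s, 2s, 3s).
results = {
    "ST-P3":    {"l2": [1.33, 2.11, 2.90], "col": [0.23, 0.62, 1.27], "fps": 1.6},
    "UniAD":    {"l2": [0.48, 0.96, 1.65], "col": [0.05, 0.17, 0.71], "fps": 1.8},
    "VAD-Tiny": {"l2": [0.46, 0.76, 1.12], "col": [0.21, 0.35, 0.58], "fps": 16.8},
    "VAD-Base": {"l2": [0.41, 0.70, 1.05], "col": [0.07, 0.17, 0.41], "fps": 4.5},
}


def horizon_mean(values):
    """Arithmetic mean over the three planning horizons."""
    return sum(values) / len(values)


# (avg L2 in metres, avg collision rate in percent), rounded to two decimals.
summary = {
    name: (round(horizon_mean(r["l2"]), 2), round(horizon_mean(r["col"]), 2))
    for name, r in results.items()
}

for name, (avg_l2, avg_col) in summary.items():
    print(f"{name:9s} avg L2 = {avg_l2:.2f} m, avg Col. = {avg_col:.2f} %")

# Inference speed of VAD-Tiny relative to UniAD.
speedup = results["VAD-Tiny"]["fps"] / results["UniAD"]["fps"]
print(f"VAD-Tiny vs UniAD speedup: {speedup:.1f}x")
```

The computed averages for VAD-Tiny (0.78 m, 0.38 %) and VAD-Base (0.72 m, 0.22 %) match the first table, and the FPS ratio comes out at roughly 9.3x.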

- Closed-loop simulation results on CARLA.

| Method | Town05 Short DS | Town05 Short RC | Town05 Long DS | Town05 Long RC |
|---|---|---|---|---|
| CILRS | 7.47 | 13.40 | 3.68 | 7.19 |
| LBC | 30.97 | 55.01 | 7.05 | 32.09 |
| Transfuser* | 54.52 | 78.41 | 33.15 | 56.36 |
| ST-P3 | 55.14 | 86.74 | 11.45 | 83.15 |
| VAD-Base | 64.29 | 87.26 | 30.31 | 75.20 |

*: LiDAR-based method.

- Code & Checkpoints Release

- Initialization

If you have any questions or suggestions about this repo, please feel free to contact us (bjiang@hust.edu.cn, outsidercsy@gmail.com).

If you find VAD useful in your research or applications, please consider giving us a star 🌟 and citing it by the following BibTeX entry.

@article{jiang2023vad,
  title={VAD: Vectorized Scene Representation for Efficient Autonomous Driving},
  author={Jiang, Bo and Chen, Shaoyu and Xu, Qing and Liao, Bencheng and Chen, Jiajie and Zhou, Helong and Zhang, Qian and Liu, Wenyu and Huang, Chang and Wang, Xinggang},
  journal={ICCV},
  year={2023}
}

@article{chen2024vadv2,
  title={Vadv2: End-to-end vectorized autonomous driving via probabilistic planning},
  author={Chen, Shaoyu and Jiang, Bo and Gao, Hao and Liao, Bencheng and Xu, Qing and Zhang, Qian and Huang, Chang and Liu, Wenyu and Wang, Xinggang},
  journal={arXiv preprint arXiv:2402.13243},
  year={2024}
}

All code in this repository is under the Apache License 2.0.

VAD is based on the following projects: mmdet3d, detr3d, BEVFormer and MapTR. Many thanks for their excellent contributions to the community.