pLM-BLAST is a sensitive remote homology detection tool based on the comparison of residue embeddings obtained from protein language models such as ProtTrans5. It is available as a stand-alone package as well as an easy-to-use web server within the MPI Bioinformatics Toolkit, where pre-computed databases can be searched.

Create a conda environment:

```bash
conda create --name plmblast python=3.10
conda activate plmblast

# Install pip in the environment
conda install pip
```

Install pLM-BLAST using requirements.txt:

```bash
pip install -r requirements.txt
```

Pre-computed databases can be downloaded from http://ftp.tuebingen.mpg.de/pub/protevo/toolkit/databases/plmblast_dbs. pLM-BLAST can use any kind of embeddings, as long as they have the shape (seqlen, embdim).
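To make the (seqlen, embdim) shape concrete, here is a minimal sketch of comparing two per-residue embeddings, one vector per residue. It assumes cosine similarity as the residue-to-residue score and uses plain Python lists instead of tensors. The function residue_similarity_matrix is hypothetical; it is not pLM-BLAST's own code, which builds its alignments on top of a residue-level comparison.

```python
import math
from typing import List

# One embedding = one vector per residue, i.e. shape (seqlen, embdim).
Embedding = List[List[float]]


def _unit(vec: List[float]) -> List[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def residue_similarity_matrix(a: Embedding, b: Embedding) -> List[List[float]]:
    """Cosine similarity of every residue in `a` against every residue in `b`.

    Returns a (len(a), len(b)) matrix. Hypothetical helper for illustration.
    """
    if a and b and len(a[0]) != len(b[0]):
        raise ValueError("both embeddings must have the same embdim")
    a_unit = [_unit(v) for v in a]
    b_unit = [_unit(v) for v in b]
    return [[sum(x * y for x, y in zip(u, v)) for v in b_unit] for u in a_unit]


if __name__ == "__main__":
    query = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # seqlen=3, embdim=2
    target = [[2.0, 0.0], [0.0, 3.0]]             # seqlen=2, embdim=2
    for row in residue_similarity_matrix(query, target):
        print([round(x, 3) for x in row])
```

Normalizing each residue vector once up front keeps the inner loop a plain dot product.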

The embeddings.py script can be used to create a custom database of pLM embeddings from a CSV or FASTA file, using a T5-based model such as ProtT5, an ESM-family model, or any model that works with the transformers AutoModel class. For example, the first lines of the CSV file for the ECOD database are:

```csv
,id,description,sequence
0,ECOD_000151743_e4aybQ1,"ECOD_000151743_e4aybQ1 | 4146.1.1.2 | 4AYB Q:33-82 | A: alpha bundles, X: NO_X_NAME, H: NO_H_NAME, T: YqgQ-like, F: RNA_pol_Rpo13 | Protein: DNA-DIRECTED RNA POLYMERASE",FPKLSIQDIELLMKNTEIWDNLLNGKISVDEAKRLFEDNYKDYEKRDSRR
1,ECOD_000399743_e3nmdE1,"ECOD_000399743_e3nmdE1 | 5027.1.1.3 | 3NMD E:3-53 | A: extended segments, X: NO_X_NAME, H: NO_H_NAME, T: Preprotein translocase SecE subunit, F: DD_cGKI-beta | Protein: cGMP Dependent PRotein Kinase",LRDLQYALQEKIEELRQRDALIDELELELDQKDELIQMLQNELDKYRSVI
2,ECOD_002164660_e6atuF1,"ECOD_002164660_e6atuF1 | 927.1.1.1 | 6ATU F:8-57 | A: few secondary structure elements, X: NO_X_NAME, H: NO_H_NAME, T: Elafin-like, F: WAP | Protein: Elafin",PVSTKPGSCPIILIRCAMLNPPNRCLKDTDCPGIKKCCEGSCGMACFVPQ
```

If the input file is in CSV format, use -cname to specify in which column the sequences are stored.
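As an illustration of what -cname selects, the sketch below reads the sequences from a named column of a CSV file laid out like the ECOD example above. It uses only Python's csv module; read_sequences and its id_col parameter are hypothetical and not part of embeddings.py.

```python
import csv
from typing import Dict


def read_sequences(csv_path: str, cname: str = "sequence", id_col: str = "id") -> Dict[str, str]:
    """Return {entry id: sequence} using the column named `cname`.

    Quoted fields (such as the ECOD descriptions, which contain commas)
    are handled by the csv module. Hypothetical helper for illustration.
    """
    with open(csv_path, newline="") as handle:
        reader = csv.DictReader(handle)
        columns = reader.fieldnames or []
        for needed in (cname, id_col):
            if needed not in columns:
                raise KeyError(f"column {needed!r} not found in {csv_path}")
        return {row[id_col]: row[cname] for row in reader}
```

For the ECOD file, read_sequences("database.csv", cname="sequence") would map each ECOD identifier to its sequence.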

When dealing with large databases, it is recommended to sort the input sequences by length before running embeddings.py.
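One way to do this for a CSV input is sketched below. It assumes the whole file fits in memory and that embeddings.py processes rows in file order; sort_csv_by_length is a hypothetical helper, and the ascending default is an arbitrary choice. A likely benefit is that consecutive sequences of similar length need less padding when batched together.

```python
import csv


def sort_csv_by_length(src: str, dst: str, cname: str = "sequence", descending: bool = False) -> int:
    """Write a copy of `src` to `dst` with rows ordered by sequence length.

    All columns (including the unnamed index column) are kept unchanged.
    Returns the number of data rows written. Hypothetical helper.
    """
    with open(src, newline="") as handle:
        reader = csv.DictReader(handle)
        fieldnames = reader.fieldnames
        rows = list(reader)
    if fieldnames is None or cname not in fieldnames:
        raise KeyError(f"column {cname!r} not found in {src}")
    # list.sort is stable, so sequences of equal length keep their original order.
    rows.sort(key=lambda row: len(row[cname]), reverse=descending)
    with open(dst, "w", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
    return len(rows)
```

The sorted file can then be passed to embeddings.py in place of the original.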

By default, embeddings.py uses ProtT5 (`-embedder pt` is an alias for `Rostlab/prot_t5_xl_half_uniref50-enc`). Passing `-embedder hf:modelname_or_path` downloads, or loads from a local path, any model supported by the Hugging Face AutoModel class (equivalent to calling `AutoModel.from_pretrained(modelname_or_path)`).
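The option semantics described above can be summarized as a small lookup. This is a hypothetical sketch (resolve_embedder is not a function of embeddings.py) that only turns an -embedder value into the model identifier it refers to; loading the model is left to embeddings.py.

```python
# Alias documented in this README for the default ProtT5 encoder.
PT_MODEL = "Rostlab/prot_t5_xl_half_uniref50-enc"
HF_PREFIX = "hf:"


def resolve_embedder(spec: str) -> str:
    """Map an -embedder value to a model name or local path (hypothetical helper)."""
    if spec == "pt":
        return PT_MODEL
    if spec.startswith(HF_PREFIX):
        name = spec[len(HF_PREFIX):]
        if not name:
            raise ValueError("expected hf:modelname_or_path")
        # For hf: models, the README describes loading as
        # AutoModel.from_pretrained(name) from the transformers library.
        return name
    raise ValueError(f"unsupported -embedder value: {spec!r}")
```

For example, a hypothetical local checkpoint given as hf:/models/my_local_model resolves to /models/my_local_model.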

```bash
# CSV input
python embeddings.py start database.csv database -embedder pt -cname sequence --gpu -bs 0 --asdir

# FASTA input
python embeddings.py start database.fasta database -embedder pt --gpu -bs 0 --asdir
```

In the examples above, `database` defines the directory where the sequence embeddings are stored.

The batch size (number of sequences per batch) is set with the -bs option. Setting -bs to 0 activates the adaptive mode, in which the batch size is chosen so that the sequences in each batch contain no more than 3000 residues in total (this value can be changed with --res_per_batch).
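The adaptive mode can be pictured as greedy packing of consecutive sequences under a residue budget. The sketch below is an assumption about the general idea, not the embeddings.py code: it ignores the divisible-by-4 adjustment mentioned in the changelog, and a single sequence longer than the budget simply gets a batch of its own. adaptive_batches is a hypothetical name.

```python
from typing import List


def adaptive_batches(lengths: List[int], res_per_batch: int = 3000) -> List[List[int]]:
    """Group consecutive sequence indices so each batch holds at most
    `res_per_batch` residues in total (a lone over-long sequence forms
    its own batch). Hypothetical illustration of adaptive batching."""
    batches: List[List[int]] = []
    current: List[int] = []
    total = 0
    for idx, length in enumerate(lengths):
        # Close the current batch if adding this sequence would exceed the budget.
        if current and total + length > res_per_batch:
            batches.append(current)
            current, total = [], 0
        current.append(idx)
        total += length
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    print(adaptive_batches([1000, 1500, 800, 2500, 4000, 100]))
```

Because sequences are packed in input order, short sequences produce large batches and long ones produce small batches.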

The use of --gpu is highly recommended for large datasets. To run embeddings.py on multiple GPUs, specify -nproc X where X is the number of GPU devices you want to use.
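How the work is divided among the X processes is not described here. As one plausible scheme (an assumption, not necessarily what embeddings.py does), the input can be split into X contiguous chunks of near-equal size, with chunk i handled by the process on GPU i. split_for_workers is a hypothetical helper.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def split_for_workers(items: Sequence[T], nproc: int) -> List[List[T]]:
    """Split `items` into `nproc` contiguous chunks whose sizes differ by at most one."""
    if nproc < 1:
        raise ValueError("nproc must be at least 1")
    base, extra = divmod(len(items), nproc)
    chunks: List[List[T]] = []
    start = 0
    for rank in range(nproc):
        # The first `extra` workers take one additional item each.
        size = base + (1 if rank < extra else 0)
        chunks.append(list(items[start:start + size]))
        start += size
    return chunks


if __name__ == "__main__":
    for rank, chunk in enumerate(split_for_workers(list(range(10)), 3)):
        # Hypothetical device naming; each chunk would go to its own process/GPU.
        print(f"cuda:{rank}", chunk)
```

Contiguous chunks keep each worker's share in input order, so a length-sorted input stays sorted within every worker.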

When dealing with large databases, it may be helpful to resume previously stopped or interrupted computations. When embeddings.py encounters an exception or a keyboard interrupt, the main process saves the current progress in a checkpoint file. To resume, type:

```bash
python embeddings.py resume database
```

To search a database `database` with a FASTA sequence in `query.fas`, first compute the query embedding:

```bash
python embeddings.py start query.fas query.pt
```

Then use the plmblast.py script to search the database:

```bash
python ./scripts/plmblast.py database query output.csv -cpc 70
```

You can also perform an all-vs-all search:

```bash
python ./scripts/plmblast.py database database output.csv -cpc 70
```

🌞 Note that only the base filename should be specified for the query and database (extensions are added automatically). 🌞

The -cpc X option (with X > 0) enables cosine similarity pre-screening, which speeds up the search. This option is recommended for typical applications, such as query-vs-database searches. Follow the link for more examples, and run plmblast.py -h for more options.
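The idea of pre-screening is to discard database entries that look dissimilar under a cheap measure before running the full alignment. The sketch below assumes that X acts as a percentile cut-off, so only the best-scoring (100 - X)% of entries are kept; that reading is not stated in this README, so check plmblast.py -h. It also compares one mean-pooled vector per sequence, whereas pLM-BLAST's own pre-screening may work differently (for example on sequence chunks). All function names are hypothetical.

```python
import math
from typing import Dict, List

Embedding = List[List[float]]  # shape (seqlen, embdim)


def mean_pool(embedding: Embedding) -> List[float]:
    """Average the per-residue vectors into one vector of length embdim."""
    return [sum(column) / len(embedding) for column in zip(*embedding)]


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity of two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm > 0 else 0.0


def prescreen(query: Embedding, database: Dict[str, Embedding], cpc: float) -> List[str]:
    """Keep the top (100 - cpc)% of database entries by cosine similarity.

    Hypothetical reading of -cpc as a percentile cut-off; 0 < cpc <= 100.
    At least one entry is always kept when the database is non-empty.
    """
    if not 0 < cpc <= 100:
        raise ValueError("cpc must be in (0, 100]")
    if not database:
        return []
    q = mean_pool(query)
    scores = {name: cosine(q, mean_pool(emb)) for name, emb in database.items()}
    n_keep = max(1, math.ceil(len(scores) * (100 - cpc) / 100))
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:n_keep]
```

Only the returned entries would then go on to the slower, more accurate residue-level alignment; a higher X therefore means fewer alignments and a faster search.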

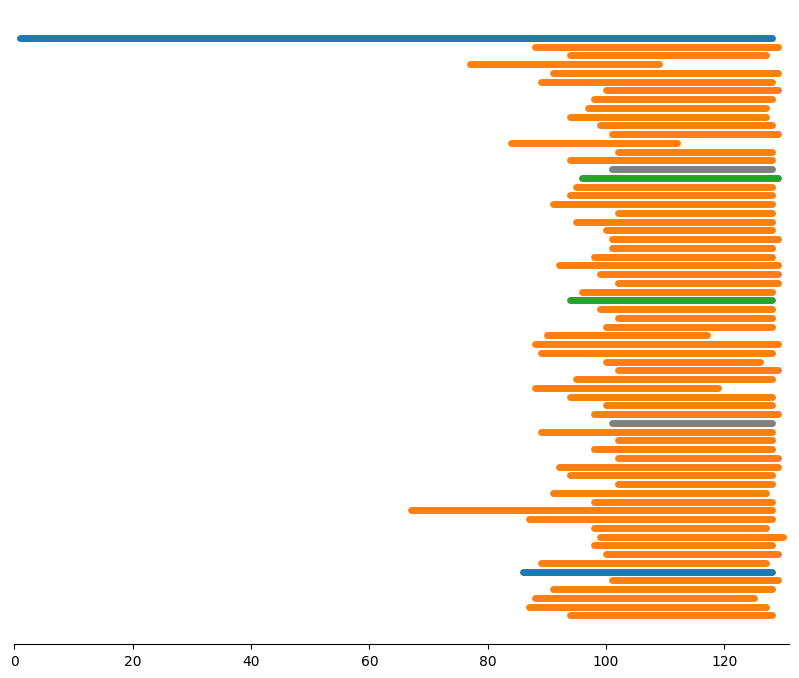

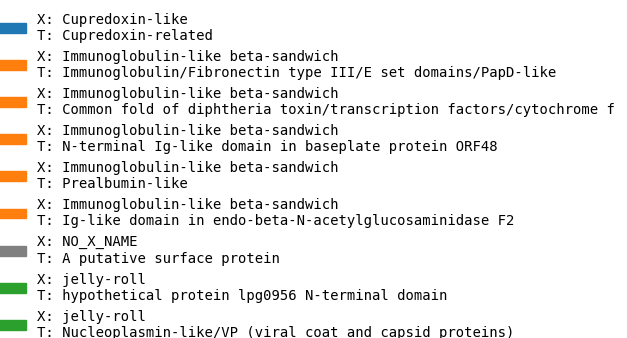

The results of searching a database with a single query sequence can be visualized using the plot.py script. For example:

```bash
python ./scripts/plot.py results.csv query.fas plot.png -mode score -ecod
```

Here, results.csv contains the results of the plmblast.py script, query.fas is the query sequence in FASTA format, and plot.png is the plot file that will be generated.

The -mode option specifies the order of the hits in the plot: score sorts the hits by score, while qend (the default) sorts them by the end position of the match in the query. In both modes, the bars corresponding to each hit are colored according to the score.
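The two orderings can be expressed as sort keys. In this hypothetical sketch, each hit is a dict whose score and qend keys stand in for the corresponding columns of the results file (the actual column names are an assumption), and the sort directions (highest score first, smallest qend first) are also assumed.

```python
from typing import Any, Dict, List

Hit = Dict[str, Any]


def order_hits(hits: List[Hit], mode: str = "qend") -> List[Hit]:
    """Return hits in plotting order for the given -mode value (hypothetical helper)."""
    if mode == "score":
        # Highest-scoring hits first.
        return sorted(hits, key=lambda hit: hit["score"], reverse=True)
    if mode == "qend":
        # Hits whose match ends earlier in the query first.
        return sorted(hits, key=lambda hit: hit["qend"])
    raise ValueError(f"unknown mode: {mode!r}")
```

Sorting by qend stacks the hits along the query from N- to C-terminus, while sorting by score puts the strongest matches at the top regardless of position.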

The additional flag -ecod can be used to color the hits according to the ECOD classification. When this flag is used, an additional legend plot will be generated (.legend.png extension).

A visualization of the results of searching the ECOD30 database with the sequence of the cupredoxin domain of the glycogen debranching protein. See onevsall.sh for details.

If you find pLM-BLAST useful, please cite:

"pLM-BLAST – distant homology detection based on direct comparison of sequence representations from protein language models"

Kamil Kaminski, Jan Ludwiczak, Kamil Pawlicki, Vikram Alva, and Stanislaw Dunin-Horkawicz


If you have any questions, problems, or suggestions, please contact us.

This work was supported by the First TEAM program of the Foundation for Polish Science co-financed by the European Union under the European Regional Development Fund.

- 26/09/2023 Improved the embedding extraction script: calculations can now be resumed if interrupted (see the databases section for more info).
- 26/09/2023 Improved the adaptive batching strategy for the `-bs 0` option: the batch size is now divisible by 4 for better performance, and `-res_per_batch` options have been added.
- 9/10/2023 Added support for `hdf5` files for embedding generation; support for the `run_plmblast.py` script will be added soon.
- 9/10/2023 Added a multi-processing feature to embedding generation: the `-nproc X` option now spawns `X` independent processes.
- 27/10/2023 Added an `examples` directory with end-to-end usage examples.
- 26/11/2023 Added parallelism to cosine pre-screening, which gives a large performance boost, especially for multiple query sequences.
- 05/12/2023 Added signal enhancement, as described in "Embedding-based alignment: combining protein language models and alignment approaches to detect structural similarities in the twilight-zone": https://www.biorxiv.org/content/10.1101/2022.12.13.520313v2
- 22/02/2024 Reduced RAM consumption in the pre-screening process; the whole procedure is also faster now.
- 23/04/2024 Added support for the transformers `AutoModel` class.
- 11/06/2024 Improved speed and memory efficiency for long `plmblast.py` runs: intermediate results are now stored on disk rather than in RAM.
- 30/07/2024 Added the `--only-scan` flag to `plmblast.py`, so the script can now run only the pre-screening step. Improved control of pre-screening parameters.