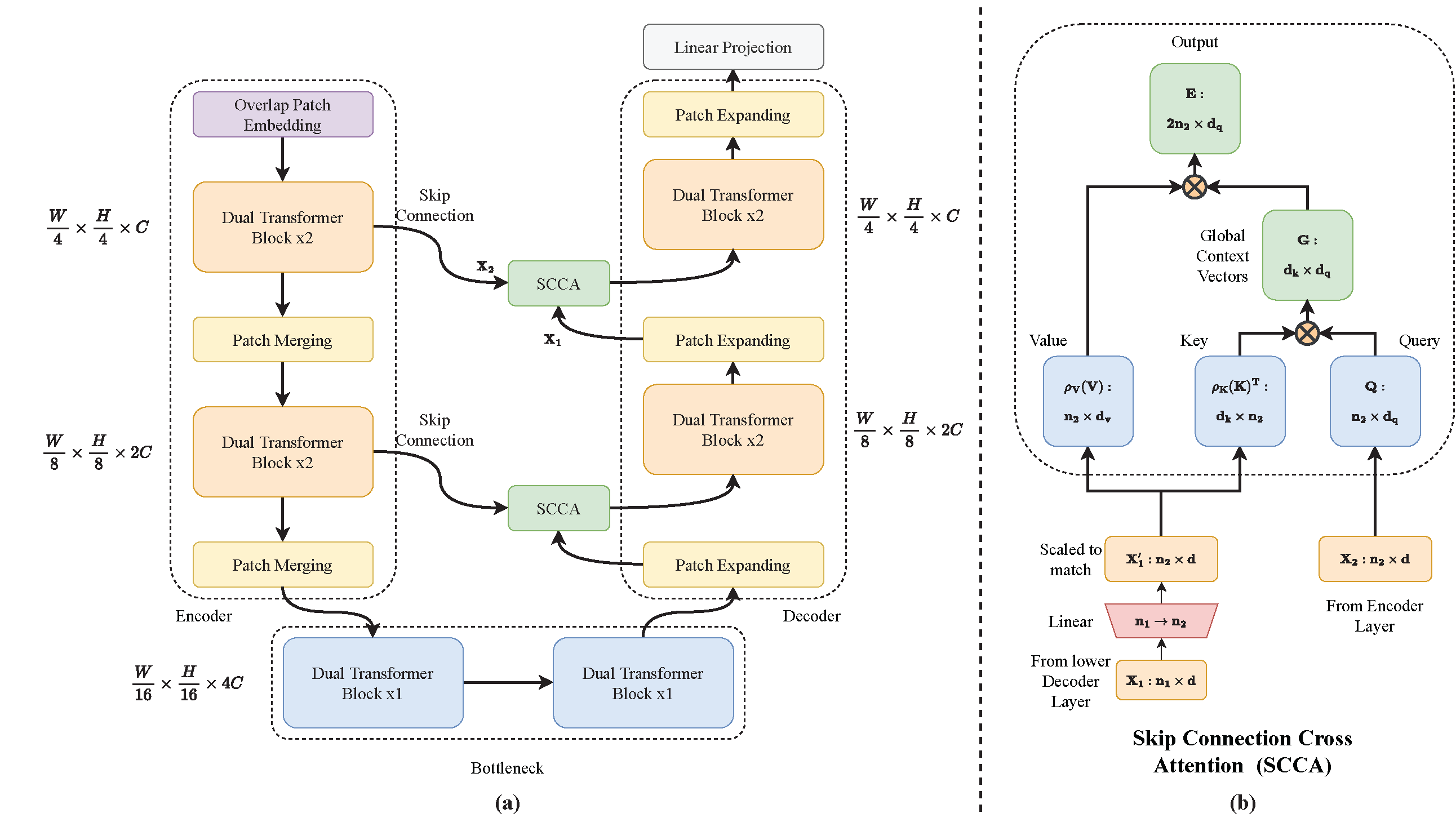

DAEFormer

The official code for "DAE-Former: Dual Attention-guided Efficient Transformer for Medical Image Segmentation".

Updates

- 29 Dec., 2022: Initial release alongside the arXiv preprint.

- 27 Dec., 2022: Submitted to MIDL 2023 [Under Review].

Citation

@article{azad2022daeformer,
  title={DAE-Former: Dual Attention-guided Efficient Transformer for Medical Image Segmentation},
  author={Azad, Reza and Arimond, René and Aghdam, Ehsan Khodapanah and Kazerouni, Amirhosein and Merhof, Dorit},
  journal={arXiv preprint arXiv:2212.13504},
  year={2022}
}

How to use

The script train.py contains all the steps needed to train the network. A file list and a dataloader for the Synapse dataset are also included.
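For orientation, a minimal map-style dataset in the spirit of the included dataloader might look like the sketch below. It is not the repository's code: it assumes (hypothetically) that each training slice is stored as <root_path>/<name>.npz with "image" and "label" arrays, and that a plain-text list file names one slice per line. The class name SynapseSliceDataset is made up for illustration.

```python
import os

import numpy as np


class SynapseSliceDataset:
    """Hypothetical minimal dataset: one .npz file per 2D training slice."""

    def __init__(self, root_path: str, list_file: str):
        self.root_path = root_path
        with open(list_file) as f:
            # One slice name per line (assumed naming, e.g. "case0005_slice012");
            # blank lines are ignored.
            self.names = [line.strip() for line in f if line.strip()]

    def __len__(self) -> int:
        return len(self.names)

    def __getitem__(self, idx: int) -> dict:
        name = self.names[idx]
        path = os.path.join(self.root_path, name + ".npz")
        # np.load on an .npz returns an NpzFile; indexing it reads the array
        # into memory, so the arrays stay valid after the file is closed.
        with np.load(path) as data:
            image, label = data["image"], data["label"]
        return {"image": image, "label": label, "case_name": name}
```

Because it implements __len__ and __getitem__, an object like this can be wrapped in a PyTorch DataLoader as a map-style dataset; augmentation and tensor conversion are left out here.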

To select a network, pass the --module argument to the training script in the form --module <directory>.<module_name>.<class_name>, e.g. --module networks.DAEFormer.DAEFormer.
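One plausible way to resolve such a dotted path is Python's importlib: import everything before the last dot as a module, then fetch the final component as an attribute. The helper name load_class is hypothetical; train.py may resolve the argument differently.

```python
import importlib


def load_class(dotted_path: str):
    """Resolve '<directory>.<module_name>.<class_name>' to the named class."""
    module_path, _, class_name = dotted_path.rpartition(".")
    if not module_path or not class_name:
        raise ValueError(f"expected '<package>.<module>.<ClassName>', got {dotted_path!r}")
    module = importlib.import_module(module_path)  # e.g. networks.DAEFormer
    try:
        return getattr(module, class_name)  # e.g. DAEFormer
    except AttributeError as exc:
        raise ImportError(f"{module_path!r} has no attribute {class_name!r}") from exc


# Inside the repository, this would correspond to:
# model_cls = load_class("networks.DAEFormer.DAEFormer")
```

The same helper works with any importable path, for example load_class("collections.OrderedDict"), which is why a scheme like this makes it easy to plug in your own model class.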

Model weights

The learned weights of DAEFormer can be downloaded from the following table.

| Task | Dataset | Learned weights |
|---|---|---|
| Multi-organ segmentation | Synapse | DAE-Former |

Training and Testing

- Download the Synapse dataset from here.

- Run the following command to install the requirements.

pip install -r requirements.txt

- Run the command below to train DAEFormer on the Synapse dataset.

python train.py --root_path ./data/Synapse/train_npz --test_path ./data/Synapse/test_vol_h5 --batch_size 20 --eval_interval 20 --max_epochs 400 --module networks.DAEFormer.DAEFormer

--root_path [Train data path]

--test_path [Test data path]

--eval_interval [Evaluation interval, in epochs]

--module [Module path of the network, <directory>.<module_name>.<class_name>; you can also train your own models]

- Run the command below to test DAEFormer on the Synapse dataset.

python test.py --volume_path ./data/Synapse/ --output_dir './model_out'

--volume_path [Root directory of the test data]

--output_dir [Directory containing your learned weights]
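The documented training flags can be mirrored with a small argparse parser. The sketch below is hypothetical: it covers only the flags listed above, takes its defaults from the example command, and assumes --eval_interval means "evaluate every N epochs"; the real train.py may define more options and different defaults.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical parser covering only the flags documented in this README.
    parser = argparse.ArgumentParser(description="Train a segmentation network (sketch)")
    parser.add_argument("--root_path", type=str, required=True, help="Train data path")
    parser.add_argument("--test_path", type=str, required=True, help="Test data path")
    parser.add_argument("--batch_size", type=int, default=20)
    parser.add_argument("--eval_interval", type=int, default=20, help="Evaluate every N epochs")
    parser.add_argument("--max_epochs", type=int, default=400)
    parser.add_argument("--module", type=str, default="networks.DAEFormer.DAEFormer")
    return parser


def evaluation_epochs(max_epochs: int, eval_interval: int) -> list[int]:
    """1-based epoch numbers at which an evaluation would run."""
    if eval_interval <= 0:
        raise ValueError("eval_interval must be positive")
    return [e for e in range(1, max_epochs + 1) if e % eval_interval == 0]
```

With the example command (--max_epochs 400 --eval_interval 20), this schedule would evaluate 20 times, at epochs 20, 40, ..., 400.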

Results

Performance comparison on the Synapse multi-organ segmentation dataset.
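Comparisons on Synapse are commonly reported in terms of the per-organ Dice similarity coefficient (DSC), 2|P ∩ G| / (|P| + |G|), and its mean over organs. The NumPy sketch below computes it for integer label maps, assuming label 0 is background. How test.py treats an organ missing from a case may differ; here such classes are simply skipped.

```python
import numpy as np


def dice_per_class(pred: np.ndarray, label: np.ndarray, num_classes: int) -> dict[int, float]:
    """Dice = 2|P ∩ G| / (|P| + |G|) for each foreground class 1..num_classes-1."""
    if pred.shape != label.shape:
        raise ValueError("pred and label must have the same shape")
    scores: dict[int, float] = {}
    for c in range(1, num_classes):  # class 0 is assumed to be background
        p = pred == c
        g = label == c
        denom = int(p.sum()) + int(g.sum())
        if denom == 0:
            continue  # organ absent from both prediction and ground truth: skipped here
        scores[c] = 2.0 * int(np.logical_and(p, g).sum()) / denom
    return scores


def mean_dice(scores: dict[int, float]) -> float:
    """Average over the classes that were scored (NaN if none were)."""
    return sum(scores.values()) / len(scores) if scores else float("nan")
```

For Synapse this would typically be called with num_classes=9 (background plus eight organs), an assumption based on the common TransUNet-style setup.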

Query

All implementation was done by René Arimond. For any queries, please contact us:

rene.arimond@lfb.rwth-aachen.de