This repository contains the Python code for a one-shot next best view method for active object recognition. Active object recognition gathers visual information from additional viewpoints of an object so that it can be classified more reliably when the original recognition is uncertain. In this context, the next best view method chooses, among the candidate viewpoints, the one expected to give the active object recognition system the most useful information, with the goal of improving classification performance.

The next best view method divides the current frontal view into a grid of tiles, where each peripheral tile of the image represents a side view of the current object. The following figure shows a sample situation in which the frontal view is divided into nine tiles; choosing the top-left tile means viewing the object from its top left next.
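A minimal sketch of the tiling step, assuming a 3x3 grid over a grayscale image stored as nested lists of pixel values. The names `split_into_tiles`, `peripheral_tiles`, and `DIRECTIONS` are hypothetical and are not taken from this repository.

```python
from typing import Dict, List, Tuple

Image = List[List[int]]  # grayscale image as a list of pixel rows
Pos = Tuple[int, int]    # (row, column) of a tile in the grid

# Hypothetical mapping from a peripheral tile to the side view it stands for.
DIRECTIONS: Dict[Pos, str] = {
    (0, 0): "top-left", (0, 1): "top", (0, 2): "top-right",
    (1, 0): "left", (1, 2): "right",
    (2, 0): "bottom-left", (2, 1): "bottom", (2, 2): "bottom-right",
}


def split_into_tiles(image: Image, rows: int = 3, cols: int = 3) -> Dict[Pos, Image]:
    """Split an image into rows x cols tiles.

    Integer division spreads leftover pixels so tile sizes differ by at most one.
    """
    height, width = len(image), len(image[0])
    tiles: Dict[Pos, Image] = {}
    for r in range(rows):
        top, bottom = r * height // rows, (r + 1) * height // rows
        for c in range(cols):
            left, right = c * width // cols, (c + 1) * width // cols
            tiles[(r, c)] = [row[left:right] for row in image[top:bottom]]
    return tiles


def peripheral_tiles(tiles: Dict[Pos, Image], rows: int = 3, cols: int = 3) -> Dict[Pos, Image]:
    """Keep only tiles on the border of the grid, i.e. the candidate side views."""
    return {pos: tile for pos, tile in tiles.items()
            if pos[0] in (0, rows - 1) or pos[1] in (0, cols - 1)}


# Usage: a 6x6 image whose pixel value encodes its position.
img = [[r * 6 + c for c in range(6)] for r in range(6)]
tiles = split_into_tiles(img)
print(tiles[(0, 0)])  # [[0, 1], [6, 7]]
```

With a 3x3 grid the centre tile is the current frontal view, so the eight remaining tiles are the candidate next viewpoints.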

It then applies several criteria to determine the next viewpoint in a single shot, using only the frontal view of the object. The criteria are histogram variance, foreshortening, and the classification dissimilarity between a tile and the frontal view. Because foreshortening must be computed, the method requires a depth camera that provides both color images and depth maps. Each criterion then casts votes according to its preference among the tiles: the lowest-ranked tile receives no votes, and every other tile receives one more vote than the tile ranked just below it.
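The voting scheme described above is a Borda-style count. The sketch below assumes that each criterion yields one numeric score per tile and that the caller states whether a higher score is preferred for that criterion. The scores, the preference directions, and the function names are illustrative assumptions, not the repository's actual values or API.

```python
from collections import defaultdict
from typing import Dict, List


def rank_votes(scores: Dict[str, float], higher_is_better: bool = True) -> Dict[str, int]:
    """Convert one criterion's per-tile scores into votes.

    The least preferred tile gets 0 votes and each tile gets one more vote
    than the tile ranked just below it. Ties keep dictionary order.
    """
    # Sort from least preferred to most preferred; the position is the vote count.
    ordered = sorted(scores, key=scores.__getitem__, reverse=not higher_is_better)
    return {tile: votes for votes, tile in enumerate(ordered)}


def next_best_view(criteria: List[Dict[str, float]], higher_is_better: List[bool]) -> str:
    """Sum the votes cast by every criterion and return the tile with the most votes."""
    totals: Dict[str, int] = defaultdict(int)
    for scores, better in zip(criteria, higher_is_better):
        for tile, votes in rank_votes(scores, better).items():
            totals[tile] += votes
    return max(totals, key=totals.__getitem__)


# Hypothetical per-tile scores for three candidate side views.
variance = {"top-left": 0.2, "top": 0.5, "right": 0.9}
foreshortening = {"top-left": 0.7, "top": 0.1, "right": 0.4}
dissimilarity = {"top-left": 0.3, "top": 0.6, "right": 0.8}

print(next_best_view([variance, foreshortening, dissimilarity], [True, True, True]))  # right
```

Here "right" collects 2 + 1 + 2 = 5 votes, against 2 for each of the other tiles. Summing ranks rather than raw scores means the criteria do not need to share a scale.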

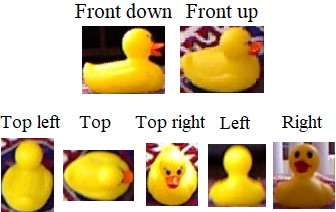

Our team gathered a small dataset to serve specifically as a benchmark for active object recognition systems and their next best view mechanisms. At the time of release, it was the only available dataset offering this functionality, covering different objects under various lighting conditions and backgrounds. It was used to evaluate the next best view method and can be found in the Next-Best-View-Dataset repository. The following figure shows a test situation with two different frontal views to choose from and five side views as candidate next viewpoints.

The next best view method can work with any classifier. In our implementation, the following classifiers are available:

- Four convolutional neural networks, including ResNet-101

- A support vector machine using Hu moment invariants, color histograms, and/or histograms of oriented gradients (HOG) as features, with principal component analysis (PCA) for feature reduction

- A random forest using a bag-of-words descriptor built on SIFT or KAZE keypoints and descriptors
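A sketch of the bag-of-words encoding, assuming that a codebook of visual words (for example, k-means centroids learned from training descriptors) is already available. Each local descriptor, which in practice would come from a SIFT or KAZE extractor, is assigned to its nearest visual word, and the counts form a fixed-length vector that a random forest can consume. The function names and the L1 normalization are assumptions, not the repository's code.

```python
import math
from typing import List, Sequence


def nearest_word(descriptor: Sequence[float], codebook: Sequence[Sequence[float]]) -> int:
    """Index of the visual word (codebook centroid) closest in Euclidean distance."""
    return min(range(len(codebook)), key=lambda i: math.dist(descriptor, codebook[i]))


def bow_histogram(descriptors: Sequence[Sequence[float]],
                  codebook: Sequence[Sequence[float]]) -> List[float]:
    """Count visual-word assignments and L1-normalize the counts."""
    counts = [0] * len(codebook)
    for descriptor in descriptors:
        counts[nearest_word(descriptor, codebook)] += 1
    total = sum(counts)
    if total == 0:
        return [0.0] * len(codebook)  # no keypoints were detected in the image
    return [c / total for c in counts]


# Toy 2-D "descriptors" and a two-word codebook; real SIFT descriptors have 128 dimensions.
codebook = [[0.0, 0.0], [10.0, 10.0]]
descriptors = [[1.0, 1.0], [0.0, 2.0], [9.0, 9.0]]
print(bow_histogram(descriptors, codebook))  # [0.6666666666666666, 0.3333333333333333]
```

Normalizing the histogram keeps images with many keypoints comparable to images with few.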

In addition, three decision fusion techniques are implemented to combine the classification results of the original view and the next one: naive Bayes, Dempster-Shafer, and averaging.
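The three fusion rules can be sketched on two class-probability vectors, one per view. This is an illustrative sketch, not the repository's implementation. The naive Bayes rule assumes the views are conditionally independent given the class. The Dempster-Shafer version assumes singleton masses proportional to the classifier probabilities, plus a hypothetical `discount` mass on the whole frame ("could be any class") that is spread evenly over the classes at the end (the pignistic transform).

```python
from typing import List, Optional, Sequence


def normalize(values: Sequence[float]) -> List[float]:
    """Rescale non-negative scores so they sum to 1."""
    total = sum(values)
    return [v / total for v in values]


def fuse_average(p1: Sequence[float], p2: Sequence[float]) -> List[float]:
    """Averaging: the mean of the two class-probability vectors."""
    return [(a + b) / 2 for a, b in zip(p1, p2)]


def fuse_naive_bayes(p1: Sequence[float], p2: Sequence[float],
                     prior: Optional[Sequence[float]] = None) -> List[float]:
    """Naive Bayes: P(c | v1, v2) is proportional to P(c | v1) * P(c | v2) / P(c)."""
    if prior is None:
        prior = [1.0 / len(p1)] * len(p1)  # assume a uniform class prior
    return normalize([a * b / q for a, b, q in zip(p1, p2, prior)])


def fuse_dempster_shafer(p1: Sequence[float], p2: Sequence[float],
                         discount: float = 0.0) -> List[float]:
    """Dempster's rule of combination over singleton classes plus the whole frame."""
    # Each view keeps (1 - discount) of its mass on single classes
    # and puts `discount` on the whole frame.
    m1 = [(1 - discount) * a for a in p1]
    m2 = [(1 - discount) * b for b in p2]
    # A class keeps mass when both views support it, or when one view supports
    # it and the other is uncommitted.
    joint = [a * b + a * discount + discount * b for a, b in zip(m1, m2)]
    frame = discount * discount
    # Conflict: total mass the two views assign to pairs of different classes.
    conflict = sum(m1) * sum(m2) - sum(a * b for a, b in zip(m1, m2))
    if conflict >= 1.0 - 1e-12:
        raise ValueError("the views are in total conflict; Dempster's rule is undefined")
    # Normalize by 1 - conflict and spread the frame mass evenly over the classes.
    return [(j + frame / len(p1)) / (1.0 - conflict) for j in joint]


view1, view2 = [0.6, 0.4], [0.2, 0.8]
print([round(x, 3) for x in fuse_average(view1, view2)])                        # [0.4, 0.6]
print([round(x, 3) for x in fuse_naive_bayes(view1, view2)])                    # [0.273, 0.727]
print([round(x, 3) for x in fuse_dempster_shafer(view1, view2)])                # [0.273, 0.727]
print([round(x, 3) for x in fuse_dempster_shafer(view1, view2, discount=0.2)])  # [0.35, 0.65]
```

With `discount=0` and a uniform prior, Dempster's rule and naive Bayes reduce to the same normalized product. The two therefore differ only through the class prior and the uncertainty mass. A nonzero discount yields a less extreme fused distribution.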

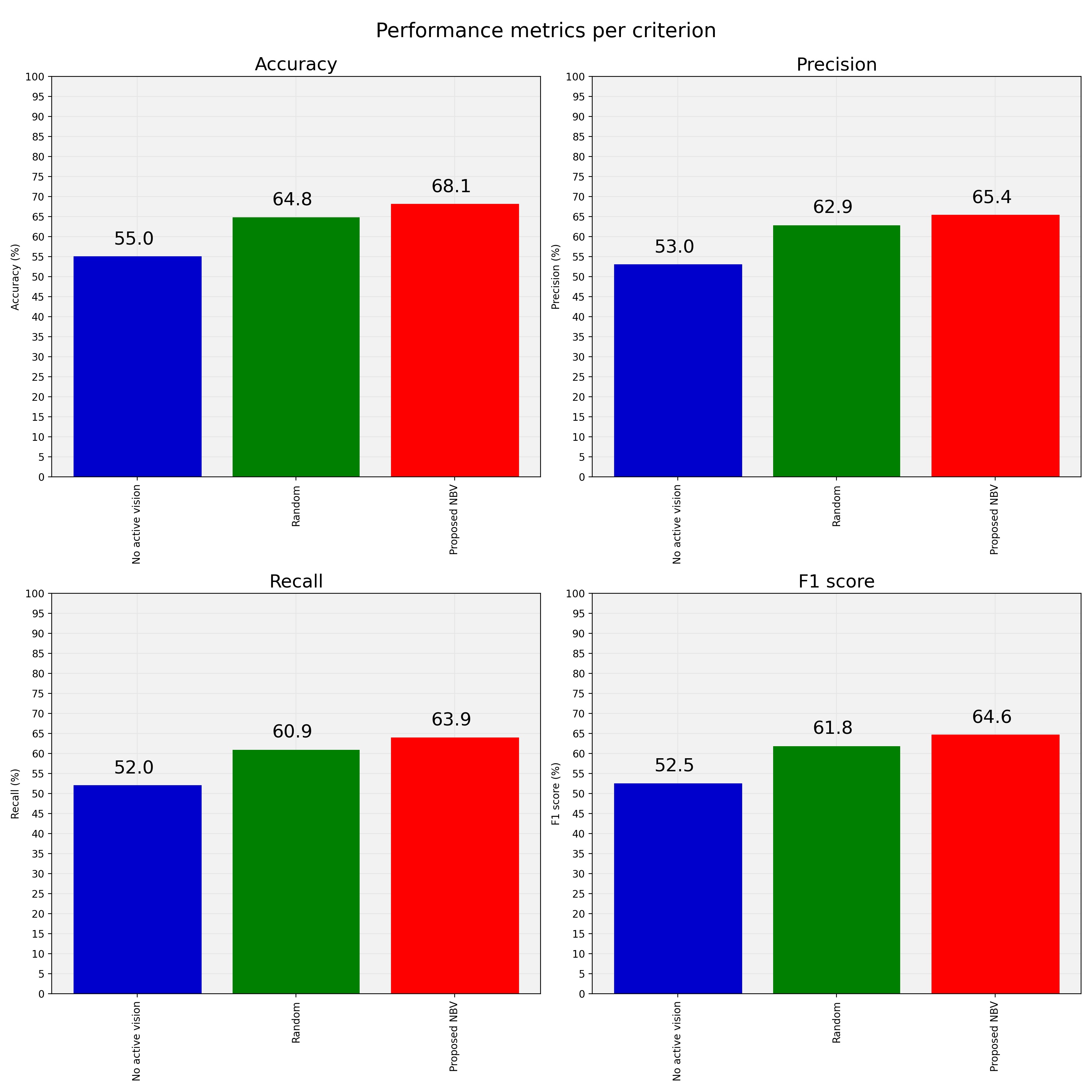

The results show that while an active object recognition mechanism that moves randomly generally improves classification performance, a next best view system improves it further. On average, accuracy and F1 score improve by 13.1% (0.131) and 12.1% (0.121), respectively, with the next best view method, whereas randomly-selecting active vision yields improvements of 9.8% and 9.3%. In the figure below, blue, green, and red bars represent the performance of single-view recognition, randomly-selecting active vision, and active next best view recognition, respectively.
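For reference, accuracy and F1 can be computed from predicted labels as shown below. This sketch assumes macro-averaged F1. It treats an improvement as the difference between the fused score and the single-view score, which is how the decimal values in parentheses read. The labels are made up for illustration and are not taken from the reported experiments.

```python
from typing import Hashable, Sequence


def accuracy(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Unweighted mean of the per-class F1 scores."""
    scores = []
    for c in set(y_true) | set(y_pred):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Made-up labels: single-view predictions vs. predictions after fusing a second view.
y_true = ["cup", "cup", "box", "box"]
single = ["cup", "box", "box", "cup"]
fused = ["cup", "cup", "box", "cup"]
print(accuracy(y_true, fused) - accuracy(y_true, single))            # 0.25
print(round(macro_f1(y_true, fused) - macro_f1(y_true, single), 3))  # 0.233
```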

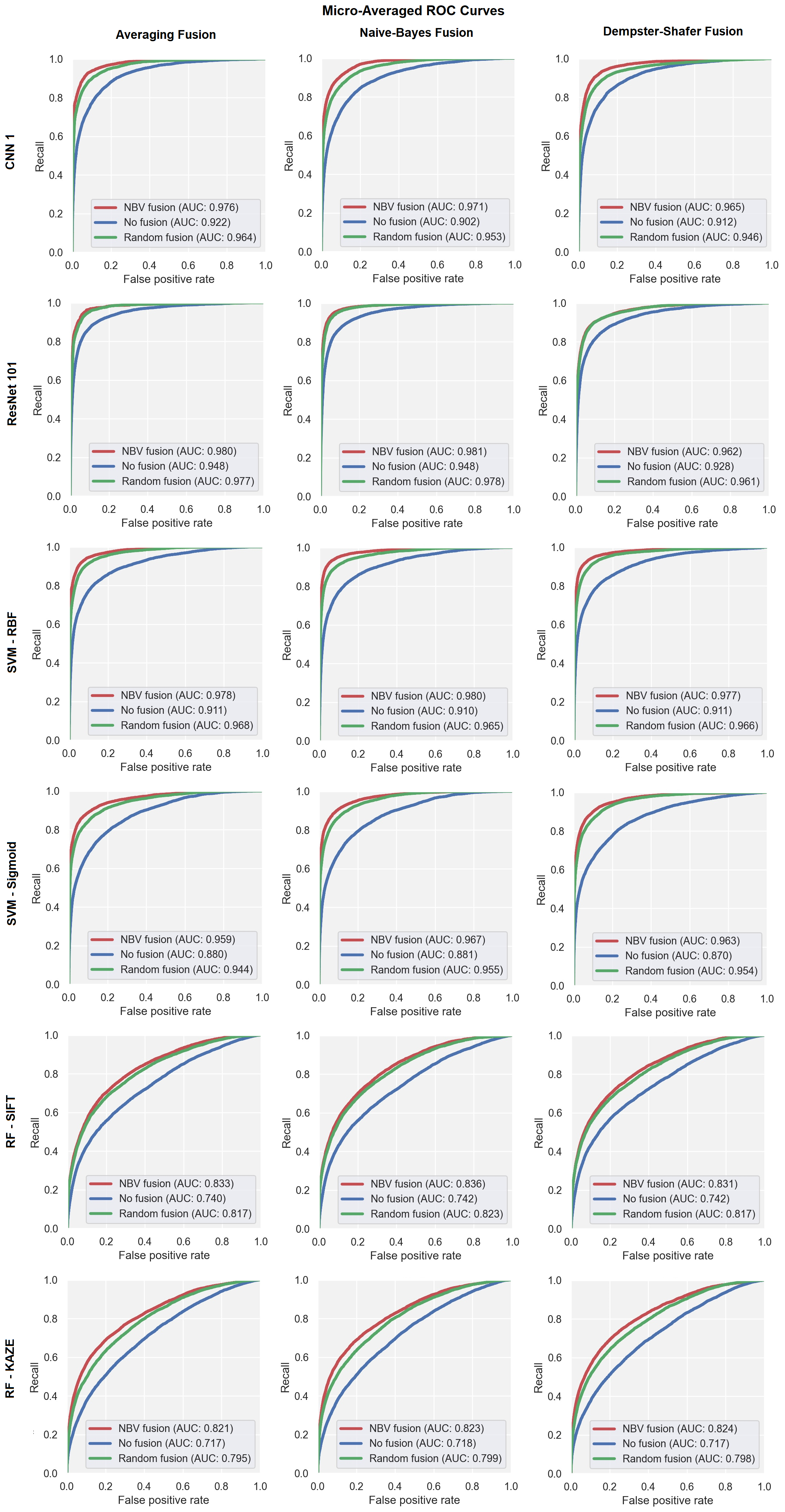

In addition, ROC curves for different classifier types and decision fusion techniques illustrate the efficacy of the next best view method compared to single-view classification and randomly-selecting active vision.

Training images (used to train the classifiers) should be placed under the vision_training directory, with each class's images in a separate folder named after the class. The test images and depth maps should be placed under the test_set directory. The test dataset can be found in the Next-Best-View-Dataset repository.
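As a sketch of the expected training layout, the hypothetical helper below walks `vision_training` and pairs each image with the name of the folder that contains it, which serves as the class label. The set of accepted image extensions is an assumption; the repository's own loader may accept different formats.

```python
from pathlib import Path
from typing import List, Tuple, Union

# Assumed image extensions; adjust to match the actual training files.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp"}


def list_training_images(root: Union[str, Path] = "vision_training") -> List[Tuple[Path, str]]:
    """Return (image_path, class_name) pairs, using each subfolder name as the label."""
    samples: List[Tuple[Path, str]] = []
    for class_dir in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        for image_path in sorted(class_dir.iterdir()):
            # Skip non-image files such as notes or metadata.
            if image_path.is_file() and image_path.suffix.lower() in IMAGE_SUFFIXES:
                samples.append((image_path, class_dir.name))
    return samples
```

For example, `vision_training/mug/a.png` would be returned as `(Path("vision_training/mug/a.png"), "mug")`.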

To run the code, execute main.py. The settings for the classifiers, decision fusion, test data augmentation, and evaluation are configured in config.cfg under the config directory.

I can be reached at hoseini@nevada.unr.edu.