Official page of "Run Your Visual-Inertial Odometry on NVIDIA Jetson: Benchmark Tests on a Micro Aerial Vehicle", accepted to RA-L with the ICRA'21 option.

This dataset, acquired on a UAV, is intended for testing the robustness of various VO/VIO methods.

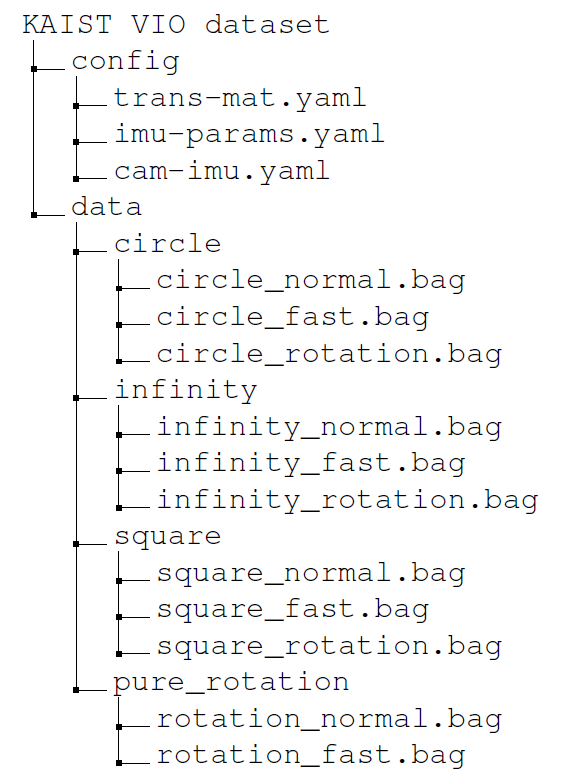

You can download the whole dataset from the KAIST VIO dataset page.

- Four different trajectories:
  - circle
  - infinity
  - square
  - pure_rotation
- Each trajectory has three types of sequence:
  - normal speed
  - fast speed
  - rotation
- The pure_rotation trajectory has only the normal-speed and fast-speed types.

You can download a single ROS bag file from the links below (or the whole dataset from the KAIST VIO dataset page).

| Trajectory | Type | ROS bag download |
|---|---|---|
| circle | normal | link |
| circle | fast | link |
| circle | rotation | link |
| infinity | normal | link |
| infinity | fast | link |
| infinity | rotation | link |
| square | normal | link |
| square | fast | link |
| square | rotation | link |
| pure_rotation | normal | link |
| pure_rotation | fast | link |

- Each set of data is recorded as a ROS bag file.
- Each data sequence contains the following:
  - stereo infrared images (with the IR emitter turned off)
  - mono RGB images
  - IMU data (3-axis accelerometer, 3-axis gyroscope)
  - 6-DOF ground truth
- ROS topics:
  - Camera (30 Hz): "/camera/infra1/image_rect_raw/compressed", "/camera/infra2/image_rect_raw/compressed", "/camera/color/image_raw/compressed"
  - IMU (100 Hz): "/mavros/imu/data"
  - Ground truth (50 Hz): "/pose_transformed"

- In the config directory:
  - trans-mat.yaml: translational matrix between the origin of the ground truth and the VI sensor unit. The offset has already been applied to the bag data; this YAML file contains the estimated offset values for reference only. To benchmark your VO/VIO method more accurately, you can apply your own alignment method or use other tools, such as origin alignment or Umeyama alignment in evo.
  - imu-params.yaml: estimated noise parameters of the Pixhawk 4 mini
  - cam-imu.yaml: camera intrinsics and camera-IMU extrinsics in Kalibr format

- Publishing the ground truth as a trajectory ROS topic:
  - The ground truth is recorded as 'geometry_msgs/PoseStamped'.
  - For visualization (e.g., in RViz), you may want a 'nav_msgs/Path' rather than individual poses.
  - For the 'geometry_msgs/PoseStamped' -> 'nav_msgs/Path' conversion, you can refer to this package: tf_to_trajectory
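Converting poses into a trajectory amounts to appending each incoming 'geometry_msgs/PoseStamped' to the `poses` list of a 'nav_msgs/Path' and republishing the path. The following is a minimal, dependency-free sketch of that bookkeeping, assuming plain dataclasses as stand-ins for the ROS message types; the helper `append_pose` and its `max_len` cap are hypothetical and are not taken from tf_to_trajectory. In an actual rospy node, the same logic would run inside a callback registered with `rospy.Subscriber("/pose_transformed", PoseStamped, callback)`, and the path would be sent with a `rospy.Publisher` of type `Path` on a topic name of your choosing.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical stand-ins for the ROS message types. Field names follow
# std_msgs/Header, geometry_msgs/PoseStamped and nav_msgs/Path, but stamps
# are plain float seconds instead of rospy.Time.
@dataclass
class Header:
    stamp: float = 0.0
    frame_id: str = ""

@dataclass
class Pose:
    position: Tuple[float, float, float]
    orientation: Tuple[float, float, float, float] = (0.0, 0.0, 0.0, 1.0)  # (x, y, z, w)

@dataclass
class PoseStamped:
    header: Header
    pose: Pose

@dataclass
class Path:
    header: Header = field(default_factory=Header)
    poses: List[PoseStamped] = field(default_factory=list)

def append_pose(path: Path, msg: PoseStamped, max_len: Optional[int] = None) -> Path:
    """Append one ground-truth pose to the accumulated trajectory."""
    path.poses.append(msg)
    # Optionally keep only the newest max_len poses (hypothetical cap to bound memory and RViz load).
    if max_len is not None and len(path.poses) > max_len:
        del path.poses[: len(path.poses) - max_len]
    # The Path header follows the latest pose so the whole path is drawn in that pose's frame.
    path.header = Header(stamp=msg.header.stamp, frame_id=msg.header.frame_id)
    return path
```

Capping the path length is optional; for short sequences like these, keeping every pose is usually fine.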

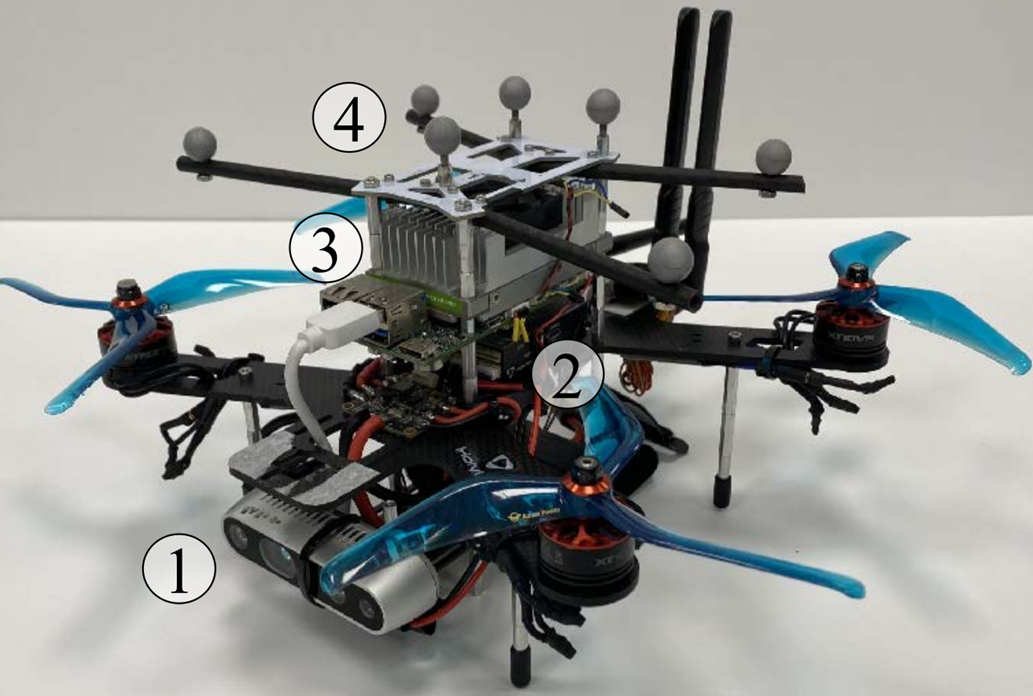

Fig. 1: Lab environment. Fig. 2: UAV platform.

- VI sensor unit
  - Camera: Intel RealSense D435i (640x480 for the infra 1, infra 2, and RGB images)
  - IMU: Pixhawk 4 mini
  - The VI sensor unit was calibrated using Kalibr.
- Ground truth
  - An OptiTrack PrimeX 13 motion capture system with six cameras was used, providing 6-DOF motion information.
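To benchmark an estimator against this ground truth, each estimated pose first has to be paired with a ground-truth pose (published at 50 Hz on "/pose_transformed"), and both trajectories have to be expressed in a common frame. The sketch below assumes both trajectories are given as time-sorted lists of `(t, x, y, z)` tuples. It pairs poses by nearest timestamp within a tolerance and reports the RMSE of the position error after a translation-only origin alignment (each trajectory is shifted so that its first matched position is the origin). This is a simplified stand-in for the origin and Umeyama alignment in evo, which also solve for rotation (and optionally scale); the function names and the 0.01 s tolerance are hypothetical choices.

```python
import bisect
import math
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float, float]  # (timestamp [s], x, y, z)

def associate(est: Sequence[Sample], gt: Sequence[Sample],
              max_dt: float = 0.01) -> List[Tuple[Sample, Sample]]:
    """Pair each estimate with the ground-truth sample closest in time, if within max_dt seconds."""
    gt_times = [s[0] for s in gt]  # assumed sorted ascending
    pairs = []
    for e in est:
        i = bisect.bisect_left(gt_times, e[0])
        # Candidates: the ground-truth samples on either side of the insertion point.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(gt)]
        if not candidates:
            continue
        j = min(candidates, key=lambda k: abs(gt_times[k] - e[0]))
        if abs(gt_times[j] - e[0]) <= max_dt:
            pairs.append((e, gt[j]))
    return pairs

def origin_aligned_rmse(pairs: Sequence[Tuple[Sample, Sample]]) -> float:
    """RMSE of position error after shifting both trajectories so their first matched samples coincide."""
    if not pairs:
        raise ValueError("no associated pose pairs")
    e0, g0 = pairs[0]
    sq = 0.0
    for e, g in pairs:
        # Express each position relative to its own first matched sample (translation-only alignment).
        d = [(e[k] - e0[k]) - (g[k] - g0[k]) for k in (1, 2, 3)]
        sq += sum(c * c for c in d)
    return math.sqrt(sq / len(pairs))
```

Because this alignment ignores rotation, a small heading error at the start grows into a large position error later; for reported results, a full SE(3) or Umeyama alignment as provided by evo is the safer choice.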

| VO/VIO | Setup link |
|---|---|
| VINS-Mono | link |
| ROVIO | link |
| VINS-Fusion | link |
| Stereo-MSCKF | link |
| Kimera | link |

If you use this dataset in an academic context, please cite the following publication:

    @article{jeon2021run,
      title={Run your visual-inertial odometry on NVIDIA Jetson: Benchmark tests on a micro aerial vehicle},
      author={Jeon, Jinwoo and Jung, Sungwook and Lee, Eungchang and Choi, Duckyu and Myung, Hyun},
      journal={IEEE Robotics and Automation Letters},
      volume={6},
      number={3},
      pages={5332--5339},
      year={2021},
      publisher={IEEE}
    }

This dataset is released under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 license (CC BY-NC-SA 3.0), which permits free non-commercial use (including research).