This repository contains code for the paper Learned Feature Embeddings for Non-Line-of-Sight Imaging and Recognition by Wenzheng Chen, Fangyin Wei, Kyros Kutulakos, Szymon Rusinkiewicz, and Felix Heide (project webpage).

- Description: A bike captured at approximately 1 m distance from the wall.

- Resolution: 512 x 512

- Scanned Area: 2 m x 2 m planar wall

- Integration Time: 180 min.

- Description: A specular disco ball captured at approximately 1 m distance from the wall.

- Resolution: 512 x 512

- Scanned Area: 2 m x 2 m planar wall

- Integration Time: 180 min.

- Description: A glossy dragon captured at approximately 1 m distance from the wall.

- Resolution: 512 x 512

- Scanned Area: 2 m x 2 m planar wall

- Integration Time: 180 min.

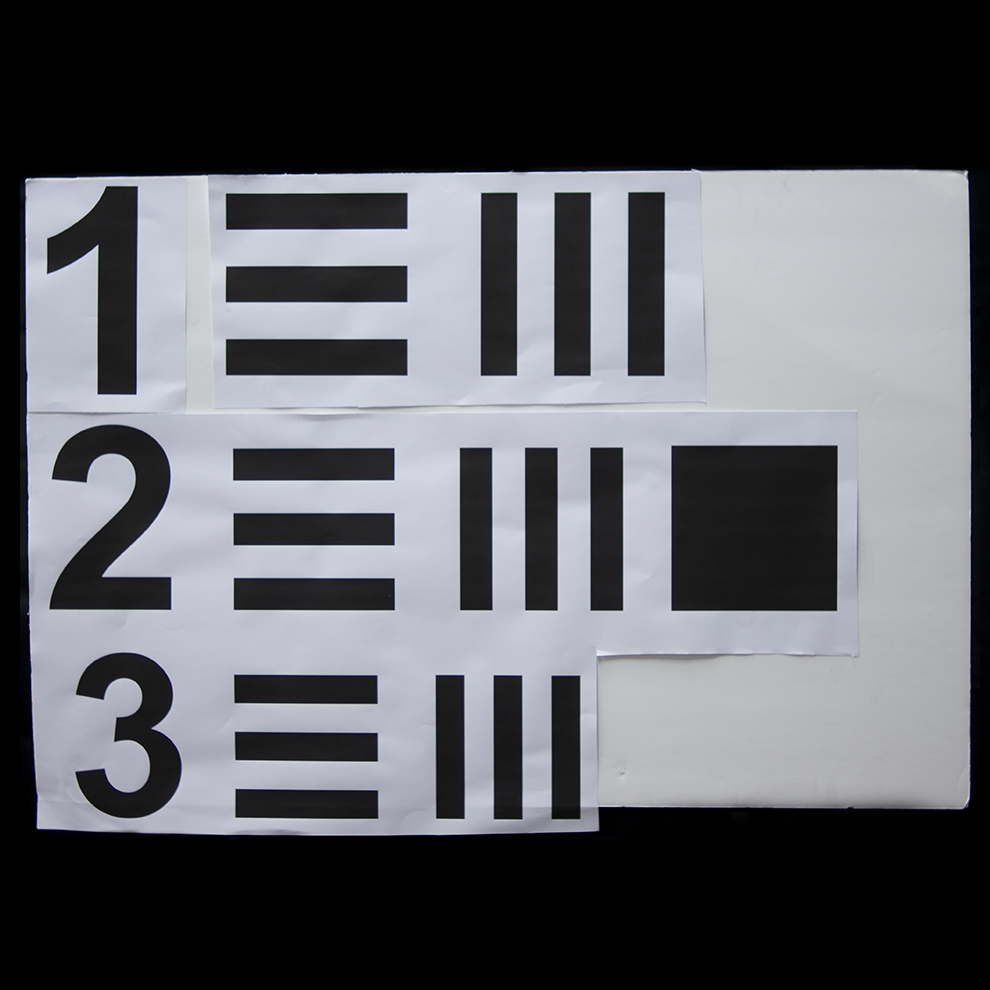

- Description: A resolution chart captured at approximately 1 m distance from the wall.

- Resolution: 512 x 512

- Scanned Area: 2 m x 2 m planar wall

- Integration Time: 180 min.

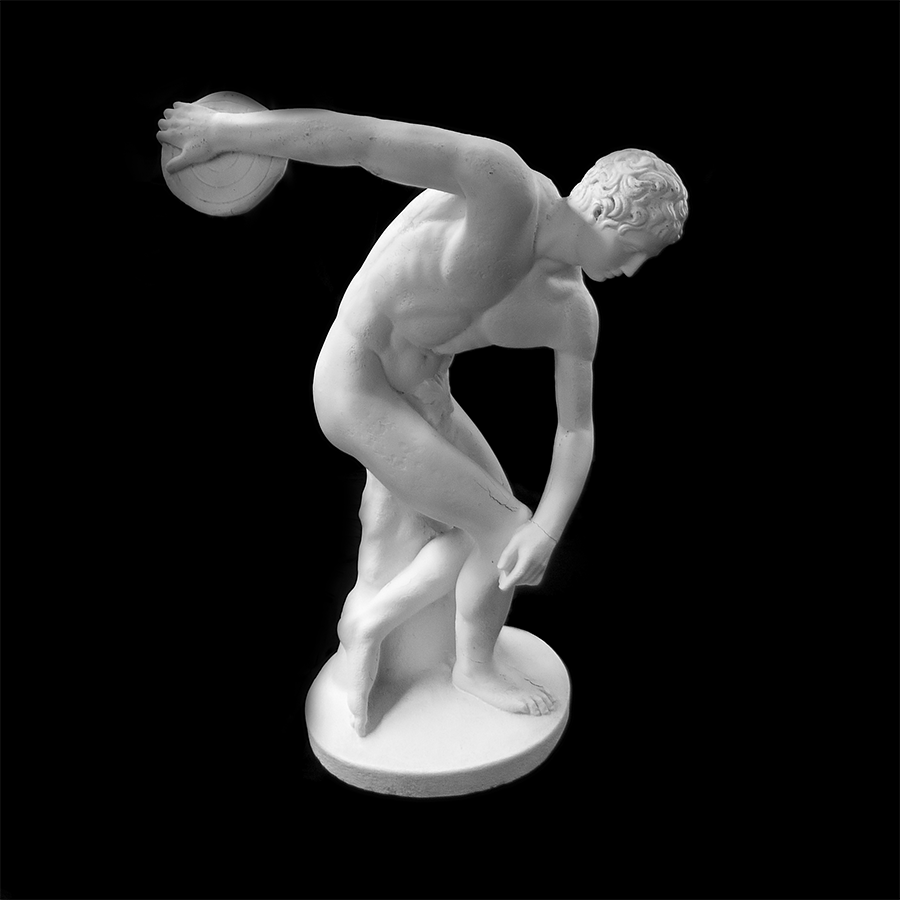

- Description: A white stone statue captured at approximately 1 m distance from the wall.

- Resolution: 512 x 512

- Scanned Area: 2 m x 2 m planar wall

- Integration Time: 180 min.

- Description: The teaser scene used in the paper, which contains several objects, including a bookshelf, statue, dragon, and disco ball.

- Resolution: 512 x 512

- Scanned Area: 2 m x 2 m planar wall

- Integration Time: 180 min.

The real experimental scenes above were captured for this work.

Qualitative Evaluation for NLOS 2D Imaging. Compared with F-K, LCT, and filtered back-projection (BP), we observe that the proposed method is able to reconstruct 2D images with clearer boundaries while achieving more accurate color reconstruction.

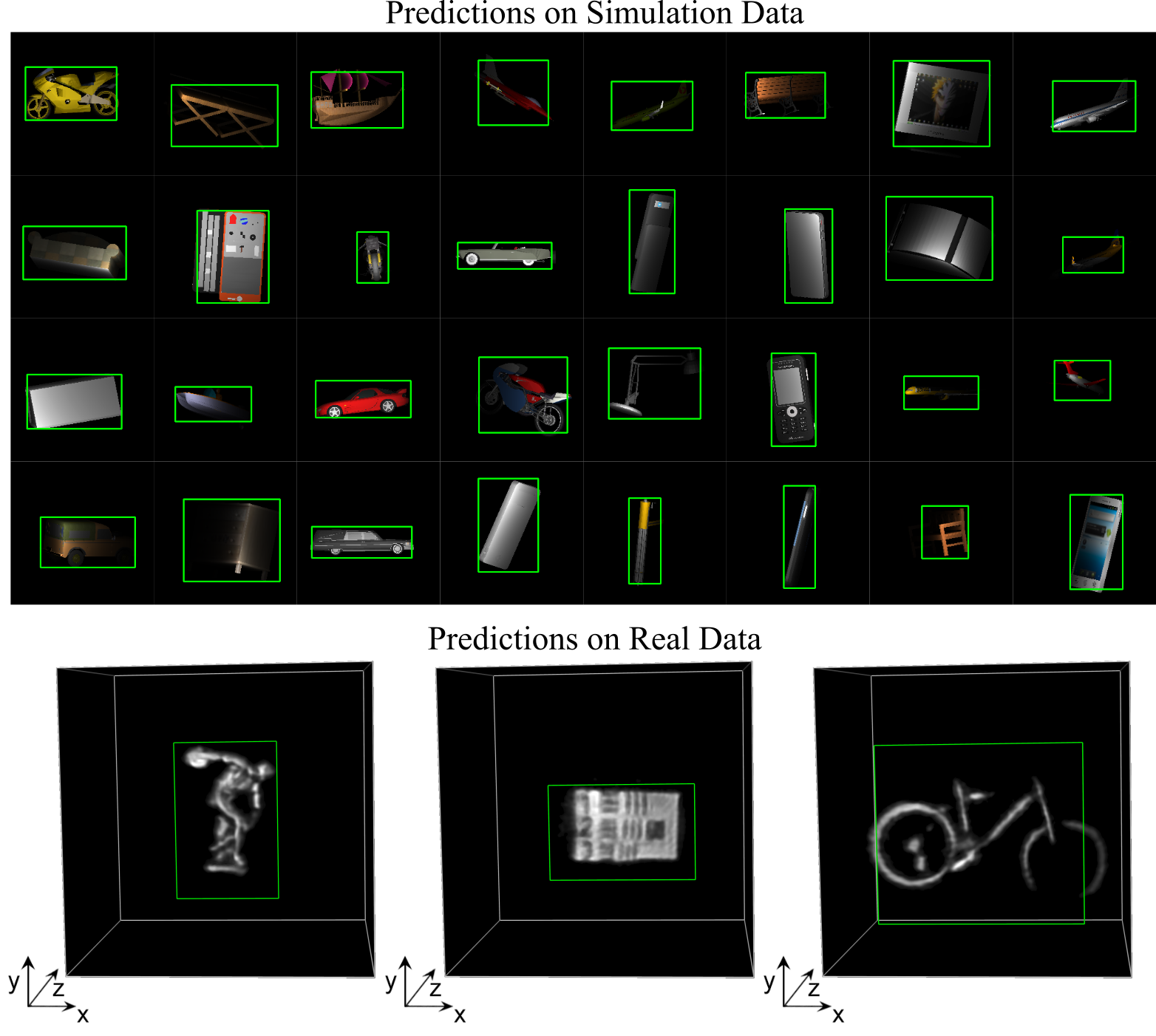

Qualitative end-to-end detection results on synthetic (top) and real (bottom) data. The proposed method correctly predicts the bounding boxes for different classes with various colors, shapes, and poses. The model is trained only on synthetic data, so its evaluation on real data validates its generalization capability.

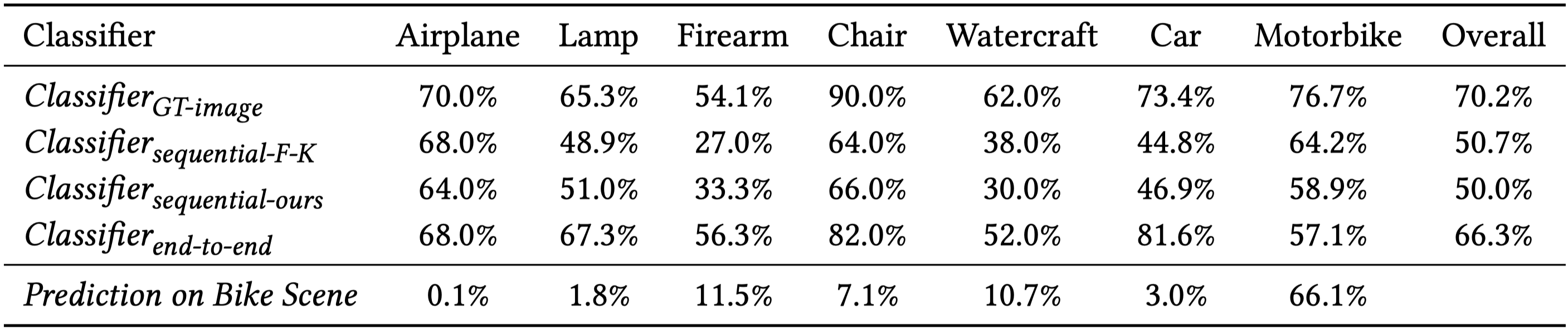

We compare the classification accuracy of the proposed method, which learns to classify hidden scenes with a monolithic end-to-end network, against sequential NLOS image classification baselines. The last row in the table reports the confidence scores for the experimental bike measurement. We note that the proposed model recognizes it as a motorbike with more than 66% probability.

The code is organized as in the following directory tree:

- ./cuda-render
  - conversion/
  - render/
- ./DL_inference
  - inference/
  - network7_256/
  - re/
  - utils/
  - utils_pytorch/
- ./data
  - bunny-model/
  - img/
- LICENSE
- README.md

The code base contains two parts: the first renders data, and the second trains and tests the neural network models.

Please check the cuda-render folder. We recommend opening it in Nsight (tested); other IDEs should also work. To compile the code, please install CUDA (tested with CUDA 9.0), libglm, glew, glfw, and OpenCV (tested with OpenCV 3.4).

sudo apt-get install libglm-dev

sudo apt-get install libglew-dev

sudo apt-get install libglfw3-dev

sudo apt-get install libopencv-dev

To render the 3D model, first create a CUDA project in Nsight, copy everything in the cuda-render/render folder into the created project, and compile. To run the code successfully, modify the folder path and data saving path in main.cpp. We provide a bunny model for testing.

- Change 3D model location and scale. We change the model size in two places. When we load a 3D model, we normalize it by moving it to the origin and loading it with a specific scale. The code can be modified here. Next, when we render the model, we may change the model location and rotation here.

- 3D model normal. For the bunny model, we use point normals. We empirically find that face normals work better for the ShapeNet dataset. You can change it here.

- Confocal/Non-confocal renderings. Our rendering algorithm supports both confocal and non-confocal settings. One can change it here, where conf=0 means non-confocal and conf=1 means confocal.

- Specular rendering. Our rendering algorithm supports both diffuse and specular materials. To render a specular object (metal material), change the switch here.

- Video conversion. To convert a rendered hdr file to a video, we provide a script in cuda-render/conversion. Please change the render folder here, then run the Python script. It generates a video that is much smaller and easier to load when training the deep learning model.

- SPAD simulation. The rendered hdr file does not include any noise simulation. One can add simple Gaussian noise in the dataloader, but we recommend employing a computational method for SPAD simulation to synthesize noise. We adopt the method from here.

- Rendered dataset. We provide a motorbike dataset with 3000 motorbike examples here.
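The simple Gaussian noise option mentioned in the SPAD simulation item could look roughly like the sketch below. It is only an illustration, not the noise model used in this repository: the function name `add_gaussian_noise`, the `sigma` parameter (noise level relative to the peak intensity), and the (time, height, width) layout are all assumptions.

```python
import numpy as np


def add_gaussian_noise(transient, sigma=0.05, rng=None):
    """Add zero-mean Gaussian noise to a rendered transient and clip at zero.

    `transient` is assumed to be a float array, e.g. (time, height, width).
    `sigma` is a hypothetical noise level relative to the peak intensity.
    """
    rng = np.random.default_rng() if rng is None else rng
    peak = float(transient.max())
    noise = rng.normal(loc=0.0, scale=sigma * peak, size=transient.shape)
    # Measured intensities cannot be negative, so clip after adding noise.
    return np.clip(transient + noise, 0.0, None)


if __name__ == "__main__":
    clean = np.zeros((8, 4, 4), dtype=np.float64)
    clean[3, 2, 2] = 1.0  # toy transient with a single peak
    noisy = add_gaussian_noise(clean, sigma=0.05, rng=np.random.default_rng(0))
    print(noisy.shape, bool(noisy.min() >= 0.0))
```

Passing a seeded generator keeps the augmentation reproducible. A dedicated SPAD simulation, as recommended above, would replace this function.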

Non-confocal Rendering

[Figure: three rows of rendered frames, each at t=1.2m, t=1.4m, t=1.6m, and t=1.8m]

To run the inference model, please first download the data and pre-trained model here. Next, go to the DL_inference/inference folder and run:

python eval2.py --datafolder YOUR_DATA_FOLDER --mode fk --netfolder network7_256 --netsvfolder model10_bike --datanum 800 --dim 3 --frame 128 --grid 128 --tres 2 --h 256 --w 256
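When launching the evaluation from another script, it can be convenient to assemble the same command programmatically. The sketch below only repeats the flag values from the command above; the helper name `build_eval_command` is hypothetical and not part of the repository.

```python
import shlex
import sys


def build_eval_command(datafolder):
    """Assemble the eval2.py invocation using the flag values shown above."""
    return [
        sys.executable, "eval2.py",
        "--datafolder", datafolder,
        "--mode", "fk",
        "--netfolder", "network7_256",
        "--netsvfolder", "model10_bike",
        "--datanum", "800",
        "--dim", "3",
        "--frame", "128",
        "--grid", "128",
        "--tres", "2",
        "--h", "256",
        "--w", "256",
    ]


if __name__ == "__main__":
    cmd = build_eval_command("YOUR_DATA_FOLDER")
    # Print the arguments for inspection. To execute, pass `cmd` to
    # subprocess.run(cmd, cwd="DL_inference/inference", check=True).
    print(shlex.join(cmd[1:]))
```

Passing the arguments as a list avoids shell quoting problems when the data folder path contains spaces.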

We provide our reimplementations of different NLOS methods in Python and PyTorch. The Python implementations are in DL_inference/utils, and the PyTorch implementations are in DL_inference/utils_pytorch. The file names start with tf; you may directly check tflct.py, tffk.py, and tfphasor.py for the NLOS methods LCT (back-projection included), F-K, and Phasor, respectively.
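For readers new to these methods, the idea behind back-projection can be sketched in a few lines of NumPy. This is a naive, unfiltered confocal back-projection written for illustration only; it is not the code in tflct.py. The function name, argument names, and the assumption that the time axis is binned by round-trip path length in metres are all hypothetical, and radiometric falloff is ignored.

```python
import numpy as np


def backproject_confocal(transient, wall_size, bin_res, depths):
    """Naive confocal back-projection (illustrative, unfiltered).

    transient: array (T, N, N), one histogram per wall scan point, binned by
               round-trip path length with bin width `bin_res` (metres).
    wall_size: side length of the square scanned wall area (metres).
    depths:    1D array of voxel depths (metres from the wall).
    Returns a volume of shape (len(depths), N, N).
    """
    T, N, _ = transient.shape
    # Lateral coordinates shared by scan points and voxels, centred on the wall.
    coords = (np.arange(N) + 0.5) / N * wall_size - wall_size / 2
    px, py = np.meshgrid(coords, coords, indexing="xy")  # px[row, col] = x
    rows, cols = np.indices((N, N))
    volume = np.zeros((len(depths), N, N))
    for k, z in enumerate(depths):
        for j in range(N):      # voxel y index
            for i in range(N):  # voxel x index
                # Distance from every scan point to this voxel.
                r = np.sqrt((px - coords[i]) ** 2 + (py - coords[j]) ** 2 + z ** 2)
                bins = np.rint(2.0 * r / bin_res).astype(int)
                valid = bins < T
                # Sum the measurements whose path length matches this voxel.
                volume[k, j, i] = transient[bins[valid], rows[valid], cols[valid]].sum()
    return volume


if __name__ == "__main__":
    N, T, wall, bin_res = 8, 300, 2.0, 0.02
    depths = np.array([0.5, 0.75, 1.0, 1.25])
    # Simulate an ideal point scatterer at voxel (k=1, j=5, i=2).
    coords = (np.arange(N) + 0.5) / N * wall - wall / 2
    px, py = np.meshgrid(coords, coords, indexing="xy")
    r = np.sqrt((px - coords[2]) ** 2 + (py - coords[5]) ** 2 + depths[1] ** 2)
    rows, cols = np.indices((N, N))
    transient = np.zeros((T, N, N))
    transient[np.rint(2.0 * r / bin_res).astype(int), rows, cols] = 1.0
    vol = backproject_confocal(transient, wall, bin_res, depths)
    print(np.unravel_index(vol.argmax(), vol.shape))
```

The triple loop is far too slow for full-resolution measurements. It is meant only to show the geometry that faster methods such as LCT and F-K exploit.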

The code and dataset are licensed under the following license:

MIT License

Copyright (c) 2020 wenzhengchen

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

Questions can be addressed to Wenzheng Chen and Fangyin Wei.

If you find this work useful, please cite:

@article{Chen:NLOS:2020,

title = {Learned Feature Embeddings for Non-Line-of-Sight Imaging and Recognition},

author = {Wenzheng Chen and Fangyin Wei and Kiriakos N. Kutulakos and Szymon Rusinkiewicz and Felix Heide},

year = {2020},

issue_date = {December 2020},

publisher = {Association for Computing Machinery},

volume = {39},

number = {6},

journal = {ACM Transactions on Graphics (Proc. SIGGRAPH Asia)},

}