This is the official PyTorch implementation of our paper "Frequency-Aware Self-Supervised Monocular Depth Estimation" (WACV 2023).

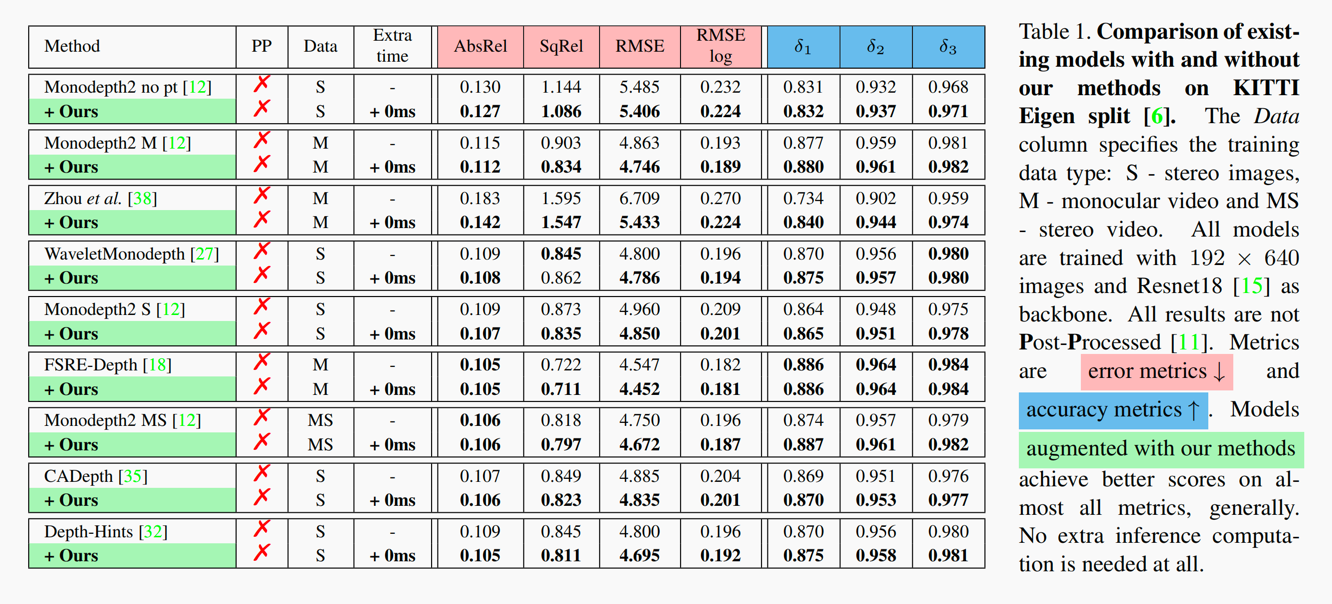

We introduce FreqAwareDepth, with highly generalizable performance-boosting features that can be easily integrated into other models. See the paper for more details.

Our methods introduce no more than 10% extra training time and no extra inference time at all.

🎬 Watch our video.

Assuming a fresh Anaconda distribution, you can install the dependencies with:

conda install pytorch=1.7.1 torchvision=0.8.2 -c pytorch

pip install tensorboardX==1.5 # 1.4 also ok

conda install opencv=3.4.2 # just needed for evaluation, 3.3.1 also ok

Our code is built upon monodepth2.
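As a quick sanity check of the install step, the following minimal sketch compares installed package versions against the ones pinned in the install commands above. The helper names `version_matches` and `find_mismatches` are illustrative and not part of this repository. The comparison ignores local build tags such as `+cu110`.

```python
# Versions pinned in the install commands above; the helpers are illustrative only.
EXPECTED = {"torch": "1.7.1", "torchvision": "0.8.2"}


def version_matches(installed, expected):
    """Return True if the installed version equals the pin, ignoring build tags like '+cu110'."""
    return installed.split("+")[0] == expected


def find_mismatches(installed_versions, expected=EXPECTED):
    """Return {package: (installed, expected)} for every package that differs from its pin."""
    mismatches = {}
    for name, wanted in expected.items():
        found = installed_versions.get(name)
        if found is None or not version_matches(found, wanted):
            mismatches[name] = (found, wanted)
    return mismatches


if __name__ == "__main__":
    try:
        import torch
        import torchvision
    except ImportError as err:
        print(f"Missing dependency: {err}")
    else:
        installed = {"torch": torch.__version__, "torchvision": torchvision.__version__}
        print(find_mismatches(installed) or "All pinned versions match.")
```

An empty result means the environment matches the pins. Other versions may still work, but they are not the ones listed here.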

Train our full model:

python train.py --model_name {name_you_expect}

You can download the entire KITTI RAW dataset by running:

wget -i splits/kitti_archives_to_download.txt -P kitti_data/

Then unzip with:

cd kitti_data

unzip "*.zip"

cd ..

Warning: it weighs about 175GB.
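Because the download is large, it may help to check free disk space first. The sketch below uses Python's standard `shutil.disk_usage`. The 175 GB figure comes from the warning above, while the helper name `has_enough_space` and the use of decimal gigabytes are assumptions. It does not account for the extra room needed while the zip archives and their extracted contents coexist.

```python
import shutil

REQUIRED_GB = 175  # approximate dataset size quoted in the warning above


def has_enough_space(free_bytes, required_gb=REQUIRED_GB):
    """Return True if `free_bytes` covers `required_gb` (decimal GB, 10**9 bytes)."""
    return free_bytes >= required_gb * 10**9


if __name__ == "__main__":
    # Check the current directory, where kitti_data/ would be created.
    free = shutil.disk_usage(".").free
    print(f"Free: {free / 10**9:.1f} GB, enough for KITTI raw: {has_enough_space(free)}")
```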

python test_simple.py --image_path assets/test_image.jpg --model_path {pretrained_model_path}

To evaluate, run:

python evaluate_depth.py --eval_mono --load_weights_folder {model_path}

🐷 Note: Make sure you have run the command below to generate ground truth depth before evaluating.

python export_gt_depth.py --data_path {KITTI_path} --split eigen

If you find our work useful or interesting, please consider citing our paper:

@inproceedings{chen2023frequency,

title={{Frequency-Aware Self-Supervised Monocular Depth Estimation}},

author={Chen, Xingyu and Li, Thomas H and Zhang, Ruonan and Li, Ge},

booktitle={Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision},

pages={5808--5817},

year={2023}

}