This source code release accompanies the manuscript:

Z. Fountas, N. Sajid, P. A. M. Mediano and K. Friston, "Deep active inference agents using Monte-Carlo methods", Advances in Neural Information Processing Systems 33 (NeurIPS 2020).

If you use this model or the dynamic dSprites environment in your work, please cite our paper.

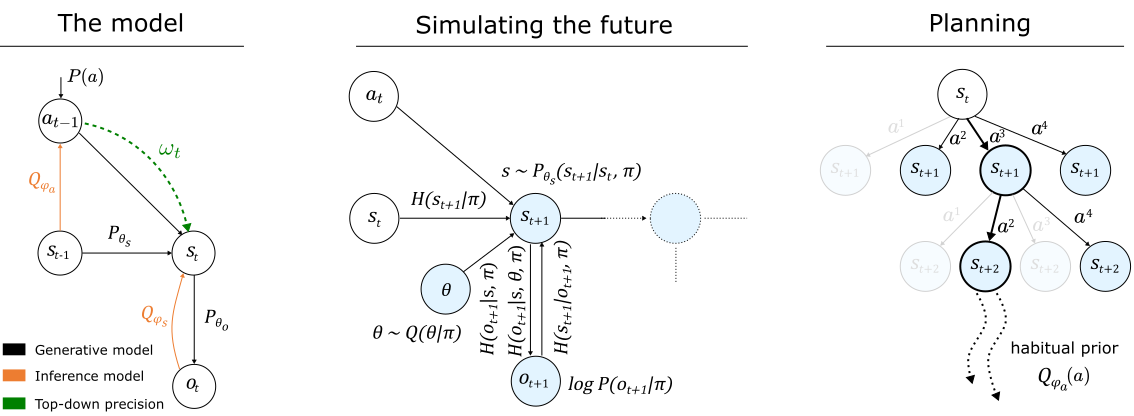

For a quick overview, see this video. In this work, we propose the deep neural architecture illustrated below, which can be used to train scaled-up active inference agents for continuous, complex environments. The architecture is based on amortized inference, Monte-Carlo tree search, Monte-Carlo dropout and top-down transition precision, the last of which encourages disentangled latent representations.

We test this architecture on two tasks from the Animal-AI Olympics and a new simple object-sorting task based on DeepMind's dSprites dataset.

Demonstrations: agent trained in the Dynamic dSprites environment; agent trained in the Animal-AI environment.

- Programming language: Python 3

- Libraries: tensorflow >= 2.0.0, numpy, matplotlib, scipy, opencv-python

- dSprites dataset.
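As a quick sanity check, the following minimal sketch reports which of the libraries listed above cannot be found in the current Python environment. The helper name `find_missing` is hypothetical and not part of this repository. Note that the `opencv-python` package is imported as `cv2`, and that this check does not verify the `tensorflow >= 2.0.0` version constraint.

```python
import importlib.util

# Import names for the libraries listed above; the opencv-python
# package is imported under the name "cv2".
REQUIRED_MODULES = ["tensorflow", "numpy", "matplotlib", "scipy", "cv2"]


def find_missing(modules):
    """Return the names in `modules` that cannot be located (hypothetical helper)."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```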

- First, make sure the required libraries are installed on your computer. Open a terminal and type

`pip install -r requirements.txt`

- Then, clone this repository, navigate to the project directory and download the dSprites dataset by typing

`wget https://github.com/deepmind/dsprites-dataset/raw/master/dsprites_ndarray_co1sh3sc6or40x32y32_64x64.npz`

or by manually visiting the above URL.
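A manual download can occasionally save an HTML page instead of the dataset. Since an `.npz` file is a zip archive of `.npy` arrays, a minimal sketch using only the standard library can confirm that the file is intact and list the arrays it contains. The helper `list_npz_arrays` is hypothetical; for the dSprites file, the dataset's own documentation describes arrays such as `imgs`, `latents_values` and `latents_classes`, so those names would be expected in the output.

```python
import zipfile

# File name taken from the download URL above.
DATASET_FILE = "dsprites_ndarray_co1sh3sc6or40x32y32_64x64.npz"


def list_npz_arrays(path):
    """Return the sorted array names stored in an .npz archive.

    Returns None if `path` is missing or is not a valid zip archive
    (for example, a saved HTML error page). Hypothetical helper.
    """
    if not zipfile.is_zipfile(path):
        return None
    with zipfile.ZipFile(path) as archive:
        # Each array is stored as a member named "<array name>.npy".
        return sorted(
            name[: -len(".npy")]
            for name in archive.namelist()
            if name.endswith(".npy")
        )


if __name__ == "__main__":
    arrays = list_npz_arrays(DATASET_FILE)
    if arrays is None:
        print("Dataset file is missing or corrupted:", DATASET_FILE)
    else:
        print("Arrays found:", ", ".join(arrays))
```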

- To train an active inference agent to solve the dynamic dSprites task, type

`python train.py`

This script automatically generates checkpoints containing the optimized parameters of the agent and stores them in a different sub-folder every 25 training iterations. The default folder that contains all sub-folders is figs_final_model_0.01_30_1.0_50_10_5. The script also generates a number of performance figures, which are stored in the same folder. You can stop the process at any point by pressing Ctrl+C.
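To see which checkpoints have been written so far, a minimal sketch such as the following lists the checkpoint sub-folders from oldest to newest. It assumes that checkpoints live in a `checkpoints` sub-directory of the default folder, which is the layout implied by the demo command in this README; the helper `checkpoint_subfolders` is hypothetical, and no particular sub-folder naming scheme is assumed.

```python
from pathlib import Path

# Assumed location: default output folder plus a "checkpoints" sub-directory.
CHECKPOINT_ROOT = "figs_final_model_0.01_30_1.0_50_10_5/checkpoints"


def checkpoint_subfolders(checkpoint_root):
    """Return the sub-folders of `checkpoint_root`, oldest first by modification time.

    Returns an empty list if the folder does not exist yet. Hypothetical helper.
    """
    root = Path(checkpoint_root)
    if not root.is_dir():
        return []
    folders = [p for p in root.iterdir() if p.is_dir()]
    return sorted(folders, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    for folder in checkpoint_subfolders(CHECKPOINT_ROOT):
        print(folder)
```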

- Finally, once training has been completed, the performance of the newly-trained agent can be demonstrated in real-time by typing

`python test_demo.py -n figs_final_model_0.01_30_1.0_50_10_5/checkpoints/ -m`

This command opens a graphical interface that can be controlled with a number of keyboard shortcuts. In particular, press:

- `q` or `esc` to exit the simulation at any point.
- `1` to enable the MCTS-based full-scale active inference agent (enabled by default).
- `2` to enable the active inference agent that minimizes expected free energy calculated only for a single time-step into the future.
- `3` to have the agent controlled entirely by the habitual network (see the manuscript for an explanation).
- `4` to activate manual mode, in which the agents are disabled and the environment can be manipulated by the user. Use the keys `w`, `s`, `a` or `d` to move the current object up, down, left or right, respectively.
- `5` to enable an agent that minimizes the terms `a` and `b` of equation 8 in the manuscript.
- `6` to enable an agent that minimizes only the term `a` of the same equation (reward-seeking agent).
- `m` to toggle the use of sampling in calculating future transitions.
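The shortcuts above amount to a small state machine: number keys select the controlling agent, `m` flips a sampling flag, and the movement keys only act in manual mode. The following is an illustrative sketch of that logic, not the code used by `test_demo.py`; the names `AGENT_MODES`, `MOVES`, `INITIAL_STATE` and `handle_key` are hypothetical, and the initial value of the sampling flag is an assumption.

```python
# Hypothetical labels for the agent modes selected by keys 1-6.
AGENT_MODES = {
    "1": "full-scale MCTS agent",
    "2": "single-step expected free energy agent",
    "3": "habitual network only",
    "4": "manual control",
    "5": "terms a and b of equation 8",
    "6": "term a of equation 8 (reward-seeking)",
}

# Movement keys, which are only active in manual mode (key 4).
MOVES = {"w": "up", "s": "down", "a": "left", "d": "right"}

# Mode "1" is the documented default; the sampling default is an assumption.
INITIAL_STATE = {"mode": "1", "sampling": False, "running": True, "last_move": None}


def handle_key(key, state):
    """Return an updated copy of `state` after a single key press."""
    new_state = dict(state)
    if key in ("q", "esc"):
        new_state["running"] = False
    elif key in AGENT_MODES:
        new_state["mode"] = key
    elif key == "m":
        new_state["sampling"] = not state["sampling"]
    elif key in MOVES and state["mode"] == "4":
        new_state["last_move"] = MOVES[key]
    return new_state


if __name__ == "__main__":
    state = dict(INITIAL_STATE)
    for key in ["4", "w", "m", "q"]:
        state = handle_key(key, state)
        print(key, "->", state)
```

Returning a new dictionary instead of mutating the old one keeps each key press easy to test in isolation.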

@inproceedings{fountas2020daimc,

author = {Fountas, Zafeirios and Sajid, Noor and Mediano, Pedro and Friston, Karl},

booktitle = {Advances in Neural Information Processing Systems},

editor = {H. Larochelle and M. Ranzato and R. Hadsell and M. F. Balcan and H. Lin},

pages = {11662--11675},

publisher = {Curran Associates, Inc.},

title = {Deep active inference agents using Monte-Carlo methods},

url = {https://proceedings.neurips.cc/paper/2020/file/865dfbde8a344b44095495f3591f7407-Paper.pdf},

volume = {33},

year = {2020}

}