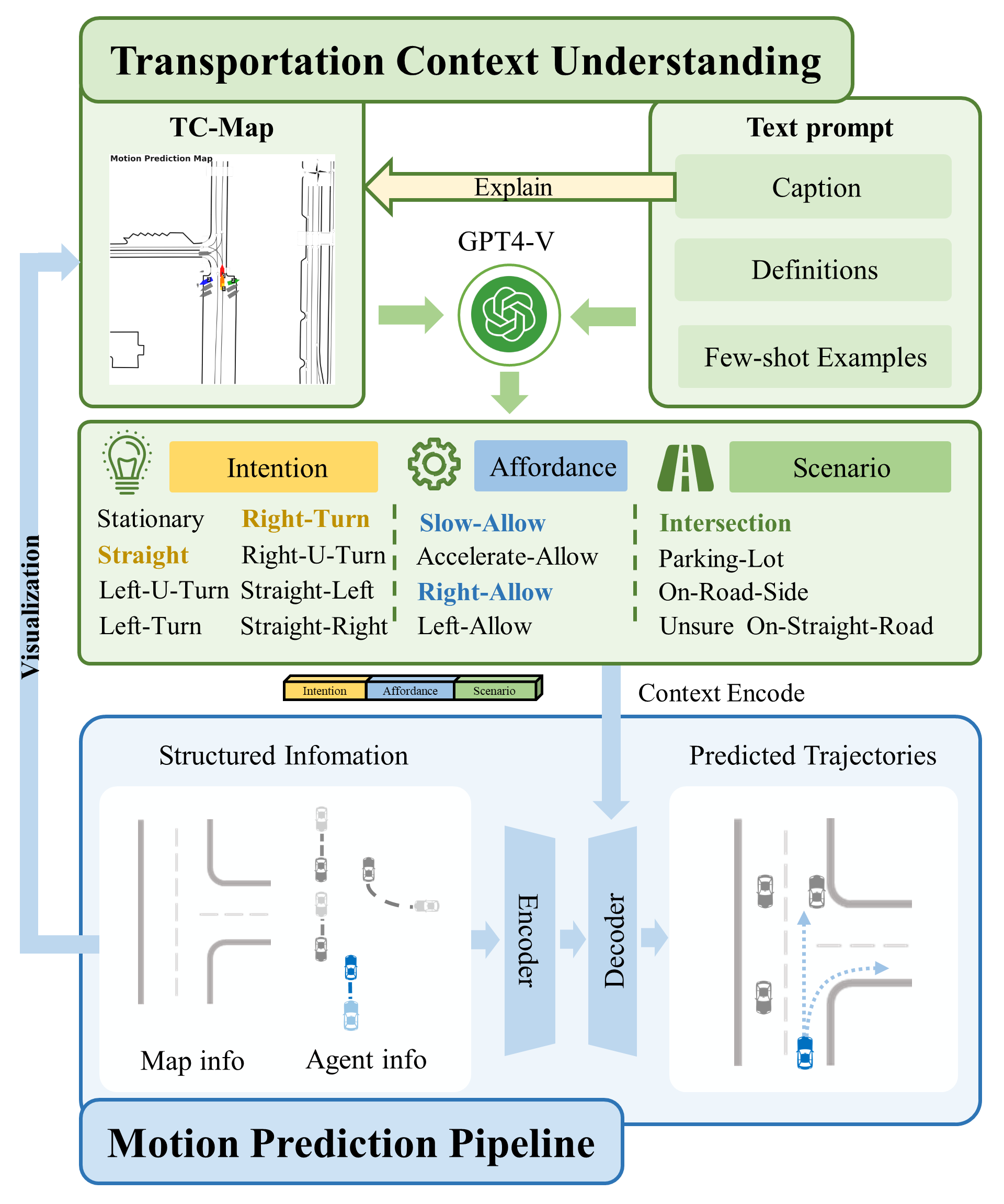

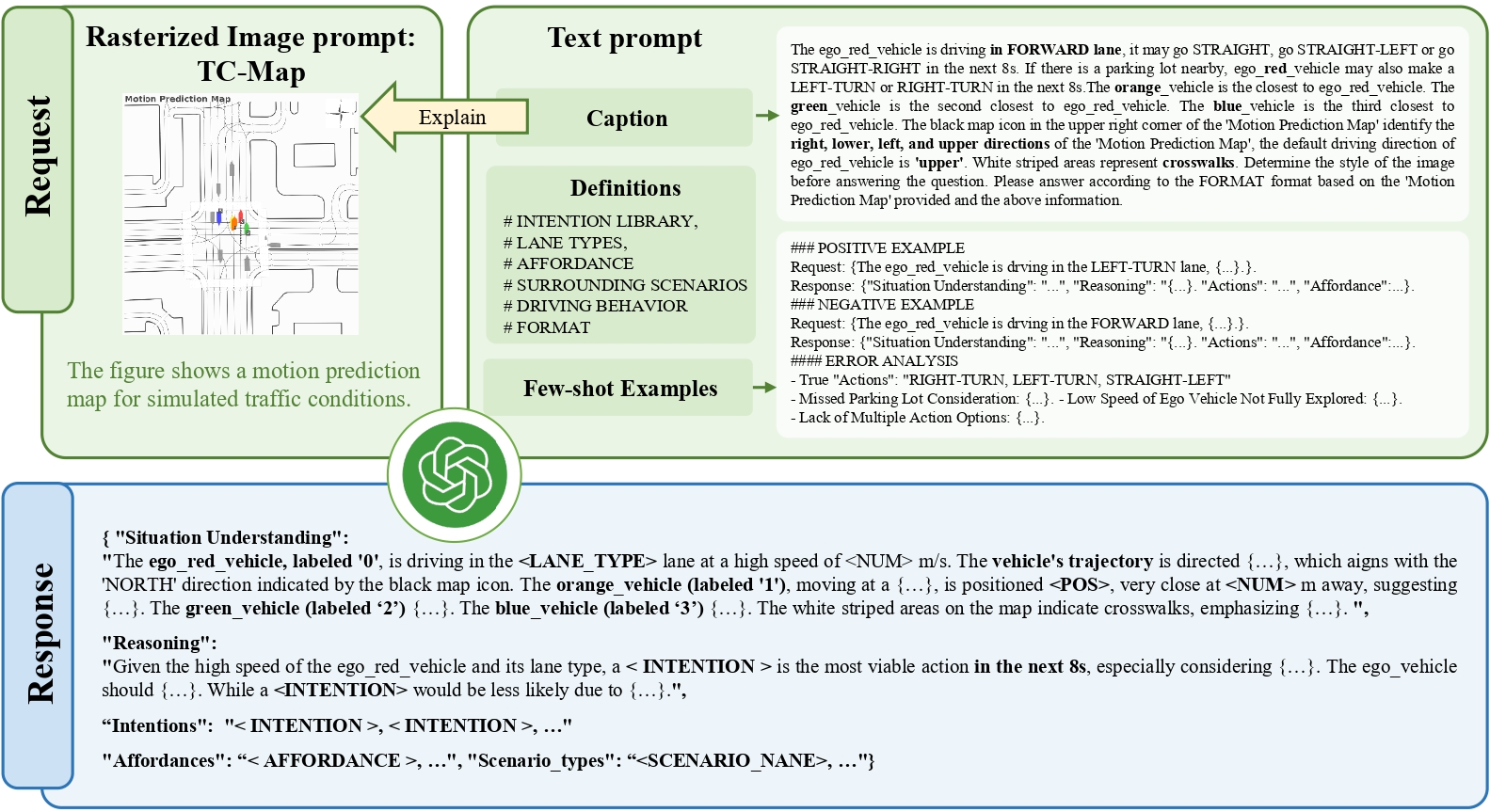

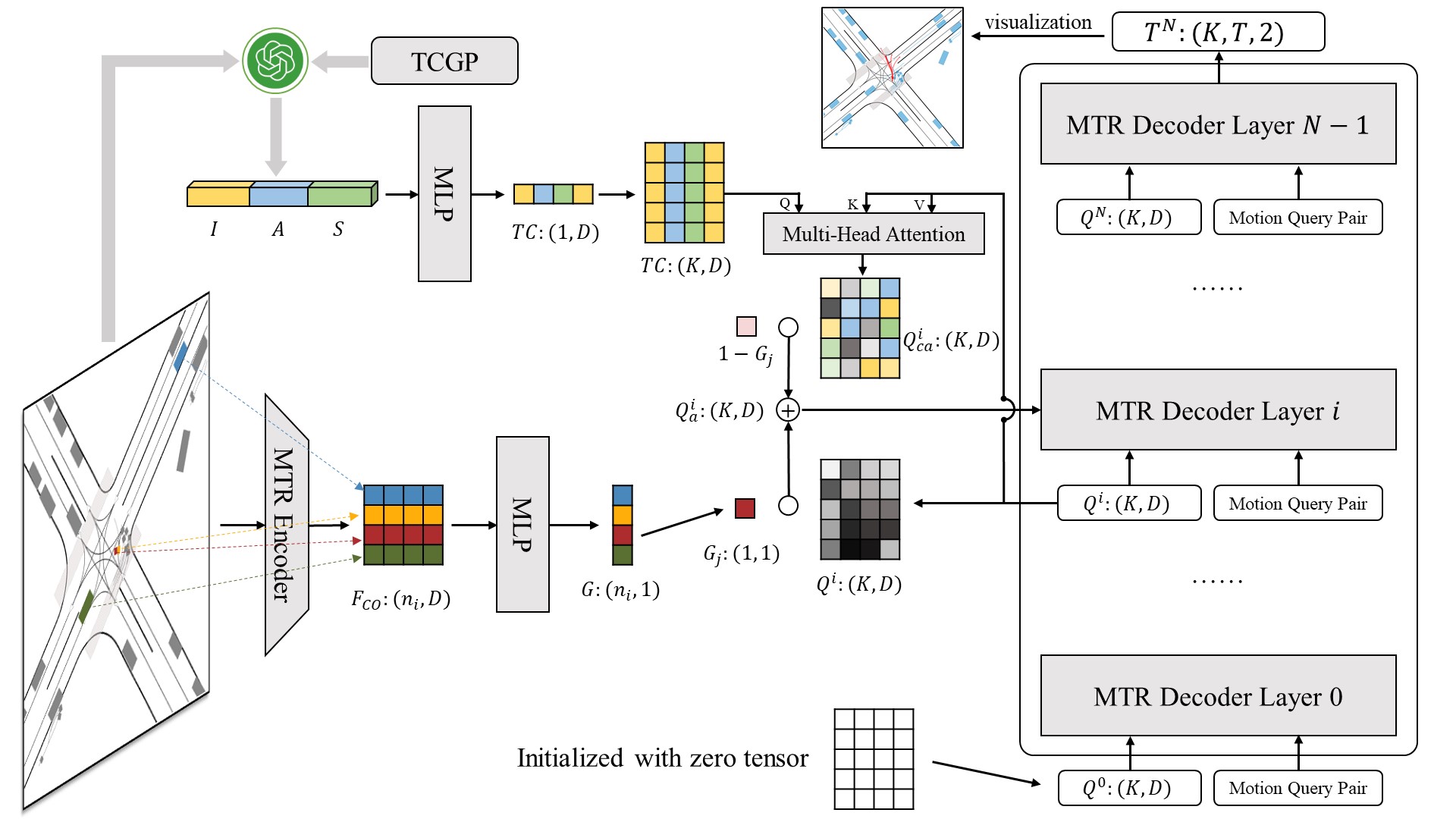

Motion prediction is among the most fundamental tasks in autonomous driving. Traditional motion forecasting methods primarily encode vectorized map information and the historical trajectories of traffic participants. They lack a comprehensive understanding of the overall traffic semantics, which in turn limits prediction performance. In this paper, we use Large Language Models (LLMs) to enhance global traffic context understanding for motion prediction. We first conducted systematic prompt engineering, rendering complex traffic environments and the historical trajectories of traffic participants into image prompts (the Transportation Context Map, TC-Map), accompanied by corresponding text prompts. Through this approach, we obtained rich traffic context information from the LLM. By integrating this information into the motion prediction model, we demonstrate that such context can improve the accuracy of motion predictions. Furthermore, considering the cost of querying LLMs, we propose a cost-effective deployment strategy that improves motion prediction accuracy at scale while using LLM-augmented data for only 0.7% of the dataset. Our research offers insights into improving LLMs' understanding of traffic scenes and the motion prediction performance of autonomous driving systems.

[2024/06/30] Our paper was accepted by IROS 2024.

[2024/04/20] The LLM-Augmented-MTR part of our code was released.

[2024/03/17] Our paper was released on arXiv (arXiv:2403.11057); you can read it by clicking this link.

LLM-Augmented-MTR
├─docs                         # documentation of our work
│  ├─model_docs
│  └─prompt_docs
├─fig
├─llm_augmented_mtr            # MTR integrated with the LLM's context data
│  ├─data                      # stores the WOMD dataset and the cluster file for MTR
│  │  └─waymo
│  ├─LLM_integrate             # raw LLM data and code to process the LLM's output
│  │  ├─embedding              # file format of context data equipped with embeddings
│  │  ├─LLM_output             # raw LLM data and the corresponding processing program
│  │  │  └─raw_data
│  │  └─tools                  # tools to assist data processing (embedding generation and retrieval)
│  │     ├─cfgs                # configuration files
│  │     ├─generate_feature_vector_utils  # utilities for embedding (feature vector) generation
│  │     └─scripts             # encapsulated scripts for running different tasks
│  ├─mtr                       # MTR model by Shaoshuai Shi, with minor changes to integrate it with the LLM
│  │  ├─datasets               # data types and dataloader logic
│  │  │  ├─llm_context         # format of the context data provided by the LLM
│  │  │  └─waymo               # data types used in the project and dataloader logic
│  │  ├─models                 # MTR model structure
│  │  │  ├─context_encoder
│  │  │  ├─motion_decoder
│  │  │  └─utils
│  │  │     └─transformer
│  │  ├─ops                    # CUDA implementation of attention to improve computing speed
│  │  │  ├─attention
│  │  │  │  └─src
│  │  │  └─knn                 # CUDA implementation of K-nearest neighbors
│  │  │     └─src
│  │  └─utils
│  ├─submit_to_waymo           # converts result data to the submission format
│  └─tools                     # tools to run programs (train/eval)
│     ├─cfgs                   # configuration files
│     │  └─waymo
│     ├─eval_utils
│     ├─run                    # encapsulated scripts for training/evaluating llm-augmented-mtr
│     ├─scripts
│     └─train_utils
└─llm_augmented_prompt         # prompt engineering for the LLM

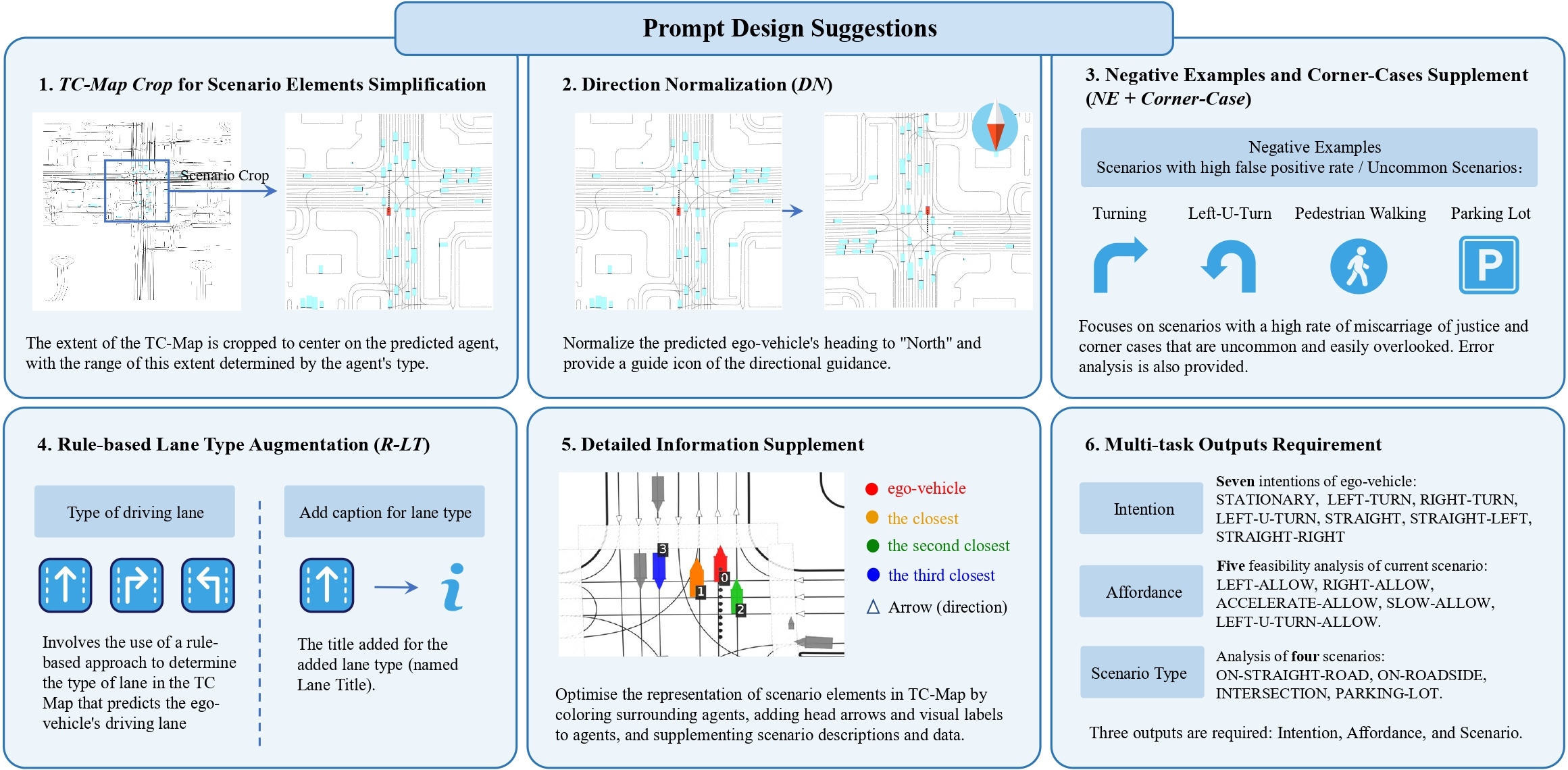

We conducted comprehensive prompt design and experiments, and summarized six prompt-design suggestions for future researchers who want to use LLMs' ability to understand complex, BEV-like transportation maps.

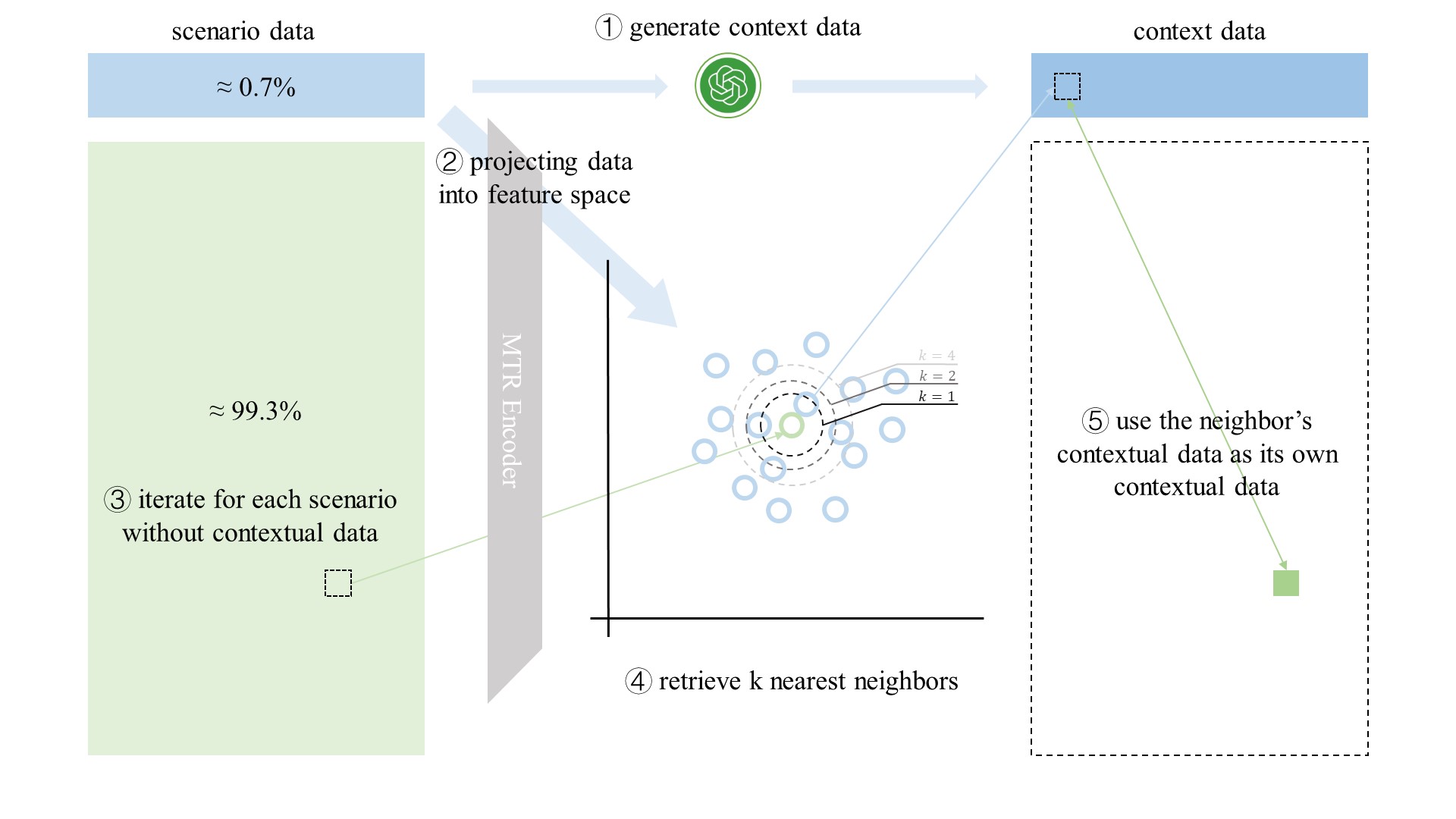

Considering the high cost of API calls, and inspired by methods from semi-supervised learning, we generated transportation context information for the whole dataset via a cost-effective deployment strategy.
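The repository's `LLM_integrate/tools` directory mentions embedding generation and retrieval. As a rough illustration only, the sketch below assumes the strategy works by querying the LLM for a small labeled subset (0.7% per the abstract) and giving every other scene the context of its most similar labeled scene under cosine similarity. The function names, the scene-id dictionaries, and the choice of cosine nearest-neighbor retrieval are all hypothetical; this is not the repository's actual code.

```python
import math
from typing import Dict, List, Sequence


def llm_budget(num_scenes: int, fraction: float = 0.007) -> int:
    """Hypothetical helper: number of scenes to send to the LLM (at least one)."""
    return max(1, round(num_scenes * fraction))


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def propagate_context(
    labeled: Dict[str, List[float]],
    labeled_context: Dict[str, str],
    unlabeled: Dict[str, List[float]],
) -> Dict[str, str]:
    """Assign each unlabeled scene the LLM context of its nearest labeled scene.

    `labeled` and `unlabeled` map a (hypothetical) scene id to its feature vector;
    `labeled_context` maps each labeled scene id to the context the LLM returned.
    """
    result = dict(labeled_context)  # labeled scenes keep their own LLM output
    for scene_id, vec in unlabeled.items():
        # Nearest labeled scene by cosine similarity (first one wins on ties).
        best_id = max(labeled, key=lambda lid: cosine_similarity(vec, labeled[lid]))
        result[scene_id] = labeled_context[best_id]
    return result
```

For example, with two labeled scenes whose vectors are `[1, 0]` and `[0, 1]`, an unlabeled scene with vector `[0.9, 0.1]` inherits the first scene's context. A real pipeline would likely use an approximate nearest-neighbor index rather than this linear scan once the dataset is large.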

The LLM Integration module is inspired by CogBERT. We use a learnable gate tensor and take the number of encoder layers into account when performing the integration.
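One way to read "a learnable gate tensor that considers the number of layers" is a gate of shape `(num_layers, dim)` that scales how much LLM context is added to each layer's features. The pure-Python sketch below assumes exactly that: a residual fusion `hidden + sigmoid(gate) * context` with one gate logit per layer and channel. The class name, the sigmoid gating, and the additive fusion are our assumptions for illustration, not the module's confirmed design. In a PyTorch model the gate would typically be an `nn.Parameter` so it is trained by backpropagation.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    """Logistic function mapping a gate logit to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


class LayerwiseGate:
    """Hypothetical per-layer, per-channel gate for mixing LLM context into features."""

    def __init__(self, num_layers: int, dim: int, init: float = 0.0) -> None:
        # One gate logit per (layer, channel); sigmoid(0.0) = 0.5 at initialization.
        self.gate_logits = [[init] * dim for _ in range(num_layers)]

    def fuse(self, layer: int, hidden: List[float], context: List[float]) -> List[float]:
        """Return hidden + sigmoid(gate) * context for the given layer."""
        if len(hidden) != len(context):
            raise ValueError("hidden and context must have the same dimension")
        gates = self.gate_logits[layer]
        return [h + sigmoid(g) * c for h, g, c in zip(hidden, gates, context)]
```

Initializing the logits at zero lets every layer start by admitting half of the context signal. Training can then push individual layers or channels toward ignoring it (large negative logits) or fully using it (large positive logits).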

This repository is based on code from the Waymo Open Dataset and MTR. Thanks!

If you find our work useful, please consider citing it:

@misc{llm_augmented_mtr,
  title={Large Language Models Powered Context-aware Motion Prediction},
  author={Xiaoji Zheng and Lixiu Wu and Zhijie Yan and Yuanrong Tang and Hao Zhao and Chen Zhong and Bokui Chen and Jiangtao Gong},
  year={2024},
  eprint={2403.11057},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}