This repo is a modified ROS1 version of vdbfusion for incremental mapping based on received odometry and the corresponding point cloud messages. The original vdbfusion_ros repo has some problems on the cow dataset; more issues can be found here. The whole process is based on ROS1, so please check the original vdbfusion repo if you'd like to use it directly without ROS. Please remember to clone the submodules as well.

# Users in mainland China: Gitee is faster

git clone --recurse-submodules https://gitee.com/kin_zhang/vdbfusion_mapping.git

# OR from github

git clone --recurse-submodules https://github.com/Kin-Zhang/vdbfusion_mapping.git

Docker version for convenient usage. [Users in mainland China: consider switching to a Docker Hub mirror before running docker pull.]

docker pull zhangkin/vdbfusion_mapping

# or build through Dockerfile

docker build -t zhangkin/vdbfusion_mapping .

# =========== RUN: the second -v mounts the bag directory on your computer

docker run -it --net=host -v /dev/shm:/dev/shm -v /home/kin/bags:/workspace/data --name vdbfusion_mapping zhangkin/vdbfusion_mapping /bin/zsh

To use your own environment, please check the file here. Tested systems: Ubuntu 18.04 and Ubuntu 20.04. The dependencies below are needed for a desktop install, if you'd like to try. Please follow each project's own instructions to install them; the Dockerfile may also help with that.

- IGL: mesh save

- OpenVDB: VDB data structure. ATTENTION: Boost 1.70 is needed; the Ubuntu 18.04 default is 1.65

- glog, gflags: for log output

- ROS1: ROS-full (tested on melodic)

Please note that this package does incremental mapping only; it does NOT output odometry. You therefore need an odom/tf topic whose timestamps match the lidar messages. If you don't have a package for that, check out Kin-Zhang/simple_ndt_slam, which makes it really easy to get poses (though it did not work well on depth-sensor point clouds such as the cow dataset).
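Because the mapper expects each point cloud to have a pose with the same timestamp, it can help to check offline how well your lidar and odometry stamps line up. The following is a minimal sketch in plain Python, not code from this repo: the function name `pair_by_timestamp`, the integer-nanosecond stamps, and the `tolerance_ns` parameter are all assumptions made for illustration.

```python
from bisect import bisect_left


def pair_by_timestamp(lidar_stamps, odom_stamps, tolerance_ns=0):
    """Pair each lidar stamp with the closest odom stamp.

    Hypothetical helper for an offline sanity check.
    Stamps are integers in nanoseconds; odom_stamps must be sorted ascending.
    Returns a list of (lidar_index, odom_index) for pairs whose time
    difference is at most tolerance_ns. tolerance_ns=0 keeps exact matches only.
    """
    pairs = []
    for i, t in enumerate(lidar_stamps):
        k = bisect_left(odom_stamps, t)
        # The closest odom stamp is either just before or at/after t.
        candidates = [j for j in (k - 1, k) if 0 <= j < len(odom_stamps)]
        if not candidates:
            continue  # no odometry at all
        best = min(candidates, key=lambda j: abs(odom_stamps[j] - t))
        if abs(odom_stamps[best] - t) <= tolerance_ns:
            pairs.append((i, best))
    return pairs


if __name__ == "__main__":
    lidar = [100, 200, 300]
    odom = [100, 205, 400]
    print(pair_by_timestamp(lidar, odom))                   # exact matches only
    print(pair_by_timestamp(lidar, odom, tolerance_ns=10))  # allow small offsets
```

If many lidar stamps end up unpaired with a zero tolerance, the odometry source is probably not publishing on the lidar timestamps, and the poses would need to be re-stamped or interpolated before mapping.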

The only thing you have to change is the topic names in the config file, so that they match your own dataset/equipment.

# input topic name setting ===========> Please change according to your dataset

lidar_topic: "/odom_lidar"

odom_topic: "/auto_odom"

# or tf topic ==> like the cow and lady dataset

Run the launch file with the bag directly:

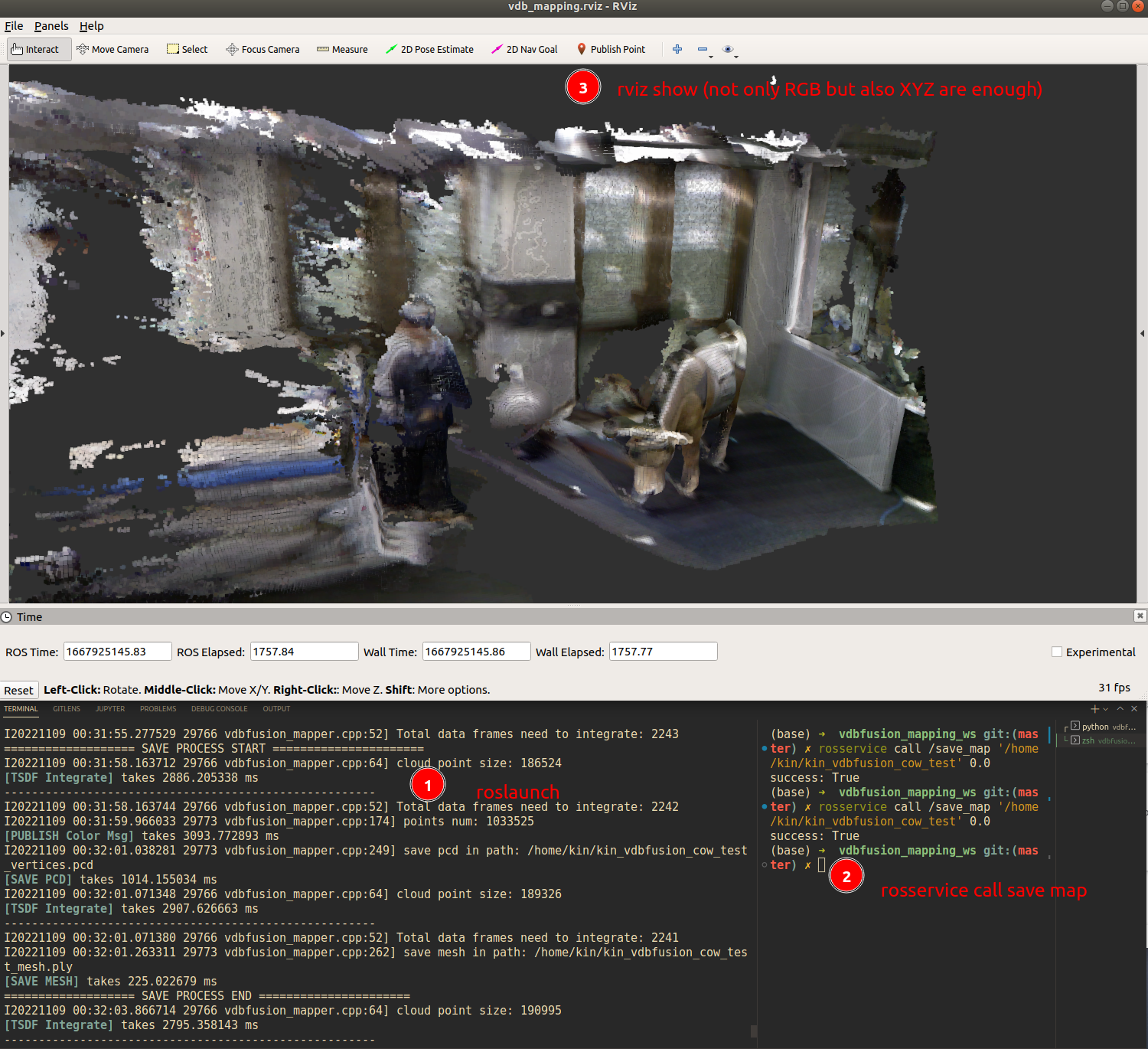

roslaunch vdbfusion_ros vdbfusion_mapping.launch

Save and publish the map, then open it with a visualization tool. Example image, with the .pcd file and the .ply file (mesh):

rosservice call /save_map '/workspace/data/test' 0.0

My own dataset, with Kin-Zhang/simple_ndt_slam providing the poses and this repo doing the mapping:

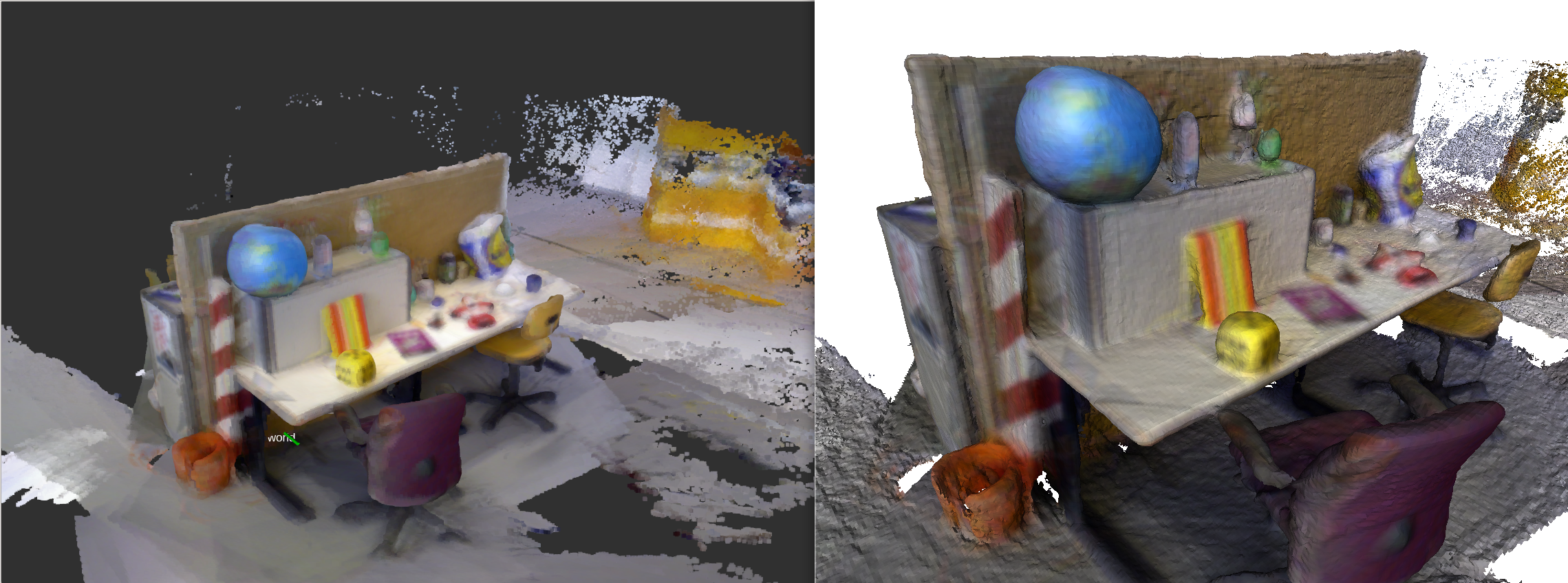

ETH Zurich ASL: Cow and Lady RGBD Dataset, MeshLab view. If the bag has RGB info in the message (XYZRGB etc.), the results could look like the right one:

Another dataset: TU Munich RGB-D SLAM Dataset and Benchmark - FR1DESK2 Test bag: rgbd_dataset_freiburg3_long_office_household-2hz-with-pointclouds.bag

- jianhao jiao: for the first version of vdbfusion mapping ROS

- paucarre: for the RGB version of vdbfusion

- Style format: https://github.com/ethz-asl/linter

cd $YOUR_REPO

init_linter_git_hooks # install

linter_check_all # run

init_linter_git_hooks --remove # remove

Some TODOs and open issues:

- grouped process TODO from voxblox

- Memory increases a lot; maybe the buffer-and-thread approach is not such a great option. Throw some data away if the queue is too long?

- speedup again?? GPU? I don't know, let's find out
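The "throw some data away if the queue is too long" idea from the list above can be sketched as a bounded queue that silently drops the oldest entry when full. This is only an illustration in Python, not the repo's C++ implementation; the class name `DropOldestQueue` and the `max_size` parameter are hypothetical.

```python
from collections import deque


class DropOldestQueue:
    """Bounded FIFO buffer: when full, the oldest item is discarded.

    Hypothetical sketch of a drop policy for incoming scans; memory use
    is capped at max_size items regardless of how slow the consumer is.
    """

    def __init__(self, max_size):
        # deque(maxlen=...) evicts from the left when appending to a full deque.
        self._items = deque(maxlen=max_size)
        self.dropped = 0  # number of items discarded so far

    def push(self, item):
        if len(self._items) == self._items.maxlen:
            self.dropped += 1
        self._items.append(item)

    def pop(self):
        """Return the oldest remaining item, or None if the queue is empty."""
        return self._items.popleft() if self._items else None

    def __len__(self):
        return len(self._items)


if __name__ == "__main__":
    q = DropOldestQueue(max_size=2)
    for scan in ("scan_a", "scan_b", "scan_c"):
        q.push(scan)
    print(len(q), q.dropped, q.pop())
```

Dropping the oldest scan trades map completeness for bounded memory; whether that is acceptable depends on how much overlap consecutive scans have in your dataset.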

Solved:

- For HDDA, here are comments from vdbfusion author @nachovizzo:

Additionally, the HDDA makes not much sense in our mapping context since it's highly effective ONCE the VDB topology has been already created