Project Page | arXiv | Paper

Guanxing Lu*, Zifeng Gao*, Tianxing Chen, Wenxun Dai, Ziwei Wang, Yansong Tang†

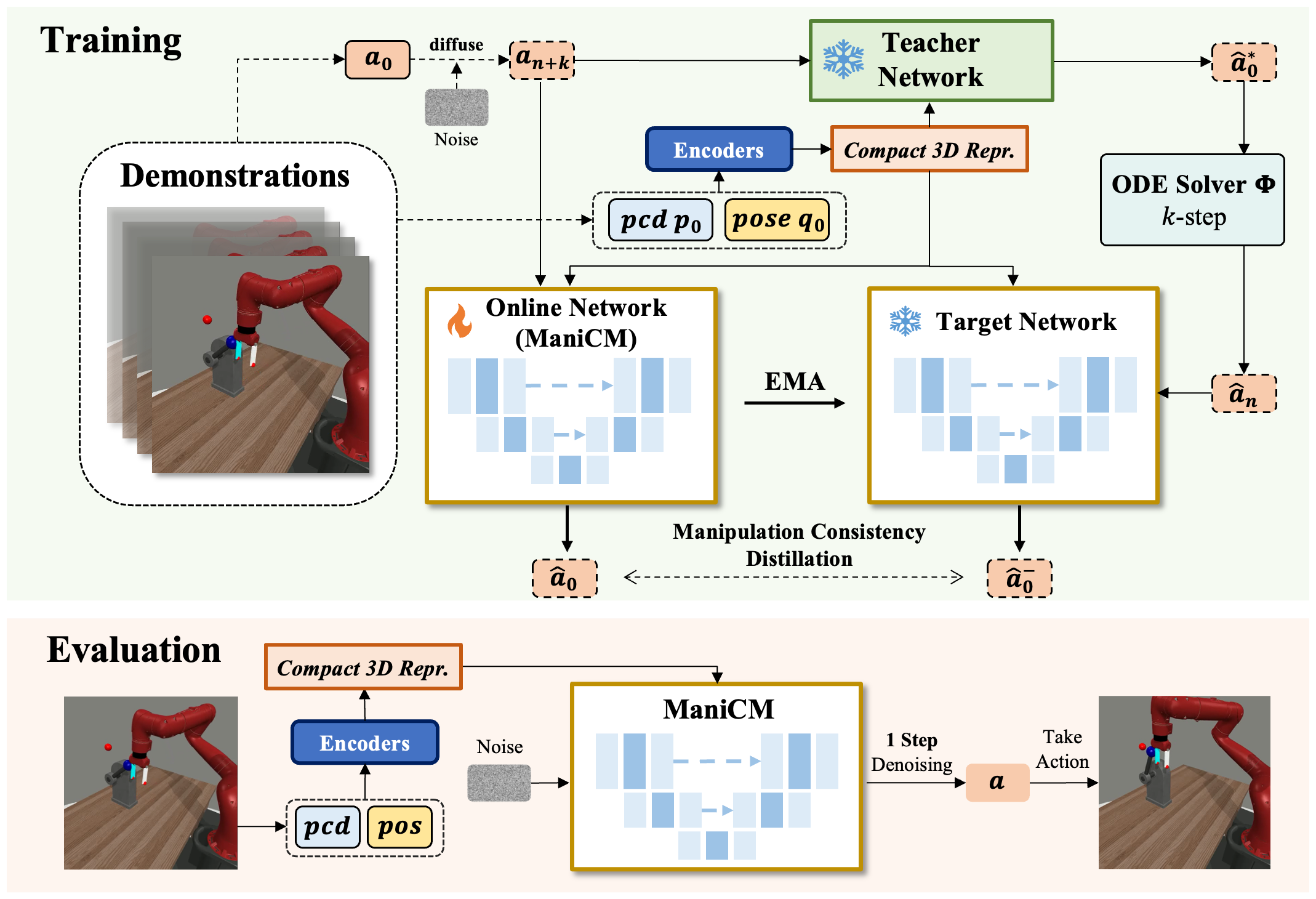

ManiCM Overview: Given a raw action sequence a_0, we first run forward diffusion to add noise over n + k steps. The resulting noisy sequence a_{n+k} is fed into both the online network and the teacher network to predict the clean action sequence. The target network then predicts the action sequence from the teacher network's k-step estimate. To enforce self-consistency, a loss function penalizes any difference between the outputs of the online network and the target network.
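The overview can be illustrated with a small, dependency-free sketch. It is not the repository's implementation: the linear beta schedule, the noise-predicting teacher, the single DDIM-style jump standing in for the teacher's k-step estimate, the squared-error distance, and every function name below are assumptions made for illustration.

```python
import math
import random


def make_alpha_bars(num_train_timesteps, beta_start=1e-4, beta_end=2e-2):
    """Cumulative signal levels for a linear beta schedule (assumed schedule)."""
    alpha_bars, running = [], 1.0
    for t in range(num_train_timesteps):
        beta = beta_start + (beta_end - beta_start) * t / (num_train_timesteps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(a0, noise, alpha_bar):
    """Forward diffusion: noise the clean sequence a0 to the level alpha_bar."""
    s, n = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [s * x + n * e for x, e in zip(a0, noise)]


def ddim_jump(a_t, eps_pred, alpha_bar_t, alpha_bar_prev):
    """Deterministic DDIM-style update from a noisier level to a cleaner one."""
    s_t, n_t = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    a0_pred = [(x - n_t * e) / s_t for x, e in zip(a_t, eps_pred)]
    return add_noise(a0_pred, eps_pred, alpha_bar_prev)


def consistency_loss(online, target, teacher_eps, a0, n, k, alpha_bars, rng):
    """Mean squared gap between the online and target predictions of a0."""
    noise = [rng.gauss(0.0, 1.0) for _ in a0]
    # Noisy sequence a_{n+k}, shared by the online network and the teacher.
    a_nk = add_noise(a0, noise, alpha_bars[n + k])
    online_pred = online(a_nk, n + k)
    # Teacher's estimate of a_n, here one jump from level n + k to level n.
    a_n_hat = ddim_jump(a_nk, teacher_eps(a_nk, n + k),
                        alpha_bars[n + k], alpha_bars[n])
    target_pred = target(a_n_hat, n)
    return sum((p - q) ** 2 for p, q in zip(online_pred, target_pred)) / len(a0)


alpha_bars = make_alpha_bars(100)
a0 = [0.1, -0.3, 0.5]
toy_net = lambda a_t, t: list(a0)               # toy network: always returns a0
toy_teacher = lambda a_t, t: [0.0] * len(a_t)   # toy teacher: predicts zero noise
loss = consistency_loss(toy_net, toy_net, toy_teacher, a0, n=20, k=10,
                        alpha_bars=alpha_bars, rng=random.Random(0))
print(loss)  # 0.0, because both networks agree exactly
```

In real training the target network would typically be a slowly updated copy of the online network, and the loss would be backpropagated through the online network only; the toy callables above merely exercise the data flow.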


See INSTALL.md for installation instructions.

Algorithms. We provide the implementation of the following algorithms:

- DP3: `dp3.yaml`
- ManiCM: `dp3_cm.yaml`

You can modify the configuration of the teacher model and ManiCM by editing these two files. Here are the meanings of some important configurations:

`num_inference_timesteps`: The number of inference steps used by ManiCM.

`num_train_timesteps`: The total number of timesteps over which noise is added.

`prediction_type`: `epsilon` means the network predicts the noise, while `sample` means it predicts the action.

For more detailed arguments, please refer to the scripts and the code.
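To make `num_inference_timesteps` and `prediction_type` more concrete, here is a minimal sketch of how such settings are commonly used in diffusion-style samplers. The function names are hypothetical, and the evenly spaced "trailing" timestep selection is an assumption; the scheduler in this codebase may space its steps differently.

```python
import math


def inference_timesteps(num_train_timesteps, num_inference_timesteps):
    """Evenly spaced timesteps, starting from the noisiest level (assumed spacing)."""
    ratio = num_train_timesteps / num_inference_timesteps
    return [round(num_train_timesteps - i * ratio) - 1
            for i in range(num_inference_timesteps)]


def predicted_action(model_output, noisy_action, alpha_bar, prediction_type):
    """Turn a network output into an estimate of the clean action sequence."""
    if prediction_type == "sample":
        # The network already outputs the action itself.
        return list(model_output)
    if prediction_type == "epsilon":
        # The network outputs the noise; invert the forward diffusion step.
        s, n = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
        return [(x - n * e) / s for x, e in zip(noisy_action, model_output)]
    raise ValueError(f"unknown prediction_type: {prediction_type}")


print(inference_timesteps(100, 4))  # [99, 74, 49, 24]
print(inference_timesteps(100, 1))  # [99]
```

With fewer inference steps the sampler visits fewer noise levels, which is where the speed-up of a consistency model comes from.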

Scripts for generating demonstrations, training, and evaluation are all provided in the scripts/ folder.

Results are logged with wandb, so run `wandb login` first to see the results and videos.

Brief instructions for using the codebase are given here.

- Generate demonstrations with `gen_demonstration_adroit.sh` and `gen_demonstration_dexart.sh`. See the scripts for details. For example:

  `bash scripts/gen_demonstration_adroit.sh hammer`

  This generates demonstrations for the `hammer` task in the Adroit environment. The data is saved to the `ManiCM/data/` folder automatically.

- Train and evaluate a teacher policy with behavior cloning. The arguments are `config_name task_name addition_info seed gpu_id`. For example:

  `bash scripts/train_policy.sh dp3 adroit_hammer 0603 0 0`

  This trains a DP3 policy on the `hammer` task in the Adroit environment using the point cloud modality. By default the checkpoint is saved (this is optional in the script). Training the teacher model takes ~10 GB of GPU memory and ~4 hours on an NVIDIA 4090 GPU.

- Move the teacher's checkpoint. The arguments are `alg_name task_name teacher_addition_info addition_info seed gpu_id`. For example:

  `bash scopy.sh dp3_cm adroit_hammer 0603 0603_cm 0 0`

- Train and evaluate ManiCM. The arguments are `config_name task_name addition_info seed gpu_id`. For example:

  `bash scripts/train_policy_cm.sh dp3_cm adroit_hammer 0603_cm 0 0`

  This trains ManiCM, using a DP3 policy as the teacher model, on the `hammer` task in the Adroit environment using the point cloud modality. Training ManiCM takes ~10 GB of GPU memory and ~4 hours on an NVIDIA 4090 GPU.
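The four steps above can also be assembled programmatically. The sketch below only builds and prints the commands in order; `build_commands` and its parameter names are hypothetical helpers, and the argument order is copied from the examples above rather than checked against the scripts.

```python
import shlex


def build_commands(suite, task, teacher_tag, cm_tag, seed, gpu_id):
    """Return the four pipeline commands, in order, as argument lists."""
    full_task = f"{suite}_{task}"
    seed, gpu_id = str(seed), str(gpu_id)
    return [
        # 1. Generate demonstrations.
        ["bash", f"scripts/gen_demonstration_{suite}.sh", task],
        # 2. Train and evaluate the DP3 teacher policy.
        ["bash", "scripts/train_policy.sh", "dp3", full_task,
         teacher_tag, seed, gpu_id],
        # 3. Move the teacher's checkpoint.
        ["bash", "scopy.sh", "dp3_cm", full_task,
         teacher_tag, cm_tag, seed, gpu_id],
        # 4. Train and evaluate ManiCM.
        ["bash", "scripts/train_policy_cm.sh", "dp3_cm", full_task,
         cm_tag, seed, gpu_id],
    ]


for command in build_commands("adroit", "hammer", "0603", "0603_cm", 0, 0):
    print(shlex.join(command))
```

To execute rather than print, each argument list could be passed to `subprocess.run(command, check=True)` from the repository root, so that a failed step stops the pipeline before the next one starts.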

For your convenience, we provide pre-trained checkpoints for the hammer task in the Adroit environment. You can download them and place the folder in `data/outputs/`.

This repository is released under the MIT license.

Our code is built upon 3D Diffusion Policy, MotionLCM, Latent Consistency Model, Diffusion Policy, VRL3, Metaworld, and ManiGaussian. We would like to thank the authors for their excellent work.

If you find this repository helpful, please consider citing:

@article{lu2024manicm,

title={ManiCM: Real-time 3D Diffusion Policy via Consistency Model for Robotic Manipulation},

author={Guanxing Lu and Zifeng Gao and Tianxing Chen and Wenxun Dai and Ziwei Wang and Yansong Tang},

journal={arXiv preprint arXiv:2406.01586},

year={2024}

}