Neelu Madan, Andreas Moegelmose, Rajat Modi, Yogesh S. Rawat, Thomas B. Moeslund

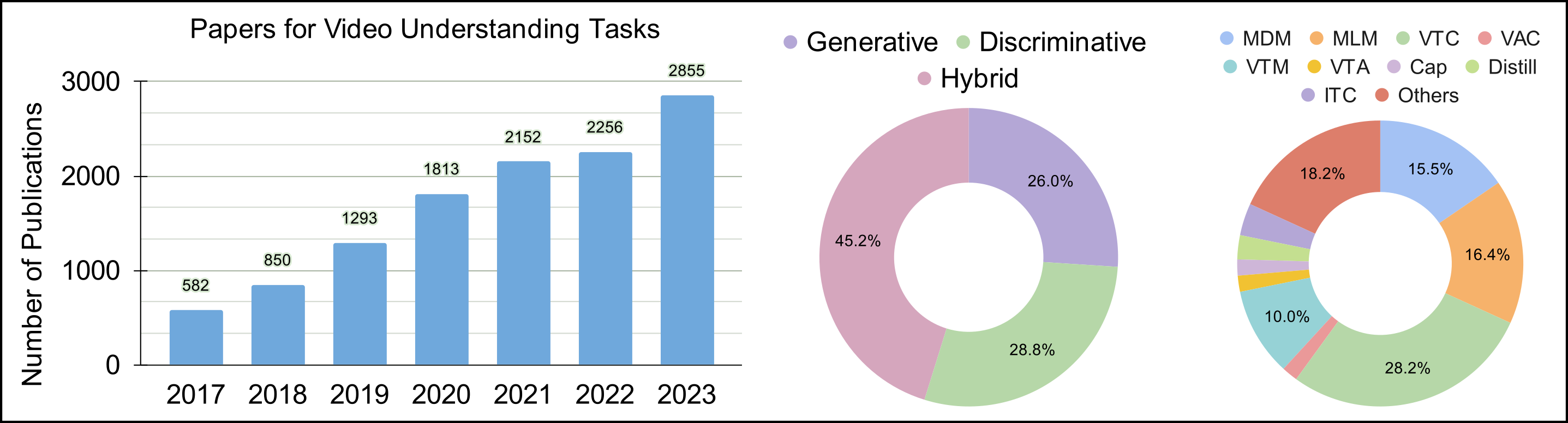

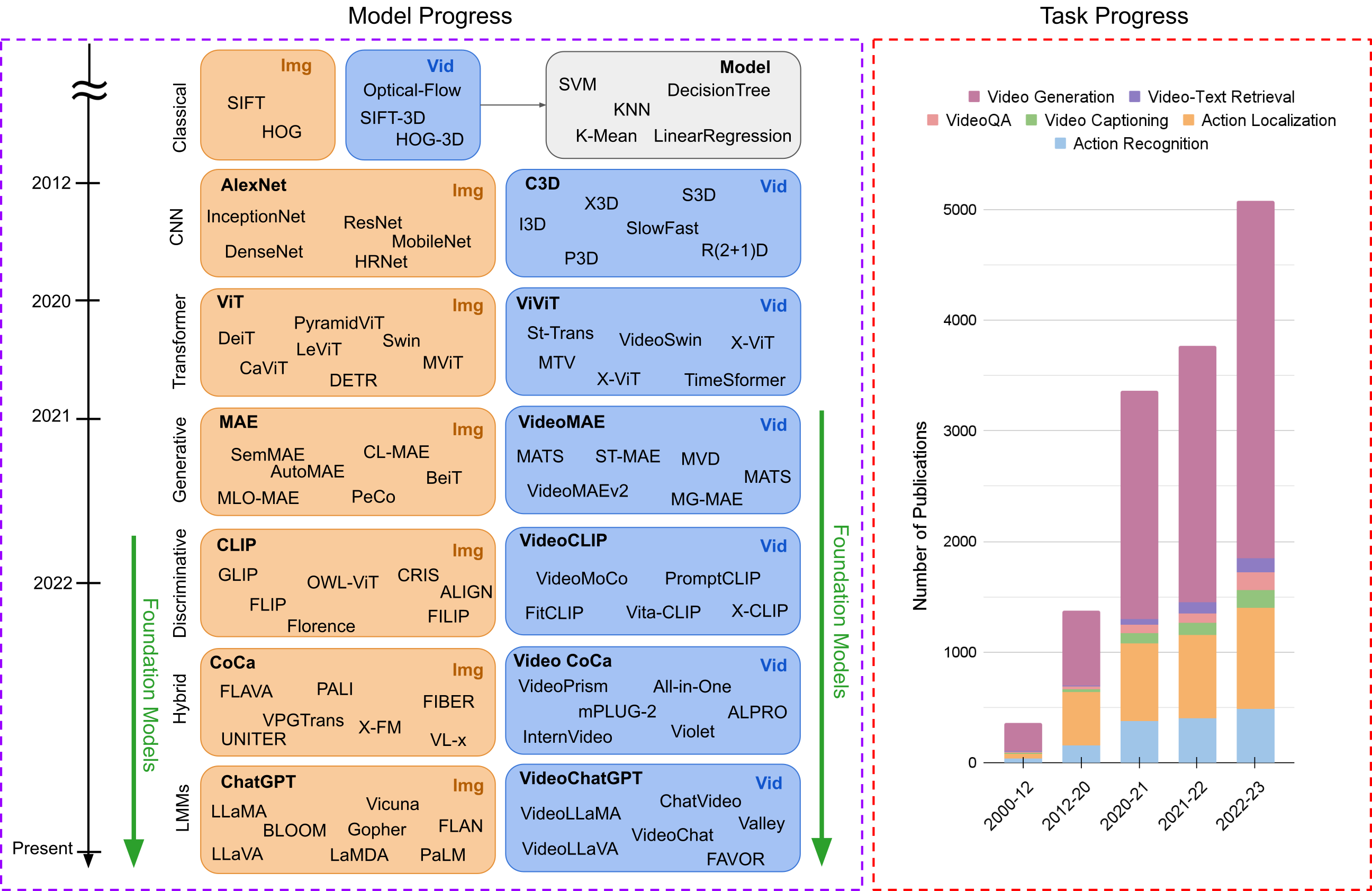

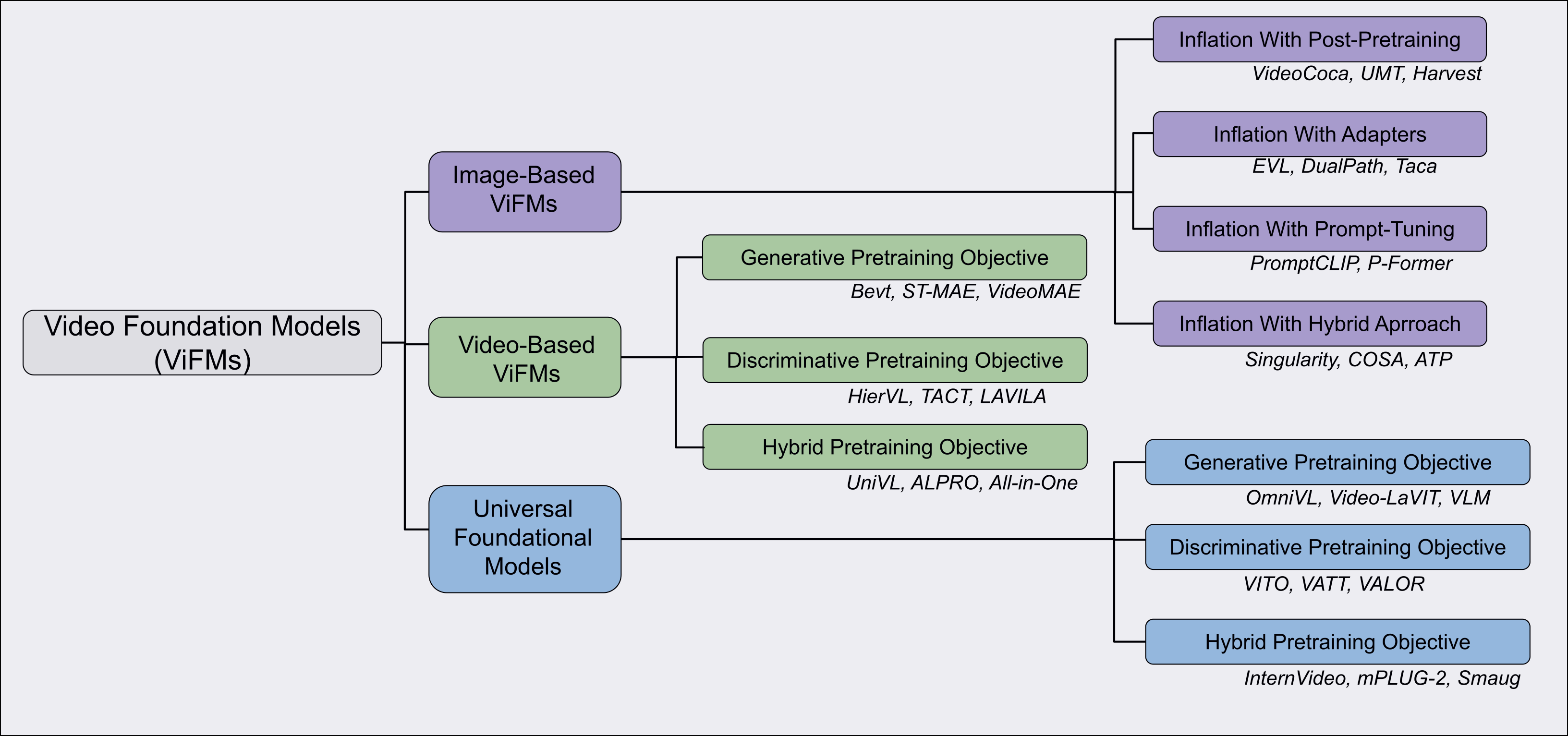

The field of video understanding is advancing rapidly, as shown by the growing number of research publications on video understanding tasks (see the figure below). This growth coincides with the development of large-scale pretraining techniques, which have shown a remarkable ability to adapt to diverse tasks with minimal additional training while generalizing robustly. As a result, researchers are actively investigating how these foundation models can address a broad spectrum of video understanding challenges. We surveyed more than 200 foundation models and analyzed their performance on several common video tasks. Our survey, Foundation Models for Video Understanding: A Survey, also provides an overview of 16 video tasks, including their benchmarks and evaluation metrics.

Distilling Vision-Language Models on Millions of Videos. (Distill-VLM). [CVPR, 2024].

Yue Zhao, Long Zhao, Xingyi Zhou, Jialin Wu, Chun-Te Chu, Hui Miao, Florian Schroff, Hartwig Adam, Ting Liu, Boqing Gong, Philipp Krähenbühl, Liangzhe Yuan.

[Paper]

COSA: Concatenated Sample Pretrained Vision-Language Foundation Model. (COSA). [ICLR, 2024].

Sihan Chen, Xingjian He, Handong Li, Xiaojie Jin, Jiashi Feng, Jing Liu.

[Paper] [Code]

FROSTER: Frozen CLIP Is A Strong Teacher for Open-Vocabulary Action Recognition. (FROSTER). [ICLR, 2024].

Xiaohu Huang, Hao Zhou, Kun Yao, Kai Han.

[Paper] [Code]

EZ-CLIP: Efficient Zeroshot Video Action Recognition. (EZ-CLIP). [arxiv, 2024].

Shahzad Ahmad, Sukalpa Chanda, Yogesh S Rawat.

[Paper] [Code]

M2-CLIP: A Multimodal, Multi-task Adapting Framework for Video Action Recognition. (M2-CLIP). [arxiv, 2024].

Mengmeng Wang, Jiazheng Xing, Boyuan Jiang, Jun Chen, Jianbiao Mei, Xingxing Zuo, Guang Dai, Jingdong Wang, Yong Liu.

[Paper]

PaLM2-VAdapter: Progressively Aligned Language Model Makes a Strong Vision-language Adapter. (PaLM2-VAdapter). [arxiv, 2024].

Junfei Xiao, Zheng Xu, Alan Yuille, Shen Yan, Boyu Wang.

[Paper]

Revealing Single Frame Bias for Video-and-Language Learning. (Singularity). [ACL, 2023].

Jie Lei, Tamara L Berg, Mohit Bansal.

[Paper] [Code]

AdaCLIP: Towards Pragmatic Multimodal Video Retrieval. (AdaCLIP). [ACM-MM, 2023].

Zhiming Hu, Angela Ning Ye, Salar Hosseini Khorasgani, Iqbal Mohomed.

[Paper] [Code]

CLIP4Caption: CLIP for Video Caption. (CLIP4Caption). [ACM-MM, 2021].

Mingkang Tang, Zhanyu Wang, Zhenhua Liu, Fengyun Rao, Dian Li, Xiu Li.

[Paper]

RTQ: Rethinking Video-language Understanding Based on Image-text Model. (RTQ). [ACM-MM, 2023].

Xiao Wang, Yaoyu Li, Tian Gan, Zheng Zhang, Jingjing Lv, Liqiang Nie.

[Paper] [Code]

Align your Latents: High-Resolution Video Synthesis with Latent Diffusion Models. (VideoLDM). [CVPR, 2023].

Andreas Blattmann, Robin Rombach, Huan Ling, Tim Dockhorn, Seung Wook Kim, Sanja Fidler, Karsten Kreis.

[Paper]

Bidirectional Cross-Modal Knowledge Exploration for Video Recognition with Pre-trained Vision-Language Models. (BIKE). [CVPR, 2023].

Wenhao Wu, Xiaohan Wang, Haipeng Luo, Jingdong Wang, Yi Yang, Wanli Ouyang.

[Paper] [Code]

Dual-path Adaptation from Image to Video Transformers. (DualPath). [CVPR, 2023].

Jungin Park, Jiyoung Lee, Kwanghoon Sohn.

[Paper] [Code]

Fine-tuned CLIP Models are Efficient Video Learners. (ViFi-CLIP). [CVPR, 2023].

Hanoona Rasheed, Muhammad Uzair Khattak, Muhammad Maaz, Salman Khan, Fahad Shahbaz Khan.

[Paper] [Code]

Vita-CLIP: Video and text adaptive CLIP via Multimodal Prompting. (Vita-CLIP). [CVPR, 2023].

Syed Talal Wasim, Muzammal Naseer, Salman Khan, Fahad Shahbaz Khan, Mubarak Shah.

[Paper] [Code]

Disentangling Spatial and Temporal Learning for Efficient Image-to-Video Transfer Learning. (DiST). [ICCV, 2023].

Zhiwu Qing, Shiwei Zhang, Ziyuan Huang, Yingya Zhang, Changxin Gao, Deli Zhao, Nong Sang.

[Paper] [Code]

Preserve Your Own Correlation: A Noise Prior for Video Diffusion Models. (PYoCo). [ICCV, 2023].

Songwei Ge, Seungjun Nah, Guilin Liu, Tyler Poon, Andrew Tao, Bryan Catanzaro, David Jacobs, Jia-Bin Huang, Ming-Yu Liu, Yogesh Balaji.

[Paper] [Demo]

Unmasked Teacher: Towards Training-Efficient Video Foundation Models. (UMT). [ICCV, 2023].

Kunchang Li, Yali Wang, Yizhuo Li, Yi Wang, Yinan He, Limin Wang, Yu Qiao.

[Paper] [Code]

Alignment and Generation Adapter for Efficient Video-text Understanding. (AG-Adapter). [ICCVW, 2023].

Han Fang, Zhifei Yang, Yuhan Wei, Xianghao Zang, Chao Ban, Zerun Feng, Zhongjiang He, Yongxiang Li, Hao Sun.

[Paper]

AIM: Adapting Image Models for Efficient Video Action Recognition. (AIM). [ICLR, 2023].

Taojiannan Yang, Yi Zhu, Yusheng Xie, Aston Zhang, Chen Chen, Mu Li.

[Paper] [Code]

MAtch, eXpand and Improve: Unsupervised Finetuning for Zero-Shot Action Recognition with Language Knowledge. (MAXI). [ICCV, 2023].

Wei Lin, Leonid Karlinsky, Nina Shvetsova, Horst Possegger, Mateusz Kozinski, Rameswar Panda, Rogerio Feris, Hilde Kuehne, Horst Bischof.

[Paper] [Code]

Prompt Switch: Efficient CLIP Adaptation for Text-Video Retrieval. (PromptSwitch). [ICCV, 2023].

Chaorui Deng, Qi Chen, Pengda Qin, Da Chen, Qi Wu.

[Paper] [Code]

CLIP-ViP: Adapting Pre-trained Image-Text Model to Video-Language Representation Alignment. (CLIP-ViP). [ICLR, 2023].

Hongwei Xue, Yuchong Sun, Bei Liu, Jianlong Fu, Ruihua Song, Houqiang Li, Jiebo Luo.

[Paper] [Code]

Alternating Gradient Descent and Mixture-of-Experts for Integrated Multimodal Perception. (IMP). [NeurIPS, 2023].

Hassan Akbari, Dan Kondratyuk, Yin Cui, Rachel Hornung, Huisheng Wang, Hartwig Adam.

[Paper]

Bootstrapping Vision-Language Learning with Decoupled Language Pre-training. (P-Former). [NeurIPS, 2023].

Yiren Jian, Chongyang Gao, Soroush Vosoughi.

[Paper] [Code]

Language-based Action Concept Spaces Improve Video Self-Supervised Learning. (LSS). [NeurIPS, 2023].

Kanchana Ranasinghe, Michael Ryoo.

[Paper]

MaMMUT: A Simple Architecture for Joint Learning for MultiModal Tasks. (MaMMUT). [TMLR, 2023].

Weicheng Kuo, AJ Piergiovanni, Dahun Kim, Xiyang Luo, Ben Caine, Wei Li, Abhijit Ogale, Luowei Zhou, Andrew Dai, Zhifeng Chen, Claire Cui, Anelia Angelova.

[Paper] [Code]

EVA-CLIP: Improved Training Techniques for CLIP at Scale. (EVA-CLIP). [arxiv, 2023].

Quan Sun, Yuxin Fang, Ledell Wu, Xinlong Wang, Yue Cao.

[Paper] [Code]

Fine-grained Text-Video Retrieval with Frozen Image Encoders. (CrossTVR). [arxiv, 2023].

Zuozhuo Dai, Fangtao Shao, Qingkun Su, Zilong Dong, Siyu Zhu.

[Paper]

Harvest Video Foundation Models via Efficient Post-Pretraining. (Harvest). [arxiv, 2023].

Yizhuo Li, Kunchang Li, Yinan He, Yi Wang, Yali Wang, Limin Wang, Yu Qiao, Ping Luo.

[Paper] [Code]

Motion-Conditioned Diffusion Model for Controllable Video Synthesis. (MCDiff). [arxiv, 2023].

Tsai-Shien Chen, Chieh Hubert Lin, Hung-Yu Tseng, Tsung-Yi Lin, Ming-Hsuan Yang.

[Paper]

TaCA: Upgrading Your Visual Foundation Model with Task-agnostic Compatible Adapter. (TaCA). [arxiv, 2023].

Binjie Zhang, Yixiao Ge, Xuyuan Xu, Ying Shan, Mike Zheng Shou.

[Paper]

Videoprompter: an ensemble of foundational models for zero-shot video understanding. (Videoprompter). [arxiv, 2023].

Adeel Yousaf, Muzammal Naseer, Salman Khan, Fahad Shahbaz Khan, Mubarak Shah.

[Paper]

FitCLIP: Refining Large-Scale Pretrained Image-Text Models for Zero-Shot Video Understanding Tasks. (FitCLIP). [BMVC, 2022].

Santiago Castro, Fabian Caba Heilbron.

[Paper] [Code]

Cross Modal Retrieval with Querybank Normalisation. (QB-Norm). [CVPR, 2022].

Simion-Vlad Bogolin, Ioana Croitoru, Hailin Jin, Yang Liu, Samuel Albanie.

[Paper] [Code]

Revisiting the "Video" in Video-Language Understanding. (ATP). [CVPR, 2022].

Shyamal Buch, Cristóbal Eyzaguirre, Adrien Gaidon, Jiajun Wu, Li Fei-Fei, Juan Carlos Niebles.

[Paper] [Code]

Frozen CLIP models are Efficient Video Learners. (EVL). [ECCV, 2022].

Ziyi Lin, Shijie Geng, Renrui Zhang, Peng Gao, Gerard de Melo, Xiaogang Wang, Jifeng Dai, Yu Qiao, Hongsheng Li.

[Paper] [Code]

Prompting Visual-Language Models for Efficient Video Understanding. (Prompt-CLIP). [ECCV, 2022].

Chen Ju, Tengda Han, Kunhao Zheng, Ya Zhang, Weidi Xie.

[Paper] [Code]

Expanding Language-Image Pretrained Models for General Video Recognition. (X-CLIP). [ECCV, 2022].

Bolin Ni, Houwen Peng, Minghao Chen, Songyang Zhang, Gaofeng Meng, Jianlong Fu, Shiming Xiang, Haibin Ling.

[Paper] [Code]

ST-Adapter: Parameter-Efficient Image-to-Video Transfer Learning. (ST-Adapter). [NeurIPS, 2022].

Junting Pan, Ziyi Lin, Xiatian Zhu, Jing Shao, Hongsheng Li.

[Paper] [Code]

CLIP4Clip: An Empirical Study of CLIP for End to End Video Clip Retrieval. (CLIP4Clip). [Neurocomputing, 2022].

Huaishao Luo, Lei Ji, Ming Zhong, Yang Chen, Wen Lei, Nan Duan, Tianrui Li.

[Paper] [Code]

Transferring Image-CLIP to Video-Text Retrieval via Temporal Relations. (CLIP2Video). [TMM, 2022].

Han Fang, Pengfei Xiong, Luhui Xu, Wenhan Luo.

[Paper]

Cross-Modal Adapter for Text-Video Retrieval. (Cross-Modal-Adapter). [arxiv, 2022].

Haojun Jiang, Jianke Zhang, Rui Huang, Chunjiang Ge, Zanlin Ni, Jiwen Lu, Jie Zhou, Shiji Song, Gao Huang.

[Paper] [Code]

Imagen Video: High Definition Video Generation with Diffusion Models. (Imagen Video). [arxiv, 2022].

Jonathan Ho, William Chan, Chitwan Saharia, Jay Whang, Ruiqi Gao, Alexey Gritsenko, Diederik P. Kingma, Ben Poole, Mohammad Norouzi, David J. Fleet, Tim Salimans.

[Paper] [Demo]

Multimodal Open-Vocabulary Video Classification via Pre-Trained Vision and Language Models. (MOV). [arxiv, 2022].

Rui Qian, Yeqing Li, Zheng Xu, Ming-Hsuan Yang, Serge Belongie, Yin Cui.

[Paper]

VideoCoCa: Video-Text Modeling with Zero-Shot Transfer from Contrastive Captioners. (Video-CoCa). [arxiv, 2022].

Shen Yan, Tao Zhu, Zirui Wang, Yuan Cao, Mi Zhang, Soham Ghosh, Yonghui Wu, Jiahui Yu.

[Paper]

Frozen in Time: A Joint Video and Image Encoder for End-to-End Retrieval. (Frozen). [ICCV, 2021].

Max Bain, Arsha Nagrani, Gül Varol, Andrew Zisserman.

[Paper] [Code]

ActionCLIP: A New Paradigm for Video Action Recognition. (ActionCLIP). [arxiv, 2021].

Mengmeng Wang, Jiazheng Xing, Yong Liu.

[Paper] [Code]

Improving Video-Text Retrieval by Multi-Stream Corpus Alignment and Dual Softmax Loss. (CAMoE). [arxiv, 2021].

Xing Cheng, Hezheng Lin, Xiangyu Wu, Fan Yang, Dong Shen.

[Paper]

End-to-End Learning of Visual Representations from Uncurated Instructional Videos. (MIL-NCE). [CVPR, 2020].

Antoine Miech, Jean-Baptiste Alayrac, Lucas Smaira, Ivan Laptev, Josef Sivic, Andrew Zisserman.

[Paper] [Code]

ControlVideo: Training-free Controllable Text-to-Video Generation. (ControlVideo). [ICLR, 2024].

Yabo Zhang, Yuxiang Wei, Dongsheng Jiang, Xiaopeng Zhang, Wangmeng Zuo, Qi Tian.

[Paper] [Code]

InternVid: A Large-scale Video-Text Dataset for Multimodal Understanding and Generation. (ViCLIP). [ICLR, 2024].

Yi Wang, Yinan He, Yizhuo Li, Kunchang Li, Jiashuo Yu, Xin Ma, Xinhao Li, Guo Chen, Xinyuan Chen, Yaohui Wang, Ping Luo, Ziwei Liu, Yali Wang, Limin Wang, Yu Qiao.

[Paper] [Code]

Mora: Enabling Generalist Video Generation via A Multi-Agent Framework. (MORA). [arxiv, 2024].

Zhengqing Yuan, Ruoxi Chen, Zhaoxu Li, Haolong Jia, Lifang He, Chi Wang, Lichao Sun.

[Paper] [Code]

Lumiere: A Space-Time Diffusion Model for Video Generation. (Lumiere). [arxiv, 2024].

Omer Bar-Tal, Hila Chefer, Omer Tov, Charles Herrmann, Roni Paiss, Shiran Zada, Ariel Ephrat, Junhwa Hur, Guanghui Liu, Amit Raj, Yuanzhen Li, Michael Rubinstein, Tomer Michaeli, Oliver Wang, Deqing Sun, Tali Dekel, Inbar Mosseri.

[Paper] [Demo]

VideoChat: Chat-Centric Video Understanding. (VideoChat). [arxiv, 2023].

KunChang Li, Yinan He, Yi Wang, Yizhuo Li, Wenhai Wang, Ping Luo, Yali Wang, Limin Wang, Yu Qiao.

[Paper] [Code]

Video generation models as world simulators. (SORA). [OpenAI, 2024].

OpenAI.

[Link]

All in One: Exploring Unified Video-Language Pre-training. (All-in-One). [CVPR, 2023].

Alex Jinpeng Wang, Yixiao Ge, Rui Yan, Yuying Ge, Xudong Lin, Guanyu Cai, Jianping Wu, Ying Shan, Xiaohu Qie, Mike Zheng Shou.

[Paper] [Code]

Clover: Towards A Unified Video-Language Alignment and Fusion Model. (Clover). [CVPR, 2023].

Jingjia Huang, Yinan Li, Jiashi Feng, Xinglong Wu, Xiaoshuai Sun, Rongrong Ji.

[Paper] [Code]

HierVL: Learning Hierarchical Video-Language Embeddings. (HierVL). [CVPR, 2023].

Kumar Ashutosh, Rohit Girdhar, Lorenzo Torresani, Kristen Grauman.

[Paper] [Code]

LAVENDER: Unifying Video-Language Understanding as Masked Language Modeling. (LAVENDER). [CVPR, 2023].

Linjie Li, Zhe Gan, Kevin Lin, Chung-Ching Lin, Zicheng Liu, Ce Liu, Lijuan Wang.

[Paper] [Code]

Learning Video Representations from Large Language Models. (LaViLa). [CVPR, 2023].

Yue Zhao, Ishan Misra, Philipp Krähenbühl, Rohit Girdhar.

[Paper] [Code]

Masked Video Distillation: Rethinking Masked Feature Modeling for Self-supervised Video Representation Learning. (MVD). [CVPR, 2023].

Rui Wang, Dongdong Chen, Zuxuan Wu, Yinpeng Chen, Xiyang Dai, Mengchen Liu, Lu Yuan, Yu-Gang Jiang.

[Paper] [Code]

Tell Me What Happened: Unifying Text-guided Video Completion via Multimodal Masked Video Generation. (MMVG). [CVPR, 2023].

Tsu-Jui Fu, Licheng Yu, Ning Zhang, Cheng-Yang Fu, Jong-Chyi Su, William Yang Wang, Sean Bell.

[Paper] [Code]

Test of Time: Instilling Video-Language Models with a Sense of Time. (TACT). [CVPR, 2023].

Piyush Bagad, Makarand Tapaswi, Cees G. M. Snoek.

[Paper] [Code]

VideoMAE V2: Scaling Video Masked Autoencoders with Dual Masking. (VideoMAEv2). [CVPR, 2023].

Limin Wang, Bingkun Huang, Zhiyu Zhao, Zhan Tong, Yinan He, Yi Wang, Yali Wang, Yu Qiao.

[Paper] [Code]

VindLU: A Recipe for Effective Video-and-Language Pretraining. (VindLU). [CVPR, 2023].

Feng Cheng, Xizi Wang, Jie Lei, David Crandall, Mohit Bansal, Gedas Bertasius.

[Paper] [Code]

An Empirical Study of End-to-End Video-Language Transformers with Masked Visual Modeling. (VIOLETv2). [CVPR, 2023].

Tsu-Jui Fu, Linjie Li, Zhe Gan, Kevin Lin, William Yang Wang, Lijuan Wang, Zicheng Liu.

[Paper] [Code]

Video-LLaMA: An Instruction-tuned Audio-Visual Language Model for Video Understanding. (Video-LLaMA). [EMNLP, 2023].

Hang Zhang, Xin Li, Lidong Bing.

[Paper] [Code]

Audiovisual Masked Autoencoders. (AudVis MAE). [ICCV, 2023].

Mariana-Iuliana Georgescu, Eduardo Fonseca, Radu Tudor Ionescu, Mario Lucic, Cordelia Schmid, Anurag Arnab.

[Paper]

FateZero: Fusing Attentions for Zero-shot Text-based Video Editing. (FateZero). [ICCV, 2023].

Chenyang Qi, Xiaodong Cun, Yong Zhang, Chenyang Lei, Xintao Wang, Ying Shan, Qifeng Chen.

[Paper] [Code]

HiTeA: Hierarchical Temporal-Aware Video-Language Pre-training. (HiTeA). [ICCV, 2023].

Qinghao Ye, Guohai Xu, Ming Yan, Haiyang Xu, Qi Qian, Ji Zhang, Fei Huang.

[Paper]

MGMAE: Motion Guided Masking for Video Masked Autoencoding. (MGMAE). [ICCV, 2023].

Bingkun Huang, Zhiyu Zhao, Guozhen Zhang, Yu Qiao, Limin Wang.

[Paper] [Code]

Progressive Spatio-Temporal Prototype Matching for Text-Video Retrieval. (ProST). [ICCV, 2023].

Pandeng Li, Chen-Wei Xie, Liming Zhao, Hongtao Xie, Jiannan Ge, Yun Zheng, Deli Zhao, Yongdong Zhang.

[Paper] [Code]

StableVideo: Text-driven Consistency-aware Diffusion Video Editing. (StableVideo). [ICCV, 2023].

Wenhao Chai, Xun Guo, Gaoang Wang, Yan Lu.

[Paper] [Code]

Verbs in Action: Improving verb understanding in video-language models. (VFC). [ICCV, 2023].

Liliane Momeni, Mathilde Caron, Arsha Nagrani, Andrew Zisserman, Cordelia Schmid.

[Paper] [Code]

Paxion: Patching Action Knowledge in Video-Language Foundation Models. (PAXION). [NeurIPS, 2023].

Zhenhailong Wang, Ansel Blume, Sha Li, Genglin Liu, Jaemin Cho, Zineng Tang, Mohit Bansal, Heng Ji.

[Paper] [Code]

Control-A-Video: Controllable Text-to-Video Generation with Diffusion Models. (Control-A-Video). [arxiv, 2023].

Weifeng Chen, Yatai Ji, Jie Wu, Hefeng Wu, Pan Xie, Jiashi Li, Xin Xia, Xuefeng Xiao, Liang Lin.

[Paper] [Code]

Dreamix: Video Diffusion Models are General Video Editors. (Dreamix). [arxiv, 2023].

Eyal Molad, Eliahu Horwitz, Dani Valevski, Alex Rav Acha, Yossi Matias, Yael Pritch, Yaniv Leviathan, Yedid Hoshen.

[Paper]

MuLTI: Efficient Video-and-Language Understanding with Text-Guided MultiWay-Sampler and Multiple Choice Modeling. (MuLTI). [arxiv, 2023].

Jiaqi Xu, Bo Liu, Yunkuo Chen, Mengli Cheng, Xing Shi.

[Paper]

Stable Video Diffusion: Scaling Latent Video Diffusion Models to Large Datasets. (SVD). [arxiv, 2023].

Andreas Blattmann, Tim Dockhorn, Sumith Kulal, Daniel Mendelevitch, Maciej Kilian, Dominik Lorenz, Yam Levi, Zion English, Vikram Voleti, Adam Letts, Varun Jampani, Robin Rombach.

[Paper] [Code]

Video-ChatGPT: Towards Detailed Video Understanding via Large Vision and Language Models. (Video-ChatGPT). [arxiv, 2023].

Muhammad Maaz, Hanoona Rasheed, Salman Khan, Fahad Shahbaz Khan.

[Paper] [Code]

VideoDirectorGPT: Consistent Multi-scene Video Generation via LLM-Guided Planning. (VideoDirectorGPT). [arxiv, 2023].

Han Lin, Abhay Zala, Jaemin Cho, Mohit Bansal.

[Paper] [Code]

Video-LLaVA: Learning United Visual Representation by Alignment Before Projection. (Video-LLaVA). [arxiv, 2023].

Bin Lin, Yang Ye, Bin Zhu, Jiaxi Cui, Munan Ning, Peng Jin, Li Yuan.

[Paper] [Code]

Advancing High-Resolution Video-Language Representation with Large-Scale Video Transcriptions. (HD-VILA). [CVPR, 2022].

Hongwei Xue, Tiankai Hang, Yanhong Zeng, Yuchong Sun, Bei Liu, Huan Yang, Jianlong Fu, Baining Guo.

[Paper] [Code]

Align and Prompt: Video-and-Language Pre-training with Entity Prompts. (ALPRO). [CVPR, 2022].

Dongxu Li, Junnan Li, Hongdong Li, Juan Carlos Niebles, Steven C.H. Hoi.

[Paper] [Code]

BEVT: BERT Pretraining of Video Transformers. (BEVT). [CVPR, 2022].

Rui Wang, Dongdong Chen, Zuxuan Wu, Yinpeng Chen, Xiyang Dai, Mengchen Liu, Yu-Gang Jiang, Luowei Zhou, Lu Yuan.

[Paper] [Code]

Bridging Video-text Retrieval with Multiple Choice Questions. (MCQ). [CVPR, 2022].

Yuying Ge, Yixiao Ge, Xihui Liu, Dian Li, Ying Shan, Xiaohu Qie, Ping Luo.

[Paper] [Code]

End-to-end Generative Pretraining for Multimodal Video Captioning. (MV-GPT). [CVPR, 2022].

Paul Hongsuck Seo, Arsha Nagrani, Anurag Arnab, Cordelia Schmid.

[Paper]

Object-aware Video-language Pre-training for Retrieval. (OA-Trans). [CVPR, 2022].

Alex Jinpeng Wang, Yixiao Ge, Guanyu Cai, Rui Yan, Xudong Lin, Ying Shan, Xiaohu Qie, Mike Zheng Shou.

[Paper] [Code]

SwinBERT: End-to-End Transformers with Sparse Attention for Video Captioning. (SwinBERT). [CVPR, 2022].

Kevin Lin, Linjie Li, Chung-Ching Lin, Faisal Ahmed, Zhe Gan, Zicheng Liu, Yumao Lu, Lijuan Wang.

[Paper] [Code]

MILES: Visual BERT Pre-training with Injected Language Semantics for Video-text Retrieval. (MILES). [ECCV, 2022].

Yuying Ge, Yixiao Ge, Xihui Liu, Alex Jinpeng Wang, Jianping Wu, Ying Shan, Xiaohu Qie, Ping Luo.

[Paper] [Code]

Long-Form Video-Language Pre-Training with Multimodal Temporal Contrastive Learning. (LF-VILA). [NeurIPS, 2022].

Yuchong Sun, Hongwei Xue, Ruihua Song, Bei Liu, Huan Yang, Jianlong Fu.

[Paper] [Code]

Text-Adaptive Multiple Visual Prototype Matching for Video-Text Retrieval. (TMVM). [NeurIPS, 2022].

Chengzhi Lin, Ancong Wu, Junwei Liang, Jun Zhang, Wenhang Ge, Wei-Shi Zheng, Chunhua Shen.

[Paper]

Masked Autoencoders As Spatiotemporal Learners. (ST-MAE). [NeurIPS, 2022].

Christoph Feichtenhofer, Haoqi Fan, Yanghao Li, Kaiming He.

[Paper] [Code]

VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training. (VideoMAE). [NeurIPS, 2022].

Zhan Tong, Yibing Song, Jue Wang, Limin Wang.

[Paper] [Code]

TVLT: Textless Vision-Language Transformer. (TVLT). [NeurIPS, 2022].

Zineng Tang, Jaemin Cho, Yixin Nie, Mohit Bansal.

[Paper] [Code]

It Takes Two: Masked Appearance-Motion Modeling for Self-supervised Video Transformer Pre-training. (MAM2). [arxiv, 2022].

Yuxin Song, Min Yang, Wenhao Wu, Dongliang He, Fu Li, Jingdong Wang.

[Paper]

Make-A-Video: Text-to-Video Generation without Text-Video Data. (Make-A-Video). [arxiv, 2022].

Uriel Singer, Adam Polyak, Thomas Hayes, Xi Yin, Jie An, Songyang Zhang, Qiyuan Hu, Harry Yang, Oron Ashual, Oran Gafni, Devi Parikh, Sonal Gupta, Yaniv Taigman.

[Paper]

SimVTP: Simple Video Text Pre-training with Masked Autoencoders. (SimVTP). [arxiv, 2022].

Yue Ma, Tianyu Yang, Yin Shan, Xiu Li.

[Paper] [Code]

VIOLET: End-to-End Video-Language Transformers with Masked Visual-token Modeling. (VIOLET). [arxiv, 2022].

Tsu-Jui Fu, Linjie Li, Zhe Gan, Kevin Lin, William Yang Wang, Lijuan Wang, Zicheng Liu.

[Paper] [Code]

VideoCLIP: Contrastive Pre-training for Zero-shot Video-Text Understanding. (VideoCLIP). [EMNLP, 2021].

Hu Xu, Gargi Ghosh, Po-Yao Huang, Dmytro Okhonko, Armen Aghajanyan, Florian Metze, Luke Zettlemoyer, Christoph Feichtenhofer.

[Paper] [Code]

Just Ask: Learning to Answer Questions from Millions of Narrated Videos. (Just-Ask). [ICCV, 2021].

Antoine Yang, Antoine Miech, Josef Sivic, Ivan Laptev, Cordelia Schmid.

[Paper] [Code]

VIMPAC: Video Pre-Training via Masked Token Prediction and Contrastive Learning. (VIMPAC). [arxiv, 2021].

Hao Tan, Jie Lei, Thomas Wolf, Mohit Bansal.

[Paper] [Code]

UniVL: A Unified Video and Language Pre-Training Model for Multimodal Understanding and Generation. (UniVL). [arxiv, 2020].

Huaishao Luo, Lei Ji, Botian Shi, Haoyang Huang, Nan Duan, Tianrui Li, Jason Li, Taroon Bharti, Ming Zhou.

[Paper] [Code]

Chat-UniVi: Unified Visual Representation Empowers Large Language Models with Image and Video Understanding. (Chat-UniVi). [CVPR, 2024].

Peng Jin, Ryuichi Takanobu, Wancai Zhang, Xiaochun Cao, Li Yuan.

[Paper] [Code]

General Object Foundation Model for Images and Videos at Scale. (GLEE). [CVPR, 2024].

Junfeng Wu, Yi Jiang, Qihao Liu, Zehuan Yuan, Xiang Bai, Song Bai.

[Paper] [Code]

LanguageBind: Extending Video-Language Pretraining to N-modality by Language-based Semantic Alignment. (LanguageBind). [ICLR, 2024].

Bin Zhu, Bin Lin, Munan Ning, Yang Yan, Jiaxi Cui, HongFa Wang, Yatian Pang, Wenhao Jiang, Junwu Zhang, Zongwei Li, Wancai Zhang, Zhifeng Li, Wei Liu, Li Yuan.

[Paper] [Code]

X2-VLM: All-In-One Pre-trained Model For Vision-Language Tasks. (X2-VLM). [TPAMI, 2024].

Yan Zeng, Xinsong Zhang, Hang Li, Jiawei Wang, Jipeng Zhang, Wangchunshu Zhou.

[Paper] [Code]

InternVideo2: Scaling Video Foundation Models for Multimodal Video Understanding. (InternVideo2). [arxiv, 2024].

Yi Wang, Kunchang Li, Xinhao Li, Jiashuo Yu, Yinan He, Guo Chen, Baoqi Pei, Rongkun Zheng, Jilan Xu, Zun Wang, Yansong Shi, Tianxiang Jiang, Songze Li, Hongjie Zhang, Yifei Huang, Yu Qiao, Yali Wang, Limin Wang.

[Paper] [Code]

Video-LaVIT: Unified Video-Language Pre-training with Decoupled Visual-Motional Tokenization. (Video-LaVIT). [arxiv, 2024].

Yang Jin, Zhicheng Sun, Kun Xu, Kun Xu, Liwei Chen, Hao Jiang, Quzhe Huang, Chengru Song, Yuliang Liu, Di Zhang, Yang Song, Kun Gai, Yadong Mu.

[Paper] [Code]

VideoPoet: A Large Language Model for Zero-Shot Video Generation. (VideoPoet). [arxiv, 2024].

Dan Kondratyuk, Lijun Yu, Xiuye Gu, José Lezama, Jonathan Huang, Grant Schindler, Rachel Hornung, Vighnesh Birodkar, Jimmy Yan, Ming-Chang Chiu, Krishna Somandepalli, Hassan Akbari, Yair Alon, Yong Cheng, Josh Dillon, Agrim Gupta, Meera Hahn, Anja Hauth, David Hendon, Alonso Martinez, David Minnen, Mikhail Sirotenko, Kihyuk Sohn, Xuan Yang, Hartwig Adam, Ming-Hsuan Yang, Irfan Essa, Huisheng Wang, David A. Ross, Bryan Seybold, Lu Jiang.

[Paper]

VideoPrism: A Foundational Visual Encoder for Video Understanding. (VideoPrism). [arxiv, 2024].

Long Zhao, Nitesh B. Gundavarapu, Liangzhe Yuan, Hao Zhou, Shen Yan, Jennifer J. Sun, Luke Friedman, Rui Qian, Tobias Weyand, Yue Zhao, Rachel Hornung, Florian Schroff, Ming-Hsuan Yang, David A. Ross, Huisheng Wang, Hartwig Adam, Mikhail Sirotenko, Ting Liu, Boqing Gong.

[Paper]

OmniMAE: Single Model Masked Pretraining on Images and Videos. (OmniMAE). [CVPR, 2023].

Rohit Girdhar, Alaaeldin El-Nouby, Mannat Singh, Kalyan Vasudev Alwala, Armand Joulin, Ishan Misra.

[Paper] [Code]

SMAUG: Sparse Masked Autoencoder for Efficient Video-Language Pre-training. (SMAUG). [ICCV, 2023].

Yuanze Lin, Chen Wei, Huiyu Wang, Alan Yuille, Cihang Xie.

[Paper]

mPLUG-2: A Modularized Multi-modal Foundation Model Across Text, Image and Video. (mPLUG-2). [ICML, 2023].

Haiyang Xu, Qinghao Ye, Ming Yan, Yaya Shi, Jiabo Ye, Yuanhong Xu, Chenliang Li, Bin Bi, Qi Qian, Wei Wang, Guohai Xu, Ji Zhang, Songfang Huang, Fei Huang, Jingren Zhou.

[Paper] [Code]

Contrastive Audio-Visual Masked Autoencoder. (CAV-MAE). [ICLR, 2023].

Yuan Gong, Andrew Rouditchenko, Alexander H. Liu, David Harwath, Leonid Karlinsky, Hilde Kuehne, James Glass.

[Paper] [Code]

VAST: A Vision-Audio-Subtitle-Text Omni-Modality Foundation Model and Dataset. (VAST). [NeurIPS, 2023].

Sihan Chen, Handong Li, Qunbo Wang, Zijia Zhao, Mingzhen Sun, Xinxin Zhu, Jing Liu.

[Paper] [Code]

Perceiver-VL: Efficient Vision-and-Language Modeling with Iterative Latent Attention. (Perceiver-VL). [WACV, 2023].

Zineng Tang, Jaemin Cho, Jie Lei, Mohit Bansal.

[Paper] [Code]

Fine-grained Audio-Visual Joint Representations for Multimodal Large Language Models. (FAVOR). [arxiv, 2023].

Guangzhi Sun, Wenyi Yu, Changli Tang, Xianzhao Chen, Tian Tan, Wei Li, Lu Lu, Zejun Ma, Chao Zhang.

[Paper] [Code]

Macaw-LLM: Multi-Modal Language Modeling with Image, Audio, Video, and Text Integration. (Macaw-LLM). [arxiv, 2023].

Chenyang Lyu, Minghao Wu, Longyue Wang, Xinting Huang, Bingshuai Liu, Zefeng Du, Shuming Shi, Zhaopeng Tu.

[Paper] [Code]

PG-Video-LLaVA: Pixel Grounding Large Video-Language Models. (PG-Video-LLaVA). [arxiv, 2023].

Shehan Munasinghe, Rusiru Thushara, Muhammad Maaz, Hanoona Abdul Rasheed, Salman Khan, Mubarak Shah, Fahad Khan.

[Paper] [Code]

ViC-MAE: Self-Supervised Representation Learning from Images and Video with Contrastive Masked Autoencoders. (ViC-MAE). [arxiv, 2023].

Jefferson Hernandez, Ruben Villegas, Vicente Ordonez.

[Paper]

VALOR: Vision-Audio-Language Omni-Perception Pretraining Model and Dataset. (VALOR). [arxiv, 2023].

Sihan Chen, Xingjian He, Longteng Guo, Xinxin Zhu, Weining Wang, Jinhui Tang, Jing Liu.

[Paper] [Code]

Masked Feature Prediction for Self-Supervised Visual Pre-Training. (MaskFeat). [CVPR, 2022].

Chen Wei, Haoqi Fan, Saining Xie, Chao-Yuan Wu, Alan Yuille, Christoph Feichtenhofer.

[Paper] [Code]

OmniVL: One Foundation Model for Image-Language and Video-Language Tasks. (OmniVL). [NeurIPS, 2022].

Junke Wang, Dongdong Chen, Zuxuan Wu, Chong Luo, Luowei Zhou, Yucheng Zhao, Yujia Xie, Ce Liu, Yu-Gang Jiang, Lu Yuan.

[Paper]

InternVideo: General Video Foundation Models via Generative and Discriminative Learning. (InternVideo). [arxiv, 2022].

Yi Wang, Kunchang Li, Yizhuo Li, Yinan He, Bingkun Huang, Zhiyu Zhao, Hongjie Zhang, Jilan Xu, Yi Liu, Zun Wang, Sen Xing, Guo Chen, Junting Pan, Jiashuo Yu, Yali Wang, Limin Wang, Yu Qiao.

[Paper] [Code]

Self-supervised video pretraining yields human-aligned visual representations. (VITO). [arxiv, 2022].

Nikhil Parthasarathy, S. M. Ali Eslami, João Carreira, Olivier J. Hénaff.

[Paper]

VLM: Task-agnostic Video-Language Model Pre-training for Video Understanding. (VLM). [ACL, 2021].

Hu Xu, Gargi Ghosh, Po-Yao Huang, Prahal Arora, Masoumeh Aminzadeh, Christoph Feichtenhofer, Florian Metze, Luke Zettlemoyer.

[Paper] [Code]

MERLOT: Multimodal Neural Script Knowledge Models. (MERLOT). [NeurIPS, 2021].

Rowan Zellers, Ximing Lu, Jack Hessel, Youngjae Yu, Jae Sung Park, Jize Cao, Ali Farhadi, Yejin Choi.

[Paper] [Code]

VATT: Transformers for Multimodal Self-Supervised Learning from Raw Video, Audio and Text. (VATT). [NeurIPS, 2021].

Hassan Akbari, Liangzhe Yuan, Rui Qian, Wei-Hong Chuang, Shih-Fu Chang, Yin Cui, Boqing Gong.

[Paper] [Code]