Ruojin Cai¹, Joseph Tung¹, Qianqian Wang¹, Hadar Averbuch-Elor², Bharath Hariharan¹, Noah Snavely¹

¹Cornell University, ²Tel Aviv University

ICCV 2023 Oral

Project Page | Paper | Arxiv | Dataset

Implementation of the paper "Doppelgangers: Learning to Disambiguate Images of Similar Structures", which proposes a learning-based approach to disambiguate distinct yet visually similar image pairs (doppelgangers) and applies it to structure-from-motion disambiguation.

- Create a Conda environment with Python 3.8, PyTorch 1.12.0, and CUDA 10.2 using the following commands:

conda env create -f ./environment.yml

conda activate doppelgangers

- Download COLMAP from its installation page (version 3.8+).

- A pretrained model is available at the following link. To use the pretrained model, download the checkpoint.tar.gz file, extract it, and put doppelgangers_classifier_loftr.pt under the ./weights/ folder.

cd weights/
wget -c https://doppelgangers.cs.cornell.edu/dataset/checkpoint.tar.gz
tar -xf checkpoint.tar.gz
mv doppelgangers/checkpoints/doppelgangers_classifier_loftr.pt ./
rm checkpoint.tar.gz
rm -r doppelgangers/

- We use LoFTR models for feature matching. Please download the LoFTR outdoor checkpoint outdoor_ds.ckpt from the following Google Drive link, and put it under the ./weights/ folder.

This section contains download links for several helpful datasets:

- SfM Disambiguation: Download the Structure from Motion disambiguation dataset from the SfM disambiguation with COLMAP GitHub repository. Unzip the file under the folder ./data/sfm_disambiguation/.

- Pairwise Visual Disambiguation: Download the Doppelgangers Dataset by following these instructions and put the dataset under the folder ./data/doppelgangers_dataset/.
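Before running anything, it can help to confirm that the weights and datasets ended up where the instructions above place them. The following is only a small convenience sketch, not part of the repository: the helper name `missing_paths` is hypothetical, and the list of paths is taken from the setup text above.

```python
from pathlib import Path

# Locations named in the setup instructions above.
EXPECTED = (
    "weights/doppelgangers_classifier_loftr.pt",
    "weights/outdoor_ds.ckpt",
    "data/sfm_disambiguation",
    "data/doppelgangers_dataset",
)


def missing_paths(repo_root="."):
    """Return the expected files/folders that do not exist under repo_root."""
    root = Path(repo_root)
    return [p for p in EXPECTED if not (root / p).exists()]


# Hypothetical usage: run from the repository root.
missing = missing_paths(".")
if missing:
    print("Missing:", ", ".join(missing))
else:
    print("All weights and dataset folders are in place.")
```

An empty result means every path listed in the setup steps was found.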

- We begin by performing COLMAP feature extraction and matching, which generates a list of image pairs.

- Next, we run Doppelgangers classifiers on these image pairs, with LoFTR matches as input.

- We then remove image pairs from the COLMAP database if they have a predicted probability below the specified threshold. These pairs are more likely to be Doppelgangers.

- Finally, we perform COLMAP reconstruction with the pruned database.
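The pruning step above can be sketched as follows. This is a minimal illustration under stated assumptions, not the repository's implementation: it assumes classifier probabilities are available as a dictionary keyed by image-ID pairs, it uses COLMAP's documented `pair_id` encoding, and it uses a simplified stand-in for the `two_view_geometries` table (the real table has more columns). The function names are hypothetical.

```python
import sqlite3

MAX_IMAGE_ID = 2**31 - 1  # constant used by COLMAP's pair_id encoding


def image_ids_to_pair_id(image_id1, image_id2):
    """Encode an unordered image pair the way COLMAP's database does."""
    if image_id1 > image_id2:
        image_id1, image_id2 = image_id2, image_id1
    return image_id1 * MAX_IMAGE_ID + image_id2


def pairs_to_remove(pair_probs, threshold=0.8):
    """Pairs whose predicted probability is below the threshold."""
    return [pair for pair, prob in pair_probs.items() if prob < threshold]


def prune_database(conn, pairs):
    """Delete the given pairs from the (simplified) two_view_geometries table."""
    ids = [(image_ids_to_pair_id(a, b),) for a, b in pairs]
    conn.executemany("DELETE FROM two_view_geometries WHERE pair_id = ?", ids)
    conn.commit()


# Toy example: an in-memory stand-in for a COLMAP database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE two_view_geometries (pair_id INTEGER PRIMARY KEY)")
probs = {(1, 2): 0.95, (1, 3): 0.10, (2, 3): 0.85}  # made-up probabilities
conn.executemany(
    "INSERT INTO two_view_geometries VALUES (?)",
    [(image_ids_to_pair_id(a, b),) for a, b in probs],
)

removed = pairs_to_remove(probs, threshold=0.8)
prune_database(conn, removed)
remaining = conn.execute("SELECT COUNT(*) FROM two_view_geometries").fetchone()[0]
print(removed, remaining)
```

On a real database, work on a copy so that the original matching results remain available for comparison.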

We provide a demo on the Cup dataset to demonstrate how to use our Doppelgangers classifier in Structure from Motion disambiguation with COLMAP: ./notebook/demo_sfm_disambiguation.ipynb.

We provide a script for SfM disambiguation. The COLMAP reconstruction with Doppelgangers classifier can be found at [output_path]/sparse_doppelgangers_[threshold]/.

# Usage:

# python script_sfm_disambiguation.py [path/to/example_config] --input_image_path [path/to/dataset] --output_path [path/to/output]

python script_sfm_disambiguation.py doppelgangers/configs/test_configs/sfm_disambiguation_example.yaml \

--colmap_exe_command colmap \

--input_image_path data/sfm_disambiguation/yan2017/cup/images \

--output_path results/cup/ \

--threshold 0.8 \

--pretrained weights/doppelgangers_classifier_loftr.pt

Details of the Arguments:

- To apply the Doppelgangers classifier on custom datasets, change the argument --input_image_path [path/to/dataset] to the dataset path accordingly, and set the path for output results using the argument --output_path [path/to/output].

- If you have already completed the COLMAP feature extraction and matching stage, you can skip this stage with the --skip_feature_matching argument, and specify the path to the database.db file using the argument --database_path [path/to/database.db].

Example:

python script_sfm_disambiguation.py doppelgangers/configs/test_configs/sfm_disambiguation_example.yaml \
--colmap_exe_command colmap \
--input_image_path data/sfm_disambiguation/yan2017/cup/images \
--output_path results/cup/ \
--skip_feature_matching \
--database_path results/cup/database.db

- Use the argument --skip_reconstruction to skip the standard COLMAP reconstruction without the Doppelgangers classifier.

- Change the doppelgangers threshold with the argument --threshold and specify a value between 0 and 1. A smaller threshold includes more pairs, while a larger threshold filters out more pairs. The default threshold is 0.8. When the reconstruction is split into several components, consider using a smaller threshold. If the reconstruction is not completely disambiguated, consider using a larger threshold.

- A pretrained model can be specified with the argument --pretrained [path/to/checkpoint].
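Since the right threshold depends on the scene, one may want to try a few values in turn. The sketch below only assembles command lines from the flags documented above and prints them; it does not run anything. The helper `build_command` is hypothetical, the paths are those of the Cup example, and reusing results/cup/database.db on later runs assumes the first run wrote its database there, as in the example above.

```python
import shlex


def build_command(threshold, skip_feature_matching=False):
    """Assemble a script_sfm_disambiguation.py call using the documented flags."""
    cmd = [
        "python", "script_sfm_disambiguation.py",
        "doppelgangers/configs/test_configs/sfm_disambiguation_example.yaml",
        "--colmap_exe_command", "colmap",
        "--input_image_path", "data/sfm_disambiguation/yan2017/cup/images",
        "--output_path", "results/cup/",
        "--threshold", str(threshold),
        "--pretrained", "weights/doppelgangers_classifier_loftr.pt",
    ]
    if skip_feature_matching:
        # Assumption: an earlier run already produced this database.
        cmd += ["--skip_feature_matching", "--database_path", "results/cup/database.db"]
    return cmd


# First run does feature matching; later runs reuse the database.
commands = [
    build_command(t, skip_feature_matching=(i > 0))
    for i, t in enumerate([0.8, 0.7, 0.9])
]
for cmd in commands:
    print(shlex.join(cmd))
```

Each printed line can be pasted into a shell or passed to `subprocess.run` as a list. The reconstructions for different thresholds land in separate [output_path]/sparse_doppelgangers_[threshold]/ folders.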

COLMAP reconstructions of test scenes with and without the Doppelgangers classifier, as described in the paper: reconstructions.tar.gz (3G)

These reconstructions can be imported and visualized in the COLMAP GUI.

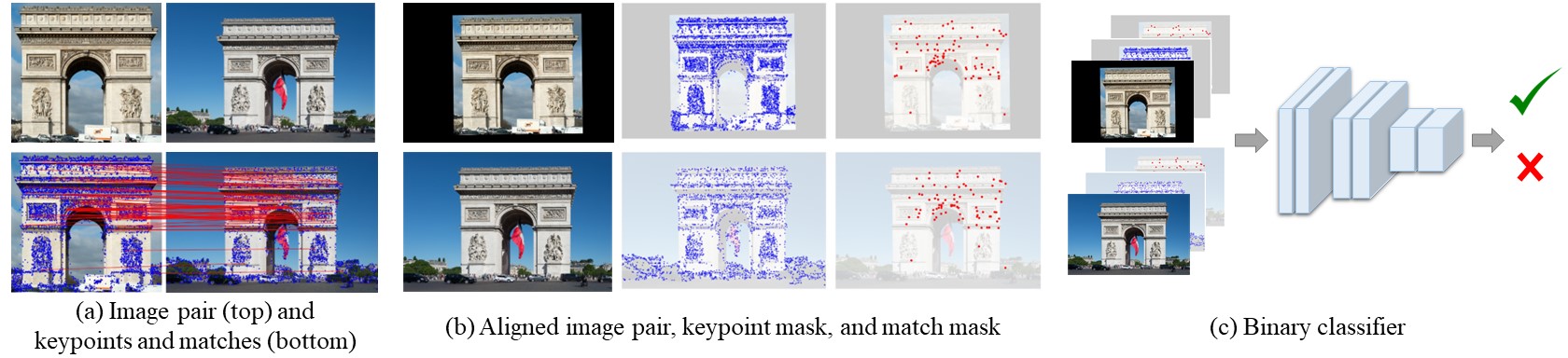

(a) Given a pair of images, we extract keypoints and matches via feature matching methods. Note that this is a negative (doppelganger) pair picturing opposite sides of the Arc de Triomphe. The feature matches are primarily in the top part of the structure, where there are repeated elements, as opposed to the sculptures on the bottom part. (b) We create binary masks of keypoints and matches. We then align the image pair and masks with an affine transformation estimated from matches. (c) Our classifier takes the concatenation of the images and binary masks as input and outputs the probability that the given pair is positive.
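Steps (b) and (c) can be illustrated with a small NumPy sketch that rasterizes keypoints and matches into binary masks and stacks them with the two images. It is an illustration under assumptions rather than the repository's code: the function names, the channel ordering, and the (x, y) keypoint layout are hypothetical, both images are assumed to share one size, and the affine alignment step is omitted.

```python
import numpy as np


def keypoint_mask(points, height, width):
    """Binary mask with 1.0 at each (x, y) point, rounded to the nearest pixel."""
    mask = np.zeros((height, width), dtype=np.float32)
    pts = np.round(np.asarray(points, dtype=np.float64)).astype(int).reshape(-1, 2)
    x = np.clip(pts[:, 0], 0, width - 1)
    y = np.clip(pts[:, 1], 0, height - 1)
    mask[y, x] = 1.0
    return mask


def build_input(img0, img1, kpts0, kpts1, match_idx):
    """Stack two HxWx3 images with keypoint and match masks, channels first."""
    h, w = img0.shape[:2]
    masks = [
        keypoint_mask(kpts0, h, w),                   # all keypoints, image 0
        keypoint_mask(kpts1, h, w),                   # all keypoints, image 1
        keypoint_mask(kpts0[match_idx[:, 0]], h, w),  # matched keypoints, image 0
        keypoint_mask(kpts1[match_idx[:, 1]], h, w),  # matched keypoints, image 1
    ]
    channels = [img0.transpose(2, 0, 1), img1.transpose(2, 0, 1)]
    channels += [m[None] for m in masks]
    return np.concatenate(channels, axis=0)


# Toy data: two 4x6 RGB images, a few keypoints, two matches.
rng = np.random.default_rng(0)
img0 = rng.random((4, 6, 3), dtype=np.float32)
img1 = rng.random((4, 6, 3), dtype=np.float32)
kpts0 = np.array([[0, 0], [5, 3], [2, 1]], dtype=np.float32)  # (x, y)
kpts1 = np.array([[1, 1], [4, 2]], dtype=np.float32)
matches = np.array([[0, 1], [2, 0]])  # rows of (index into kpts0, index into kpts1)

x = build_input(img0, img1, kpts0, kpts1, matches)
print(x.shape)
```

In this layout the classifier input has three channels per image plus four mask channels.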

# Usage:

# python test.py [path/to/config] --pretrained [path/to/checkpoint]

python test.py doppelgangers/configs/training_configs/doppelgangers_classifier_noflip.yaml \

--pretrained weights/doppelgangers_classifier_loftr.pt

# Usage:

# python train.py [path/to/config]

# python train_multi_gpu.py [path/to/config]

python train.py doppelgangers/configs/training_configs/doppelgangers_classifier_noflip.yaml

# training with multiple gpus on Doppelgangers dataset with image flip augmentation

python train_multi_gpu.py doppelgangers/configs/training_configs/doppelgangers_classifier_flip.yaml

@inproceedings{cai2023doppelgangers,

title = {Doppelgangers: Learning to Disambiguate Images of Similar Structures},

author = {Cai, Ruojin and Tung, Joseph and Wang, Qianqian and Averbuch-Elor, Hadar and Hariharan, Bharath and Snavely, Noah},

journal = {ICCV},

year = {2023}

}