In this work, we present a lightweight pipeline for robust behavioral cloning of a human driver using end-to-end imitation learning. The proposed pipeline was employed to train and deploy three distinct driving behavior models onto a simulated vehicle.

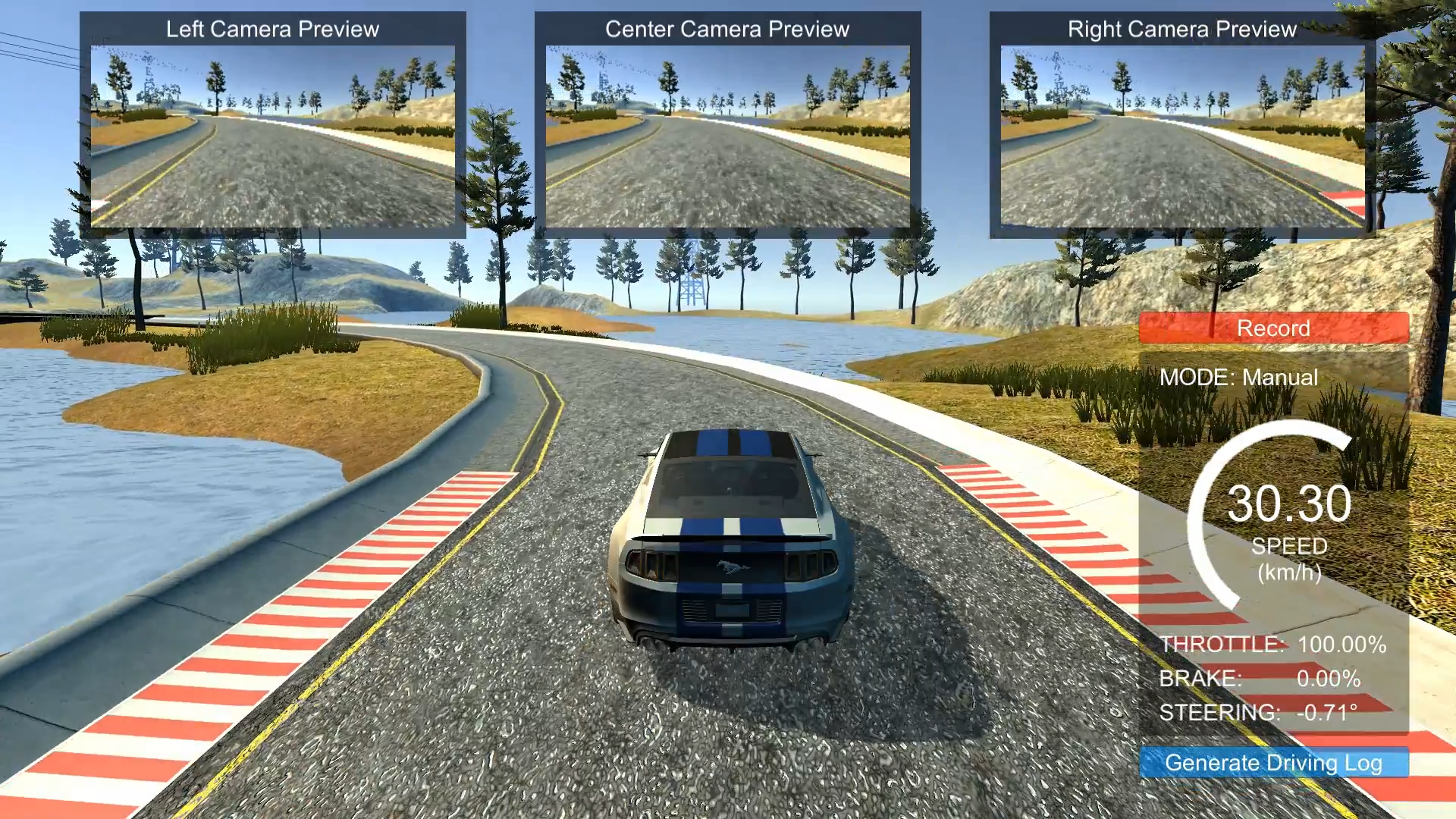

The simulation system employed for validating the proposed pipeline was a rework of an open-source simulator developed by Udacity. The source files of our Behavioral Cloning Simulator can be found here.

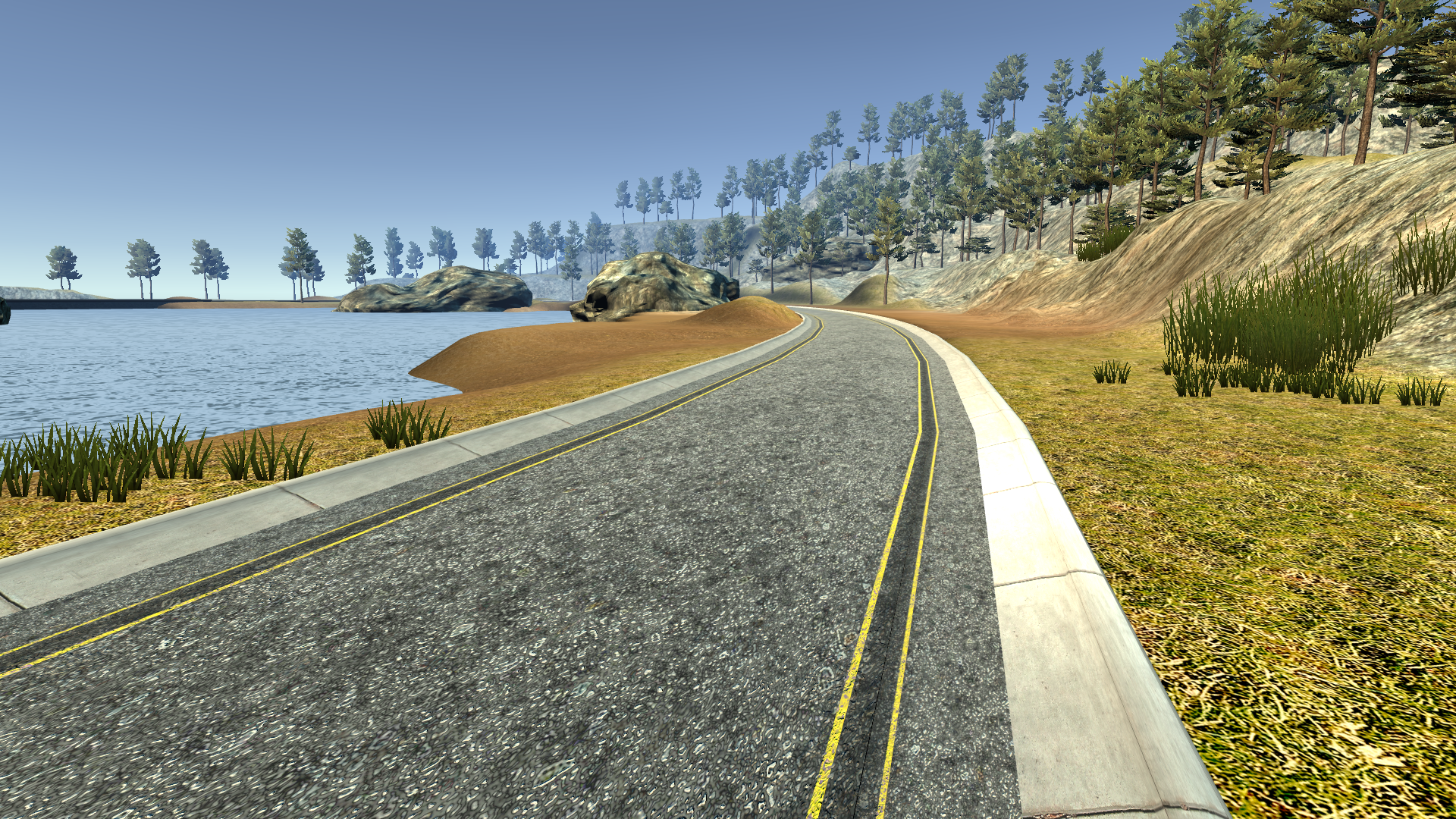

The simulator currently supports two tracks, viz. Lake Track and Mountain Track, for training and testing three different driving behaviors.

| Lake Track | Mountain Track |
|---|---|

The Lake Track is used for training and testing simplistic driving and collision avoidance behaviors, while the Mountain Track is used for training and testing rigorous driving behavior.

| Simplistic Driving Scenario | Rigorous Driving Scenario | Collision Avoidance Scenario |
|---|---|---|

| Experiment | Parameter | Original Value | Variation |
|---|---|---|---|
| No variation | None | NA | NA |
| Scene obstacle variation | Obstacles | 0 | {0, 10, 20} |
| Scene light intensity variation | Light intensity | 1.6 cd | ±0.1 cd |
| Scene light direction variation | Light direction (X-axis) | 42.218 deg | ±1 deg |
| Vehicle position variation | Vehicle pose | Pos: 179.81, 1.8, 89.86 m<br>Rot: 0, 7.103, 0 deg | Pos: -40.62, 1.8, 108.73 m<br>Rot: 0, 236.078, 0 deg |
| Vehicle orientation variation | Vehicle orientation (Y-axis) | 7.103 deg | ±5 deg |
| Vehicle heading inversion | Vehicle pose | Pos: 179.81, 1.8, 89.86 m<br>Rot: 0, 7.103, 0 deg | Pos: 179.81, 1.8, 89.86 m<br>Rot: 0, 187.103, 0 deg |
| Vehicle speed limit variation | Speed limit | Python script: 25 km/h<br>Unity editor: 30 km/h | +5 km/h (same in Python script and Unity editor) |

| Experiment | Parameter | Original Value | Variation Step Size |
|---|---|---|---|
| No variation | None | NA | NA |
| Scene obstacle variation | Obstacles | NA | NA |
| Scene light intensity variation | Light intensity | 1.0 cd | ±0.1 cd |
| Scene light direction variation | Light direction (X-axis) | 50 deg | ±1 deg |
| Vehicle position variation | Vehicle pose | Pos: 170.62, -79.6, -46.36 m<br>Rot: 0, 90, 0 deg | Pos: 170.62, -79.6, -56.36 m<br>Rot: 0, 90, 0 deg |
| Vehicle orientation variation | Vehicle orientation (Y-axis) | 90 deg | ±5 deg |
| Vehicle heading inversion | Vehicle pose | Pos: 170.62, -79.6, -46.36 m<br>Rot: 0, 90, 0 deg | Pos: 170.62, -79.6, -41.86 m<br>Rot: 0, 270, 0 deg |
| Vehicle speed limit variation | Speed limit | Python script: 25 km/h<br>Unity editor: 30 km/h | +5 km/h (same in Python script and Unity editor) |
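
The variation values in the two tables above follow a simple pattern: most parameters are perturbed symmetrically about their original value by a fixed step, and the yaw component of the heading inversion adds 180 deg (7.103 deg becomes 187.103 deg, 90 deg becomes 270 deg). The Python sketch below reproduces those values; the helper names `sweep` and `invert_heading` and the number of steps `n` are hypothetical and are not part of this repository.

```python
def sweep(original, step, n):
    """Return original + k*step for k = -n..n (hypothetical helper).

    Values are rounded to 6 decimals to hide floating-point noise.
    """
    return [round(original + k * step, 6) for k in range(-n, n + 1)]


def invert_heading(yaw_deg):
    """Reverse the vehicle heading by adding 180 deg to the yaw, wrapped to [0, 360)."""
    return round((yaw_deg + 180.0) % 360.0, 6)


# Light intensity on the first table: 1.6 cd with a 0.1 cd step
print(sweep(1.6, 0.1, 1))      # one step either side of the original value
# Vehicle orientation: 7.103 deg with a 5 deg step
print(sweep(7.103, 5, 1))
# Heading inversion: 7.103 deg -> 187.103 deg
print(invert_heading(7.103))
```

Keeping the original value in the middle of the sweep makes the "No variation" baseline part of every test series.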

Pre-recorded datasets are available within this repository. They can be found in each of the following four directories:

- Simplistic Driving Behavior

- Rigorous Driving Behaviour

- Collision Avoidance Behaviour

- NVIDIA's Approach vs Our Approach

To record your own dataset, follow these steps:

- Launch the Behavioral Cloning Simulator (available here).

- Select the track (Lake Track or Mountain Track).

- Select Training Mode.

- Click the Record button the first time to select the directory in which to store the recorded dataset.

- Click the Record button a second time to start recording the dataset.

- Drive the vehicle manually (click the Controls button in the Main Menu to get acquainted with the manual controls).

- Click the Recording button to stop recording the dataset. The button will show the recording progress.
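
Once a dataset has been recorded, it has to be read back into Python for training. The sketch below assumes that, like the original Udacity simulator, the reworked simulator writes a `driving_log.csv` file whose rows hold the center, left and right image paths followed by steering, throttle, brake and speed; that file name and column order are assumptions, so check them against your own recording before relying on this parser.

```python
import csv
from dataclasses import dataclass


@dataclass
class Sample:
    """One driving-log row (column layout ASSUMED from Udacity's simulator)."""
    center: str
    left: str
    right: str
    steering: float
    throttle: float
    brake: float
    speed: float


def parse_driving_log(lines):
    """Parse an iterable of CSV lines into Sample objects, skipping blank or non-numeric rows."""
    samples = []
    for row in csv.reader(lines):
        if len(row) < 7:
            continue  # blank or truncated line
        try:
            steering, throttle, brake, speed = (float(v) for v in row[3:7])
        except ValueError:
            continue  # e.g. a header row
        center, left, right = (p.strip() for p in row[:3])
        samples.append(Sample(center, left, right, steering, throttle, brake, speed))
    return samples


def load_driving_log(path):
    """Read a driving-log CSV file from disk (file name is an assumption)."""
    with open(path, newline="") as f:
        return parse_driving_log(f)
```

Separating parsing from file access lets the same function be tested on in-memory strings, for example `parse_driving_log(["IMG/c.jpg, IMG/l.jpg, IMG/r.jpg, 0.25, 0.8, 0, 24.9"])`.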

The training pipeline is scripted in Python3 and is implemented as a Jupyter notebook (IPYNB). It comprises data balancing, augmentation, preprocessing, training a neural network, and saving the trained model.
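
Data balancing and augmentation matter because manual driving logs are dominated by near-zero steering angles. The sketch below shows one common way to handle both, not necessarily the one used in Training.ipynb: samples are binned by steering angle and each bin is capped at a random subset, and a horizontal flip mirrors the image while negating its steering label. It assumes samples are `(image, steering)` tuples with steering normalized to [-1, 1]; `num_bins`, `max_per_bin` and `seed` are hypothetical defaults.

```python
import random
from collections import defaultdict


def balance_by_steering(samples, num_bins=25, max_per_bin=400, seed=0):
    """Cap the number of (image, steering) samples in each steering-angle bin.

    Steering angles are assumed to be normalized to [-1, 1]; values outside
    that range are clipped before binning.
    """
    rng = random.Random(seed)
    bins = defaultdict(list)
    for sample in samples:
        angle = max(-1.0, min(1.0, sample[1]))
        idx = min(int((angle + 1.0) / 2.0 * num_bins), num_bins - 1)
        bins[idx].append(sample)
    balanced = []
    for idx in sorted(bins):
        group = bins[idx]
        if len(group) > max_per_bin:
            group = rng.sample(group, max_per_bin)  # random subset without replacement
        balanced.extend(group)
    return balanced


def flip_horizontally(image, steering):
    """Mirror an image (a list of pixel rows) left-right and negate its steering label."""
    return [row[::-1] for row in image], -steering
```

Capping rather than deleting whole bins keeps some straight-line driving in the dataset, while flipping doubles the number of turns in each direction.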

The Training.ipynb file in each of the four directories implements the respective training pipeline, and the trained models are also available in the respective directories as H5 files.

The analysis pipeline is scripted in Python3 and is implemented as a Jupyter notebook (IPYNB). It comprises loading the data for ~1 lap, loading the trained model, and using it to visualize the convolution filters, activation maps and steering angle predictions.
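
Alongside plotting the predicted steering angles over a lap, it can help to summarize how far they deviate from the recorded ones. The sketch below is an assumed complement to the analysis pipeline, not part of Prediction.ipynb: it computes the root-mean-square error and the maximum absolute error between two equal-length steering sequences; the function name `steering_errors` is hypothetical.

```python
import math


def steering_errors(ground_truth, predicted):
    """Return (RMSE, max absolute error) between recorded and predicted steering angles."""
    if not ground_truth or len(ground_truth) != len(predicted):
        raise ValueError("expected two non-empty sequences of equal length")
    diffs = [g - p for g, p in zip(ground_truth, predicted)]
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    max_abs = max(abs(d) for d in diffs)
    return rmse, max_abs
```

RMSE summarizes overall tracking quality, while the maximum error flags the single worst frame, which is often the one worth inspecting in the activation maps.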

The Prediction.ipynb file in each of the four directories implements the respective analysis pipeline, and the results are stored in the Results directory within the respective directories.

The deployment pipeline is scripted in Python3 and is implemented as a plain Python script (rather than a notebook) to enable command-line execution. It implements a WebSocket interface with the Behavioral Cloning Simulator to acquire the live camera feed from the simulated camera on board the vehicle. It then applies the same preprocessing pipeline adopted during the training phase to preprocess the camera frames in real time. It also loads the trained model to predict the lateral control command (i.e., steering) from each preprocessed frame. Next, it applies an adaptive longitudinal control law to compute the longitudinal control commands (i.e., throttle and brake) based on the predicted steering angle, the actual vehicle speed, and the prescribed speed and steering limits. Finally, it sends the control commands to the simulator to drive the vehicle autonomously.

The Drive.py file in each of the four directories implements the respective deployment pipeline. It can also be used to measure the deployment latency.
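
Deployment latency is typically measured by timing the per-frame work between receiving a camera frame and producing a command. The sketch below is an assumed approach, not the instrumentation in Drive.py: it times any callable `step` (a hypothetical stand-in for preprocessing plus model prediction) with `time.perf_counter`, discarding a few warm-up calls so that one-time initialization does not skew the result.

```python
import statistics
import time


def measure_latency(step, inputs, warmup=5):
    """Time step(x) for each x in inputs and return per-call latencies in milliseconds."""
    inputs = list(inputs)
    for x in inputs[:warmup]:
        step(x)  # warm-up calls, excluded from the timing
    latencies = []
    for x in inputs:
        start = time.perf_counter()
        step(x)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def summarize(latencies):
    """Mean and worst-case latency in milliseconds."""
    return {"mean_ms": statistics.mean(latencies), "max_ms": max(latencies)}


# Usage with a trivial stand-in for "preprocess + predict"
print(summarize(measure_latency(lambda frame: sum(frame), [[1, 2, 3]] * 20)))
```

Reporting the worst case alongside the mean is useful because a single slow frame can be enough to miss a turn at speed.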

Note: Please make sure to modify the Preprocessing Pipeline and NN Model appropriately before using this Python script to deploy any other driving behavior.
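
Because the deployment script must reproduce the training-time preprocessing exactly, one way to keep the two in sync is to define preprocessing as a single function used in both places. The NumPy sketch below assumes an H x W x 3 RGB `uint8` frame, crops rows from the top and bottom (for example, sky and vehicle hood), resizes with nearest-neighbour sampling and scales pixels to [-1, 1]. The crop margins and the 66 x 200 output size are hypothetical placeholders, not the values used in this repository.

```python
import numpy as np

# HYPOTHETICAL values: match these to the input layer of your trained model
CROP_TOP, CROP_BOTTOM = 60, 25
OUT_H, OUT_W = 66, 200


def preprocess(frame):
    """Map an H x W x 3 uint8 RGB frame to an OUT_H x OUT_W x 3 float32 array in [-1, 1]."""
    h = frame.shape[0]
    cropped = frame[CROP_TOP:h - CROP_BOTTOM]
    # Nearest-neighbour resize by sampling row/column indices (avoids an OpenCV dependency)
    rows = np.arange(OUT_H) * cropped.shape[0] // OUT_H
    cols = np.arange(OUT_W) * cropped.shape[1] // OUT_W
    resized = cropped[rows][:, cols]
    return resized.astype(np.float32) / 127.5 - 1.0
```

If a different driving behavior needs a different crop or input size, changing these constants in one place keeps training and deployment consistent.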

Use the Generate Driving Log button in Training Mode and/or Deployment Mode to log the following data within the Unity Editor:

- Vehicle Position X-Coordinate

- Vehicle Position Z-Coordinate

- Throttle Command

- Brake Command

- Steering Command

- Vehicle Speed
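
The logged fields are enough to compute simple field metrics before plotting them. The sketch below assumes the log is a CSV file whose columns appear in the order listed above (X, Z, throttle, brake, steering, speed) with an optional header row; that format is an assumption, so verify it against a generated log. It computes the distance travelled in the X-Z plane and the mean vehicle speed; the helper names are hypothetical.

```python
import csv
import math


def parse_field_log(lines):
    """Return rows of (x, z, throttle, brake, steering, speed) floats; non-numeric rows are skipped."""
    rows = []
    for row in csv.reader(lines):
        if len(row) < 6:
            continue  # blank or truncated line
        try:
            rows.append(tuple(float(v) for v in row[:6]))
        except ValueError:
            continue  # e.g. a header row
    return rows


def path_length(rows):
    """Distance travelled in the X-Z (ground) plane, summed over consecutive samples."""
    return sum(math.hypot(b[0] - a[0], b[1] - a[1]) for a, b in zip(rows, rows[1:]))


def mean_speed(rows):
    """Average of the vehicle-speed column (0.0 for an empty log)."""
    return sum(r[5] for r in rows) / len(rows) if rows else 0.0
```

Comparing these numbers between Training Mode (human driver) and Deployment Mode (trained model) gives a quick sanity check before generating the full plots.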

The field result plots can be generated by loading the logged data into a MATLAB script.

Sample MATLAB scripts (*.m files), along with the generated plots, are available within the Results directory of each of the three driving behaviors.

Implementation demonstrations pertaining to this research on robust behavioral cloning for autonomous vehicles are available on YouTube.

Please cite the following paper when using any part of this work for your research:

    @article{RBCAV-2021,
      author = {Samak, Tanmay Vilas and Samak, Chinmay Vilas and Kandhasamy, Sivanathan},
      title = {Robust Behavioral Cloning for Autonomous Vehicles Using End-to-End Imitation Learning},
      journal = {SAE International Journal of Connected and Automated Vehicles},
      volume = {4},
      number = {3},
      pages = {279-295},
      month = {aug},
      year = {2021},
      doi = {10.4271/12-04-03-0023},
      url = {https://doi.org/10.4271/12-04-03-0023},
      issn = {2574-0741}
    }

This work has been published in SAE International Journal of Connected and Automated Vehicles, as a part of their Special Issue on Machine Learning and Deep Learning Techniques for Connected and Autonomous Vehicle Applications. The publication can be found on SAE Mobilus.