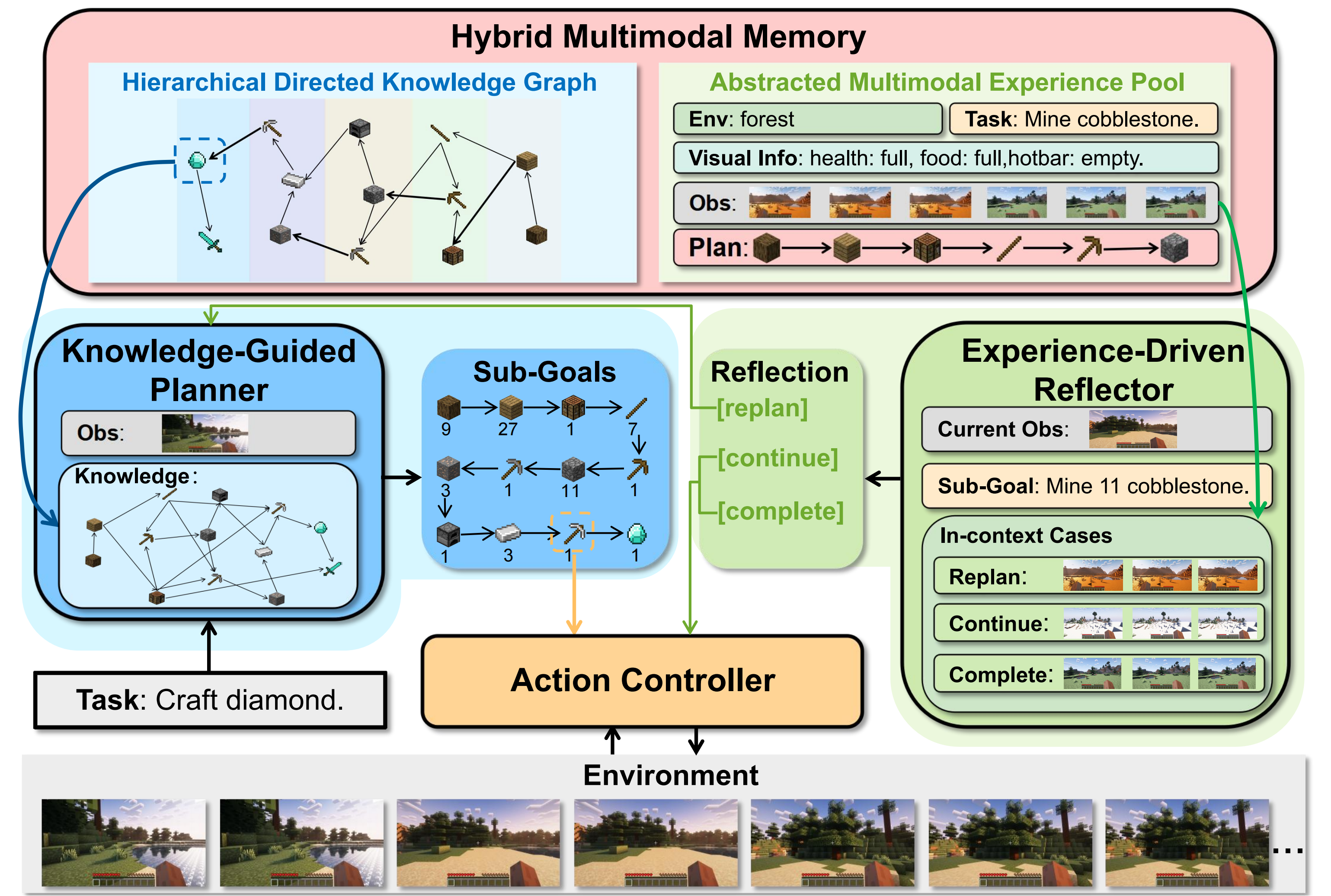

We propose a Hybrid Multimodal Memory module that integrates structured knowledge and multimodal experiences into the memory mechanism of the agent. On top of it, we introduce a powerful Minecraft agent, Optimus-1, which achieves a 30% improvement over existing agents on 67 long-horizon tasks. ✨

Table of Contents

| Title | Venue | Year | Code | Demo |
|---|---|---|---|---|
| Video PreTraining (VPT): Learning to Act by Watching Unlabeled Online Videos | NeurIPS | 2022 | Github | - |
| GROOT: Learning to Follow Instructions by Watching Gameplay Videos | ICLR | 2024 | Github | Demo |