Extract leg positions from lidar data, as shown in this video:

https://www.youtube.com/watch?v=KcfxU6_UrOo

Run the preprocessing script:

$ python3 src/preprocessing.py

Once the script has run, the data are stored in ./data/train/

Run the training script:

$ python3 src/training.py

# clone 'ros_pygame_radar_2D' to your workspace

$ git clone https://github.com/PouceHeure/ros_pygame_radar_2D

# compile package

$ catkin build ros_pygame_radar_2D

# clone 'ros_detection_legs' (this pkg) to your workspace

$ git clone https://github.com/PouceHeure/ros_detection_legs

# inside your ROS workspace

$ catkin build ros_detection_legs

- run detector_node

$ rosrun ros_detection_legs detector_node.py

$ rosrun ros_pygame_radar_2D radar_node.py

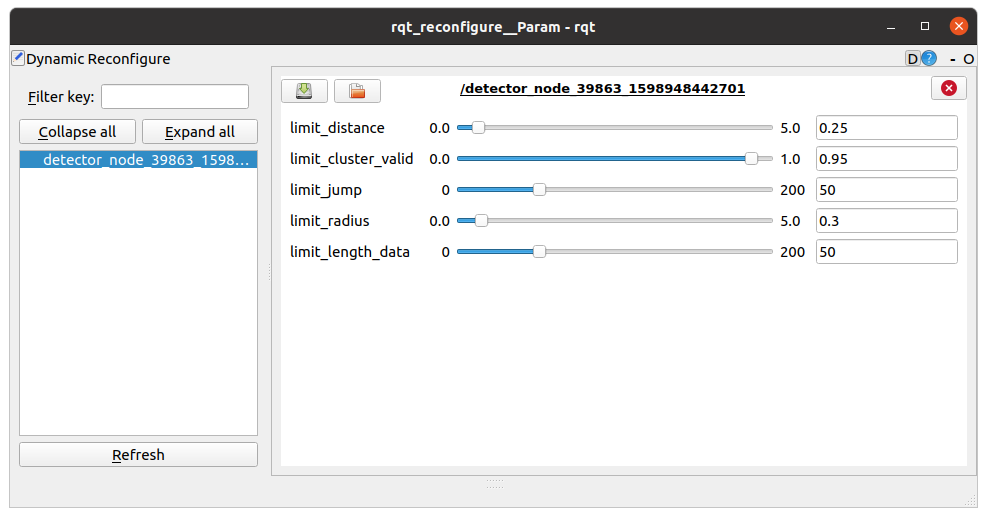

- update parameters: some model parameters are dynamically reconfigurable. By default, dynamic_reconfigure loads the settings saved in parameters.json. Don't forget to recompile the project if you change parameters.json, because dynamic_reconfigure generates a .h file from the cfg file.

- compile cfg and generate new configuration file:

# update parameters.json

$ catkin build

- update parameters dynamically:

$ rosrun rqt_reconfigure rqt_reconfigure

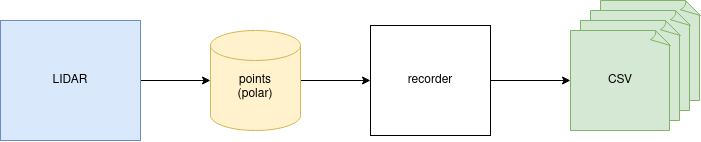

- package: ros_lidar_recorder https://github.com/PouceHeure/ros_lidar_recorder

- data labeling tool: lidar_tool_label https://github.com/PouceHeure/lidar_tool_label

- dataset: https://github.com/PouceHeure/dataset_lidar2D_legs

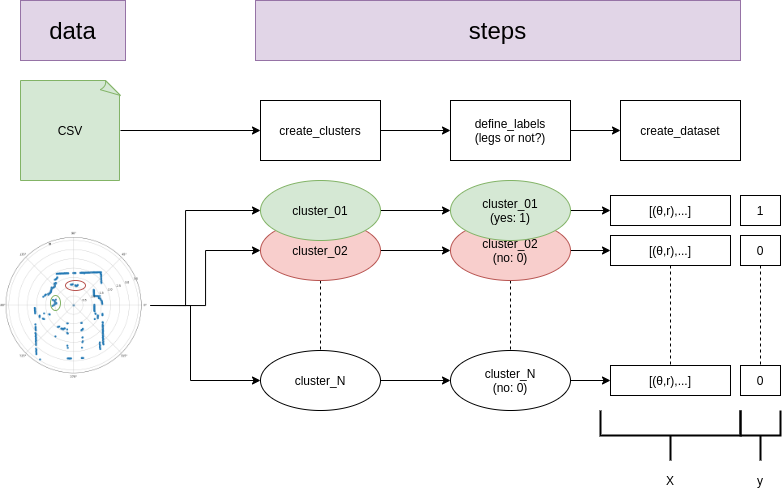

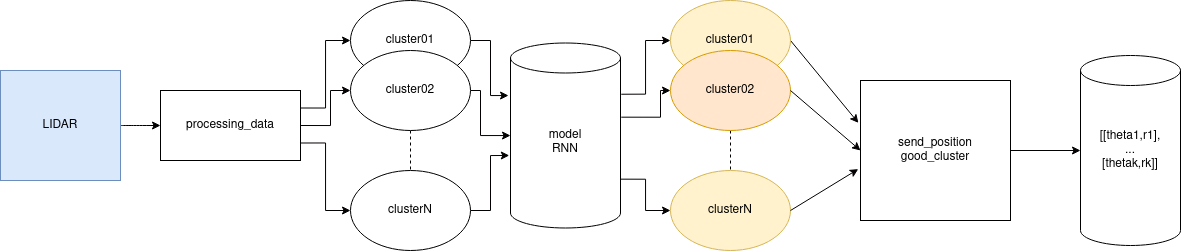

This diagram shows the principal steps:

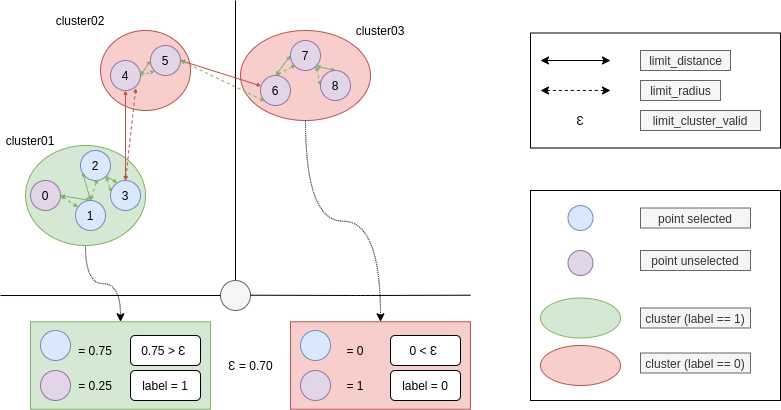

We can create clusters with a segmentation method, so that points that are close to each other are grouped together.

Before segmentation, we have to find a way to compute the distance between 2 polar points.

- First approach: convert all polar points to Cartesian coordinates and apply a classic norm, but the complexity of this method is too high.

- Second approach: find a general expression that gives the Cartesian distance directly from polar points.
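The expression itself is not reproduced in this text. As a minimal sketch, assuming the second approach relies on the law of cosines (an assumption, not confirmed here), the Cartesian distance between two polar points can be computed without converting either point. The function name `polar_distance` is hypothetical and angles are assumed to be in radians.

```python
import math


def polar_distance(r1, theta1, r2, theta2):
    """Euclidean distance between two polar points (angles in radians).

    Law of cosines: d^2 = r1^2 + r2^2 - 2 * r1 * r2 * cos(theta1 - theta2).
    Hypothetical helper, not taken from the package source.
    """
    squared = r1 * r1 + r2 * r2 - 2.0 * r1 * r2 * math.cos(theta1 - theta2)
    # Guard against tiny negative values caused by floating-point rounding.
    return math.sqrt(max(squared, 0.0))
```

For example, two points at range 1 separated by a right angle are `sqrt(2)` apart: `polar_distance(1.0, 0.0, 1.0, math.pi / 2)`.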

A second distance is computed:

Once the expressions are defined, we have to set the hyperparameters:

- limit_distance

- limit_radius
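How the two thresholds are combined is not spelled out here. The sketch below is one possible reading, not the package's implementation: it assumes `limit_distance` bounds the Cartesian distance between consecutive points and `limit_radius` bounds the difference between their ranges. The function name `segment` and both interpretations are assumptions.

```python
import math


def segment(points, limit_distance, limit_radius):
    """Group consecutive polar points (r, theta) into clusters.

    Assumption: a point joins the current cluster when it is close to the
    previous point both in Cartesian distance and in range.
    """
    clusters = []
    for r, theta in points:
        if clusters:
            prev_r, prev_theta = clusters[-1][-1]
            # Cartesian distance from the law of cosines, no conversion needed.
            squared = (r * r + prev_r * prev_r
                       - 2.0 * r * prev_r * math.cos(theta - prev_theta))
            distance = math.sqrt(max(squared, 0.0))
            if distance <= limit_distance and abs(r - prev_r) <= limit_radius:
                clusters[-1].append((r, theta))
                continue
        # First point, or too far from the previous one: start a new cluster.
        clusters.append([(r, theta)])
    return clusters
```

Comparing each point only with the previous one keeps the pass linear in the number of scan points, which suits an ordered lidar sweep.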

After segmentation, we have to attach a label to each cluster. To do this, we define limit_cluster_valid.

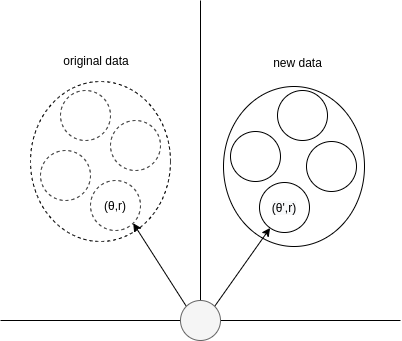

We can increase the amount of positive data by applying rotations to it.

All positive clusters are selected, and within each cluster the same transformation is applied to every point.

The transformation is given by this expression:

So after transformation, if we have N transformations:
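The rotation expression is not reproduced in this text. As a hedged sketch, assuming the rotation is taken around the sensor origin, rotating a polar point only shifts its angle and leaves its range unchanged. The names `rotate_cluster` and `augment` are hypothetical.

```python
def rotate_cluster(cluster, alpha):
    """Rotate every (r, theta) point of a cluster by alpha radians.

    Assumption: rotation around the sensor origin, so r is unchanged.
    Angles are not wrapped; wrap them if your scan uses a fixed range.
    """
    return [(r, theta + alpha) for r, theta in cluster]


def augment(positive_clusters, angles):
    """Return one rotated copy of each positive cluster per angle."""
    return [rotate_cluster(cluster, alpha)
            for alpha in angles
            for cluster in positive_clusters]
```

In this sketch, N angles applied to P positive clusters produce N × P additional clusters, and the original clusters are left untouched.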

model used: RNN with LSTM cells.

more information about LSTM: https://www.tensorflow.org/api_docs/python/tf/keras/layers/LSTM

settings: model/train/parameters.json

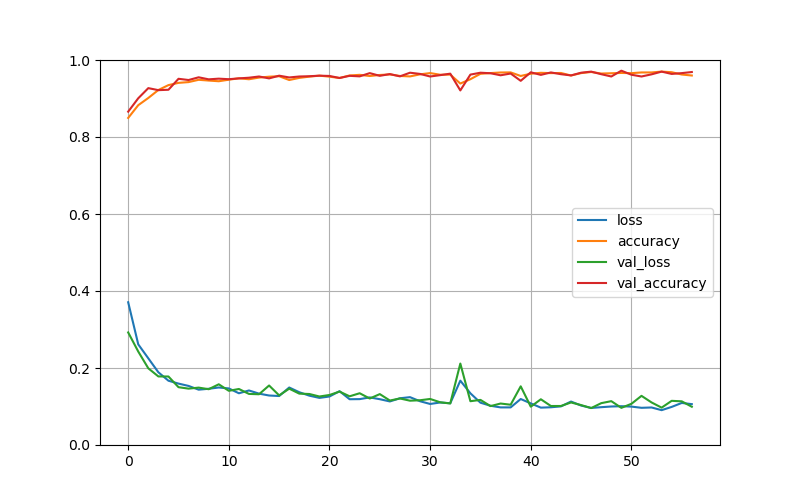

learning curves:

A ROS node, detector_node, subscribes to the /scan topic. Once data are published to this topic, the node uses the trained model to predict leg positions. Leg positions are published to the /radar topic.

As in training, the data need to be transformed. So before prediction, clusters are created directly in the subscriber callback function.

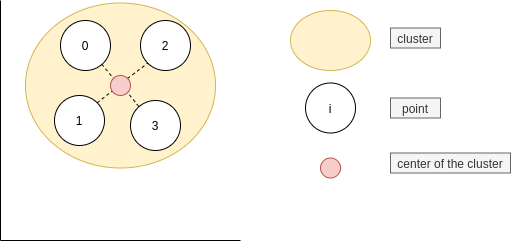

Define the center point of positive clusters:

The coordinates of the center of cluster j are:
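The expression for the center is not reproduced in this text. One common choice, assumed here rather than taken from the package, is the mean of the points after converting them to Cartesian coordinates. The function name `cluster_center` is hypothetical.

```python
import math


def cluster_center(cluster):
    """Return the (x, y) centroid of a non-empty cluster of polar points.

    Assumption: the center is the arithmetic mean of the Cartesian
    coordinates of the points (r, theta), with theta in radians.
    """
    n = len(cluster)
    x = sum(r * math.cos(theta) for r, theta in cluster) / n
    y = sum(r * math.sin(theta) for r, theta in cluster) / n
    return x, y
```

Averaging in Cartesian space avoids the wrap-around problem that averaging angles directly would have near the ends of the angular range.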