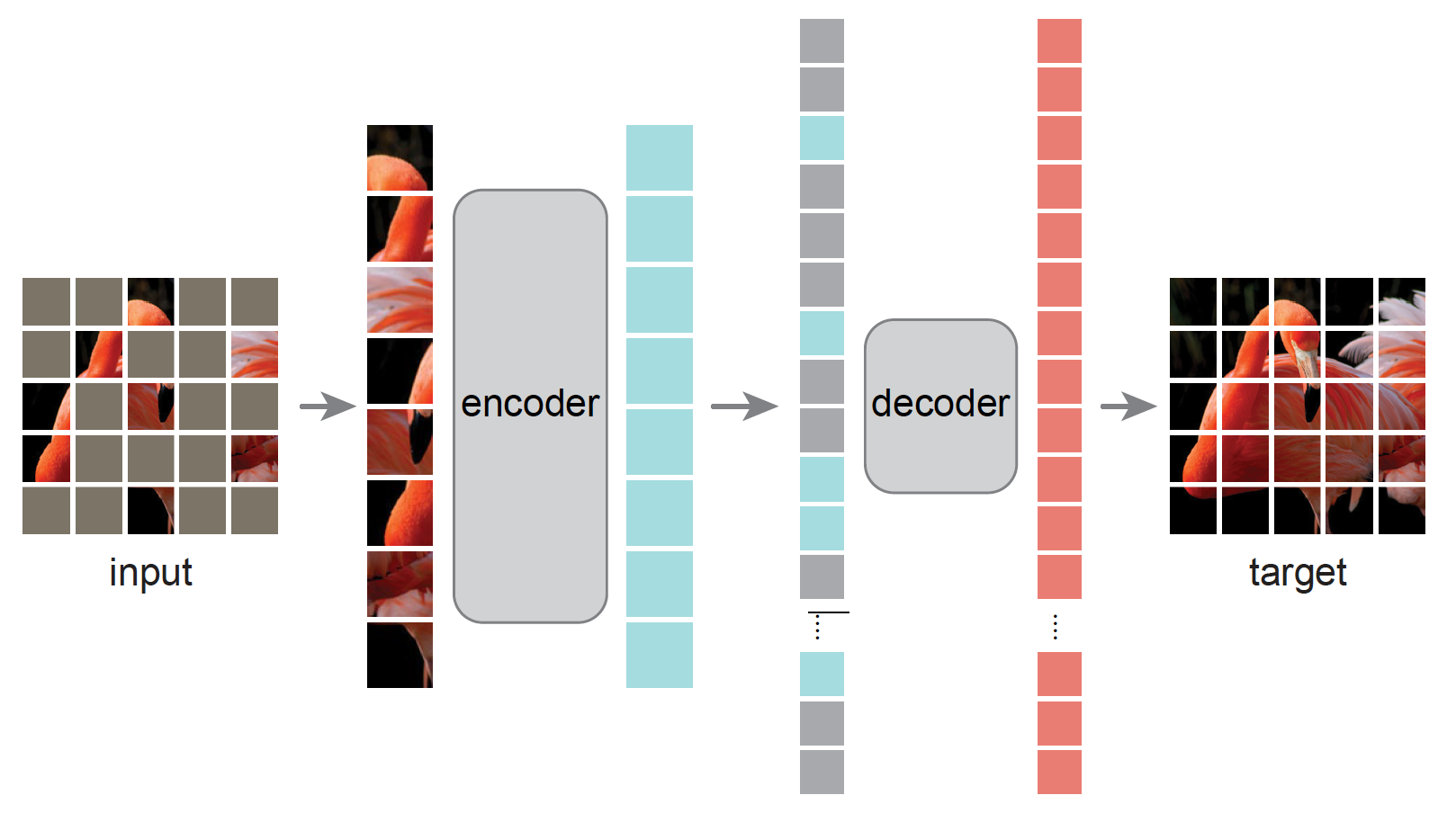

Fig. 1. Masked Autoencoders from Kaiming He et al.

Masked Autoencoder (MAE, Kaiming He et al.) has sparked a surge of interest due to its capacity to learn useful representations from rich unlabeled data. To date, MAE and its follow-up works have advanced the state of the art and provided valuable insights in research (particularly vision research). Here I list several works that follow or are concurrent with MAE to inspire future research.

*:octocat: code link, 🌐 project page

- 🔥Masked Autoencoders Are Scalable Vision Learners

Kaiming He, Xinlei Chen, Saining Xie, Yanghao Li, Piotr Dollár, Ross Girshick

- 🔥SimMIM: A Simple Framework for Masked Image Modeling

Zhenda Xie, Zheng Zhang, Yue Cao, Yutong Lin, Jianmin Bao, Zhuliang Yao, Qi Dai, Han Hu

- 🔥BEIT: BERT Pre-Training of Image Transformers

Hangbo Bao, Li Dong, Furu Wei

- Student Collaboration Improves Self-Supervised Learning: Dual-Loss Adaptive Masked Autoencoder for Brain Cell Image Analysis

Son T. Ly, Bai Lin, Hung Q. Vo, Dragan Maric, Badri Roysam, Hien V. Nguyen

- A Mask-Based Adversarial Defense Scheme

Weizhen Xu, Chenyi Zhang, Fangzhen Zhao, Liangda Fang

- Adversarial Masking for Self-Supervised Learning

Yuge Shi, N. Siddharth, Philip H.S. Torr, Adam R. Kosiorek

- Beyond Masking: Demystifying Token-Based Pre-Training for Vision Transformers

Yunjie Tian, Lingxi Xie, Jiemin Fang, Mengnan Shi, Junran Peng, Xiaopeng Zhang, Jianbin Jiao, Qi Tian, Qixiang Ye

- Context Autoencoder for Self-Supervised Representation Learning

Xiaokang Chen, Mingyu Ding, Xiaodi Wang, Ying Xin, Shentong Mo, Yunhao Wang, Shumin Han, Ping Luo, Gang Zeng, Jingdong Wang

- Contextual Representation Learning beyond Masked Language Modeling

Zhiyi Fu, Wangchunshu Zhou, Jingjing Xu, Hao Zhou, Lei Li

- ContrastMask: Contrastive Learning to Segment Every Thing

Xuehui Wang, Kai Zhao, Ruixin Zhang, Shouhong Ding, Yan Wang, Wei Shen

- ConvMAE: Masked Convolution Meets Masked Autoencoders

Peng Gao, Teli Ma, Hongsheng Li, Ziyi Lin, Jifeng Dai, Yu Qiao

- Exploring Plain Vision Transformer Backbones for Object Detection

Yanghao Li, Hanzi Mao, Ross Girshick, Kaiming He

- Global Contrast Masked Autoencoders Are Powerful Pathological Representation Learners

Hao Quan, Xingyu Li, Weixing Chen, Qun Bai, Mingchen Zou, Ruijie Yang, Tingting Zheng, Ruiqun Qi, Xinghua Gao, Xiaoyu Cui

- iBOT: Image Bert Pre-Training With Online Tokenizer

Jinghao Zhou, Chen Wei, Huiyu Wang, Wei Shen, Cihang Xie, Alan Yuille, Tao Kong

- MADE: Masked Autoencoder for Distribution Estimation

Mathieu Germain, Karol Gregor, Iain Murray, Hugo Larochelle

- Mask Transfiner for High-Quality Instance Segmentation

Lei Ke, Martin Danelljan, Xia Li, Yu-Wing Tai, Chi-Keung Tang, Fisher Yu

- Masked Autoencoders As Spatiotemporal Learners

Christoph Feichtenhofer, Haoqi Fan, Yanghao Li, Kaiming He

- Masked Feature Prediction for Self-Supervised Visual Pre-Training

Chen Wei, Haoqi Fan, Saining Xie, Chao-Yuan Wu, Alan Yuille, Christoph Feichtenhofer

- Masked Image Modeling Advances 3D Medical Image Analysis

Zekai Chen, Devansh Agarwal, Kshitij Aggarwal, Wiem Safta, Mariann Micsinai Balan, Venkat Sethuraman, Kevin Brown

- Masked Siamese Networks for Label-Efficient Learning

- MaskGIT: Masked Generative Image Transformer

Huiwen Chang, Han Zhang, Lu Jiang, Ce Liu, William T. Freeman

- MLIM: Vision-and-Language Model Pre-training with Masked Language and Image Modeling

Tarik Arici, Mehmet Saygin Seyfioglu, Tal Neiman, Yi Xu, Son Train, Trishul Chilimbi, Belinda Zeng, Ismail Tutar

- SimMC: Simple Masked Contrastive Learning of Skeleton Representations for Unsupervised Person Re-Identification

Haocong Rao, Chunyan Miao

- VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training

Zhan Tong, Yibing Song, Jue Wang, Limin Wang

- What to Hide from Your Students: Attention-Guided Masked Image Modeling

Ioannis Kakogeorgiou, Spyros Gidaris, Bill Psomas, Yannis Avrithis, Andrei Bursuc, Konstantinos Karantzalos, Nikos Komodakis

- Uniform Masking: Enabling MAE Pre-training for Pyramid-based Vision Transformers with Locality

Xiang Li, Wenhai Wang, Lingfeng Yang, Jian Yang

- Self-supervised 3D anatomy segmentation using self-distilled masked image transformer (SMIT)

Jue Jiang, Neelam Tyagi, Kathryn Tringale, Christopher Crane, Harini Veeraraghavan

- FaceMAE: Privacy-Preserving Face Recognition via Masked Autoencoders

Kai Wang, Bo Zhao, Xiangyu Peng, Zheng Zhu, Jiankang Deng, Xinchao Wang, Hakan Bilen, Yang You

- Deeper vs Wider: A Revisit of Transformer Configuration

Fuzhao Xue, Jianghai Chen, Aixin Sun, Xiaozhe Ren, Zangwei Zheng, Xiaoxin He, Xin Jiang, Yang You

- Masked Conditional Video Diffusion for Prediction, Generation, and Interpolation

Vikram Voleti, Alexia Jolicoeur-Martineau, Christopher Pal

- Green Hierarchical Vision Transformer for Masked Image Modeling

Lang Huang, Shan You, Mingkai Zheng, Fei Wang, Chen Qian, Toshihiko Yamasaki

- Revealing the Dark Secrets of Masked Image Modeling

Zhenda Xie, Zigang Geng, Jingcheng Hu, Zheng Zhang, Han Hu, Yue Cao

- MixMIM: Mixed and Masked Image Modeling for Efficient Visual Representation Learning

Jihao Liu, Xin Huang, Yu Liu, Hongsheng Li

- Contrastive Learning Rivals Masked Image Modeling in Fine-tuning via Feature Distillation

Yixuan Wei, Han Hu, Zhenda Xie, Zheng Zhang, Yue Cao, Jianmin Bao, Dong Chen, Baining Guo

- Architecture-Agnostic Masked Image Modeling -- From ViT back to CNN

Siyuan Li, Di Wu, Fang Wu, Zelin Zang, Kai Wang, Lei Shang, Baigui Sun, Hao Li, Stan Z. Li

- SupMAE: Supervised Masked Autoencoders Are Efficient Vision Learners

Feng Liang, Yangguang Li, Diana Marculescu

- Object-wise Masked Autoencoders for Fast Pre-training

Jiantao Wu, Shentong Mo

- Multimodal Masked Autoencoders Learn Transferable Representations

Xinyang Geng, Hao Liu, Lisa Lee, Dale Schuurmans, Sergey Levine, Pieter Abbeel

- MaskOCR: Text Recognition with Masked Encoder-Decoder Pretraining

Pengyuan Lyu, Chengquan Zhang, Shanshan Liu, Meina Qiao, Yangliu Xu, Liang Wu, Kun Yao, Junyu Han, Errui Ding, Jingdong Wang

- Mask DINO: Towards A Unified Transformer-based Framework for Object Detection and Segmentation

Feng Li, Hao Zhang, Huaizhe Xu, Shilong Liu, Lei Zhang, Lionel M. Ni, Heung-Yeung Shum

- Efficient Self-supervised Vision Pretraining with Local Masked Reconstruction

Jun Chen, Ming Hu, Boyang Li, Mohamed Elhoseiny

- Masked Unsupervised Self-training for Zero-shot Image Classification

Junnan Li, Silvio Savarese, Steven C.H. Hoi

- On Data Scaling in Masked Image Modeling

Zhenda Xie, Zheng Zhang, Yue Cao, Yutong Lin, Yixuan Wei, Qi Dai, Han Hu

- Draft-and-Revise: Effective Image Generation with Contextual RQ-Transformer

Doyup Lee, Chiheon Kim, Saehoon Kim, Minsu Cho, Wook-Shin Han

- Layered Depth Refinement with Mask Guidance

Soo Ye Kim, Jianming Zhang, Simon Niklaus, Yifei Fan, Simon Chen, Zhe Lin, Munchurl Kim

- MVP: Multimodality-guided Visual Pre-training

Longhui Wei, Lingxi Xie, Wengang Zhou, Houqiang Li, Qi Tian

- Masked Autoencoders are Robust Data Augmentors

Haohang Xu, Shuangrui Ding, Xiaopeng Zhang, Hongkai Xiong, Qi Tian

- Discovering Object Masks with Transformers for Unsupervised Semantic Segmentation

Wouter Van Gansbeke, Simon Vandenhende, Luc Van Gool

- Masked Frequency Modeling for Self-Supervised Visual Pre-Training

Jiahao Xie, Wei Li, Xiaohang Zhan, Ziwei Liu, Yew Soon Ong, Chen Change Loy

- Adapting Self-Supervised Vision Transformers by Probing Attention-Conditioned Masking Consistency

Viraj Prabhu, Sriram Yenamandra, Aaditya Singh, Judy Hoffman

- OmniMAE: Single Model Masked Pretraining on Images and Videos

Rohit Girdhar, Alaaeldin El-Nouby, Mannat Singh, Kalyan Vasudev Alwala, Armand Joulin, Ishan Misra

- A Unified Framework for Masked and Mask-Free Face Recognition via Feature Rectification

Shaozhe Hao, Chaofeng Chen, Zhenfang Chen, Kwan-Yee K. Wong

- Integral Migrating Pre-trained Transformer Encoder-decoders for Visual Object Detection

Xiaosong Zhang, Feng Liu, Zhiliang Peng, Zonghao Guo, Fang Wan, Xiangyang Ji, Qixiang Ye

- SemMAE: Semantic-Guided Masking for Learning Masked Autoencoders

Gang Li, Heliang Zheng, Daqing Liu, Bing Su, Changwen Zheng

- MaskViT: Masked Visual Pre-Training for Video Prediction

Agrim Gupta, Stephen Tian, Yunzhi Zhang, Jiajun Wu, Roberto Martín-Martín, Li Fei-Fei

- Masked World Models for Visual Control

Younggyo Seo, Danijar Hafner, Hao Liu, Fangchen Liu, Stephen James, Kimin Lee, Pieter Abbeel

- Training Vision-Language Transformers from Captions Alone

Liangke Gui, Qiuyuan Huang, Alex Hauptmann, Yonatan Bisk, Jianfeng Gao

- Masked Generative Distillation

Zhendong Yang, Zhe Li, Mingqi Shao, Dachuan Shi, Zehuan Yuan, Chun Yuan

- k-means Mask Transformer

Qihang Yu, Huiyu Wang, Siyuan Qiao, Maxwell Collins, Yukun Zhu, Hartwig Adam, Alan Yuille, Liang-Chieh Chen

- Bootstrapped Masked Autoencoders for Vision BERT Pretraining

Xiaoyi Dong, Jianmin Bao, Ting Zhang, Dongdong Chen, Weiming Zhang, Lu Yuan, Dong Chen, Fang Wen, Nenghai Yu

- SatMAE: Pre-training Transformers for Temporal and Multi-Spectral Satellite Imagery

Yezhen Cong, Samar Khanna, Chenlin Meng, Patrick Liu, Erik Rozi, Yutong He, Marshall Burke, David B. Lobell, Stefano Ermon

- Contrastive Masked Autoencoders are Stronger Vision Learners

Zhicheng Huang, Xiaojie Jin, Chengze Lu, Qibin Hou, Ming-Ming Cheng, Dongmei Fu, Xiaohui Shen, Jiashi Feng

- SdAE: Self-distillated Masked Autoencoder

Yabo Chen, Yuchen Liu, Dongsheng Jiang, Xiaopeng Zhang, Wenrui Dai, Hongkai Xiong, Qi Tian

- Less is More: Consistent Video Depth Estimation with Masked Frames Modeling

Yiran Wang, Zhiyu Pan, Xingyi Li, Zhiguo Cao, Ke Xian, Jianming Zhang

- Masked Vision and Language Modeling for Multi-modal Representation Learning

Gukyeong Kwon, Zhaowei Cai, Avinash Ravichandran, Erhan Bas, Rahul Bhotika, Stefano Soatto

- Masked Feature Prediction for Self-Supervised Visual Pre-Training

Chen Wei, Haoqi Fan, Saining Xie, Chao-Yuan Wu, Alan Yuille, Christoph Feichtenhofer

- Understanding Masked Image Modeling via Learning Occlusion Invariant Feature

Xiangwen Kong, Xiangyu Zhang

- BEiT v2: Masked Image Modeling with Vector-Quantized Visual Tokenizers

Zhiliang Peng, Li Dong, Hangbo Bao, Qixiang Ye, Furu Wei

- MILAN: Masked Image Pretraining on Language Assisted Representation

Zejiang Hou, Fei Sun, Yen-Kuang Chen, Yuan Xie, Sun-Yuan Kung

- Open-Vocabulary Panoptic Segmentation with MaskCLIP

Zheng Ding, Jieke Wang, Zhuowen Tu

- VLMAE: Vision-Language Masked Autoencoder

Sunan He, Taian Guo, Tao Dai, Ruizhi Qiao, Chen Wu, Xiujun Shu, Bo Ren

- MaskCLIP: Masked Self-Distillation Advances Contrastive Language-Image Pretraining

Xiaoyi Dong, Yinglin Zheng, Jianmin Bao, Ting Zhang, Dongdong Chen, Hao Yang, Ming Zeng, Weiming Zhang, Lu Yuan, Dong Chen, Fang Wen, Nenghai Yu

- Masked Autoencoders Enable Efficient Knowledge Distillers

Yutong Bai, Zeyu Wang, Junfei Xiao, Chen Wei, Huiyu Wang, Alan Yuille, Yuyin Zhou, Cihang Xie

- Multi-Modal Masked Autoencoders for Medical Vision-and-Language Pre-Training

Zhihong Chen, Yuhao Du, Jinpeng Hu, Yang Liu, Guanbin Li, Xiang Wan, Tsung-Hui Chang

- MetaMask: Revisiting Dimensional Confounder for Self-Supervised Learning

Jiangmeng Li, Wenwen Qiang, Yanan Zhang, Wenyi Mo, Changwen Zheng, Bing Su, Hui Xiong

- NamedMask: Distilling Segmenters from Complementary Foundation Models

Gyungin Shin, Weidi Xie, Samuel Albanie

- Exploring Target Representations for Masked Autoencoders

Xingbin Liu, Jinghao Zhou, Tao Kong, Xianming Lin, Rongrong Ji

- Self-Supervised Masked Convolutional Transformer Block for Anomaly Detection

Neelu Madan, Nicolae-Catalin Ristea, Radu Tudor Ionescu, Kamal Nasrollahi, Fahad Shahbaz Khan, Thomas B. Moeslund, Mubarak Shah

- Exploring The Role of Mean Teachers in Self-supervised Masked Auto-Encoders

Youngwan Lee, Jeffrey Willette, Jonghee Kim, Juho Lee, Sung Ju Hwang

- Self-Distillation for Further Pre-training of Transformers

Seanie Lee, Minki Kang, Juho Lee, Sung Ju Hwang, Kenji Kawaguchi

- MAMO: Masked Multimodal Modeling for Fine-Grained Vision-Language Representation Learning

Zijia Zhao, Longteng Guo, Xingjian He, Shuai Shao, Zehuan Yuan, Jing Liu

- It Takes Two: Masked Appearance-Motion Modeling for Self-supervised Video Transformer Pre-training

Yuxin Song, Min Yang, Wenhao Wu, Dongliang He, Fu Li, Jingdong Wang

- Exploring Long-Sequence Masked Autoencoders

Ronghang Hu, Shoubhik Debnath, Saining Xie, Xinlei Chen

- M3Video: Masked Motion Modeling for Self-Supervised Video Representation Learning

Xinyu Sun, Peihao Chen, Liangwei Chen, Thomas H. Li, Mingkui Tan, Chuang Gan

- Ensemble Learning using Transformers and Convolutional Networks for Masked Face Recognition

Mohammed R. Al-Sinan, Aseel F. Haneef, Hamzah Luqman

- MOVE: Unsupervised Movable Object Segmentation and Detection

Adam Bielski, Paolo Favaro

- Denoising Masked AutoEncoders are Certifiable Robust Vision Learners

Quanlin Wu, Hang Ye, Yuntian Gu, Huishuai Zhang, Liwei Wang, Di He

- How Mask Matters: Towards Theoretical Understandings of Masked Autoencoders

Qi Zhang, Yifei Wang, Yisen Wang

- MultiMAE: Multi-modal Multi-task Masked Autoencoders 🌐

Roman Bachmann, David Mizrahi, Andrei Atanov, Amir Zamir

- A Unified View of Masked Image Modeling

Zhiliang Peng, Li Dong, Hangbo Bao, Qixiang Ye, Furu Wei

- i-MAE: Are Latent Representations in Masked Autoencoders Linearly Separable? 🌐

Kevin Zhang, Zhiqiang Shen

- MixMask: Revisiting Masked Siamese Self-supervised Learning in Asymmetric Distance

Kirill Vishniakov, Eric Xing, Zhiqiang Shen

- DiffEdit: Diffusion-based semantic image editing with mask guidance

Guillaume Couairon, Jakob Verbeek, Holger Schwenk, Matthieu Cord

- Masked Modeling Duo: Learning Representations by Encouraging Both Networks to Model the Input

Daisuke Niizumi, Daiki Takeuchi, Yasunori Ohishi, Noboru Harada, Kunio Kashino

- A simple, efficient and scalable contrastive masked autoencoder for learning visual representations

Shlok Mishra, Joshua Robinson, Huiwen Chang, David Jacobs, Aaron Sarna, Aaron Maschinot, Dilip Krishnan

- Siamese Transition Masked Autoencoders as Uniform Unsupervised Visual Anomaly Detector

Haiming Yao, Xue Wang, Wenyong Yu

- Bootstrapped Masked Autoencoders for Vision BERT Pretraining

Xiaoyi Dong, Jianmin Bao, Ting Zhang, Dongdong Chen, Weiming Zhang, Lu Yuan, Dong Chen, Fang Wen, Nenghai Yu

- MaskTune: Mitigating Spurious Correlations by Forcing to Explore

Saeid Asgari Taghanaki, Aliasghar Khani, Fereshte Khani, Ali Gholami, Linh Tran, Ali Mahdavi-Amiri, Ghassan Hamarneh

- Exploring the Limits of Masked Visual Representation Learning at Scale

Yuxin Fang, Wen Wang, Binhui Xie, Quan Sun, Ledell Wu, Xinggang Wang, Tiejun Huang, Xinlong Wang, Yue Cao

- MAGE: MAsked Generative Encoder to Unify Representation Learning and Image Synthesis

Tianhong Li, Huiwen Chang, Shlok Kumar Mishra, Han Zhang, Dina Katabi, Dilip Krishnan

- Stare at What You See: Masked Image Modeling without Reconstruction

Hongwei Xue, Peng Gao, Hongyang Li, Yu Qiao, Hao Sun, Houqiang Li, Jiebo Luo

- Mask-based Latent Reconstruction for Reinforcement Learning

Tao Yu, Zhizheng Zhang, Cuiling Lan, Yan Lu, Zhibo Chen

- AdaMAE: Adaptive Masking for Efficient Spatiotemporal Learning with Masked Autoencoders

Wele Gedara Chaminda Bandara, Naman Patel, Ali Gholami, Mehdi Nikkhah, Motilal Agrawal, Vishal M. Patel

- Efficient Video Representation Learning via Masked Video Modeling with Motion-centric Token Selection

Sunil Hwang, Jaehong Yoon, Youngwan Lee, Sung Ju Hwang

- Contrastive Masked Autoencoders for Self-Supervised Video Hashing

Yuting Wang, Jinpeng Wang, Bin Chen, Ziyun Zeng, Shutao Xia

- MCVD: Masked Conditional Video Diffusion for Prediction, Generation, and Interpolation 🌐

Vikram Voleti, Alexia Jolicoeur-Martineau, Christopher Pal

- MAEDAY: MAE for few and zero shot AnomalY-Detection

Eli Schwartz, Assaf Arbelle, Leonid Karlinsky, Sivan Harary, Florian Scheidegger, Sivan Doveh, Raja Giryes

- What's Behind the Mask: Estimating Uncertainty in Image-to-Image Problems

Gilad Kutiel, Regev Cohen, Michael Elad, Daniel Freedman

- Good helper is around you: Attention-driven Masked Image Modeling

Jie Gui, Zhengqi Liu, Hao Luo

- Scaling Language-Image Pre-training via Masking

Yanghao Li, Haoqi Fan, Ronghang Hu, Christoph Feichtenhofer, Kaiming He

- Learning Imbalanced Data with Vision Transformers

Zhengzhuo Xu, Ruikang Liu, Shuo Yang, Zenghao Chai, Chun Yuan

- Masked Contrastive Pre-Training for Efficient Video-Text Retrieval

Fangxun Shu, Biaolong Chen, Yue Liao, Shuwen Xiao, Wenyu Sun, Xiaobo Li, Yousong Zhu, Jinqiao Wang, Si Liu

- MIC: Masked Image Consistency for Context-Enhanced Domain Adaptation

Lukas Hoyer, Dengxin Dai, Haoran Wang, Luc Van Gool

- Masked Video Distillation: Rethinking Masked Feature Modeling for Self-supervised Video Representation Learning 🌐

Rui Wang, Dongdong Chen, Zuxuan Wu, Yinpeng Chen, Xiyang Dai, Mengchen Liu, Lu Yuan, Yu-Gang Jiang

- MAGVIT: Masked Generative Video Transformer 🌐

Lijun Yu, Yong Cheng, Kihyuk Sohn, José Lezama, Han Zhang, Huiwen Chang, Alexander G. Hauptmann, Ming-Hsuan Yang, Yuan Hao, Irfan Essa, Lu Jiang

- FastMIM: Expediting Masked Image Modeling Pre-training for Vision

Jianyuan Guo, Kai Han, Han Wu, Yehui Tang, Yunhe Wang, Chang Xu

- Efficient Self-supervised Learning with Contextualized Target Representations for Vision, Speech and Language

Alexei Baevski, Arun Babu, Wei-Ning Hsu, Michael Auli

- Swin MAE: Masked Autoencoders for Small Datasets

Zi'an Xu, Yin Dai, Fayu Liu, Weibing Chen, Yue Liu, Lifu Shi, Sheng Liu, Yuhang Zhou

- Semi-MAE: Masked Autoencoders for Semi-supervised Vision Transformers

Haojie Yu, Kang Zhao, Xiaoming Xu

- MS-DINO: Efficient Distributed Training of Vision Transformer Foundation Model in Medical Domain through Masked Sampling

Sangjoon Park, Ik-Jae Lee, Jun Won Kim, Jong Chul Ye

- TinyMIM: An Empirical Study of Distilling MIM Pre-trained Models

Sucheng Ren, Fangyun Wei, Zheng Zhang, Han Hu

- ConvNeXt V2: Co-designing and Scaling ConvNets with Masked Autoencoders

Sanghyun Woo, Shoubhik Debnath, Ronghang Hu, Xinlei Chen, Zhuang Liu, In So Kweon, Saining Xie

- Disjoint Masking with Joint Distillation for Efficient Masked Image Modeling

Xin Ma, Chang Liu, Chunyu Xie, Long Ye, Yafeng Deng, Xiangyang Ji

- Masked Siamese ConvNets: Towards an Effective Masking Strategy for General-purpose Siamese Networks

Li Jing, Jiachen Zhu, Yann LeCun

- Efficient Masked Autoencoders with Self-Consistency

Zhaowen Li, Yousong Zhu, Zhiyang Chen, Wei Li, Chaoyang Zhao, Liwei Wu, Rui Zhao, Ming Tang, Jinqiao Wang

- PixMIM: Rethinking Pixel Reconstruction in Masked Image Modeling

Yuan Liu, Songyang Zhang, Jiacheng Chen, Kai Chen, Dahua Lin

- Rethinking Out-of-distribution (OOD) Detection: Masked Image Modeling is All You Need

Jingyao Li, Pengguang Chen, Shaozuo Yu, Zexin He, Shu Liu, Jiaya Jia

- Masked Image Modeling with Denoising Contrast

Kun Yi, Yixiao Ge, Xiaotong Li, Shusheng Yang, Dian Li, Jianping Wu, Ying Shan, Xiaohu Qie

- Layer Grafted Pre-training: Bridging Contrastive Learning And Masked Image Modeling For Label-Efficient Representations

Ziyu Jiang, Yinpeng Chen, Mengchen Liu, Dongdong Chen, Xiyang Dai, Lu Yuan, Zicheng Liu, Zhangyang Wang

- MaskedKD: Efficient Distillation of Vision Transformers with Masked Images

Seungwoo Son, Namhoon Lee, Jaeho Lee

- Generic-to-Specific Distillation of Masked Autoencoders

Wei Huang, Zhiliang Peng, Li Dong, Furu Wei, Jianbin Jiao, Qixiang Ye

- Masked Image Modeling with Local Multi-Scale Reconstruction

Haoqing Wang, Yehui Tang, Yunhe Wang, Jianyuan Guo, Zhi-Hong Deng, Kai Han

- StrucTexTv2: Masked Visual-Textual Prediction for Document Image Pre-training

Yuechen Yu, Yulin Li, Chengquan Zhang, Xiaoqiang Zhang, Zengyuan Guo, Xiameng Qin, Kun Yao, Junyu Han, Errui Ding, Jingdong Wang

- Masked Distillation with Receptive Tokens

Tao Huang, Yuan Zhang, Shan You, Fei Wang, Chen Qian, Jian Cao, Chang Xu

- DeepMIM: Deep Supervision for Masked Image Modeling

Sucheng Ren, Fangyun Wei, Samuel Albanie, Zheng Zhang, Han Hu

- 3D Masked Autoencoding and Pseudo-labeling for Domain Adaptive Segmentation of Heterogeneous Infant Brain MRI

Xuzhe Zhang, Yuhao Wu, Jia Guo, Jerod M. Rasmussen, Thomas G. O'Connor, Hyagriv N. Simhan, Sonja Entringer, Pathik D. Wadhwa, Claudia Buss, Cristiane S. Duarte, Andrea Jackowski, Hai Li, Jonathan Posner, Andrew F. Laine, Yun Wang

- The effectiveness of MAE pre-pretraining for billion-scale pretraining

Mannat Singh, Quentin Duval, Kalyan Vasudev Alwala, Haoqi Fan, Vaibhav Aggarwal, Aaron Adcock, Armand Joulin, Piotr Dollár, Christoph Feichtenhofer, Ross Girshick, Rohit Girdhar, Ishan Misra

- VideoMAE V2: Scaling Video Masked Autoencoders with Dual Masking

Limin Wang, Bingkun Huang, Zhiyu Zhao, Zhan Tong, Yinan He, Yi Wang, Yali Wang, Yu Qiao

- Scale-MAE: A Scale-Aware Masked Autoencoder for Multiscale Geospatial Representation Learning

Colorado J. Reed, Ritwik Gupta, Shufan Li, Sarah Brockman, Christopher Funk, Brian Clipp, Kurt Keutzer, Salvatore Candido, Matt Uyttendaele, Trevor Darrell

- Siamese Masked Autoencoders

Agrim Gupta, Jiajun Wu, Jia Deng, Li Fei-Fei

- MaskDiff: Modeling Mask Distribution with Diffusion Probabilistic Model for Few-Shot Instance Segmentation

Minh-Quan Le, Tam V. Nguyen, Trung-Nghia Le, Thanh-Toan Do, Minh N. Do, Minh-Triet Tran

- DiffuMask: Synthesizing Images with Pixel-level Annotations for Semantic Segmentation Using Diffusion Models

Weijia Wu, Yuzhong Zhao, Mike Zheng Shou, Hong Zhou, Chunhua Shen

- Mixed Autoencoder for Self-supervised Visual Representation Learning

Kai Chen, Zhili Liu, Lanqing Hong, Hang Xu, Zhenguo Li, Dit-Yan Yeung

- DropMAE: Masked Autoencoders with Spatial-Attention Dropout for Tracking Tasks

Qiangqiang Wu, Tianyu Yang, Ziquan Liu, Baoyuan Wu, Ying Shan, Antoni B. Chan

- MM-BSN: Self-Supervised Image Denoising for Real-World with Multi-Mask based on Blind-Spot Network

Dan Zhang, Fangfang Zhou, Yuwen Jiang, Zhengming Fu

- Hard Patches Mining for Masked Image Modeling

Haochen Wang, Kaiyou Song, Junsong Fan, Yuxi Wang, Jin Xie, Zhaoxiang Zhang

- SMAE: Few-shot Learning for HDR Deghosting with Saturation-Aware Masked Autoencoders

Qingsen Yan, Song Zhang, Weiye Chen, Hao Tang, Yu Zhu, Jinqiu Sun, Luc Van Gool, Yanning Zhang

- FreMAE: Fourier Transform Meets Masked Autoencoders for Medical Image Segmentation

Wenxuan Wang, Jing Wang, Chen Chen, Jianbo Jiao, Lichao Sun, Yuanxiu Cai, Shanshan Song, Jiangyun Li

- An Empirical Study of End-to-End Video-Language Transformers with Masked Visual Modeling

Tsu-Jui Fu, Linjie Li, Zhe Gan, Kevin Lin, William Yang Wang, Lijuan Wang, Zicheng Liu

- PMatch: Paired Masked Image Modeling for Dense Geometric Matching

Shengjie Zhu, Xiaoming Liu

- Medical supervised masked autoencoders: Crafting a better masking strategy and efficient fine-tuning schedule for medical image classification

Jiawei Mao, Shujian Guo, Yuanqi Chang, Xuesong Yin, Binling Nie

- Maskomaly: Zero-Shot Mask Anomaly Segmentation

Jan Ackermann, Christos Sakaridis, Fisher Yu

- Unsupervised Anomaly Detection in Medical Images Using Masked Diffusion Model

Hasan Iqbal, Umar Khalid, Jing Hua, Chen Chen

- Hiera: A Hierarchical Vision Transformer without the Bells-and-Whistles

Chaitanya Ryali, Yuan-Ting Hu, Daniel Bolya, Chen Wei, Haoqi Fan, Po-Yao Huang, Vaibhav Aggarwal, Arkabandhu Chowdhury, Omid Poursaeed, Judy Hoffman, Jitendra Malik, Yanghao Li, Christoph Feichtenhofer

- CM-MaskSD: Cross-Modality Masked Self-Distillation for Referring Image Segmentation

Wenxuan Wang, Jing Liu, Xingjian He, Yisi Zhang, Chen Chen, Jiachen Shen, Yan Zhang, Jiangyun Li

- R-MAE: Regions Meet Masked Autoencoders

Duy-Kien Nguyen, Vaibhav Aggarwal, Yanghao Li, Martin R. Oswald, Alexander Kirillov, Cees G. M. Snoek, Xinlei Chen

- Exploring Effective Mask Sampling Modeling for Neural Image Compression

Lin Liu, Mingming Zhao, Shanxin Yuan, Wenlong Lyu, Wengang Zhou, Houqiang Li, Yanfeng Wang, Qi Tian

- Automatic Image Blending Algorithm Based on SAM and DINO

Haochen Xue, Mingyu Jin, Chong Zhang, Yuxuan Huang, Qian Weng, Xiaobo Jin

- A Survey on Masked Autoencoder for Visual Self-supervised Learning

Chaoning Zhang, Chenshuang Zhang, Junha Song, John Seon Keun Yi, In So Kweon

- MAE-AST: Masked Autoencoding Audio Spectrogram Transformer

Alan Baade, Puyuan Peng, David Harwath

- Group masked autoencoder based density estimator for audio anomaly detection Ritwik Giri, Fangzhou Cheng, Karim Helwani, Srikanth V. Tenneti, Umut Isik, Arvindh Krishnaswamy

- Masked Autoencoders that Listen

Po-Yao (Bernie) Huang, Hu Xu, Juncheng Li, Alexei Baevski, Michael Auli, Wojciech Galuba, Florian Metze, Christoph Feichtenhofer

- Efficient Vision-Language Pretraining with Visual Concepts and Hierarchical Alignment

Mustafa Shukor, Guillaume Couairon, Matthieu Cord

- Contrastive Audio-Visual Masked Autoencoder Yuan Gong, Andrew Rouditchenko, Alexander H. Liu, David Harwath, Leonid Karlinsky, Hilde Kuehne, James Glass

- Masked Spectrogram Modeling using Masked Autoencoders for Learning General-purpose Audio Representation

Daisuke Niizumi, Daiki Takeuchi, Yasunori Ohishi, Noboru Harada, Kunio Kashino

- Masked Lip-Sync Prediction by Audio-Visual Contextual Exploitation in Transformers

Yasheng Sun, Hang Zhou, Kaisiyuan Wang, Qianyi Wu, Zhibin Hong, Jingtuo Liu, Errui Ding, Jingdong Wang, Ziwei Liu, Hideki Koike

- Audiovisual Masked Autoencoders Mariana-Iuliana Georgescu, Eduardo Fonseca, Radu Tudor Ionescu, Mario Lucic, Cordelia Schmid, Anurag Arnab

- MGAE: Masked Autoencoders for Self-Supervised Learning on Graphs

Qiaoyu Tan, Ninghao Liu, Xiao Huang, Rui Chen, Soo-Hyun Choi, Xia Hu

- Graph Masked Autoencoder with Transformers

Sixiao Zhang, Hongxu Chen, Haoran Yang, Xiangguo Sun, Philip S. Yu, Guandong Xu

- What's Behind the Mask: Understanding Masked Graph Modeling for Graph Autoencoders

Jintang Li, Ruofan Wu, Wangbin Sun, Liang Chen, Sheng Tian, Liang Zhu, Changhua Meng, Zibin Zheng, Weiqiang Wang

- GraphMAE: Self-Supervised Masked Graph Autoencoders

Zhenyu Hou, Xiao Liu, Yukuo Cen, Yuxiao Dong, Hongxia Yang, Chunjie Wang, Jie Tang

- Heterogeneous Graph Masked Autoencoders

Yijun Tian, Kaiwen Dong, Chunhui Zhang, Chuxu Zhang, Nitesh V. Chawla

- Graph Masked Autoencoder Enhanced Predictor for Neural Architecture Search

Kun Jing, Jungang Xu, Pengfei Li

- Bi-channel Masked Graph Autoencoders for Spatially Resolved Single-cell Transcriptomics Data Imputation Hongzhi Wen, Wei Jin, Jiayuan Ding, Christopher Xu, Yuying Xie, Jiliang Tang

- Masked Graph Auto-Encoder Constrained Graph Pooling

Chuang Liu, Yibing Zhan, Xueqi Ma, Dapeng Tao, Bo Du, Wenbin Hu

- BatmanNet: Bi-branch Masked Graph Transformer Autoencoder for Molecular Representation Zhen Wang, Zheng Feng, Yanjun Li, Bowen Li, Yongrui Wang, Chulin Sha, Min He, Xiaolin Li

- Jointly Learning Visual and Auditory Speech Representations from Raw Data Alexandros Haliassos, Pingchuan Ma, Rodrigo Mira, Stavros Petridis, Maja Pantic

- S2GAE: Self-Supervised Graph Autoencoders are Generalizable Learners with Graph Masking

Qiaoyu Tan, Ninghao Liu, Xiao Huang, Soo-Hyun Choi, Li Li, Rui Chen, Xia Hu

- GraphMAE2: A Decoding-Enhanced Masked Self-Supervised Graph Learner

Zhenyu Hou, Yufei He, Yukuo Cen, Xiao Liu, Yuxiao Dong, Evgeny Kharlamov, Jie Tang

- SeeGera: Self-supervised Semi-implicit Graph Variational Auto-encoders with Masking

Xiang Li, Tiandi Ye, Caihua Shan, Dongsheng Li, Ming Gao

- GiGaMAE: Generalizable Graph Masked Autoencoder via Collaborative Latent Space Reconstruction

Yucheng Shi, Yushun Dong, Qiaoyu Tan, Jundong Li, Ninghao Liu

- Masked Discrimination for Self-Supervised Learning on Point Clouds

Haotian Liu, Mu Cai, Yong Jae Lee

- Voxel-MAE: Masked Autoencoders for Pre-training Large-scale Point Clouds

Chen Min, Dawei Zhao, Liang Xiao, Yiming Nie, Bin Dai

- Masked Autoencoders for Self-Supervised Learning on Automotive Point Clouds

Georg Hess, Johan Jaxing, Elias Svensson, David Hagerman, Christoffer Petersson, Lennart Svensson

- Learning 3D Representations from 2D Pre-trained Models via Image-to-Point Masked Autoencoders

Renrui Zhang, Liuhui Wang, Yu Qiao, Peng Gao, Hongsheng Li

- BEV-MAE: Bird's Eye View Masked Autoencoders for Outdoor Point Cloud Pre-training Zhiwei Lin, Yongtao Wang

- PiMAE: Point Cloud and Image Interactive Masked Autoencoders for 3D Object Detection

Anthony Chen, Kevin Zhang, Renrui Zhang, Zihan Wang, Yuheng Lu, Yandong Guo, Shanghang Zhang

- Point-M2AE: Multi-scale Masked Autoencoders for Hierarchical Point Cloud Pre-training

Renrui Zhang, Ziyu Guo, Peng Gao, Rongyao Fang, Bin Zhao, Dong Wang, Yu Qiao, Hongsheng Li

- GD-MAE: Generative Decoder for MAE Pre-training on LiDAR Point Clouds

Honghui Yang, Tong He, Jiaheng Liu, Hua Chen, Boxi Wu, Binbin Lin, Xiaofei He, Wanli Ouyang

- MAELi -- Masked Autoencoder for Large-Scale LiDAR Point Clouds Georg Krispel, David Schinagl, Christian Fruhwirth-Reisinger, Horst Possegger, Horst Bischof

- GeoMAE: Masked Geometric Target Prediction for Self-supervised Point Cloud Pre-Training

Xiaoyu Tian, Haoxi Ran, Yue Wang, Hang Zhao

- Masked Autoencoders for Point Cloud Self-Supervised Learning

Yatian Pang, Wenxiao Wang, Francis E.H. Tay, Wei Liu, Yonghong Tian, Li Yuan

- Masked Autoencoder for Self-Supervised Pre-Training on Lidar Point Clouds

Georg Hess, Johan Jaxing, Elias Svensson, David Hagerman, Christoffer Petersson, Lennart Svensson

- Joint-MAE: 2D-3D Joint Masked Autoencoders for 3D Point Cloud Pre-training Ziyu Guo, Renrui Zhang, Longtian Qiu, Xianzhi Li, Pheng-Ann Heng

There has been a surge of language research built on this mask-and-predict paradigm (e.g., BERT), so I do not list those works here.

- Masked Bayesian Neural Networks: Computation and Optimality Insung Kong, Dongyoon Yang, Jongjin Lee, Ilsang Ohn, Yongdai Kim

- How to Understand Masked Autoencoders Shuhao Cao, Peng Xu, David A. Clifton

- Towards Understanding Why Mask-Reconstruction Pretraining Helps in Downstream Tasks Jiachun Pan, Pan Zhou, Shuicheng Yan

- MET: Masked Encoding for Tabular Data Kushal Majmundar, Sachin Goyal, Praneeth Netrapalli, Prateek Jain

- Masked Self-Supervision for Remaining Useful Lifetime Prediction in Machine Tools Haoren Guo, Haiyue Zhu, Jiahui Wang, Vadakkepat Prahlad, Weng Khuen Ho, Tong Heng Lee

- MAR: Masked Autoencoders for Efficient Action Recognition

Zhiwu Qing, Shiwei Zhang, Ziyuan Huang, Xiang Wang, Yuehuan Wang, Yiliang Lv, Changxin Gao, Nong Sang

- MeshMAE: Masked Autoencoders for 3D Mesh Data Analysis

Yaqian Liang, Shanshan Zhao, Baosheng Yu, Jing Zhang, Fazhi He

- A Dual-Masked Auto-Encoder for Robust Motion Capture with Spatial-Temporal Skeletal Token Completion

Junkun Jiang, Jie Chen, Yike Guo

- [Survey] A Survey on Masked Autoencoder for Self-supervised Learning in Vision and Beyond Chaoning Zhang, Chenshuang Zhang, Junha Song, John Seon Keun Yi, Kang Zhang, In So Kweon

- Masked Imitation Learning: Discovering Environment-Invariant Modalities in Multimodal Demonstrations

Yilun Hao, Ruinan Wang, Zhangjie Cao, Zihan Wang, Yuchen Cui, Dorsa Sadigh

- Real-World Robot Learning with Masked Visual Pre-training

Ilija Radosavovic, Tete Xiao, Stephen James, Pieter Abbeel, Jitendra Malik, Trevor Darrell

- Self-supervised Video Representation Learning with Motion-Aware Masked Autoencoders

Haosen Yang, Deng Huang, Bin Wen, Jiannan Wu, Hongxun Yao, Yi Jiang, Xiatian Zhu, Zehuan Yuan

- MAEEG: Masked Auto-encoder for EEG Representation Learning Hsiang-Yun Sherry Chien, Hanlin Goh, Christopher M. Sandino, Joseph Y. Cheng

- Masked Autoencoding for Scalable and Generalizable Decision Making

Fangchen Liu, Hao Liu, Aditya Grover, Pieter Abbeel

- MHCCL: Masked Hierarchical Cluster-wise Contrastive Learning for Multivariate Time Series

Qianwen Meng, Hangwei Qian, Yong Liu, Yonghui Xu, Zhiqi Shen, Lizhen Cui

- Advancing Radiograph Representation Learning with Masked Record Modeling

Hong-Yu Zhou, Chenyu Lian, Liansheng Wang, Yizhou Yu

- FlowFormer++: Masked Cost Volume Autoencoding for Pretraining Optical Flow Estimation Xiaoyu Shi, Zhaoyang Huang, Dasong Li, Manyuan Zhang, Ka Chun Cheung, Simon See, Hongwei Qin, Jifeng Dai, Hongsheng Li

- Traj-MAE: Masked Autoencoders for Trajectory Prediction Hao Chen, Jiaze Wang, Kun Shao, Furui Liu, Jianye Hao, Chenyong Guan, Guangyong Chen, Pheng-Ann Heng

- Self-supervised Pre-training with Masked Shape Prediction for 3D Scene Understanding Li Jiang, Zetong Yang, Shaoshuai Shi, Vladislav Golyanik, Dengxin Dai, Bernt Schiele

- Add code links

- Add author lists

- Add conference/journal venues

- Add more illustrative figures