This project performs real-time style transfer in a video game.

We train the model in TensorFlow and run the network's forward pass in the Unity environment.

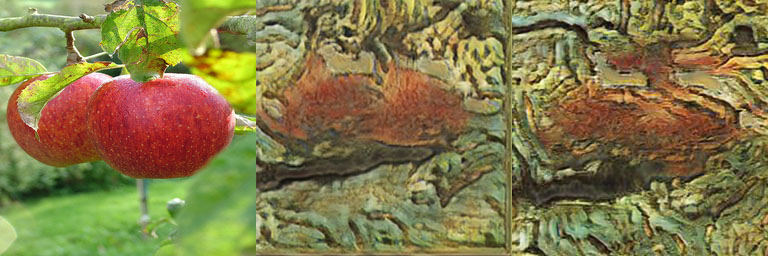

As shown in the picture, the left is the original image, the middle is the Unity transfer result, and the right is the TensorFlow inference result.
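The figure compares the Unity and TensorFlow outputs visually. One way to check numerically that they agree is to measure the pixel difference between them. The sketch below is an illustration only and is not part of the project. It assumes both results are available as same-sized 8-bit RGB arrays (for example, loaded with PIL). The helper name `compare_outputs` is hypothetical.

```python
import numpy as np


def compare_outputs(unity_img, tf_img):
    """Return (max absolute difference, PSNR in dB) between two uint8 images.

    Hypothetical helper: both arguments are HxWx3 uint8 arrays of equal shape.
    """
    a = np.asarray(unity_img, dtype=np.float64)
    b = np.asarray(tf_img, dtype=np.float64)
    if a.shape != b.shape:
        raise ValueError("images must have the same shape")
    diff = np.abs(a - b)
    mse = np.mean(diff ** 2)
    # Identical images have zero error, so the PSNR is infinite.
    psnr = float("inf") if mse == 0 else 10.0 * np.log10(255.0 ** 2 / mse)
    return float(diff.max()), psnr


if __name__ == "__main__":
    rng = np.random.RandomState(0)
    tf_out = rng.randint(0, 256, size=(256, 256, 3)).astype(np.uint8)
    # Simulate a small rounding error, such as one introduced by lower precision.
    unity_out = np.clip(tf_out.astype(np.int16) + 1, 0, 255).astype(np.uint8)
    print(compare_outputs(unity_out, tf_out))
```

A small maximum difference and a high PSNR suggest that the Unity forward pass reproduces the TensorFlow network. A large difference usually points to a mismatched layer or argument.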

We render the effect as a post-processing pass.

It is implemented in the scene named forest in the project; the stylized effect is displayed in the bottom-right corner of the preview picture.

Requirements:

- Unity 2018.2
- Python 2.7 or 3.5
- TensorFlow 1.7 or newer
- PIL, numpy, scipy, cv2
- tqdm

Git branches:

- master: the complete implementation, at the expense of performance. About 3 FPS on a PC with a GeForce GTX 1060 3GB.
- fast: some less important layers are removed for performance. About 29 FPS on a PC with a GeForce GTX 1060 3GB.
- half: converts the float type to the half type in the compute shaders to use less memory.
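The memory saving in the `half` branch comes from storing each value in 16 bits instead of 32. The sketch below uses NumPy's `float16` as a stand-in for the shader `half` type. This is an assumption: whether a GPU actually stores `half` at 16 bits depends on the platform. The sketch shows both the halved buffer size and the rounding error that the conversion introduces. The tensor shape is illustrative only.

```python
import numpy as np

# Illustrative activation tensor: 256x256 spatial size, 32 channels (assumed shape).
activations = np.random.RandomState(0).standard_normal((256, 256, 32)).astype(np.float32)

# Convert to 16-bit floats, analogous to switching float -> half in a compute shader.
activations_half = activations.astype(np.float16)

print("float32 bytes:", activations.nbytes)
print("float16 bytes:", activations_half.nbytes)

# Precision cost: convert back to float32 and measure the worst-case error.
max_err = np.max(np.abs(activations - activations_half.astype(np.float32)))
print("max round-trip error:", max_err)
```

The buffer shrinks by exactly half. The error stays small for values of moderate magnitude, which is why a network can often tolerate the conversion.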

To export the arguments of the trained network, run:

```bash
python main.py \
    --model_name=model_van-gogh \
    --phase=export_arg \
    --image_size=256
```

You will find args.bytes generated in the unity/Assets/Resources/ directory.

The file stores the arguments of the neural network trained in TensorFlow.

Note: the file format is not protobuf; it is self-defined.

Preprocess the bytes to generate map.assets for efficiency. Open the Unity editor and run Tools->GenerateMap; the file will be generated.

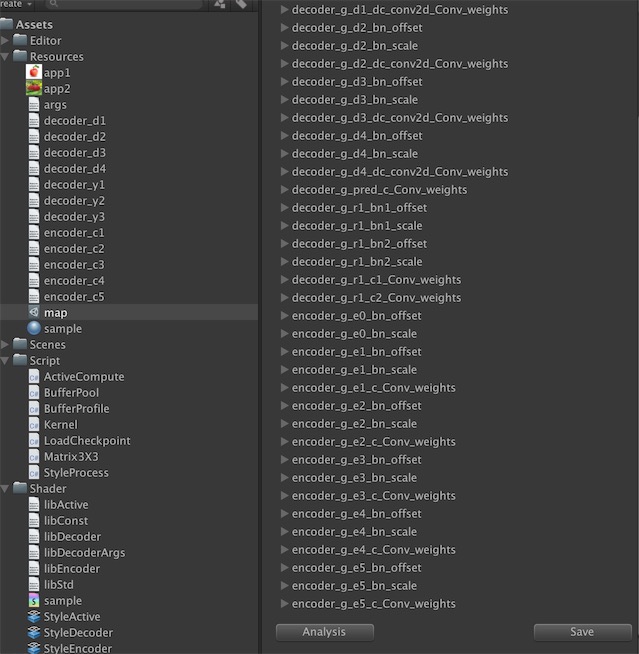

Click the analysis button to fill the file with the args.bytes map info. Then click the save button to serialize the map from memory to disk.
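The README does not document the layout of `args.bytes` or of the map that Tools->GenerateMap builds. The sketch below only illustrates the idea, under an invented layout. The file is treated as a sequence of named float32 tensors. Each tensor is stored as:

- a name length
- the UTF-8 name
- a dimension count
- the dimensions
- the raw little-endian values

The analysis pass scans the file once and records each tensor's byte offset and shape. Later lookups can then seek straight to the data instead of re-parsing the whole file. The layout and all function names (`write_args`, `build_map`, `read_tensor`) are hypothetical.

```python
import io
import struct

import numpy as np


def write_args(stream, tensors):
    """Serialize a list of (name, array) pairs in a hypothetical little-endian layout."""
    for name, array in tensors:
        data = np.ascontiguousarray(array, dtype="<f4")
        encoded = name.encode("utf-8")
        stream.write(struct.pack("<I", len(encoded)))
        stream.write(encoded)
        stream.write(struct.pack("<I", data.ndim))
        stream.write(struct.pack("<%dI" % data.ndim, *data.shape))
        stream.write(data.tobytes())


def build_map(stream):
    """Scan the stream once and return {name: (offset_of_values, shape)}."""
    index = {}
    while True:
        header = stream.read(4)
        if not header:
            break  # end of file
        (name_len,) = struct.unpack("<I", header)
        name = stream.read(name_len).decode("utf-8")
        (ndim,) = struct.unpack("<I", stream.read(4))
        shape = tuple(struct.unpack("<%dI" % ndim, stream.read(4 * ndim)))
        index[name] = (stream.tell(), shape)
        stream.seek(4 * int(np.prod(shape)), io.SEEK_CUR)  # skip the float32 values
    return index


def read_tensor(stream, index, name):
    """Seek directly to one tensor using the prebuilt map."""
    offset, shape = index[name]
    stream.seek(offset)
    count = int(np.prod(shape))
    return np.frombuffer(stream.read(4 * count), dtype="<f4").reshape(shape)
```

Saving the index alongside the data plays the role of the "save" step: loading it is cheaper than rescanning the byte file on every run.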

python main.py \

--model_name=model_van-gogh \

--phase=export_layers \

--image_size=256You can also download this dataset from baidu clound disk with extraction code 6thf, and import to unity env.

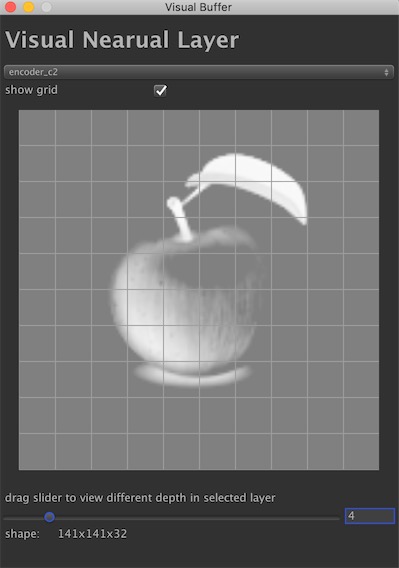

If you visual layer data as image, you can use tool in unity, and click Tools->LayerVisual, Then you will get tool like this:
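Turning layer data into an image usually involves two steps. Each channel of an activation map is normalized to the 0-255 range, and the channels are then tiled into a grid. The NumPy sketch below shows that idea. It is not the LayerVisual tool's code. It assumes the layer data is an H x W x C float array, and `layer_to_grid` is a hypothetical name.

```python
import numpy as np


def layer_to_grid(layer, columns=8):
    """Tile an HxWxC activation map into one grayscale uint8 image."""
    height, width, channels = layer.shape
    rows = -(-channels // columns)  # ceiling division
    grid = np.zeros((rows * height, columns * width), dtype=np.uint8)
    for c in range(channels):
        channel = layer[:, :, c].astype(np.float64)
        low, high = channel.min(), channel.max()
        # Per-channel min-max normalization; a constant channel maps to 0.
        scale = 255.0 / (high - low) if high > low else 0.0
        tile = np.round((channel - low) * scale).astype(np.uint8)
        r, col = divmod(c, columns)
        grid[r * height:(r + 1) * height, col * width:(col + 1) * width] = tile
    return grid
```

For example, a 64 x 64 x 32 layer with the default 8 columns becomes a 256 x 512 image. PIL (already a dependency) can save it with `Image.fromarray(grid).save(...)`.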

To run inference in TensorFlow:

```bash
python main.py \
    --model_name=model_van-gogh \
    --phase=inference \
    --image_size=256
```

The generated picture will be placed in the model folder.

Content images used for training: Microsoft COCO dataset train images (13GB).

Style images used for training the aforementioned models: download link.

Query style examples used to collect style images: query_style_images.tar.gz.

- Download and extract the style archives into the folder ./data.
- Download and extract the content images.
- Launch the training process (for example, on van Gogh):

```bash
CUDA_VISIBLE_DEVICES=1 python main.py \
    --model_name=model_van-gogh-new \
    --batch_size=1 \
    --phase=train \
    --image_size=256 \
    --lr=0.0002 \
    --dsr=0.8 \
    --ptcd=/data/micro_coco_dataset \
    --ptad=./data/vincent-van-gogh_road-with-cypresses-1890
```
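The README does not explain `--dsr`. The sketch below assumes it is a discriminator success-rate threshold, which is how this flag is used in adversarial style-transfer training code with the same command line. Under that assumption, each step compares a moving average of the discriminator's accuracy with the threshold:

- If the average is at or above the threshold, the step updates the generator.
- Otherwise, the step updates the discriminator.

The function `choose_updates` and the smoothing factor `alpha` are hypothetical. The accuracies are supplied by the caller instead of coming from a real network.

```python
def choose_updates(accuracies, dsr=0.8, alpha=0.05):
    """Return which network ("generator" or "discriminator") each step would update.

    Hypothetical sketch: `accuracies` holds the discriminator's accuracy at each step,
    and `success` is an exponential moving average of it, initialised to `dsr`.
    """
    success = dsr
    schedule = []
    for acc in accuracies:
        if success >= dsr:
            # The discriminator wins often enough: let the generator catch up.
            schedule.append("generator")
        else:
            # The discriminator is too weak: give it another update.
            schedule.append("discriminator")
        success = (1.0 - alpha) * success + alpha * acc
    return schedule
```

With this scheme, a higher `dsr` keeps the discriminator stronger before the generator is allowed to train.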

In the encoder and decoder networks, groups are assigned to thread-z first.

Because thread-z is limited to 64, we exchange thread-x and thread-z in the batch-normalization stage.

However, thread-x is also limited to 1024 in a compute shader.
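The constraint being worked around is the compute shader's thread-group size limit. This sketch assumes the Direct3D 11 (cs_5_0) limits on `[numthreads(x, y, z)]`:

- x and y are at most 1024.
- z is at most 64.
- x * y * z is at most 1024 in total.

The sketch checks a thread-group size and applies the x/z exchange described above. The function names, and the idea of passing a single group count, are hypothetical. The project's actual dispatch code may differ.

```python
# Direct3D 11 (cs_5_0) limits on [numthreads(x, y, z)].
MAX_X, MAX_Y, MAX_Z, MAX_TOTAL = 1024, 1024, 64, 1024


def is_valid(x, y, z):
    """True if the thread-group size is legal under the cs_5_0 limits."""
    return (1 <= x <= MAX_X and 1 <= y <= MAX_Y and 1 <= z <= MAX_Z
            and x * y * z <= MAX_TOTAL)


def layout_threads(groups, y=1):
    """Hypothetical layout: put `groups` on thread-z, moving it to thread-x if z would exceed 64."""
    x, z = 1, groups
    if z > MAX_Z:
        x, z = z, x  # exchange thread-x and thread-z
    if not is_valid(x, y, z):
        raise ValueError("no legal thread-group layout for %d groups" % groups)
    return x, y, z
```

For example, `layout_threads(32)` stays on z as `(1, 1, 32)`, while `layout_threads(256)` becomes `(256, 1, 1)`. Anything above 1024 still fails, which matches the remaining thread-x limit.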

During training, the model_name argument must differ from the one used for inference; otherwise training will start from the already trained model.

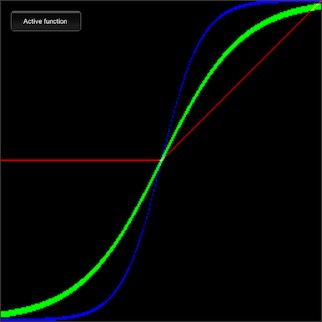

open this project with unity2018, then you can see all active function implments in the scene named ActiveFunc.

Run the unity, and click the button named Active function, you will see the behaviour like this:

We draw the 3 kinds of activation function using the R, G and B channels.

R stands for ReLU, G stands for sigmoid, and B stands for tanh.
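For reference, the three activation functions are:

- ReLU(x) = max(x, 0)
- sigmoid(x) = 1 / (1 + e^(-x))
- tanh(x)

The NumPy sketch below evaluates them over a range of inputs and stacks the results as R, G and B, mirroring the channel assignment above. The README does not say how the demo scene maps tanh's negative values to colour, so this sketch applies no such mapping.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def activation_rgb(x):
    """Stack relu, sigmoid and tanh of x into the R, G and B channels."""
    return np.stack([relu(x), sigmoid(x), np.tanh(x)], axis=-1)


x = np.linspace(-3.0, 3.0, 7)
rgb = activation_rgb(x)  # shape (7, 3): one RGB triple per input value
```

ReLU is unbounded above, sigmoid stays in (0, 1), and tanh stays in (-1, 1). This is why the three curves look so different when drawn on the same channels.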

Email: peng_huailiang@qq.com