Andrea Avogaro, Andrea Toaiari, Federico Cunico, Xiangmin Xu, Haralambos Dafas, Alessandro Vinciarelli, Emma Li, and Marco Cristani

This is the official code page for the Human from an Articulated Robot Perspective (HARPER) dataset!

Abstract:

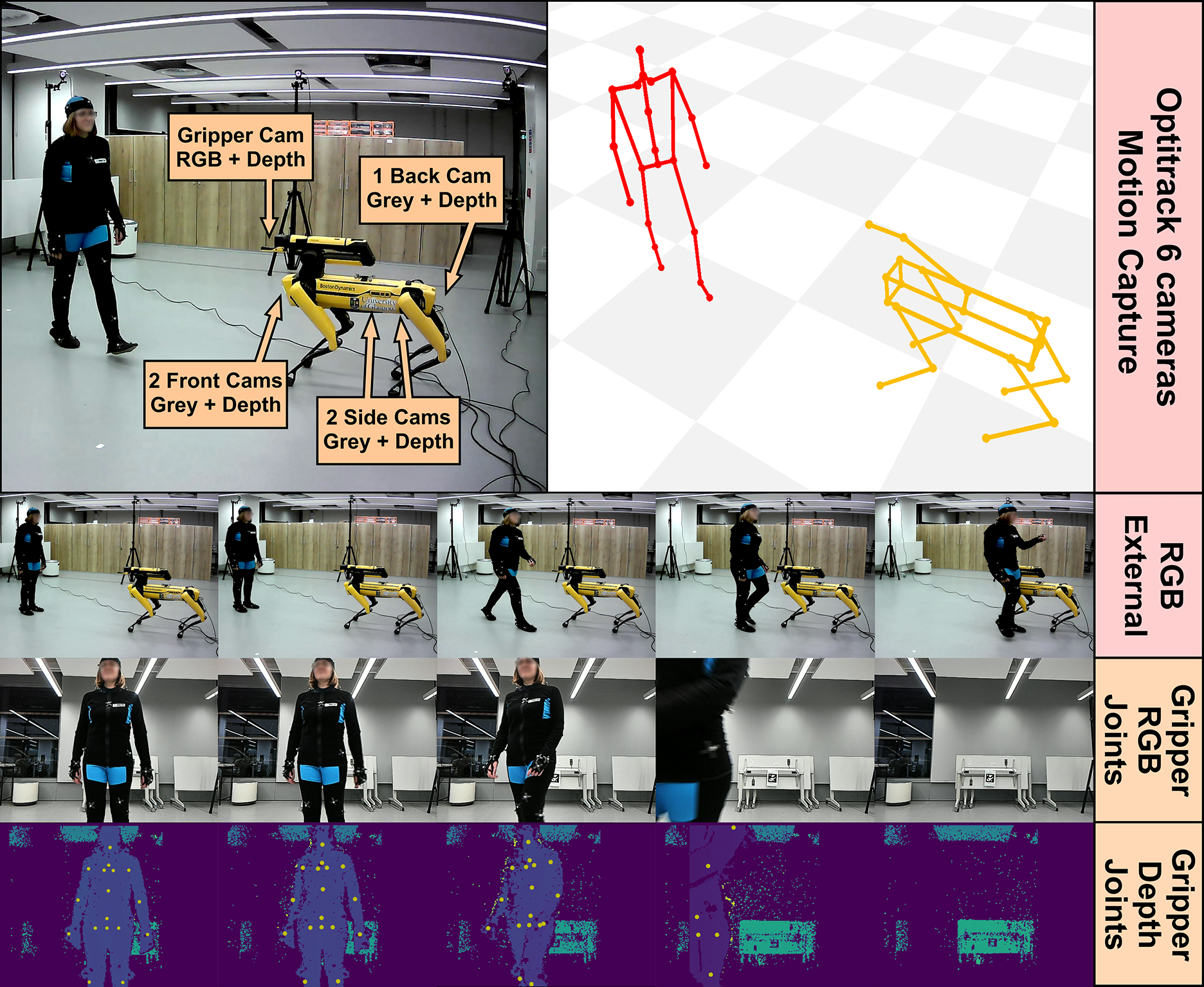

We introduce HARPER, a novel dataset for 3D body pose estimation and forecasting in dyadic interactions between users and Spot, the quadruped robot manufactured by Boston Dynamics. The key novelty is the focus on the robot's perspective, i.e., on the data captured by the robot's sensors. These sensors make 3D body pose analysis challenging because, being close to the ground, they capture humans only partially. The scenario underlying HARPER includes 15 actions, 10 of which involve physical contact between the robot and users. The corpus contains not only the recordings of Spot's built-in stereo cameras, but also those of a 6-camera OptiTrack system (all recordings are synchronized). This yields ground-truth skeletal representations with sub-millimeter precision. In addition, the corpus includes reproducible benchmarks on 3D Human Pose Estimation, Human Pose Forecasting, and Collision Prediction, all based on publicly available baseline approaches. This enables future HARPER users to rigorously compare their results with those we provide in this work.

Run the following command to download the dataset:

```bash
PYTHONPATH=. python download/harper_downloader.py --dst_folder ./data
```

Data structure:

-coming soon-

The dataset has two points of view: the panoptic point of view and the robot's perspective. The former is obtained with a 6-camera OptiTrack MoCap system, which makes it possible to express the human skeleton pose (21 joints × 3 coordinates) and the Spot skeleton in the same 3D reference system.
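Because both skeletons are expressed in the same reference system, human-robot proximity can be measured directly from the joint coordinates. The sketch below finds the closest human-Spot joint pair in a single frame. `min_human_spot_distance` is a hypothetical helper, and it assumes both skeletons use the same length unit (the unit is not stated here).

```python
import math
from typing import Sequence, Tuple

Point3D = Sequence[float]


def min_human_spot_distance(
    human_joints: Sequence[Point3D], spot_joints: Sequence[Point3D]
) -> Tuple[float, int, int]:
    """Return (distance, human_joint_index, spot_joint_index) of the closest pair.

    Hypothetical helper: assumes both skeletons are expressed in the same
    3D reference system and in the same length unit.
    """
    best = (math.inf, -1, -1)
    for i, h in enumerate(human_joints):
        for j, s in enumerate(spot_joints):
            d = math.dist(h, s)  # Euclidean distance (Python 3.8+)
            if d < best[0]:
                best = (d, i, j)
    return best


# Toy example with two human joints and two Spot joints
print(min_human_spot_distance([(0, 0, 0), (10, 10, 10)], [(3, 4, 0), (100, 0, 0)]))
# → (5.0, 0, 0)
```

With the documented skeleton sizes this is 21 × 22 = 462 pairs per frame; over long sequences, a vectorized NumPy version would be faster.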

For the sake of completeness, we provide the 3D panoptic data here. To download and create the data structure with train and test splits you can use the following code:

```bash
PYTHONPATH=. python download/harper_only_3d_downloader.py --dst_folder ./data
```

This will generate the following tree structure:

```text
data
├── harper_3d_120
│   ├── test
│   │   ├── subj_act_120hz.pkl
│   │   ├── ...
│   │   └── subj_act_120hz.pkl
│   └── train
│       ├── subj_act_120hz.pkl
│       ├── ...
│       └── subj_act_120hz.pkl
└── harper_3d_30
    ├── test
    │   ├── subj_act_30hz.pkl
    │   ├── ...
    │   └── subj_act_30hz.pkl
    └── train
        ├── subj_act_30hz.pkl
        ├── ...
        └── subj_act_30hz.pkl
```
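The folder tree can also be indexed programmatically. This sketch assumes file names follow the `subject_action_<rate>hz.pkl` pattern suggested by the tree and by the example file `cun_act1_30hz.pkl`, with no underscores inside the subject or action names. `index_split`, `HarperFile`, and `FILENAME_RE` are hypothetical names, not part of the repository.

```python
import re
from pathlib import Path
from typing import List, NamedTuple

# Assumed pattern: <subject>_<action>_<rate>hz.pkl, e.g. "cun_act1_30hz.pkl"
FILENAME_RE = re.compile(r"^(?P<subject>[^_]+)_(?P<action>[^_]+)_(?P<rate>\d+)hz\.pkl$")


class HarperFile(NamedTuple):
    path: Path
    subject: str
    action: str
    rate_hz: int


def index_split(root: Path, rate_hz: int, split: str) -> List[HarperFile]:
    """List the .pkl files of one split, e.g. data/harper_3d_30/train."""
    folder = Path(root) / f"harper_3d_{rate_hz}" / split
    if not folder.is_dir():
        return []  # split not downloaded (or wrong root)
    files = []
    for path in sorted(folder.glob("*.pkl")):
        match = FILENAME_RE.match(path.name)
        if match is None:
            continue  # skip files that do not follow the assumed pattern
        files.append(HarperFile(path, match["subject"], match["action"], int(match["rate"])))
    return files
```

For example, `index_split(Path("./data"), 30, "train")` would list the 30 Hz training files along with their subject and action identifiers.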

The `harper_3d_120` and `harper_3d_30` folders contain the 3D panoptic data at 120 Hz and 30 Hz, respectively, each with train and test splits.
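Frame indices map to time through the sampling rate: one frame lasts 1/120 s (about 8.3 ms) at 120 Hz and 1/30 s (about 33.3 ms) at 30 Hz, so one 30 Hz step spans four 120 Hz steps. The sketch below assumes frame 0 is at t = 0 and that a 30 Hz sequence can be approximated by keeping every fourth 120 Hz frame. The README does not say how the provided 30 Hz files were produced, so treat the hypothetical `subsample` helper as a tool for your own experiments, not as a reconstruction of them.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def frame_to_seconds(frame: int, rate_hz: int) -> float:
    """Elapsed time of a frame index, assuming frame 0 is at t = 0."""
    return frame / rate_hz


def subsample(frames: Sequence[T], src_hz: int = 120, dst_hz: int = 30) -> List[T]:
    """Keep every (src_hz // dst_hz)-th frame, starting from the first one."""
    if src_hz % dst_hz != 0:
        raise ValueError("src_hz must be an integer multiple of dst_hz")
    step = src_hz // dst_hz  # 120 // 30 = 4
    return list(frames[::step])


print(frame_to_seconds(60, 120), frame_to_seconds(15, 30))  # → 0.5 0.5
print(subsample(list(range(12))))  # → [0, 4, 8]
```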

Each `.pkl` file contains a dictionary that maps the frame index to an entry with the following fields:

- `frame`: the frame index
- `subject`: the subject ID
- `action`: the action ID
- `human_joints_3d`: the 3D human pose (21 × 3)
- `spot_joints_3d`: the 3D Spot pose (22 × 3)
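A minimal sketch for reading one file and checking the documented shapes. It assumes each value in the dictionary is itself a dictionary keyed by the field names listed above, and that frame indices are sortable. `load_harper_pkl` and `iter_poses` are hypothetical helpers, not part of the repository.

```python
import pickle
from typing import Any, Dict, Iterator, Tuple

HUMAN_JOINTS, SPOT_JOINTS = 21, 22  # documented shapes: (21, 3) and (22, 3)

Record = Dict[str, Any]


def load_harper_pkl(path: str) -> Dict[Any, Record]:
    """Load one HARPER .pkl file (frame index -> per-frame entry)."""
    with open(path, "rb") as f:
        return pickle.load(f)


def _check_shape(joints: Any, n_joints: int, name: str) -> None:
    # len() works for nested lists and NumPy arrays alike
    if len(joints) != n_joints or any(len(j) != 3 for j in joints):
        raise ValueError(f"{name}: expected shape ({n_joints}, 3)")


def iter_poses(data: Dict[Any, Record]) -> Iterator[Tuple[Any, Any, Any]]:
    """Yield (frame, human_joints_3d, spot_joints_3d) in frame order."""
    for key in sorted(data):
        record = data[key]
        _check_shape(record["human_joints_3d"], HUMAN_JOINTS, "human_joints_3d")
        _check_shape(record["spot_joints_3d"], SPOT_JOINTS, "spot_joints_3d")
        yield record["frame"], record["human_joints_3d"], record["spot_joints_3d"]
```

For example, `iter_poses(load_harper_pkl("./data/harper_3d_30/train/cun_act1_30hz.pkl"))` would walk one training sequence frame by frame.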

A torch dataloader will be provided soon.
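Until the official dataloader is released, a forecasting-style dataset can be sketched as sliding windows over one sequence. `PoseWindowDataset` is a hypothetical class, not the upcoming HARPER loader; it assumes the dictionary structure described above and contiguous frame indices. It implements `__len__` and `__getitem__`, the map-style interface that `torch.utils.data.DataLoader` accepts, so the sketch itself runs without PyTorch installed.

```python
from typing import Any, Dict, List, Tuple


class PoseWindowDataset:
    """Hypothetical map-style dataset of (observed, future) human-pose windows."""

    def __init__(self, data: Dict[Any, Dict[str, Any]], n_obs: int, n_pred: int):
        if n_obs < 1 or n_pred < 1:
            raise ValueError("n_obs and n_pred must be positive")
        # Sort by frame index so each window is temporally ordered
        # (assumes there are no gaps in the frame indices).
        self.poses: List[Any] = [data[k]["human_joints_3d"] for k in sorted(data)]
        self.n_obs, self.n_pred = n_obs, n_pred
        self.window = n_obs + n_pred

    def __len__(self) -> int:
        # Number of full windows that fit in the sequence
        return max(0, len(self.poses) - self.window + 1)

    def __getitem__(self, idx: int) -> Tuple[List[Any], List[Any]]:
        if not 0 <= idx < len(self):
            raise IndexError(idx)
        clip = self.poses[idx : idx + self.window]
        return clip[: self.n_obs], clip[self.n_obs :]


toy = {i: {"human_joints_3d": i} for i in range(10)}  # integers stand in for poses
ds = PoseWindowDataset(toy, n_obs=3, n_pred=2)
print(len(ds), ds[2])  # → 6 ([2, 3, 4], [5, 6])
```

In practice, stacking each window into an array or tensor inside `__getitem__` lets the default collate function produce batches of shape (batch, frames, 21, 3).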

To visualize the 3D panoptic data you can use the following code:

```bash
PYTHONPATH=. python tools/visualization/visualize_3d.py --pkl_file ./data/harper_3d_30/train/cun_act1_30hz.pkl
```

Due to limitations of the Spot SDK at the time of recording, the 2D keypoint annotations were manually verified and, where necessary, corrected so that they are synchronized with the frame rate achieved by Spot. For the 3D pose estimation pipeline, we lift the 2D keypoints using the depth values (see the key `visibles_3d` in the annotations).
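Lifting a 2D keypoint with a depth value is commonly done by back-projecting it through a pinhole camera model. The README does not detail the authors' procedure or the structure of `visibles_3d`, so the sketch below only illustrates standard back-projection under stated assumptions: a pinhole camera with known intrinsics `fx`, `fy`, `cx`, `cy`, and one valid depth value per keypoint (for example, sampled from a depth image aligned with the image frame). `lift_keypoints` is a hypothetical helper.

```python
from typing import List, Sequence, Tuple


def lift_keypoints(
    keypoints_2d: Sequence[Tuple[float, float]],
    depths: Sequence[float],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Tuple[float, float, float]]:
    """Back-project pixel keypoints (u, v) with depth Z into camera coordinates.

    Pinhole model: X = (u - cx) * Z / fx,  Y = (v - cy) * Z / fy.
    The result is in the camera frame and in the unit of the depth values.
    """
    if len(keypoints_2d) != len(depths):
        raise ValueError("need exactly one depth value per keypoint")
    points = []
    for (u, v), z in zip(keypoints_2d, depths):
        points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


# Toy intrinsics (hypothetical values, not Spot's calibration)
print(lift_keypoints([(320, 240), (420, 240)], [2.0, 2.0], fx=500, fy=500, cx=320, cy=240))
# → [(0.0, 0.0, 2.0), (0.4, 0.0, 2.0)]
```

Keypoints whose depth is missing or zero (for example, when a body part is occluded or outside the depth sensor's range) would need to be filtered out before lifting.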