This repo contains a curated list of monocular relocalization algorithms, categorized into five classes based on the scene map they use. A comprehensive review can be found in our survey:

Jinyu Miao, Kun Jiang, Tuopu Wen, Yunlong Wang, Peijing Jia, Xuhe Zhao, Qian Cheng, Zhongyang Xiao, Jin Huang, Zhihua Zhong, Diange Yang, "A Survey on Monocular Re-Localization: From the Perspective of Scene Map Representation," arXiv preprint arXiv:2311.15643, 2023.

We welcome the community to submit pull requests or email us to add related monocular relocalization papers. We will keep making efforts to contribute to our community in the long term!

If you find this repository useful, please consider citing our survey and starring this repository. Feel free to share this repository with others!

- Other Relevant Survey

- Geo-tagged Frame Map

- Visual Landmark Map

- Point Cloud Map

- Vectorized HD Map

- Learned Implicit Map

- [AIR'15] Visual Simultaneous Localization and Mapping: A Survey paper

- [TRO'16] Visual place recognition: A survey paper

- [TRO'16] Past, Present, and Future of Simultaneous Localization And Mapping: Towards the Robust-Perception Age paper repo

- [PR'18] A survey on Visual-Based Localization: On the benefit of heterogeneous data paper

- [IJCAI'21] Where is your place, visual place recognition? paper

- [PR'21] Visual place recognition: A survey from deep learning perspective paper

- [TITS'22] The revisiting problem in simultaneous localization and mapping: A survey on visual loop closure detection paper

- [arxiv] General Place Recognition Survey: Towards the Real-world Autonomy Age paper

- [arxiv] A Survey on Visual Map Localization Using LiDARs and Cameras paper

- [arxiv] Visual and object geo-localization: A comprehensive survey paper

- [arxiv] A Survey on Deep Learning for Localization and Mapping: Towards the Age of Spatial Machine Intelligence paper repo

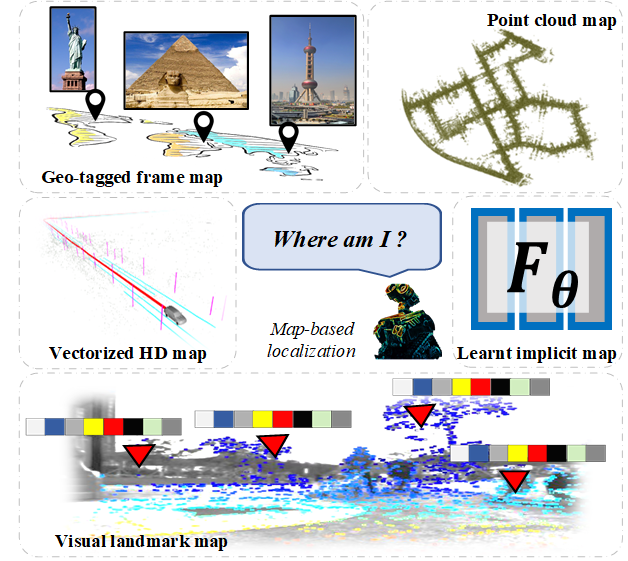

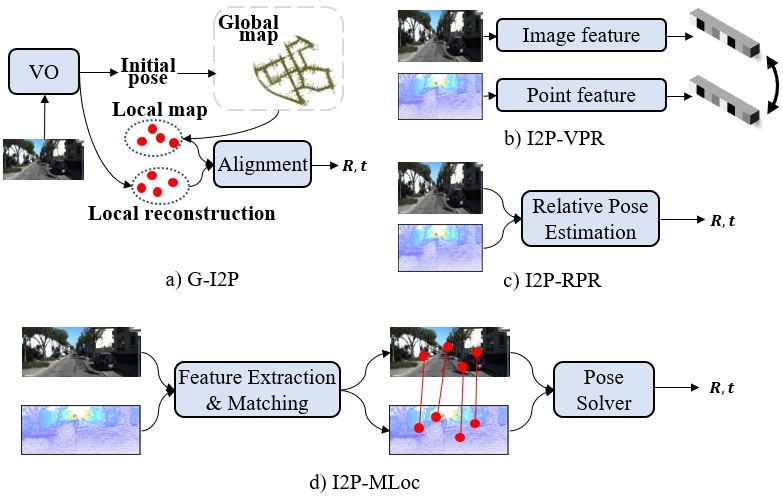

A Geo-tagged Frame Map is composed of posed keyframes, i.e., reference images with known camera poses.

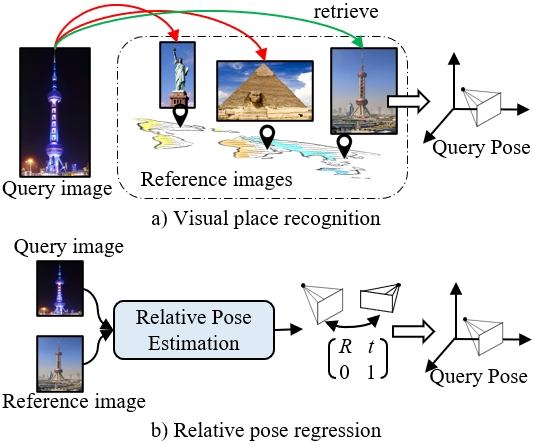

The related visual relocalization algorithms can be classified into two categories: Visual Place Recognition (VPR) and Relative Pose Estimation (RPR).

Given the current query image, VPR identifies a re-observed place by retrieving the most similar reference image(s) when the vehicle returns to a previously visited scene. It is often used as the coarse step of a hierarchical localization pipeline or as the Loop Closure Detection (LCD) module of a Simultaneous Localization and Mapping (SLAM) system. The pose of the retrieved reference image(s) can be regarded as an approximate pose of the current query image.
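The retrieval step can be sketched as a nearest-neighbor search over global image descriptors. This is a minimal sketch, assuming every keyframe already has a global descriptor (produced by any method listed below) and a 4x4 camera-to-world pose; `retrieve` and the toy data are hypothetical, and practical systems usually replace the brute-force search with an approximate nearest-neighbor index and add geometric verification.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale descriptors to unit length so that dot products equal cosine similarity."""
    norm = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.maximum(norm, 1e-12)


def retrieve(query_desc: np.ndarray, ref_descs: np.ndarray, ref_poses: np.ndarray, k: int = 1):
    """Return indices, similarities and poses of the k most similar keyframes.

    query_desc: (D,) global descriptor of the query image.
    ref_descs:  (N, D) global descriptors of the geo-tagged keyframes.
    ref_poses:  (N, 4, 4) camera-to-world poses of the keyframes.
    """
    sims = l2_normalize(ref_descs) @ l2_normalize(query_desc)  # cosine similarity per keyframe
    top = np.argsort(-sims)[:k]                                 # most similar first
    return top, sims[top], ref_poses[top]


# Toy map: three keyframes placed along the x-axis (hypothetical data).
ref_descs = np.array([[1.0, 0.0, 0.0, 0.0],
                      [0.0, 1.0, 0.0, 0.0],
                      [0.0, 0.0, 1.0, 0.0]])
ref_poses = np.tile(np.eye(4), (3, 1, 1))
ref_poses[:, :3, 3] = [[0.0, 0.0, 0.0], [5.0, 0.0, 0.0], [10.0, 0.0, 0.0]]

# A query revisiting the place of keyframe 1 (descriptor slightly perturbed).
query_desc = np.array([0.1, 0.9, 0.05, 0.0])
idx, sim, poses = retrieve(query_desc, ref_descs, ref_poses, k=1)
approx_position = poses[0, :3, 3]  # coarse position estimate for the query
```

In this toy example the query is matched to keyframe 1, so its approximate position is taken to be (5, 0, 0); a finer pose would then come from a subsequent relative or absolute pose estimation step.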

- [ICRA'00] Appearance-based place recognition for topological localization paper

- [CVPR'05] Histograms of oriented gradients for human detection paper

- [TRO'09] Biologically inspired mobile robot vision localization paper

- [CVPR'10] Aggregating local descriptors into a compact image representation paper

- [TPAMI'18] NetVLAD: CNN architecture for weakly supervised place recognition paper code(MATLAB) code(PyTorch)

- [TPAMI'19] Fine-tuning cnn image retrieval with no human annotation paper

- [IROS'19] Fast and Incremental Loop Closure Detection Using Proximity Graphs paper code

- [TNNLS'20] Spatial pyramid enhanced netvlad with weighted triplet loss for place recognition paper

- [RAL'20] Co-HoG: A light-weight, compute-efficient, and training-free visual place recognition technique for changing environments paper

- [PR'21] Vector of locally and adaptively aggregated descriptors for image feature representation paper

- [RAL'21] ESA-VLAD: A lightweight network based on second-order attention and netvlad for loop closure detection paper

- [CVPR'21] Patch-NetVLAD: Multi-scale fusion of locally-global descriptors for place recognition paper code

- [JFR'22] Fast and incremental loop closure detection with deep features and proximity graphs paper code

- [CVPR'22] TransVPR: Transformer-based place recognition with multi-level attention aggregation paper

- [WACV'23] MixVPR: Feature mixing for visual place recognition paper code

- [arxiv] Anyloc: Towards universal visual place recognition paper code

- [IJRR'08] FAB-MAP: Probabilistic localization and mapping in the space of appearance paper code

- [IJRR'11] Appearance-only SLAM at large scale with FAB-MAP 2.0 paper

- [IROS'11] Real-time loop detection with bags of binary words paper

- [TRO'12] Automatic visual Bag-of-Words for online robot navigation and mapping paper

- [TRO'12] Bags of binary words for fast place recognition in image sequences paper DBoW DBoW2 DBoW3

- [ICRA'15] IBuILD: Incremental bag of binary words for appearance based loop closure detection paper

- [RSS'15] Place recognition with convnet landmarks: Viewpoint-robust, condition-robust, training-free paper

- [IROS'15] On the performance of convnet features for place recognition paper

- [TRO'17] Hierarchical place recognition for topological mapping paper code

- [AuRo'18] BoCNF: Efficient image matching with bag of convnet features for scalable and robust visual place recognition paper

- [RAL'18] iBoW-LCD: An appearance-based loop-closure detection approach using incremental bags of binary words paper code

- [ICRA'18] Assigning visual words to places for loop closure detection paper code

- [RAL'19] Probabilistic appearance-based place recognition through bag of tracked words paper code

- [IROS'19] Fast and Incremental Loop Closure Detection Using Proximity Graphs paper code

- [IROS'19] Robust loop closure detection based on bag of superpoints and graph verification paper

- [BMVC'20] LiPo-LCD: Combining lines and points for appearance-based loop closure detection paper

- [TII'21] Semantic Loop Closure Detection With Instance-Level Inconsistency Removal in Dynamic Industrial Scenes paper

- [JFR'22] Automatic vocabulary and graph verification for accurate loop closure detection paper

- [TITS'22] Loop-closure detection using local relative orientation matching paper

- [PRL'22] Discriminative and semantic feature selection for place recognition towards dynamic environments paper

- [JFR'22] Fast and incremental loop closure detection with deep features and proximity graphs paper code

- [ICRA'12] SeqSLAM: Visual route-based navigation for sunny summer days and stormy winter nights paper

- [ICRA'17] Fast-SeqSLAM: A fast appearance based place recognition algorithm paper

- [RAL'20] Delta descriptors: Change-based place representation for robust visual localization paper code

- [RAL'21] Seqnet: Learning descriptors for sequence-based hierarchical place recognition paper code

- [RAL'22] Learning Sequential Descriptors for Sequence-Based Visual Place Recognition paper code

- [RAL'18] X-View: Graph-based semantic multi-view localization paper

- [TII'21] Semantic Loop Closure Detection With Instance-Level Inconsistency Removal in Dynamic Industrial Scenes paper

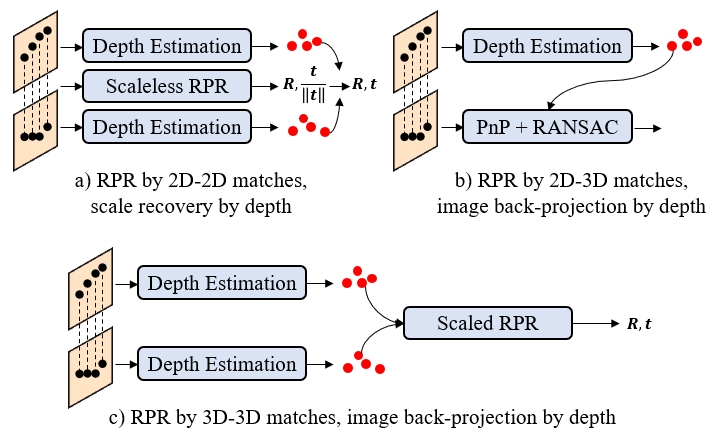

RPR methods aim to estimate the relative pose between the query image and the reference image(s) in the map.
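Once a relative pose is estimated, the absolute query pose follows by chaining it with the known pose of the reference image. Below is a minimal sketch with 4x4 homogeneous transforms, assuming the convention that `T_a_b` maps points from frame b into frame a; the helper names and numbers are illustrative only. Note that when the relative pose is recovered from an essential matrix, its translation is only known up to scale, so the metric scale must be obtained by other means.

```python
import numpy as np


def make_pose(R: np.ndarray, t) -> np.ndarray:
    """Pack a 3x3 rotation and a translation into a 4x4 homogeneous transform."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


def rot_z(deg: float) -> np.ndarray:
    """Rotation about the z-axis by `deg` degrees."""
    a = np.deg2rad(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


# Assumed convention: T_w_ref maps reference-camera coordinates into the world (map) frame,
# and T_ref_q maps query-camera coordinates into the reference-camera frame.
T_w_ref = make_pose(rot_z(90.0), [10.0, 0.0, 0.0])   # known pose of the reference image
T_ref_q = make_pose(rot_z(-90.0), [1.0, 0.0, 0.0])   # relative pose estimated by an RPR method

# Chaining the two transforms gives the absolute query pose in the map frame.
T_w_q = T_w_ref @ T_ref_q
```

Here the query ends up with an identity rotation at position (10, 1, 0): the 1 m offset along the reference camera's x-axis is rotated by 90 degrees into the world y-axis.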

- [ICRA'20] To learn or not to learn: Visual localization from essential matrices paper

- [ICCV'21] Calibrated and partially calibrated semi-generalized homographies paper

- [ECCV'22] Map-free visual relocalization: Metric pose relative to a single image paper project

- [arxiv'23] Lazy Visual Localization via Motion Averaging paper

- [ICCVW'17] Camera relocalization by computing pairwise relative poses using convolutional neural network paper

- [ACIVS'17] Relative camera pose estimation using convolutional neural networks paper

- [ECCV'18] Relocnet: Continuous metric learning relocalisation using neural nets paper

- [ECCVW'19] Rpnet: An end-to-end network for relative camera pose estimation paper

- [IROS'21] Distillpose: Lightweight camera localization using auxiliary learning paper

- [CVPR'21] Extreme rotation estimation using dense correlation volumes paper

- [CVPR'21] Wide-baseline relative camera pose estimation with directional learning paper

- [ICRA'21] Learning to localize in new environments from synthetic training data paper

- [ECCV'22] Map-free visual relocalization: Metric pose relative to a single image paper project

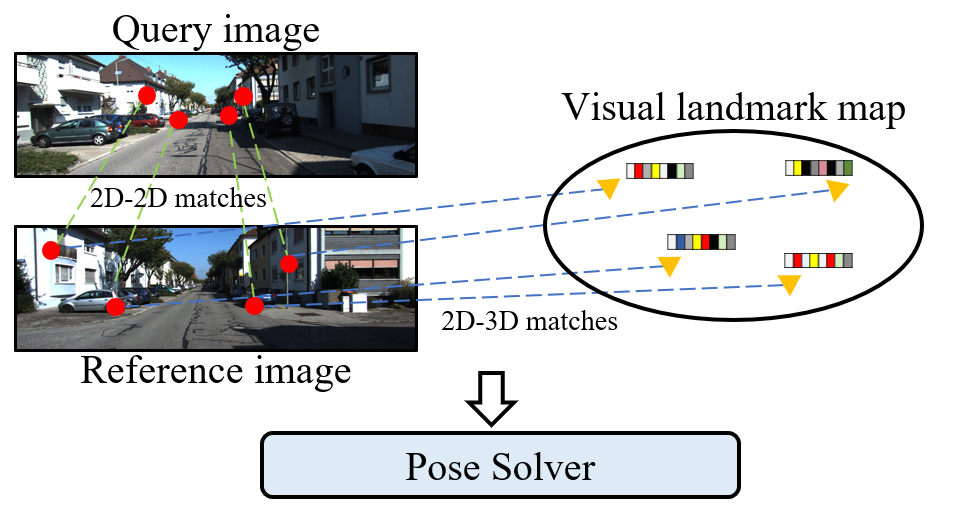

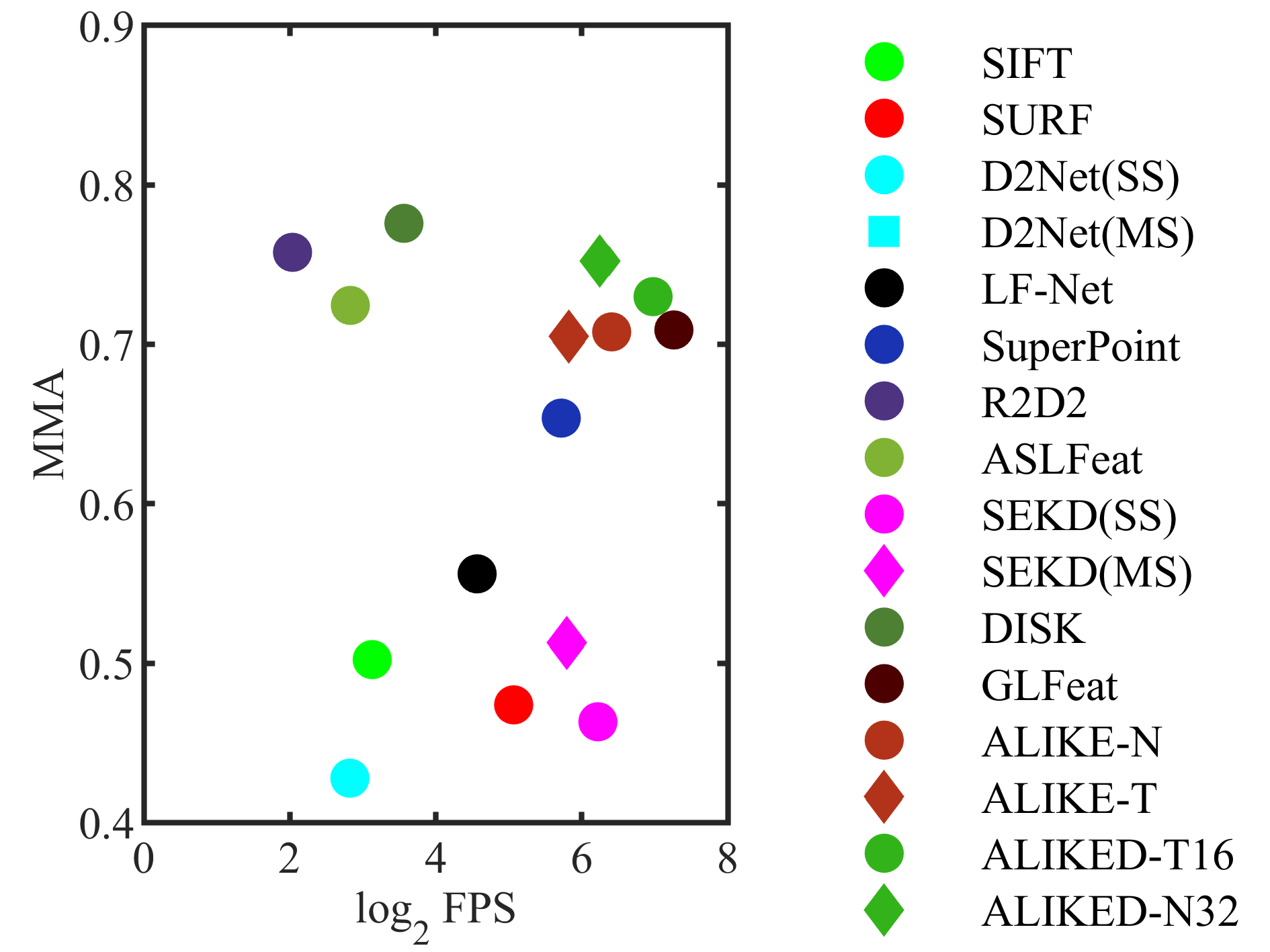

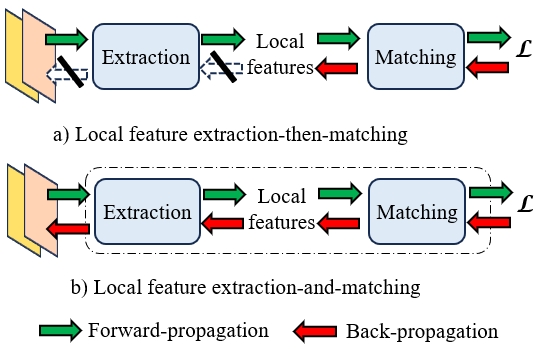

A Visual Landmark Map is composed of visual landmarks. Visual landmarks are informative and representative 3D points lifted from 2D pixels by 3D reconstruction, and each is associated with the corresponding local features (a 2D keypoint and a high-dimensional descriptor) in the reference images that observe it. During localization, the query image is first matched with reference image(s), and the resulting 2D-2D matches are lifted to 2D-3D matches between the query image and the visual landmark map. These 2D-3D matches are then used to solve the pose, at the scale of the map, as a typical Perspective-n-Point (PnP) problem.
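The lifting and PnP steps can be sketched as follows. This is a minimal, noise-free sketch under assumed data structures (`matches`, `ref_kp_to_landmark` and `landmarks` are hypothetical): it solves PnP with the plain linear Direct Linear Transform (DLT) on at least six non-coplanar correspondences and then projects the estimate onto a valid rotation. It is not any specific solver from the list below; practical pipelines usually run a minimal solver (e.g., P3P) inside RANSAC to reject wrong matches and then refine the pose non-linearly.

```python
import numpy as np


def lift_matches(matches, ref_kp_to_landmark, landmarks_xyz):
    """Turn 2D-2D matches into 2D-3D correspondences.

    matches: list of (query_kp_xy, ref_image_id, ref_kp_id) tuples (hypothetical format).
    ref_kp_to_landmark: dict (ref_image_id, ref_kp_id) -> landmark id.
    landmarks_xyz: dict landmark id -> 3D position in the map frame.
    """
    pts2d, pts3d = [], []
    for q_xy, img_id, kp_id in matches:
        lm = ref_kp_to_landmark.get((img_id, kp_id))
        if lm is None:  # this reference keypoint was never triangulated
            continue
        pts2d.append(q_xy)
        pts3d.append(landmarks_xyz[lm])
    return np.asarray(pts2d, float), np.asarray(pts3d, float)


def pnp_dlt(pts2d, pts3d, K):
    """Linear PnP (DLT): estimate R, t with x ~ K [R | t] X from n >= 6 correspondences."""
    uv1 = np.column_stack([pts2d, np.ones(len(pts2d))])
    xn = (np.linalg.inv(K) @ uv1.T).T  # normalized image coordinates
    rows = []
    for (x, y, _), X in zip(xn, pts3d):
        Xh = np.append(X, 1.0)
        rows.append(np.concatenate([Xh, np.zeros(4), -x * Xh]))
        rows.append(np.concatenate([np.zeros(4), Xh, -y * Xh]))
    _, _, Vt = np.linalg.svd(np.asarray(rows))
    P = Vt[-1].reshape(3, 4)             # [R | t] up to an unknown scale and sign
    U, S, Vt_r = np.linalg.svd(P[:, :3])
    R = U @ Vt_r                         # closest orthogonal matrix to P[:, :3]
    t = P[:, 3] / S.mean()               # undo the unknown scale
    if np.linalg.det(R) < 0:             # resolve the global sign ambiguity
        R, t = -R, -t
    return R, t


# Synthetic check: 20 landmarks observed by reference image 0 and re-observed by the query.
rng = np.random.default_rng(1)
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
a = np.deg2rad(10.0)
R_true = np.array([[np.cos(a), 0.0, np.sin(a)], [0.0, 1.0, 0.0], [-np.sin(a), 0.0, np.cos(a)]])
t_true = np.array([0.2, -0.1, 0.5])
landmarks = {i: p for i, p in enumerate(rng.uniform([-2, -2, 4], [2, 2, 8], size=(20, 3)))}
cam = np.array([R_true @ p + t_true for p in landmarks.values()])
uv = (K @ cam.T).T
uv = uv[:, :2] / uv[:, 2:]
ref_kp_to_landmark = {(0, i): i for i in range(20)}
matches = [(uv[i], 0, i) for i in range(20)] + [((100.0, 100.0), 0, 99)]  # kp 99 has no landmark
pts2d, pts3d = lift_matches(matches, ref_kp_to_landmark, landmarks)
R_est, t_est = pnp_dlt(pts2d, pts3d, K)
```

The match whose reference keypoint has no landmark is dropped during lifting, and with exact correspondences the DLT recovers the true rotation and translation up to floating-point error.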

- [IJCV'04] Distinctive image features from scale-invariant keypoints

- [CVIU'08] Speeded-up robust features (SURF)

- [ECCV'10] BRIEF: Binary robust independent elementary features

- [ICCV'11] BRISK: Binary robust invariant scalable keypoints

- [ICCV'11] ORB: An efficient alternative to sift or surf

- [NeurIPS'17] Working hard to know your neighbor's margins: Local descriptor learning loss paper code

- [ICCV'17] Large-scale image retrieval with attentive deep local features paper code

- [CVPRW'18] SuperPoint: Self-supervised interest point detection and description paper official code code code

- [ECCV'18] Geodesc: Learning local descriptors by integrating geometry constraints paper

- [CVPR'17] L2-net: Deep learning of discriminative patch descriptor in euclidean space paper code

- [CVPR'19] SoSNet: Second order similarity regularization for local descriptor learning paper code

- [CVPR'19] Contextdesc: Local descriptor augmentation with cross-modality context paper code

- [CVPR'19] D2-Net: A trainable CNN for joint description and detection of local features paper code

- [NeurIPS'19] R2D2: Repeatable and reliable detector and descriptor paper code

- [CVPR'20] ASLFeat: Learning local features of accurate shape and localization paper code

- [NeurIPS'20] DISK: Learning local features with policy gradient paper code

- [ICCV'21] Learning deep local features with multiple dynamic attentions for large-scale image retrieval paper code

- [IROS'21] RaP-Net: A region-wise and point-wise weighting network to extract robust features for indoor localization paper code

- [PRL'22] Discriminative and semantic feature selection for place recognition towards dynamic environments paper

- [TMM'22] ALIKE: Accurate and lightweight keypoint detection and descriptor extraction paper code

- [ICIRA'22] Real-time local feature with global visual information enhancement paper

- [AAAI'22] MTLDesc: Looking wider to describe better paper code

- [TIM'23] ALIKED: A lighter keypoint and descriptor extraction network via deformable transformation paper code

- [arxiv] SEKD: Self-evolving keypoint detection and description paper code

- [ECCV'08] A linear time histogram metric for improved sift matching paper

- [ECCV'08] Feature correspondence via graph matching: Models and global optimization paper

- [IJCV'10] Rejecting mismatches by correspondence function paper

- [PRL'12] Good match exploration using triangle constraint paper

- [ToG'14] Feature matching with bounded distortion [paper](https://dl.acm.org/doi/abs/10.1145/2602142)

- [TPAMI'14] Scalable nearest neighbor algorithms for high dimensional data paper

- [TIP'14] Robust point matching via vector field consensus paper

- [TVCG'15] Regularization based iterative point match weighting for accurate rigid transformation estimation paper

- [TIP'15] Matching images with multiple descriptors: An unsupervised approach for locally adaptive descriptor selection paper

- [TCSVT'15] Bb-homography: Joint binary features and bipartite graph matching for homography estimation paper

- [ECCV'16] Guided matching based on statistical optical flow for fast and robust correspondence analysis paper

- [CVPR'17] GMS: Grid-based motion statistics for fast, ultra-robust feature correspondence paper code

- [TPAMI'17] Efficient & Effective Prioritized Matching for Large-Scale Image-Based Localization paper

- [CVPR'17] Deep Semantic Feature Matching paper

- [TGRS'18] Guided locality preserving feature matching for remote sensing image registration paper

- [TPAMI'18] CODE: Coherence based decision boundaries for feature correspondence paper

- [TCSVT'18] Dual calibration mechanism based l2, p-norm for graph matching paper

- [TCSVT'18] Second- and high-order graph matching for correspondence problems paper

- [CVIU'18] Hierarchical semantic image matching using cnn feature pyramid paper

- [CVPR'18] Learning to find good correspondences paper code

- [CVPR'19] NM-Net: Mining reliable neighbors for robust feature correspondences paper code

- [IJCV'19] Locality preserving matching paper

- [ECCV'20] Handcrafted outlier detection revisited paper code

- [TCSVT'20] Image correspondence with cur decomposition-based graph completion and matching paper

- [CVPR'20] ACNe: Attentive context normalization for robust permutation-equivariant learning paper code

- [CVPR'20] SuperGlue: Learning feature matching with graph neural networks paper code

- [TPAMI'22] OANet: Learning two-view correspondences and geometry using order-aware network paper

- [TCSVT'22] Probabilistic Spatial Distribution Prior Based Attentional Keypoints Matching Network paper

- [ICCV'23] LightGlue: Local Feature Matching at Light Speed paper code

- [ECCV'20] Efficient neighbourhood consensus networks via submanifold sparse convolutions paper code

- [NeurIPS'20] Dual-resolution correspondence networks paper code

- [ICCV'21] COTR: Correspondence transformer for matching across images paper code

- [CVPR'21] LoFTR: Detector-free local feature matching with transformers paper code

- [TPAMI'22] NcNet: Neighbourhood consensus networks for estimating image correspondences paper

- [ECCV'22] Aspanformer: Detector-free image matching with adaptive span transformer paper code

- [ICCV'23] Occ^2net: Robust image matching based on 3d occupancy estimation for occluded regions paper

- [arxiv] Quadtree attention for vision transformers paper code

- [arxiv] Tkwinformer: Top k window attention in vision transformers for feature matching paper

- [TPAMI'92] Exact and approximate solutions of the perspective-three-point problem paper

- [ICCV'99] Camera pose and calibration from 4 or 5 known 3d points paper

- [TPAMI'99] Linear n-point camera pose determination paper

- [TPAMI'00] Fast and globally convergent pose estimation from video images paper

- [TPAMI'01] Efficient linear solution of exterior orientation paper

- [CVPR'08] A general solution to the p4p problem for camera with unknown focal length paper

- [IJCV'09] EPnP: An accurate o(n) solution to the pnp problem paper

- [IJPRAI'11] A stable direct solution of perspective-three-point problem paper

- [ICCV'11] A direct least-squares (DLS) method for pnp paper

- [TPAMI'12] A robust o(n) solution to the perspective-n-point problem paper

- [ICCV'13] Revisiting the pnp problem: A fast, general and optimal solution paper

- [ICRA'17] 6-dof object pose from semantic keypoints paper code

- [PRL'18] A simple, robust and fast method for the perspective-n-point problem paper

- [IROS'19] An efficient and accurate algorithm for the perspective-n-point problem paper

- [ECCV'20] A consistently fast and globally optimal solution to the perspective-n-point problem paper code

- [arxiv] MLPnP - a real-time maximum likelihood solution to the perspective-n-point problem paper code

- [RAL'21] Line as a visual sentence: Context-aware line descriptor for visual localization paper code

- [RAL'19] GN-Net: The gauss-newton loss for multi-weather relocalization paper code

- [TPAMI'21] Inloc: Indoor visual localization with dense matching and view synthesis paper code

- [ICCV'19] Is this the right place? geometric-semantic pose verification for indoor visual localization paper

- [CVPR'22] Scenesqueezer: Learning to compress scene for camera relocalization paper

- [ICCV'19] Is this the right place? geometric-semantic pose verification for indoor visual localization paper

- [TPAMI'21] Inloc: Indoor visual localization with dense matching and view synthesis paper code

- [ICCV'21] Pose correction for highly accurate visual localization in large-scale indoor spaces paper code

A Point Cloud Map contains only the 3D positions of points and their intensities (sometimes missing). Monocular localization in a point cloud map is also called cross-modal localization.
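Because a point cloud map carries no image descriptors, one building block used by some cross-modal methods is to render the map into the camera at a pose guess and compare the result with the query image. The sketch below renders a sparse depth image with a pinhole model and a simple z-buffer; the function name, the world-to-camera convention and the toy intrinsics are assumptions for illustration, and it ignores lens distortion and any occlusion reasoning beyond keeping the nearest point per pixel.

```python
import numpy as np


def project_to_depth(points_w, T_c_w, K, width, height):
    """Render a sparse depth image from a point cloud map at a candidate camera pose.

    points_w: (N, 3) map points in the world frame.
    T_c_w:    (4, 4) world-to-camera transform (assumed convention).
    K:        (3, 3) pinhole intrinsics.
    Returns a (height, width) array that is 0 where no point projects.
    """
    pts_h = np.column_stack([points_w, np.ones(len(points_w))])
    pts_c = (T_c_w @ pts_h.T).T[:, :3]
    pts_c = pts_c[pts_c[:, 2] > 0]  # keep only points in front of the camera
    uvw = (K @ pts_c.T).T
    u = np.floor(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.floor(uvw[:, 1] / uvw[:, 2]).astype(int)
    z = pts_c[:, 2]
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z = u[inside], v[inside], z[inside]
    depth = np.full((height, width), np.inf)
    np.minimum.at(depth, (v, u), z)  # z-buffer: nearest point wins when pixels repeat
    depth[np.isinf(depth)] = 0.0
    return depth


# Toy example: two points on the optical axis, one off-axis point, one behind the camera.
K = np.array([[100.0, 0.0, 32.0], [0.0, 100.0, 24.0], [0.0, 0.0, 1.0]])
points = np.array([[0.0, 0.0, 5.0], [0.0, 0.0, 10.0], [1.0, 0.0, 5.0], [0.0, 0.0, -3.0]])
depth = project_to_depth(points, np.eye(4), K, width=64, height=48)
```

In the toy map the two on-axis points fall on the same pixel and only the nearer one (depth 5) is kept, while the point behind the camera is discarded.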

- [IROS'14] Visual localization within lidar maps for automated urban driving paper

- [IROS'16] Monocular camera localization in 3d lidar maps paper

- [IROS'18] Stereo camera localization in 3d lidar maps paper

- [IROS'19] Metric monocular localization using signed distance fields paper

- [IROS'20] Monocular camera localization in prior lidar maps with 2d-3d line correspondences paper code

- [ICRA'20] Monocular direct sparse localization in a prior 3d surfel map paper

- [RAL'20] GMMLoc: Structure consistent visual localization with gaussian mixture models paper code

- [ICRA'20] Global visual localization in lidar-maps through shared 2d-3d embedding space paper

- [ICRA'21] Spherical multi-modal place recognition for heterogeneous sensor systems paper code

- [RSS'21] i3dLoc: Image-to-range cross-domain localization robust to inconsistent environmental conditions paper

- [IEEE Sensors Journal'23] Attention-enhanced cross-modal localization between spherical images and point clouds paper

- [arxiv] I2P-Rec: Recognizing images on large-scale point cloud maps through bird's eye view projections paper

- [ITSC'19] CMRNet: Camera to lidar-map registration paper code

- [ICRA'21] Hypermap: Compressed 3d map for monocular camera registration paper

- [RAL'24] Poses as Queries: End-to-End Image-to-LiDAR Map Localization With Transformers paper

- [ICRA'19] 2d3d-matchnet: Learning to match keypoints across 2d image and 3d point cloud paper

- [CVPR'21] Neural reprojection error: Merging feature learning and camera pose estimation paper code

- [CVPR'21] Back to the feature: Learning robust camera localization from pixels to pose paper code

- [CVPR'21] Deepi2p: Image-to-point cloud registration via deep classification paper code

- [RAL'22] EFGHNet: A versatile image-to-point cloud registration network for extreme outdoor environment paper

- [IJPRS'22] I2D-Loc: Camera localization via image to lidar depth flow paper

- [TCSVT'23] Corri2p: Deep image-to-point cloud registration via dense correspondence paper code

- [arxiv] CMRNet++: Map and camera agnostic monocular visual localization in lidar maps paper

- [arxiv] End-to-end 2d-3d registration between image and lidar point cloud for vehicle localization paper

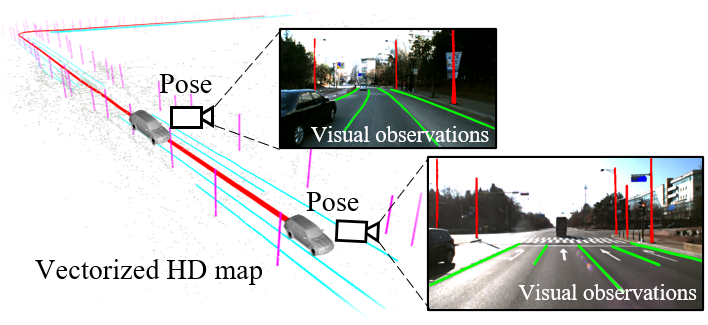

Localization features in an HD Map include dense point clouds and sparse map elements. Here we focus on the sparse map elements, which are usually represented as vectors with semantic labels.
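To illustrate what "vectors with semantic labels" can look like, the sketch below stores each map element as a label plus a 3D polyline and associates an observation with the nearest element of a given class by point-to-polyline distance, a simple building block for map-matching residuals. The `MapElement` schema and the label names are hypothetical and do not follow any particular HD map format.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class MapElement:
    """A sparse HD-map element: semantic label plus polyline vertices (illustrative schema)."""
    label: str            # e.g. "lane_divider" or "stop_line" (hypothetical label set)
    vertices: np.ndarray  # (M, 3) polyline vertices in the map frame


def point_to_polyline_distance(p: np.ndarray, vertices: np.ndarray) -> float:
    """Smallest Euclidean distance from point p to any segment of the polyline."""
    a, b = vertices[:-1], vertices[1:]
    ab = b - a
    # Orthogonal projection parameter onto each segment, clamped to the segment ends.
    denom = np.maximum(np.einsum("ij,ij->i", ab, ab), 1e-12)
    t = np.clip(np.einsum("ij,ij->i", p - a, ab) / denom, 0.0, 1.0)
    closest = a + t[:, None] * ab
    return float(np.min(np.linalg.norm(p - closest, axis=1)))


def nearest_element(p, elements, label=None):
    """Return (element, distance) of the closest element, optionally filtered by label."""
    candidates = [e for e in elements if label is None or e.label == label]
    dists = [point_to_polyline_distance(p, e.vertices) for e in candidates]
    i = int(np.argmin(dists))
    return candidates[i], dists[i]


elements = [
    MapElement("lane_divider", np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [20.0, 0.0, 0.0]])),
    MapElement("lane_divider", np.array([[0.0, 3.5, 0.0], [20.0, 3.5, 0.0]])),
    MapElement("stop_line", np.array([[20.0, -2.0, 0.0], [20.0, 5.0, 0.0]])),
]
# A hypothetical lane-marking detection already lifted to the ground plane.
obs = np.array([12.0, 0.4, 0.0])
elem, d = nearest_element(obs, elements, label="lane_divider")
```

The observation is associated with the first lane divider at a distance of 0.4 m; filtering by semantic label prevents it from being matched to, for example, a nearby stop line.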

- [CVPRW'08] Visual map matching and localization using a global feature map paper

- [IROS'13] Mapping and localization using gps, lane markings and proprioceptive sensors paper

- [IV'14] Video based localization for bertha paper

- [IV'17] Monocular localization in urban environments using road markings paper

- [TITS'18] Self-localization based on visual lane marking maps: An accurate low-cost approach for autonomous driving paper

- [Sensors'18] Integration of gps, monocular vision, and high definition (hd) map for accurate vehicle localization paper

- [ITSC'18] Monocular vehicle self-localization method based on compact semantic map paper

- [IEEE Sensors Journal'19] Self-localization based on visual lane marking maps: An accurate low-cost approach for autonomous driving paper

- [ICCR'19] Coarse-to-fine visual localization using semantic compact map paper

- [Sensors'20] Monocular localization with vector hd map (mlvhm): A low-cost method for commercial IVs paper

- [IV'20] High precision vehicle localization based on tightly-coupled visual odometry and vector hd map paper

- [IROS'20] Monocular localization in hd maps by combining semantic segmentation and distance transform paper

- [IROS'21] Coarse-to-fine semantic localization with hd map for autonomous driving in structural scenes paper

- [ICRA'21] Visual Semantic Localization based on HD Map for Autonomous Vehicles in Urban Scenarios paper

- [JAS'22] A lane-level road marking map using a monocular camera paper

- [TITS'22] Tm3loc: Tightly-coupled monocular map matching for high precision vehicle localization paper

- [CVPR'23] OrienterNet: Visual Localization in 2D Public Maps with Neural Matching paper

- [arxiv] BEV-Locator: An end-to-end visual semantic localization network using multi-view images paper

- [arxiv] Egovm: Achieving precise ego-localization using lightweight vectorized maps paper

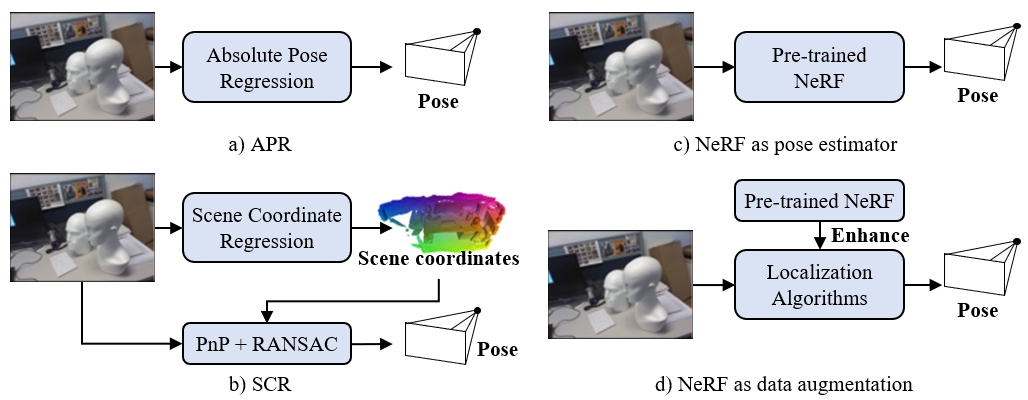

Some recent works implicitly encode the scene map into neural networks, so that the trained network can directly regress the camera pose of an image (Absolute Pose Regression, APR), estimate the 3D scene coordinates of each pixel (Scene Coordinate Regression, SCR), or render the geometry and appearance of the scene (Neural Radiance Field, NeRF). We call such scene map-related information stored in a trained neural network a Learned Implicit Map.
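For APR, the network is trained to output a position and an orientation directly. Below is a minimal sketch of a PoseNet-style objective (position error plus a weighted quaternion error), assuming unit-norm ground-truth quaternions in (w, x, y, z) order; `beta` is a scene-dependent weight that balances meters against quaternion units, and this simple form ignores the fact that q and -q describe the same rotation.

```python
import numpy as np


def pose_regression_loss(t_pred, q_pred, t_gt, q_gt, beta=1.0):
    """Weighted pose loss: ||t_pred - t_gt|| + beta * ||q_pred / |q_pred| - q_gt||."""
    q_pred = q_pred / np.linalg.norm(q_pred)  # a regressed quaternion is not unit length
    pos_err = np.linalg.norm(t_pred - t_gt)   # translation error in map units
    rot_err = np.linalg.norm(q_pred - q_gt)   # Euclidean distance between quaternions
    return pos_err + beta * rot_err


t_gt = np.array([1.0, 2.0, 0.0])
q_gt = np.array([1.0, 0.0, 0.0, 0.0])  # identity rotation
# The prediction has a 0.3 m position error and an unnormalized but correct orientation.
loss = pose_regression_loss(np.array([1.0, 2.0, 0.3]), np.array([2.0, 0.0, 0.0, 0.0]),
                            t_gt, q_gt, beta=10.0)
```

Because the predicted quaternion is normalized first, only the 0.3 m position error contributes here; a larger `beta` puts more emphasis on orientation accuracy.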

- [ICCV'15] Posenet: A convolutional network for real-time 6-dof camera relocalization paper code

- [ICPR'21] Do we really need scene-specific pose encoders? paper

- [IROS'17] Deep regression for monocular camera-based 6-dof global localization in outdoor environments paper

- [ICCVW'17] Image-based localization using hourglass networks paper

- [ICRA'16] Modelling uncertainty in deep learning for camera relocalization paper code

- [ICCV'17] Image-based localization using lstms for structured feature correlation paper

- [CVPR'17] Geometric loss functions for camera pose regression with deep learning paper code

- [CVPR'18] Geometry-aware learning of maps for camera localization paper code

- [BMVC'18] Improved visual relocalization by discovering anchor points paper

- [ICCV'19] Local supports global: Deep camera relocalization with sequence enhancement paper

- [AAAI'20] Atloc: Attention guided camera localization paper code

- [ICASSP'21] CaTiLoc: Camera image transformer for indoor localization paper

- [CVPRW'20] Extending absolute pose regression to multiple scenes paper

- [ICCV'21] Learning multi-scene absolute pose regression with transformers paper code

- [TPAMI'23] Coarse-to-fine multi-scene pose regression with transformers paper code

- [CVPR'13] Scene coordinate regression forests for camera relocalization in rgb-d images paper

- [CVPR'14] Multi-output learning for camera relocalization paper

- [CVPR'15] Exploiting uncertainty in regression forests for accurate camera relocalization paper

- [CVPR'17] DSAC - differentiable ransac for camera localization paper code

- [CVPR'18] Learning less is more - 6d camera localization via 3d surface regression paper code

- [ICCV'19] Expert sample consensus applied to camera re-localization paper code

- [CVPR'20] Hierarchical scene coordinate classification and regression for visual localization paper

- [CVPR'21] VS-Net: Voting with segmentation for visual localization paper code

- [TPAMI'22] Visual camera re-localization from rgb and rgb-d images using dsac paper

- [3DV'22] Visual localization via few-shot scene region classification paper code

- [arxiv] Large scale joint semantic re-localisation and scene understanding via globally unique instance coordinate regression paper

- [arxiv] Hscnet++: Hierarchical scene coordinate classification and regression for visual localization with transformer paper

- [ICCV'19] SANet: Scene agnostic network for camera localization paper

- [CVPR'21] Learning camera localization via dense scene matching paper code

- [CVPR'23] Neumap: Neural coordinate mapping by auto-transdecoder for camera localization paper code

- [arxiv] D2S: Representing local descriptors and global scene coordinates for camera relocalization paper code

- [arxiv] SACReg: Scene-agnostic coordinate regression for visual localization paper

- [ICCV'21] GNeRF: Gan-based neural radiance field without posed camera paper code

- [CVPR'23] NoPe-NeRF: Optimising neural radiance field with no pose prior paper code

- [ICRA'23] LATITUDE: Robotic global localization with truncated dynamic low-pass filter in city-scale nerf paper code

- [ICRA'23] LocNeRF: Monte carlo localization using neural radiance fields paper

- [arxiv] NeRF-Loc: Visual localization with conditional neural radiance field paper code

- [3DV'21] Direct-PoseNet: Absolute pose regression with photometric consistency paper code

- [ECCV'22] DFNet: Enhance absolute pose regression with direct feature matching paper code

- [CoRL'22] LENS: Localization enhanced by nerf synthesis paper

If you found this repository and survey helpful, please consider citing our related survey:

@article{miao2023MRL,

title={A Survey on Monocular Re-Localization: From the Perspective of Scene Map Representation},

author={Jinyu Miao and Kun Jiang and Tuopu Wen and Yunlong Wang and Peijing Jia and Benny Wijaya and Xuhe Zhao and Qian Cheng and Zhongyang Xiao and Jin Huang and Zhihua Zhong and Diange Yang},

journal={arXiv preprint arXiv:2311.15643},

year={2023}

}