Kirill Mazur · Gwangbin Bae · Andrew J. Davison

Paper | Video | Project Page

git clone https://github.com/makezur/super_primitive.git --recursive

cd super_primitive

Set up the environment by running our installation script:

source install.sh

Note that the provided software was tested on Ubuntu 20.04 with a single NVIDIA RTX 4090 GPU.

To download the required checkpoints and datasets, please run our download script:

bash ./download.sh

The script downloads the pre-trained checkpoints for both the SAM and the surface normal estimation networks. A Replica scene and the TUM fr1 sequences are also downloaded and unpacked automatically.

N.B. If your system CUDA version does not match, you may need to change the pytorch-cuda version in the installation script.

Run the following script for a minimal example of our SuperPrimitive-based joint pose and geometry estimation. Here, we estimate the relative pose between two frames and the depth of the source frame.

python sfm_gui_runner.py --config config/replica_sfm_example.yaml

Run our MonoVO on a TUM sequence by executing the following command:

python sfm_gui_runner.py --config config/tum/odom_desk.yaml --odom

We provide a tool to convert estimated trajectories into the TUM format.
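The TUM RGB-D benchmark stores one pose per line as `timestamp tx ty tz qx qy qz qw`: a translation plus a unit quaternion describing the camera pose in the world frame. To illustrate what such a conversion involves, here is a minimal sketch that writes 4x4 pose matrices in this layout. It is not the repository's `convert_traj_to_tum.py`. The input convention (camera-to-world matrices given as nested lists, timestamps in seconds) and the helper names `rotation_to_quaternion`, `format_tum_line` and `write_tum` are hypothetical.

```python
import io
import math


def rotation_to_quaternion(R):
    """Convert a 3x3 rotation matrix (nested lists, row-major) to (qx, qy, qz, qw)."""
    trace = R[0][0] + R[1][1] + R[2][2]
    if trace > 0.0:
        s = 2.0 * math.sqrt(trace + 1.0)  # s = 4 * qw
        qw = 0.25 * s
        qx = (R[2][1] - R[1][2]) / s
        qy = (R[0][2] - R[2][0]) / s
        qz = (R[1][0] - R[0][1]) / s
    elif R[0][0] > R[1][1] and R[0][0] > R[2][2]:
        s = 2.0 * math.sqrt(1.0 + R[0][0] - R[1][1] - R[2][2])  # s = 4 * qx
        qw = (R[2][1] - R[1][2]) / s
        qx = 0.25 * s
        qy = (R[0][1] + R[1][0]) / s
        qz = (R[0][2] + R[2][0]) / s
    elif R[1][1] > R[2][2]:
        s = 2.0 * math.sqrt(1.0 + R[1][1] - R[0][0] - R[2][2])  # s = 4 * qy
        qw = (R[0][2] - R[2][0]) / s
        qx = (R[0][1] + R[1][0]) / s
        qy = 0.25 * s
        qz = (R[1][2] + R[2][1]) / s
    else:
        s = 2.0 * math.sqrt(1.0 + R[2][2] - R[0][0] - R[1][1])  # s = 4 * qz
        qw = (R[1][0] - R[0][1]) / s
        qx = (R[0][2] + R[2][0]) / s
        qy = (R[1][2] + R[2][1]) / s
        qz = 0.25 * s
    # q and -q encode the same rotation; keep qw >= 0 for a canonical output.
    if qw < 0.0:
        qx, qy, qz, qw = -qx, -qy, -qz, -qw
    return qx, qy, qz, qw


def format_tum_line(timestamp, T):
    """Format one pose as 'timestamp tx ty tz qx qy qz qw'.

    Assumption: T is a 4x4 camera-to-world matrix given as nested lists.
    """
    R = [row[:3] for row in T[:3]]
    tx, ty, tz = T[0][3], T[1][3], T[2][3]
    values = (tx, ty, tz) + rotation_to_quaternion(R)
    return f"{timestamp:.6f} " + " ".join(f"{v:.6f}" for v in values)


def write_tum(stream, timestamps, poses):
    """Write one TUM-format line per (timestamp, pose) pair to a text stream."""
    for ts, T in zip(timestamps, poses):
        stream.write(format_tum_line(ts, T) + "\n")


if __name__ == "__main__":
    # Usage example: an identity pose, then the same pose shifted 1 m along x.
    identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
    shifted = [[1, 0, 0, 1], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
    buf = io.StringIO()
    write_tum(buf, [0.0, 0.033], [identity, shifted])
    print(buf.getvalue(), end="")
```

The branch on the largest diagonal term (Shepperd's method) avoids dividing by a near-zero value when the rotation angle is close to 180 degrees.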

To convert the estimated trajectory and then evaluate it against the ground truth, run:

python convert_traj_to_tum.py --root results/desk_undistort_fin_TIMESTAMP

cd results/desk_undistort_fin_TIMESTAMP

evo_ape tum converted_gt_tum_traj.txt converted_tum_traj.txt -as --plot --plot_mode xy --save_results ./res.zip

To download VOID, please follow the official instructions.

Please run this script to reproduce the quantitative evaluation of the SuperPrimitive-based depth completion described in the paper:

python evaluate_void.py --dataset PATH_TO_VOID_DATASET

Our code draws heavily on the DepthCov codebase, and we would like to give special thanks to its author.

We additionally thank the authors of the following codebases that made our project possible:

- Bilateral Normal Integration

- Estimating and Exploiting the Aleatoric Uncertainty in Surface Normal Estimation

- Segment Anything

If you find our code or work useful, please consider citing our publication:

@inproceedings{Mazur:etal:CVPR2024,

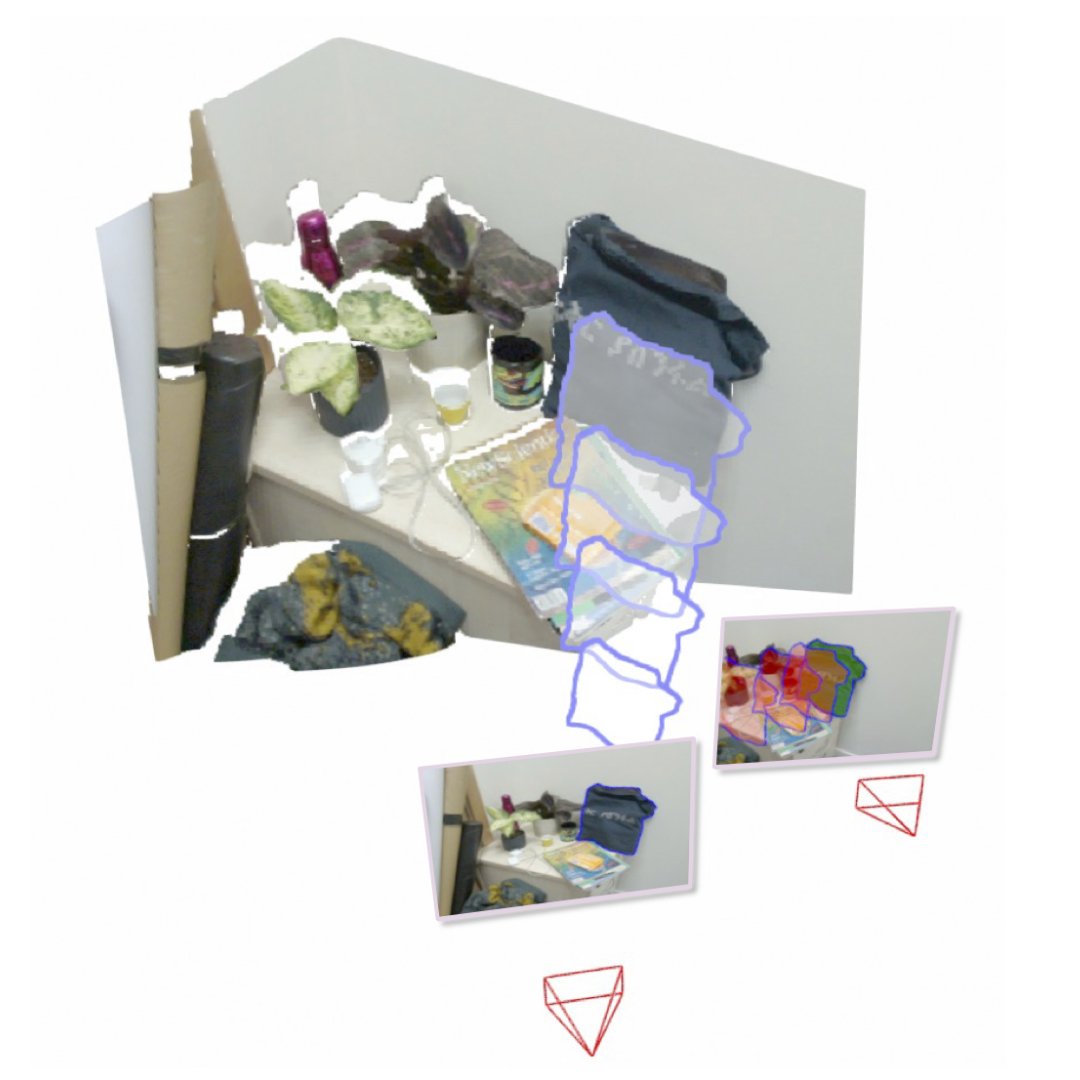

title={{SuperPrimitive}: Scene Reconstruction at a Primitive Level},

author={Kirill Mazur and Gwangbin Bae and Andrew Davison},

booktitle={IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2024},

}