My research focuses on visual SLAM. This repository summarizes the topics I am interested in:

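- ORB-SLAM2: source code and improvements;

- Line-SLAM: papers and source code;

- Classic SLAM algorithms: classic, high-quality open-source projects;

- Research tools: tools used in papers and experiments;

- Notable researchers and labs: researchers and labs whose work I follow;

- Learning materials: introductory resources for learning SLAM;

- SLAM and 3D reconstruction resources: commonly used GitHub repositories and how to find papers;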

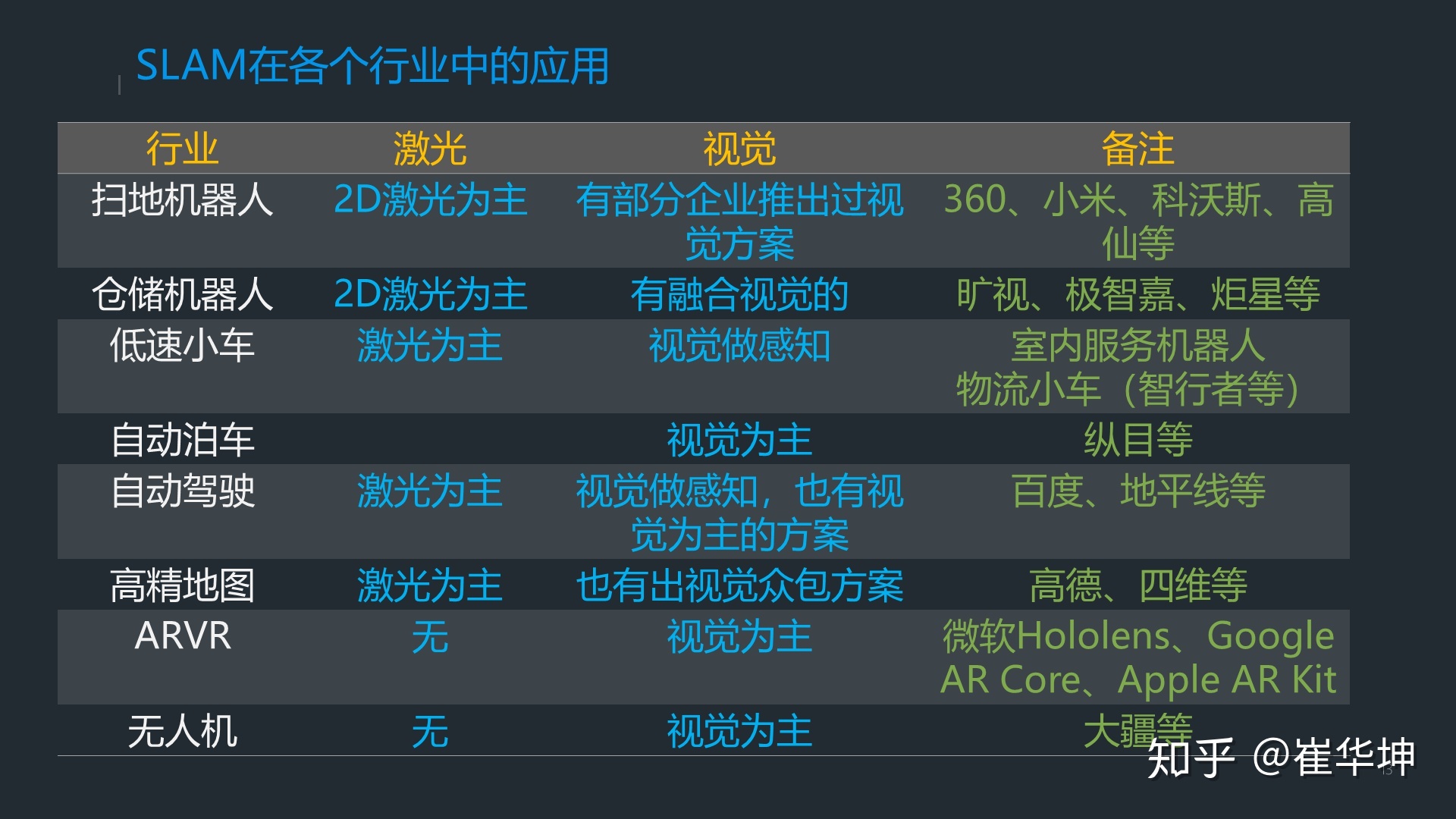

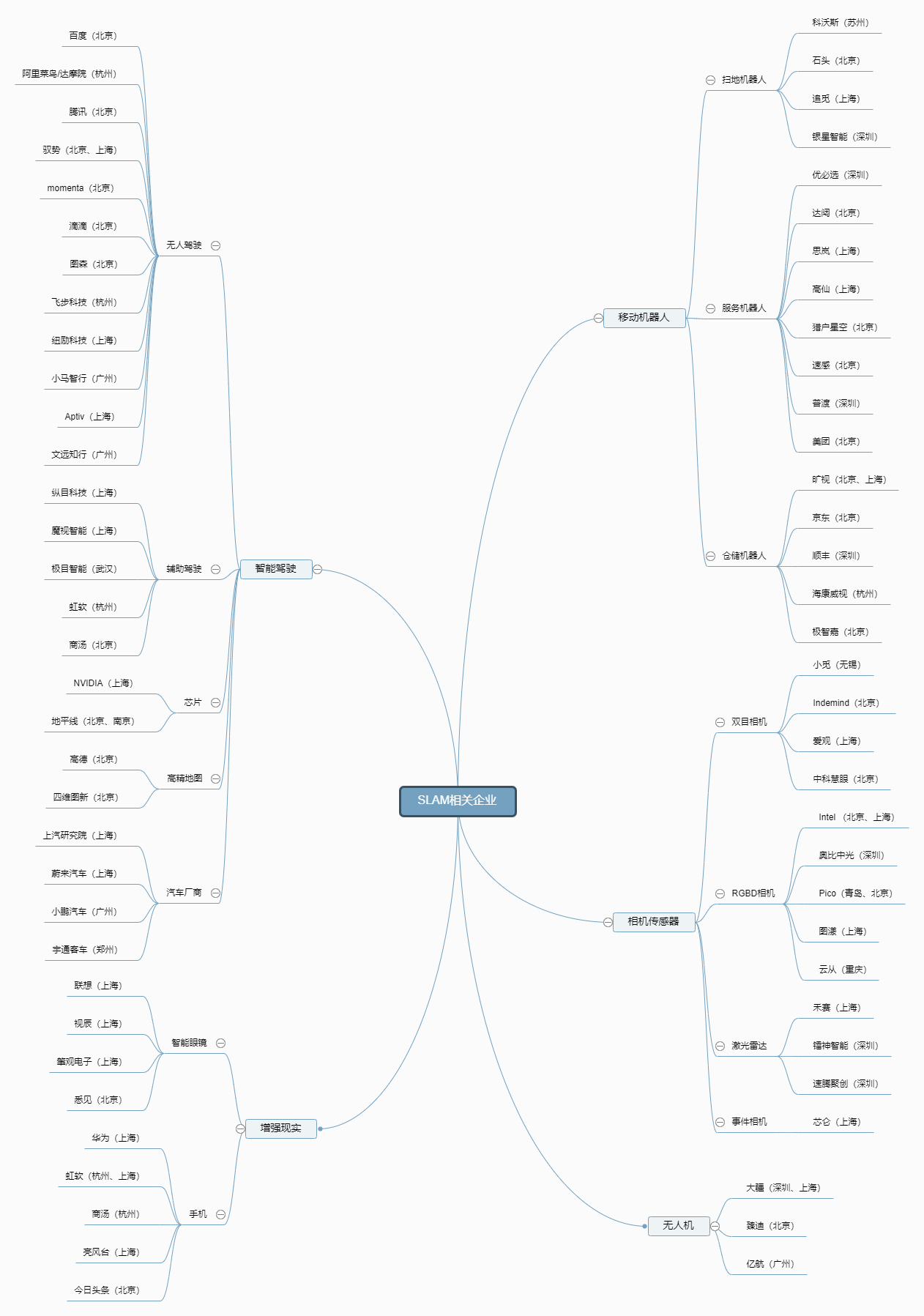

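- SLAM applications and companies: applications of SLAM and companies hiring in this area (the list may be incomplete);

- Job interviews: experience preparing for SLAM algorithm interviews;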

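- 1. Adding point and line features

- 2. Adding an IMU

- 3. Adding a wheel odometer

- 4. Improvements using direct methods

- 5. Adding an odometer and a gyroscope

- 6. Semantic mapping with ORB-SLAM2 + object detection/segmentation

- 7. Adding a fisheye camera model

- 8. Dynamic environments

- 9. Other modifications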

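- 4.1 Julian Straub (MIT, Facebook VR lab)

- 4.2 Duncan Frost, University of Oxford (PTAM group)

- 4.3 Yoshikatsu NAKAJIMA (中島 由勝)

- 4.4 Alejo Concha (Oculus VR, Zurich)

- 4.5 Mobile Robots Laboratory, Poznan University of Technology

- 4.6 Xiaohu Lu

- 4.7 Yasir Latif, Australian Centre for Robotic Vision

- 4.8 Rubén Gómez-Ojeda, PhD student at the University of Málaga, Spain

- 4.9 Nicolas Antigny, PhD at the French Institute of Science and Technology for Transport, Development and Networks (IFSTTAR)

- 4.10 Alexander Vakhitov, Samsung AI Lab (Moscow)

- 4.11 Prof. Marc Pollefeys, Computer Vision and Geometry Group, ETH Zurich

- 4.12 Robotics, Perception and AI Laboratory, The Chinese University of Hong Kong

- 4.13 Johannes L. Schönberger, senior engineer at Microsoft, ETH Zurich

- 4.14 Srikumar Ramalingam, School of Computing, University of Utah

- 4.15 Jörg Stückler, Max Planck Institute for Intelligent Systems, Germany

- 4.16 Aerospace Controls Laboratory, MIT

- 4.17 Long Qian, PhD, Department of Computer Science, Johns Hopkins University

- 4.18 Institute for Robotics and Intelligent Machines, Georgia Tech

- 4.19 Other labs and researchers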

6. Learning materials

9. Job interview experience

paper: ORB-SLAM: A Versatile and Accurate Monocular SLAM System

code: ORB-SLAM: https://github.com/raulmur/ORB_SLAM (Monocular, ROS integrated)

paper: ORB-SLAM2: an Open-Source SLAM System for Monocular, Stereo and RGB-D Cameras

code: ORB-SLAM2: https://github.com/raulmur/ORB_SLAM2 (Monocular, Stereo, RGB-D, ROS optional)

- code: https://github.com/maxee1900/RGBD-PL-SLAM (points and lines)

- paper: PL-SLAM: a Stereo SLAM System through the Combination of Points and Line Segments

- code: https://github.com/maxee1900/RGBD-PL-SLAM (RGB-D SLAM with point and line features, developed based on the well-known ORB_SLAM2)

- code: https://github.com/jingpang/LearnVIORB (ROS)

code: https://github.com/ZuoJiaxing/Learn-ORB-VIO-Stereo-Mono (non-ROS)

- code: https://github.com/gaoxiang12/ygz-stereo-inertial (uses LK optical flow as the front end and sliding-window bundle adjustment as the back end)

- paper: [Gyro-Aided Camera-Odometer Online Calibration and Localization](https://ieeexplore.ieee.org/document/7963501)

code: https://github.com/image-amazing/Wheel_Encoder_aided_vo

- paper: Zheng F, Liu Y H. Visual-Odometric Localization and Mapping for Ground Vehicles Using SE(2)-XYZ Constraints[C]//2019 International Conference on Robotics and Automation (ICRA). IEEE, 2019: 3556-3562.

- paper: Zheng F, Tang H, Liu Y H. Odometry-Vision-Based Ground Vehicle Motion Estimation with SE(2)-Constrained SE(3) Poses[J]. IEEE Transactions on Cybernetics, 2018, 49(7): 2652-2663.

- code: https://github.com/gaoxiang12/ORB-YGZ-SLAM (uses the direct tracking from SVO to accelerate feature matching in ORB-SLAM2)

- https://github.com/floatlazer/semantic_slam

- https://github.com/qixuxiang/orb-slam2_with_semantic_labelling

- https://github.com/Ewenwan/ORB_SLAM2_SSD_Semantic

- paper: He Y, Zhao J, Guo Y, et al. PL-VIO: Tightly-Coupled Monocular Visual–Inertial Odometry Using Point and Line Features[J]. Sensors, 2018, 18(4): 1159.

code: https://github.com/HeYijia/PL-VIO

- paper: Gomez-Ojeda R, Briales J, Gonzalez-Jimenez J. PL-SVO: Semi-direct Monocular Visual Odometry by combining points and line segments[C]//IEEE/RSJ International Conference on Intelligent Robots & Systems. IEEE, 2016.

- paper: Gomez-Ojeda R, Gonzalez-Jimenez J. Robust stereo visual odometry through a probabilistic combination of points and line segments[C]//2016 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2016.

- paper: PL-SLAM: a Stereo SLAM System through the Combination of Points and Line Segments

- Paper: Klein G, Murray D. Parallel tracking and mapping for small AR workspaces[C]//Mixed and Augmented Reality, 2007. ISMAR 2007. 6th IEEE and ACM International Symposium on. IEEE, 2007: 225-234.

- Code: https://github.com/Oxford-PTAM/PTAM-GPL

- Project page: http://www.robots.ox.ac.uk/~gk/PTAM/

- The author's other research: http://www.robots.ox.ac.uk/~gk/publications.html

- Paper: Taihú Pire, Thomas Fischer, Gastón Castro, Pablo De Cristóforis, Javier Civera and Julio Jacobo Berlles. S-PTAM: Stereo Parallel Tracking and Mapping. Robotics and Autonomous Systems, 2017.

- Code: https://github.com/lrse/sptam

- Other papers by the authors: Castro G, Nitsche M A, Pire T, et al. Efficient on-board Stereo SLAM through constrained-covisibility strategies[J]. Robotics and Autonomous Systems, 2019.

- Paper: Davison A J, Reid I D, Molton N D, et al. MonoSLAM: Real-time single camera SLAM[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2007, 29(6): 1052-1067.

- Code: https://github.com/hanmekim/SceneLib2

- Paper: Mur-Artal R, Tardós J D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras[J]. IEEE Transactions on Robotics, 2017, 33(5): 1255-1262.

- Code: https://github.com/raulmur/ORB_SLAM2

- Other papers by the authors:

- Monocular semi-dense mapping: Mur-Artal R, Tardós J D. Probabilistic Semi-Dense Mapping from Highly Accurate Feature-Based Monocular SLAM[C]//Robotics: Science and Systems. 2015.

- VIORB: Mur-Artal R, Tardós J D. Visual-inertial monocular SLAM with map reuse[J]. IEEE Robotics and Automation Letters, 2017, 2(2): 796-803.

- Multi-map: Elvira R, Tardós J D, Montiel J M M. ORBSLAM-Atlas: a robust and accurate multi-map system[J]. arXiv preprint arXiv:1908.11585, 2019.

Items 5, 6, 7 and 8 below are all from the TUM Computer Vision Group. Official page: https://vision.in.tum.de/research/vslam/dso

- Paper: Engel J, Koltun V, Cremers D. Direct sparse odometry[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017, 40(3): 611-625.

- Code: https://github.com/JakobEngel/dso

- Stereo DSO: Wang R, Schwörer M, Cremers D. Stereo DSO: Large-scale direct sparse visual odometry with stereo cameras[C]//Proceedings of the IEEE International Conference on Computer Vision. 2017: 3903-3911.

- VI-DSO: Von Stumberg L, Usenko V, Cremers D. Direct sparse visual-inertial odometry using dynamic marginalization[C]//2018 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2018: 2510-2517.

- Gao Xiang's work adding loop closure to DSO

- Paper: Gao X, Wang R, Demmel N, et al. LDSO: Direct sparse odometry with loop closure[C]//2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018: 2198-2204.

- Code: https://github.com/tum-vision/LDSO

- Paper: Engel J, Schöps T, Cremers D. LSD-SLAM: Large-scale direct monocular SLAM[C]//European conference on computer vision. Springer, Cham, 2014: 834-849.

- Code: https://github.com/tum-vision/lsd_slam

- Paper: Kerl C, Sturm J, Cremers D. Dense visual SLAM for RGB-D cameras[C]//2013 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2013: 2100-2106.

- Code 1: https://github.com/tum-vision/dvo_slam

- Code 2: https://github.com/tum-vision/dvo

- Other papers:

- Kerl C, Sturm J, Cremers D. Robust odometry estimation for RGB-D cameras[C]//2013 IEEE international conference on robotics and automation. IEEE, 2013: 3748-3754.

- Steinbrücker F, Sturm J, Cremers D. Real-time visual odometry from dense RGB-D images[C]//2011 IEEE international conference on computer vision workshops (ICCV Workshops). IEEE, 2011: 719-722.

- Robotics and Perception Group, University of Zurich

- Paper: Forster C, Pizzoli M, Scaramuzza D. SVO: Fast semi-direct monocular visual odometry[C]//2014 IEEE international conference on robotics and automation (ICRA). IEEE, 2014: 15-22.

- Code: https://github.com/uzh-rpg/rpg_svo

- Forster C, Zhang Z, Gassner M, et al. SVO: Semidirect visual odometry for monocular and multicamera systems[J]. IEEE Transactions on Robotics, 2016, 33(2): 249-265.

- Paper: Sumikura S, Shibuya M, Sakurada K. OpenVSLAM: A Versatile Visual SLAM Framework[C]//Proceedings of the 27th ACM International Conference on Multimedia. 2019: 2292-2295.

- Code: https://github.com/xdspacelab/openvslam; Documentation

- Paper: Sun K, Mohta K, Pfrommer B, et al. Robust stereo visual inertial odometry for fast autonomous flight[J]. IEEE Robotics and Automation Letters, 2018, 3(2): 965-972.

- Code: https://github.com/KumarRobotics/msckf_vio; Video

- Paper: Bloesch M, Omari S, Hutter M, et al. Robust visual inertial odometry using a direct EKF-based approach[C]//2015 IEEE/RSJ international conference on intelligent robots and systems (IROS). IEEE, 2015: 298-304.

- Code: https://github.com/ethz-asl/rovio; Video

- Paper: Huai Z, Huang G. Robocentric visual-inertial odometry[C]//2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018: 6319-6326.

- Code: https://github.com/rpng/R-VIO; Video

- Paper: Leutenegger S, Lynen S, Bosse M, et al. Keyframe-based visual–inertial odometry using nonlinear optimization[J]. The International Journal of Robotics Research, 2015, 34(3): 314-334.

- Code: https://github.com/ethz-asl/okvis

- Paper: Mur-Artal R, Tardós J D. Visual-inertial monocular SLAM with map reuse[J]. IEEE Robotics and Automation Letters, 2017, 2(2): 796-803.

- Code: https://github.com/jingpang/LearnVIORB (VIORB itself was never open-sourced; this is a reimplementation by Wang Jing)

- Paper: Qin T, Li P, Shen S. VINS-Mono: A robust and versatile monocular visual-inertial state estimator[J]. IEEE Transactions on Robotics, 2018, 34(4): 1004-1020.

- Code: https://github.com/HKUST-Aerial-Robotics/VINS-Mono

- Stereo version, VINS-Fusion: https://github.com/HKUST-Aerial-Robotics/VINS-Fusion

- Mobile version, VINS-Mobile: https://github.com/HKUST-Aerial-Robotics/VINS-Mobile

- Paper: Shan Z, Li R, Schwertfeger S. RGBD-Inertial Trajectory Estimation and Mapping for Ground Robots[J]. Sensors, 2019, 19(10): 2251.

- Code: https://github.com/STAR-Center/VINS-RGBD; Video

- Paper: Geneva P, Eckenhoff K, Lee W, et al. OpenVINS: A research platform for visual-inertial estimation[C]//IROS 2019 Workshop on Visual-Inertial Navigation: Challenges and Applications, Macau, China. IROS 2019.

- Code: https://github.com/rpng/open_vins

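- Homepage, Google Scholar, GitHub

- 3D perception of man-made environments (Manhattan world), with many open-source implementations

- Google Scholar

- GitHub:

- Papers:

- PhD thesis: Long Range Monocular SLAM, 2017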

- Recovering Stable Scale in Monocular SLAM Using Object-Supplemented Bundle Adjustment[J]. IEEE Transactions on Robotics, 2018

- Direct Line Guidance Odometry[C]//2018 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2018

- Object-aware bundle adjustment for correcting monocular scale drift[C]//Robotics and Automation (ICRA), 2016 IEEE International Conference on. IEEE, 2016

- Deep-learning-based state estimation, semantic segmentation and object detection

- Homepage

- Papers:

- Yoshikatsu Nakajima and Hideo Saito, Efficient Object-oriented Semantic Mapping with Object Detector, IEEE Access, Vol.7, pp.3206-3213, 2019

- Yoshikatsu Nakajima and Hideo Saito, Simultaneous Object Segmentation and Recognition by Merging CNN Outputs from Uniformly Distributed Multiple Viewpoints, IEICE Transactions on Information and Systems, Vol.E101-D, No.5, pp.1308-1316, 2018

- Yoshikatsu Nakajima and Hideo Saito, Robust camera pose estimation by viewpoint classification using deep learning, Computational Visual Media (Springer), DOI 10.1007/s41095-016-0067-z, Vol.3, No.2, pp.189-198, 2017 [Best Paper Award]

- Many works on dense mapping based on planes extracted from RGB-D data

- Papers:

- Wietrzykowski J, Skrzypczyński P. A probabilistic framework for global localization with segmented planes[C]//Mobile Robots (ECMR), 2017 European Conference on. IEEE, 2017: 1-6.

- Wietrzykowski J, Skrzypczyński P. PlaneLoc: Probabilistic global localization in 3-D using local planar features[J]. Robotics and Autonomous Systems, 2019.

- Open-source code:

- PhD student at The Ohio State University

- GitHub: https://github.com/xiaohulugo

- Related author: Yahui Liu

- Vanishing point detection

- Lu X, Yao J, Li H, et al. 2-Line Exhaustive Searching for Real-Time Vanishing Point Estimation in Manhattan World[C]//Applications of Computer Vision (WACV), 2017 IEEE Winter Conference on. IEEE, 2017: 345-353.

- Code: https://github.com/xiaohulugo/VanishingPointDetection

- Point cloud segmentation and clustering

- Lu X, Yao J, Tu J, et al. PAIRWISE LINKAGE FOR POINT CLOUD SEGMENTATION[J]. ISPRS Annals of Photogrammetry, Remote Sensing & Spatial Information Sciences, 2016, 3(3).

- Code: https://github.com/xiaohulugo/PointCloudSegmentation

- 3D line detection in point clouds

- Lu X, Liu Y, Li K. Fast 3D Line Segment Detection From Unorganized Point Cloud[J]. arXiv preprint arXiv:1901.02532, 2019.

- Code: https://github.com/xiaohulugo/3DLineDetection

- Monocular SLAM in Manhattan-structured environments

- Li H, Yao J, Bazin J C, et al. A monocular SLAM system leveraging structural regularity in Manhattan world[C]//2018 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2018: 2518-2525.

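- Australian Centre for Robotic Vision

- Representative work: the SLAM past/present/future survey, Meaningful Maps, plane and object landmarks, RatSLAM, SeqSLAM

- Yasir Latif: homepage, Google Scholar

- Lachlan Nicholson: quadrics as landmarks for object SLAM; Google Scholar

- Michael Milford: RatSLAM, SeqSLAM; Google Scholar

- Papers: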

- Cadena C, Carlone L, Carrillo H, et al. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age[J]. IEEE Transactions on Robotics, 2016, 32(6): 1309-1332.

- Sünderhauf N, Pham T T, Latif Y, et al. Meaningful maps with object-oriented semantic mapping[C]//Intelligent Robots and Systems (IROS), 2017 IEEE/RSJ International Conference on. IEEE, 2017: 5079-5085.

- Hosseinzadeh M, Li K, Latif Y, et al. Real-Time Monocular Object-Model Aware Sparse SLAM[J]. arXiv preprint arXiv:1809.09149, 2018.

- Hosseinzadeh M, Latif Y, Pham T, et al. Towards Semantic SLAM: Points, Planes and Objects[J]. arXiv preprint arXiv:1804.09111, 2018. (Structure-aware SLAM based on quadrics and planes)

- Main research: monocular SLAM in urban environments, large-scale augmented reality based on scenes and known objects, sensor fusion

- ResearchGate, YouTube

- Point-line fusion

- Related papers:

- Vakhitov A, Lempitsky V. Learnable Line Segment Descriptor for Visual SLAM[J]. IEEE Access, 2019.

- Vakhitov A, Lempitsky V, Zheng Y. Stereo relative pose from line and point feature triplets[C]//Proceedings of the European Conference on Computer Vision (ECCV). 2018: 648-663.

- Pumarola A, Vakhitov A, Agudo A, et al. PL-SLAM: Real-time monocular visual SLAM with points and lines[C]//2017 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2017: 4503-4508.

- Vakhitov A, Funke J, Moreno-Noguer F. Accurate and linear time pose estimation from points and lines[C]//European Conference on Computer Vision. Springer, Cham, 2016: 583-599.

- 3D semantic reconstruction, multi-camera collaborative SLAM

- Lab homepage: http://www.cvg.ethz.ch/people/faculty/

- PhD students in the lab: http://people.inf.ethz.ch/liup/, http://people.inf.ethz.ch/sppablo/, http://people.inf.ethz.ch/schoepst/publications/, https://www.nsavinov.com/, http://people.inf.ethz.ch/cian/Publications/

- Lab homepage: http://www.ee.cuhk.edu.hk/~qhmeng/research.html

- Dynamic SLAM, medical service robots

- Main research: autonomous systems and control design for aircraft, spacecraft and ground vehicles

- Lab homepage

- Main papers:

- Mu B, Liu S Y, Paull L, et al. SLAM with objects using a nonparametric pose graph[C]//2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2016: 4602-4609.

- ICRA 2019: Robust Object-Based SLAM for High-Speed Autonomous Navigation

- ICRA 2019: Efficient Constellation-Based Map-Merging for Semantic SLAM

- IJRR 2018: Reliable Graphs for SLAM

- Resource-aware collaborative SLAM

- Main research: augmented reality, medical robotics

- Homepage, project

- Main papers:

- Long Qian, Anton Deguet, Zerui Wang, Yun-Hui Liu and Peter Kazanzides. Augmented Reality Assisted Instrument Insertion and Tool Manipulation for the First Assistant in Robotic Surgery. 2019 ICRA

- https://blog.csdn.net/qq_15698613/article/details/84871119

- https://blog.csdn.net/electech6/article/details/94590781?depth_1-utm_source=distribute.pc_relevant.none-task&utm_source=distribute.pc_relevant.none-task

- https://blog.csdn.net/carson2005/article/details/6601109?depth_1-utm_source=distribute.pc_relevant.none-task&utm_source=distribute.pc_relevant.none-task

- https://www.zhihu.com/question/332075078/answer/738194144

- https://www.zhihu.com/question/332075078/answer/734910130

- http://bbs.cvmart.net/topics/481/outstanding-Computer-Vision-Team

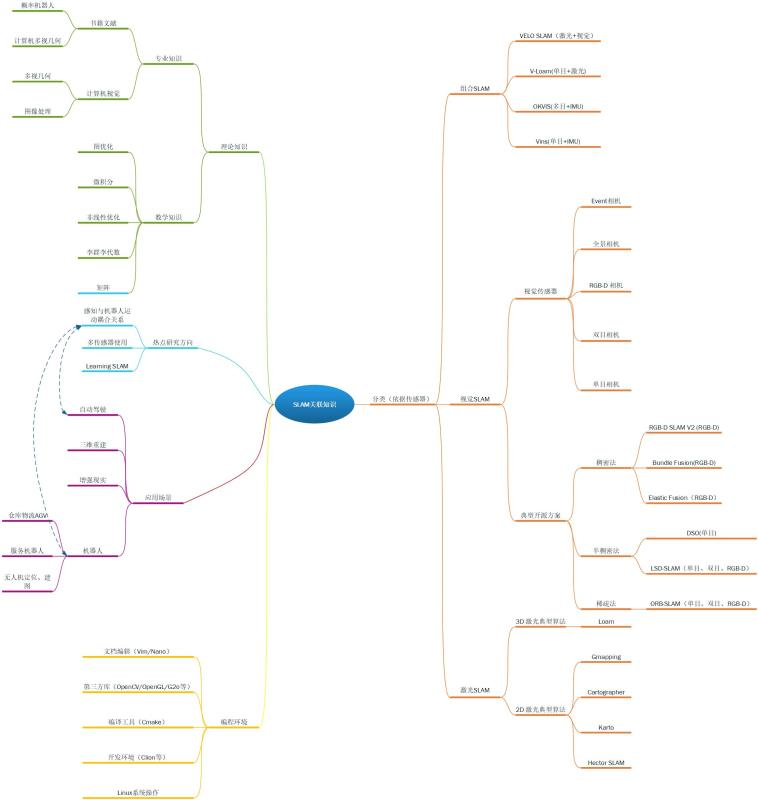

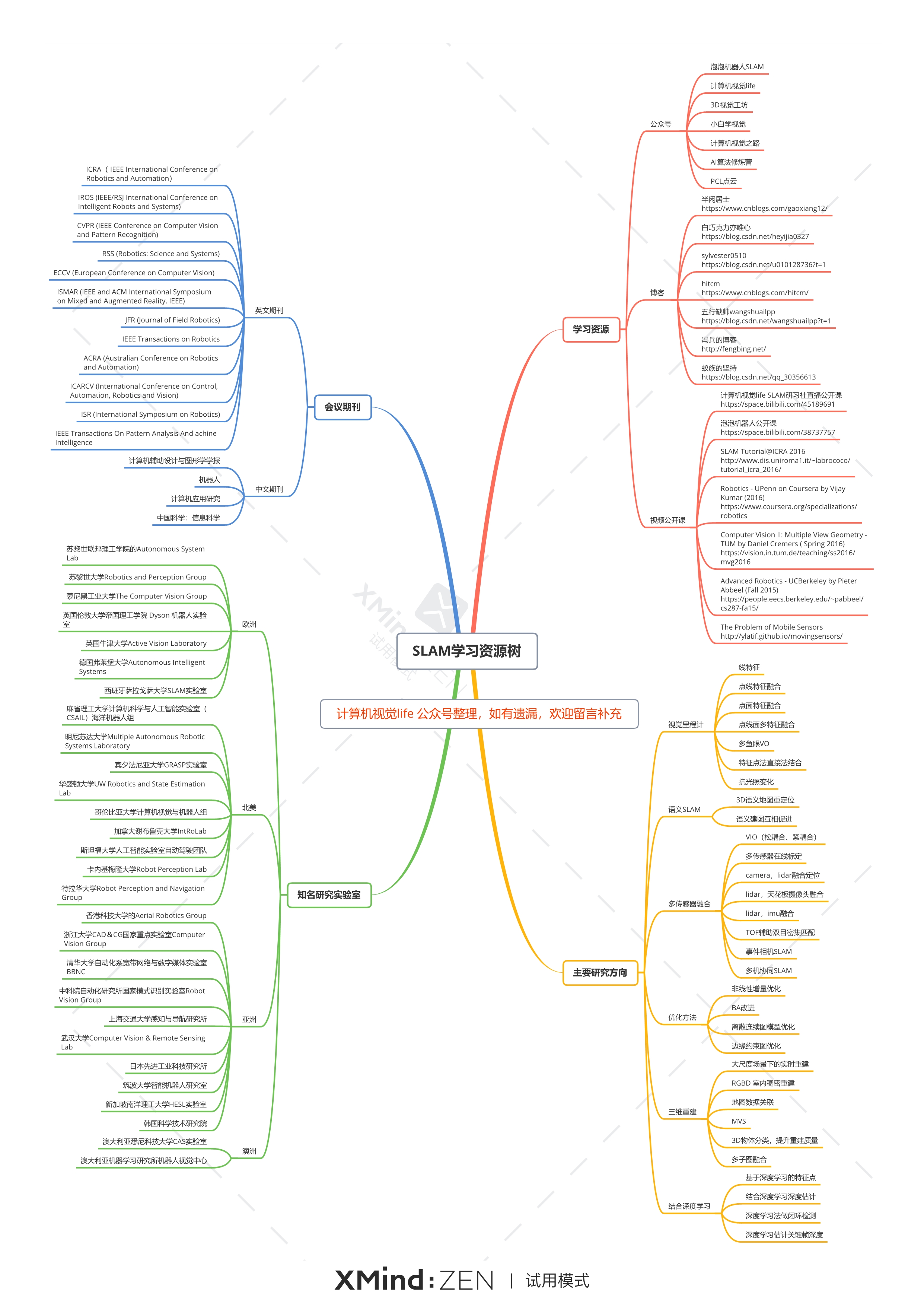

Figure compiled by Cao Xiujie (PhD, Beihang University)

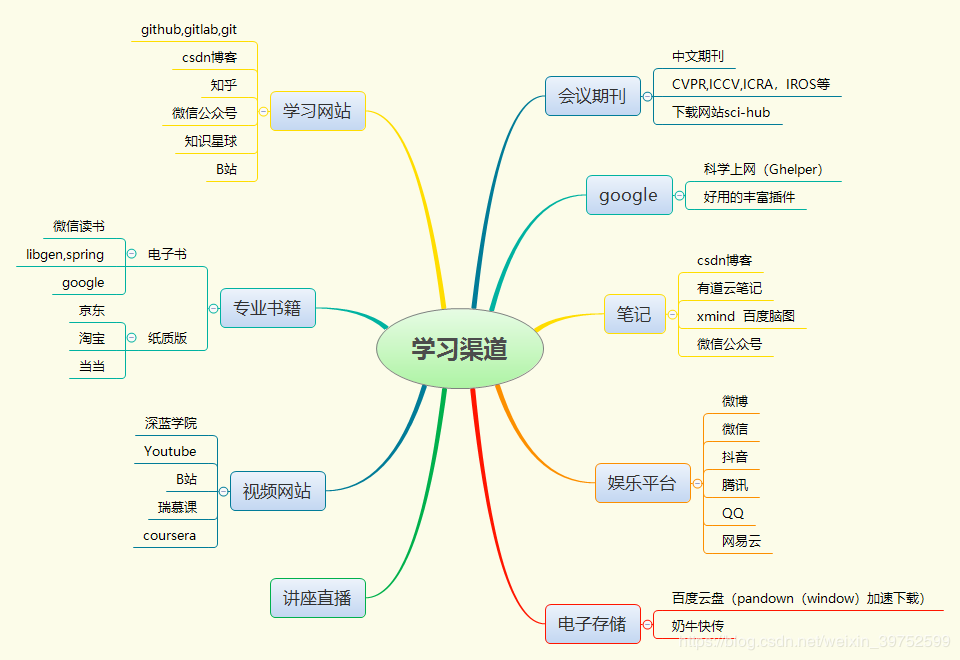

Learning websites

- Linux: https://linux.cn/

- VBird's Linux Private Kitchen (鸟哥的Linux私房菜): http://linux.vbird.org/

- Linux公社 (linuxidc): https://www.linuxidc.com/

Books

- *VBird's Linux Private Kitchen* (《鸟哥的Linux私房菜》)

Learning resources

- Shell online cheat sheet:

- Bash Guide for Beginners:

http://www.tldp.org/LDP/Bash-Beginners-Guide/html/

- Advanced Bash-Scripting Guide:

http://www.tldp.org/LDP/abs/html/

Learning websites

- OpenVim:

http://www.openvim.com/tutorial.html

- Vim Adventures:

http://vim-adventures.com/

- Detailed Vim tutorial:

https://zhuanlan.zhihu.com/p/68111471

- Interactive Vim tutorial:

http://www.openvim.com/

- The most detailed guide to the Vim editor:

https://www.shiyanlou.com/questions/2721/

- Concise Vim tutorial:

http://coolshell.cn/articles/5426.html

- Curated Vim learning resources:

https://github.com/vim-china/hello-vim

- CMake-tutorial:

- Learning-cmake:

- awesome-cmake (training material commonly used at companies):

GitHub: https://github.com/lishuwei0424/Git-Github-Notes

- Official Git documentation:

- Git-book:

https://git-scm.com/book/zh/v2

- Very detailed Git learning materials on GitHub:

https://github.com/xirong/my-git

- Think like Git:

http://think-like-a-git.net/

- Atlassian Git Tutorial:

https://www.atlassian.com/git/tutorials

- Git Workflows and Tutorials:

Original: https://www.atlassian.com/git/tutorials/comparing-workflows

Translation: https://github.com/xirong/my-git/blob/master/git-workflow-tutorial.md

- An introduction to version control tools (Git):

http://www.imooc.com/learn/208

- Liao Xuefeng's Git tutorial:

https://www.liaoxuefeng.com/wiki/896043488029600

- Getting started with GDB debugging:

- GDB Documentation:

http://blog.gqylpy.com/gqy/20285/

https://github.com/stevenlovegrove/Pangolin

- rpg_trajectory_evaluation (University of Zurich): https://github.com/uzh-rpg/rpg_trajectory_evaluation

- EVO:https://github.com/MichaelGrupp/evo
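Both EVO (for example its `evo_ape` command) and rpg_trajectory_evaluation report an absolute trajectory error between a ground-truth and an estimated trajectory. As a rough illustration of what that number measures, here is a minimal sketch assuming the two trajectories are already time-associated lists of 3D positions. The function name `ate_rmse` is hypothetical, and it only performs a translation (centroid) alignment, which is weaker than the rotation/scale (Umeyama-style) alignment these tools can apply.

```python
import math
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]


def ate_rmse(gt: Sequence[Point3], est: Sequence[Point3],
             align_centroids: bool = True) -> float:
    """Translational absolute trajectory error (RMSE), illustrative sketch.

    Assumes gt[i] and est[i] are positions at the same timestamp.
    With align_centroids=True the estimate is shifted so both centroids
    coincide (translation-only alignment, no rotation or scale).
    """
    if len(gt) != len(est) or len(gt) == 0:
        raise ValueError("trajectories must be non-empty and have equal length")
    n = len(gt)
    offset = [0.0, 0.0, 0.0]
    if align_centroids:
        for k in range(3):
            offset[k] = sum(p[k] for p in gt) / n - sum(p[k] for p in est) / n
    squared_sum = 0.0
    for g, e in zip(gt, est):
        # Squared Euclidean distance between ground truth and shifted estimate.
        squared_sum += sum((g[k] - (e[k] + offset[k])) ** 2 for k in range(3))
    return math.sqrt(squared_sum / n)


if __name__ == "__main__":
    ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    estimate = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (2.0, 0.0, 0.0)]
    print(f"ATE RMSE: {ate_rmse(ground_truth, estimate):.4f}")
```

For this toy data the script prints `ATE RMSE: 0.4714`. For real experiments, the tools listed above also handle timestamp association and full trajectory alignment, which this sketch deliberately leaves out.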

- C++ Primer

- C++ Primer Plus

- Effective C++

- The C++ Standard Library

- Video: Programming Practice (程序设计实习) https://space.bilibili.com/447579002/favlist?fid=758303502&ftype=create

xmind for computer science course

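1. C++ and C; 2. Linux; 3. Computer networks; 4. Computer organization; 5. Operating systems; 6. Data structures; 7. Compilers; 8. Software design patterns; 9. Databases; 10. Interview practice problems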

https://github.com/lishuwei0424/xmind_for_cs_basics

- Latest SLAM research updates, Recent_SLAM_Research: https://github.com/YiChenCityU/Recent_SLAM_Research

- SLAM training from the Intelligent Systems Lab, Northwestern Polytechnical University: https://github.com/zdzhaoyong/SummerCamp2018

- Video materials:

1. Laser SLAM:

https://pan.baidu.com/s/1PQT_YDeD5kVNACrXBX7QVQ

Extraction code: 8erw

2. Self-driving car engineer

https://pan.baidu.com/s/1XptGb7NMhu7YPAvVI5jGkw

Extraction code: deop

3. Computer vision fundamentals: image processing

https://pan.baidu.com/s/13OvyD5Ceyi2AnvRYgav4gA

Extraction code: acw4

4. SLAM_VIO study notes

https://pan.baidu.com/s/1mMaS-OE1oJvEQ-fpwaxJpQ

Extraction code: 4bel

5. PaoPao Robot open lectures

https://pan.baidu.com/s/1yA8yRZIpUTslkaK_5xTLXA

Extraction code: suz8

6. University of Pennsylvania SLAM open course

https://pan.baidu.com/s/1eoIrJVnb_xxGJQMCX6lv2Q

Extraction code: fhgq

7. MVG (Multiple View Geometry), TUM, 2014

https://pan.baidu.com/s/1HocTwHoJqfwckWJ5UbXQcw

Extraction code: wrbn

8. ROS, by Hu Chunxu

https://pan.baidu.com/s/1H9yhLhyhkZCIrWe04jFkNw

Extraction code: h9lj

- Books:

https://pan.baidu.com/s/14KdoJbPFKVFBNjJmqlVdpA

Extraction code: u040

https://github.com/lishuwei0424/Self_Reference_Book_for_VSALM

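- WeChat official accounts and Bilibili

- 泡泡机器人 (PaoPao Robot): paopaorobot_slam

- 计算机视觉life (Computer Vision Life)

- 极市平台 (CVMart)

- 将门创投 (Jiangmen Ventures)

- 3D视觉工坊 (3D Vision Workshop)

- My own learning channels

https://github.com/YiChenCityU/Recent_SLAM_Research (tracks the latest SLAM papers; updated very frequently)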

https://github.com/wuxiaolang/Visual_SLAM_Related_Research

https://github.com/tzutalin/awesome-visual-slam

https://github.com/OpenSLAM/awesome-SLAM-list

https://github.com/kanster/awesome-slam

https://github.com/youngguncho/awesome-slam-datasets

https://github.com/openMVG/awesome_3DReconstruction_list

https://github.com/Soietre/awesome_3DReconstruction_list

https://github.com/electech6/owesome-RGBD-SLAM

https://github.com/uzh-rpg/event-based_vision_resources

https://github.com/GeekLiB/Lee-SLAM-source

Baidu Mind Map: https://naotu.baidu.com/file/edf7d340203d40d9abc65e59596e0ad5?token=2eafad7bf90fd163

8.1 Figure compiled by Cui

8.2 Figure compiled by Liu Ge (WeChat official account: 计算机视觉life)

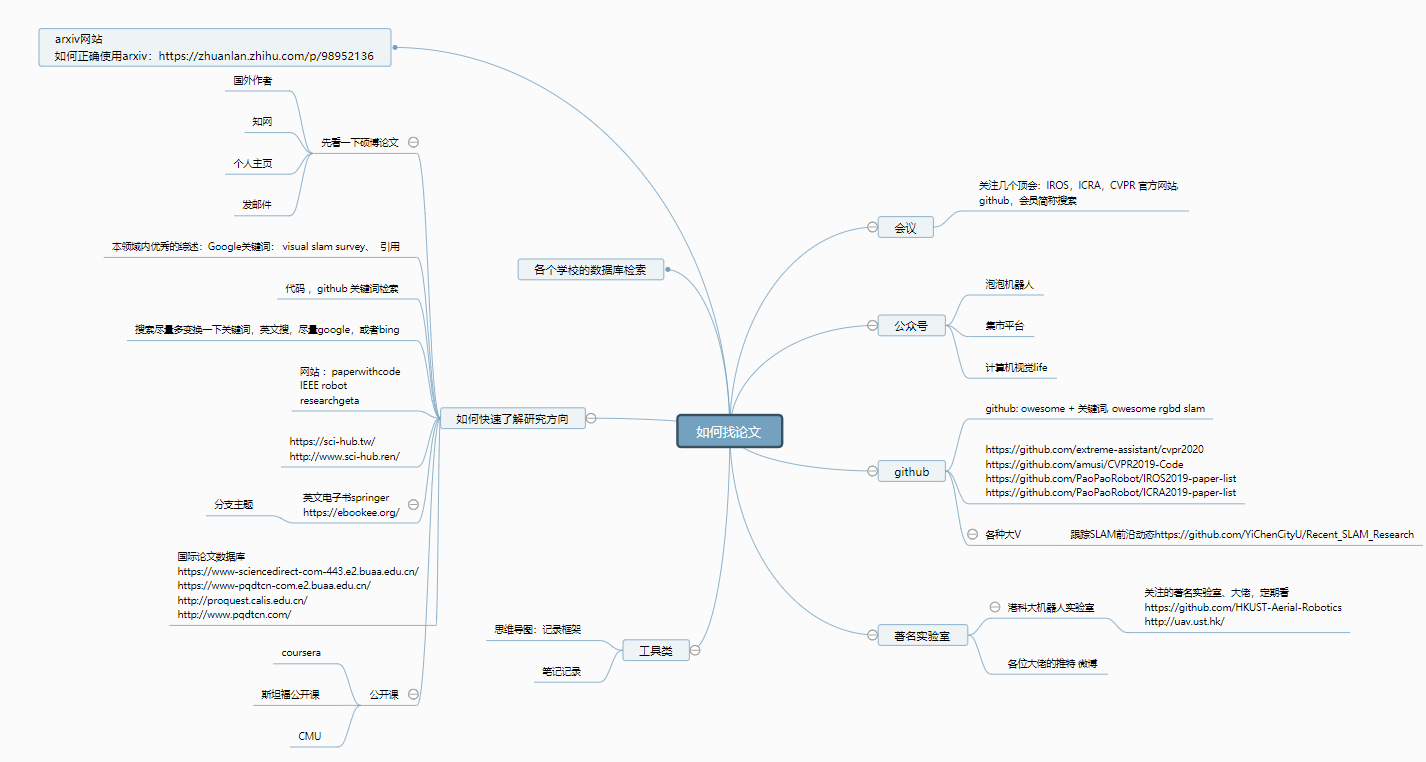

Baidu Mind Map: https://naotu.baidu.com/file/1b084951927b765ba0584743bfaa9ac4?token=7f67de64501c0884

- [Original by a PaoPao Robot member: SLAM job-hunting handbook] SLAM job-hunting experience post https://zhuanlan.zhihu.com/p/28565563

- SLAM-Jobs https://github.com/nebula-beta/SLAM-Jobs

- Continuously updated

1. https://github.com/wuxiaolang/Visual_SLAM_Related_Research